CHAPTER 7

ENCRYPTION

Stephen Cobb and Corinne LeFrançois

7.1 INTRODUCTION TO CRYPTOGRAPHY

7.2.2 More Cryptic Terminology

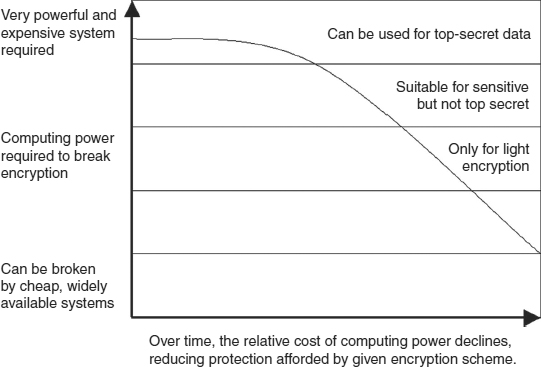

7.2.4 Brute Force Cryptanalysis

7.2.5 Monoalphabetical Substitution Ciphers

7.2.6 Polyalphabetical Substitution Ciphers

7.2.8 Early-Twentieth-Century Cryptanalysis

7.3.3 Transposition, Rotors, Products, and Blocks

7.3.4 Data Encryption Standard

7.4.4 Limitations and Combinations

7.5.1 Communications and Storage

7.5.2 Securing the Transport Layer

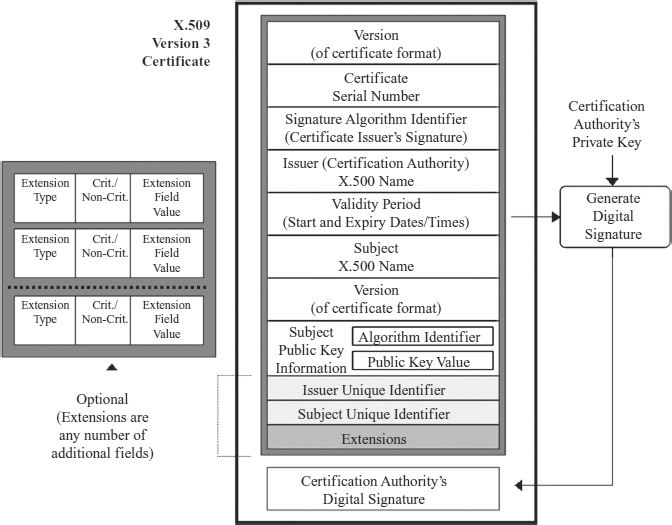

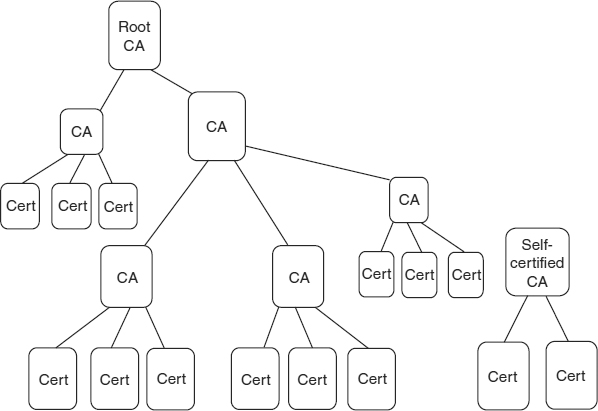

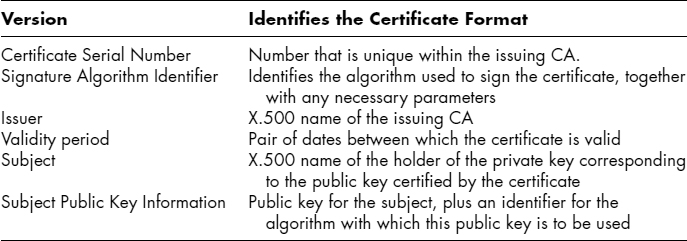

7.5.3 X.509v3 Certificate Format

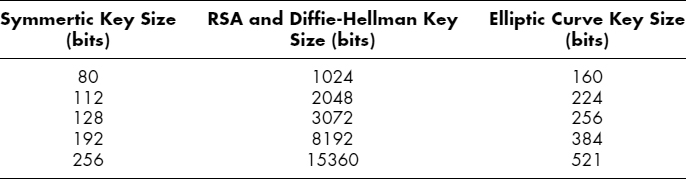

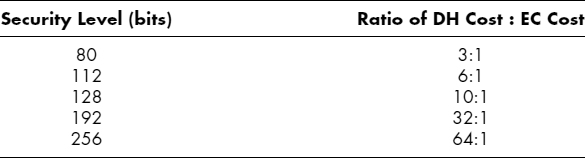

7.6.1 Elliptic Curve Cryptography

7.1 INTRODUCTION TO CRYPTOGRAPHY.

The ability to transform data so that they are accessible only to authorized persons is just one of the many valuable services performed by the technology commonly referred to as encryption. This technolosy has appeared in other chapters, but some readers may not be familiar with its principles and origins. The purpose of this chapter is to explain encryption technology in basic terms and to describe its application in areas such as file encryption, message scrambling, authentication, and secure Internet transactions. This is not a theoretical or scientific treatise on encryption, but a practical guide for those who need to employ encryption in a computer security context.

Organizations around the world increasingly rely on cryptography to communicate securely and to store information safely. Typically, the algorithms used by DoD organizations are employed and maintained for many years. For example, the Data Encryption Standard (DES) has been used in some form for over 20 years.1

This chapter is a brief overview of cryptography and its practical applications to the needs of normal business users, as distinct from the needs of high-security government agencies. A thorough examination of the mathematics that are the foundation of these topics is beyond the scope of this chapter, but we provide suggested readings for further study.

7.1.1 Terminology.

This list of basic terms will be helpful for readers as they continue through this chapter:

Algorithm—a finite list of well-defined instructions for accomplishing some task that, given an initial state, will terminate in a defined end state.

Cipher—the core algorithm used to encrypt data. A cipher transforms plaintext into ciphertext that is not reversible without a key.

Ciphertext—text in encrypted form, as opposed to the plain text. We show ciphertext in UPPERCASE throughout this chapter.

Codes—a list of equivalences (a codebook) allows the substitution of meaningful text for words, phrases or sentences in an innocuous message; for example, “I will buy flowers for Mama tomorrow for her party at 7 pm” might be decoded to mean “Launch the attack on the mother ship next week on Sunday.”

Decrypt/Decipher—the process of retrieving the plaintext from the ciphertext.

Encrypt/Encipher—to alter plaintext using a secret code so as to be unintelligible to unauthorized parties.

Key—a word or system for solving a cipher or code.

Plaintext—the original message to be encoded or enciphered. We show plaintext in lowercase throughout this chapter.

The science of cryptology (sometimes abbreviated as crypto) is the study of secure communications, formed from the Greek words κρψπτoσ (kryptos), meaning “hidden,” and λoγoσ (logos), “word.” More specifically, it is the study of two distinct, yet highly intertwined, fields of study: cryptography and cryptanalysis. Cryptography is “the science of coding and decoding messages so as to keep these messages secure.”2 Cryptanalysis is the art and science of “cracking codes, decoding secrets, violating authentication schemes, and in general, breaking cryptographic protocols,”3 all without knowing the secret key. Systems for encrypting information are referred to as cryptosystems.

Systems for encrypting information may also be referred to as ciphersystems, from cipher, meaning “zero,” or empty (a word rooted in the Arabic sifr). Terms using cipher and crypto are interchangeable, with some authors preferring cipher to avoid the religious and cultural connotations of crypt, a word with the same root as “encryption.” Thus, encryption may be referred to as encipherment, decryption referred to as decipherment, and so on.

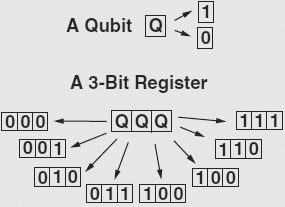

The most obvious use of encryption is to scramble the contents of a file or message, using some form of shared secret as a key. Without the key, the scrambled data remain hidden and cannot be unscrambled or decrypted. The total number of possible keys for an encryption algorithm is called the keyspace. The keyspace is a function of the length of the key and the number of possible values in each position of the key. For a keylength of n positions, with each position having v possible values, then the keyspace for that key would be vn. For example, with 3 positions and 2 values per position (e.g., 0 or 1), the possible keys would be 000, 001, 010, 011, 100, 101, 110, and 111 for a total keyspace of 8.

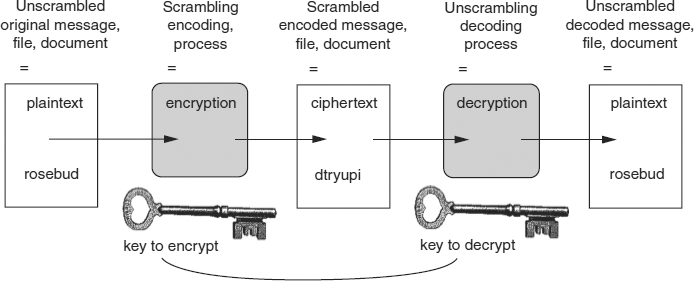

EXHIBIT 7.1 Diagram of Cryptographic Terms

In cryptographic terms, the contents of a file before encryption are plaintext, while the scrambled or encoded file is known as ciphertext (see Exhibit 7.1). As a field of intellectual activity, cryptology goes back many millennia. Used in ancient Egyptian, China, and India, it was discussed by the Greeks and regularly employed by the Romans. The first European treatise on the subject appeared in the fourteenth century. The subject assumed immense historic importance during both world wars. The British success in breaking codes that the Germans used to protect military communications in World War II was a major factor in both the outcome of the war and in the development of the first electronic computer systems.

Since then, cryptography and computer science have developed hand in hand. In 1956, the United States National Security Agency (NSA), the U.S. Government department in charge of monitoring the worldwide flow of information, began funding improvements in computer hardware, pumping some $25 million into Project Lightning. This five-year development effort, intended to produce a 1,000-fold increase in computing power, resulted in over 150 technical articles. It also gave rise to more than 300 patent applications and succeeded in advancing the frontiers of hardware design. The NSA, based in Fort Meade, Maryland, was also involved in the creation of DES as the commercial encryption standard for much of the last 20 years. Today, the NSA is widely believed to have the world's largest collection of supercomputers and the largest staff of cryptanalysts.

7.1.2 Role of Cryptography.

The central role of cryptography in computer security is ensuring the confidentiality of data. But cryptography can support other pillars of computer security, such as integrity and authenticity. This section looks at the different roles of cryptography.

7.1.2.1 Confidentiality.

The role of encryption in protecting confidentiality can be seen in a classic definition of encryption: “Encryption is a special computation that operates on messages, converting them into a representation that is meaningless for all parties other than the intended receiver.”4

Much of the literature on cryptography discusses the technology in terms of ensuring the confidentiality of messages, but this is functionally equivalent to protecting the confidentiality of data. The use of the term “message” reflects the traditional use to which cryptography has been put, both before and after the advent of computers. For example, Julius Caesar encrypted messages to Cicero 2,000 years ago, while today messages between a Web browser and a Web server are encrypted when performing a “secure” transaction.

When applying cryptography to computer security, it is sometimes appropriate to substitute the term “files” for “messages.” For example, hard drive encryption programs protect data files stored on a hard drive. However, data files take the form of messages when they are transferred from one computer to another, across a network, the Internet, or via phone lines. Practically speaking, data being transferred in this manner are exposed to a different set of dangers from those that threaten data residing on a computer in an office. Thus, the use of encryption to render files useless to anyone other than an authorized user is relevant both to files in transit and to those that reside on a server or a stand-alone computer, particularly when the latter is a laptop, notebook, or PDA.

7.1.2.2 Integrity.

In the second half of the last century, following the advent of programmable computer systems, the ability of cryptography to transform data was applied in many new and interesting ways. As will be seen in a moment, many cryptographic techniques use a lot of mathematical calculation. The ability of computers to perform many calculations in a short period of time greatly expanded the usefulness of cryptography, and also inspired the development of ever-stronger ciphersystems.

Maintaining the integrity of data is often as important as keeping them confidential. When writing checks, people take pains to thwart alteration of the payee or the amount. In some cases, integrity is more important than confidentiality. Changing the contents of a company press release as it passes from the company to the press could have serious consequences. It is not only human actions that threaten data integrity; mechanical failures and logical errors can also change data. It is vital that such changes be detected, as was discussed in Chapter 4 of this Handbook, where it was observed that “[a]ll data movements and translations increase the likelihood of internal error, and for this reason parity checks and validity tests have become indispensable.”

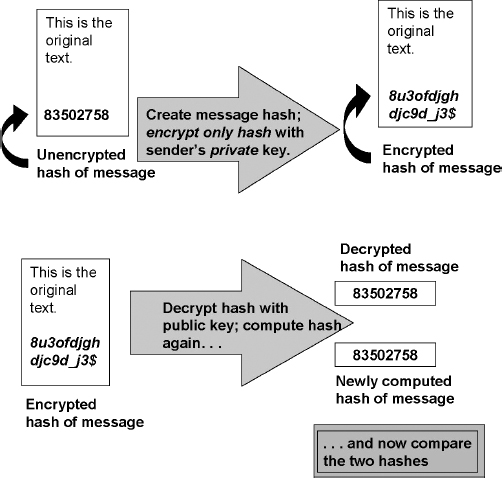

That chapter covered the role of parity bits for error detection, the function of redundancy checks, and the use of checksums to provide a modification-detection capability. A type of cryptographic hash or checksum called a Message Authentication Code (MAC) can protect against intentional, but unauthorized, data modification as well as against accidental modification. A MAC is calculated by applying a cryptographic algorithm and a secret value, called the key, to the data. The data are later verified by applying the cryptographic algorithm and the same secret key to the data to produce another MAC; this mAc then is compared to the initial MAC. If the two mACs are equal, then the data are considered authentic (see diagram in Exhibit 7.2, which uses the public key cryptosystem, discussed later). Otherwise, an unauthorized modification is assumed (any party trying to modify the data without knowing the key would not know how to calculate the appropriate MAC corresponding to the altered data).

7.1.2.3 Authentication.

In the context of computer security, authentication is the ability to confirm the identity of users. For example, many computers now ask users to log on before they can access data. By requesting a user name and password, systems attempt to assure themselves that only authentic users can gain access. However, this form of authentication is limited—merely assuring that the person logging on is someone who knows a valid user name and password pair. Cryptography plays a very important role in efforts to ensure stronger authentication, from encrypting the password data to the creation and verification of electronic identifiers such as digital signatures. These will be described in more detail later in this chapter, along with the differences between public key and private key cryptography, both of which may be used in these schemes.

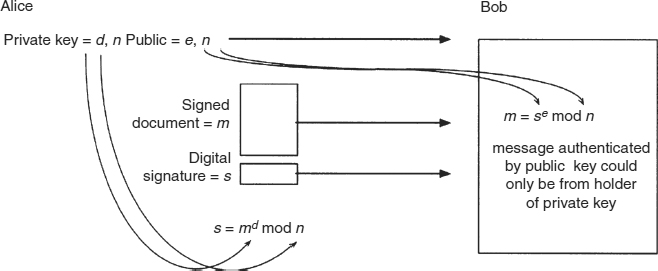

EXHIBIT 7.2 Message Authentication Code Using Public Key Cryptosystem

Source: Copyright © 2008 M. E. Kabay. Used with permission.

Using a public key system, documents in a computer system can be electronically signed by applying the originator's private key to the document. The resulting digital signature and document then can be stored or transmitted. The signature can be verified using the public key of the originator. If the signature verifies properly, the receiver has confidence that the document was signed using the private key of the originator and that the message had not been altered after it was signed. Because private keys are known only to their owner, it is also possible to verify the originator of the information to a third party.

7.1.2.4 Nonrepudiation.

An aspect of computer security that has increased greatly in significance, due to the growth in internetwork transactions, is nonrepudiation. For example, if someone places an electronic order to sell stocks that later increase in value, it is important to prove that the order definitely originated with the individual who placed it. Made possible by public key cryptography, nonrepudiation helps ensure that the parties to a communication cannot deny having participated in all or part of the communication.

7.1.3 Limitations.

One role that cryptography cannot fill is defense against data destruction. Although encryption does not assure availability, it does represent a very valuable extra line of defense for computer information when added to physical security, system access controls, and secure channels of communication. Indeed, when computers are mobile, or data are being communicated over insecure channels, encryption may be the main line of defense. However, even though applied cryptography can provide computer users with levels of security that cannot be overcome without specialized knowledge and powerful computers, encryption of data should not be thought of as an alternative to, or substitute for, system access control. According to Seberry and Pieprzyk4, the role of cryptography is to protect “information to which illegal access is possible and where other protective measures are inefficient.”

Encryption-based file access controls should be a third barrier after site and system access controls, if for no other reason than that encryption systems alone do little to prevent people deleting files.

7.2 BASIC CRYPTOGRAPHY.

The aim of cryptography is to develop systems that can encrypt plaintext into ciphertext that is indistinguishable from a purely random collection of data. This implies that all of the possible decrypted versions of the data except one will be hopelessly ambiguous, with none more likely to be correct than any of the others. One of the simplest ways to create ciphertext is to represent each character or word in the plaintext by a different character or word in the ciphertext, such that there is no immediately apparent relationship between the two versions of the same text.

7.2.1 Early Ciphers.

It is believed that the earliest text to exhibit the baseline attribute of cryptography, having a slight modification of the text, occurred in Egypt nearly 4,000 years ago. A scribe used a number of unusual symbols to confuse or obscure the meaning of the hieroglyphic inscriptions on the tomb of a nobleman named Khnumhotep II.5

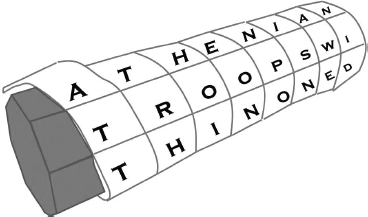

It is also believed that the first effective military use of cryptography was a simple transposition cipher (see Section 7.3.3) by the Spartans, who “as early as 400 BCE employed a cipher device called the scytale for secret communication between military commanders.”6 The scytale was a cylindrical or tapered stick with a thin strip of leather or parchment wrapped around it spirally.7 The message to be hidden was written lengthwise with no blank spaces. When unraveled, the parchment appeared to hold nothing but random letters. To read the parchment, the recipient had to have a stick with exactly the same dimensions as the sender. The distribution of appropriate decoding scytales took place before the military commanders departed for the field.8 For example, a particular combination of stick and strip could allow the cleartext (shown in lowercase):

atheniantroopswithinonedaysmarchofromebereadynow

to be broken into up to six rows of eight letters to be written across the rolled-up strip, in this way:

athenian

troopswi

thinoned

EXHIBIT 7.3 Scytale in Use

Source: Copyright © 2008 M. E. Kabay. Used with permission.

aysmarch

ofromebe

readynow

The message might appear on the scytale as shown schematically in Exhibit 7.3.

Reading the unwrapped strip without the stick would produce this ciphertext (shown in uppercase):

ATTAORTRHYFEHOISREEONMODNPOAMYISNRENAWECBONIDHEW

“The first attested use of [a substitution cipher] in military affairs comes from the Romans.”9 During that time, Julius Caesar encoded all his messages by simply replacing every letter with the letter three positions away. For example, the letter a would become the letter d, the letter b would become the letter e, and so on. Now called the Caesar cipher, this scheme is best-known of all the monoalphabetic algorithms (see Section 7.2.5).10 Consider the Caesar cipher illustrated in the next comparison using the modern English alphabet, with the letters of the alphabet simply shifted three places.

Plaintext: abcdefghijklmnopqrstuvwxyz Ciphertext: DEFGHIJKLMNOPQRSTUVWXYZABC

To encrypt a message, the sender finds each letter of the message in the plaintext alphabet and uses the letter below it in the ciphertext alphabet. Thus the clear message:

Plaintext: beware the ides of march

is transformed into the encrypted message:

Ciphertext: EHZDUH WKH LGHV RI PDUFK

This type of cipher is known as a substitution cipher. Although the Caesar cipher is relatively simple, substitution ciphers can be very powerful. Most examples of the Caesar cipher shift the alphabet three places, as shown, so that the ciphertext line begins with d, but some authors suggest Caesar might have used other numbers, so the term “Caesar cipher” is used for all ciphers that conform to this algorithm (an algorithm being a formula or recipe for solving a problem).

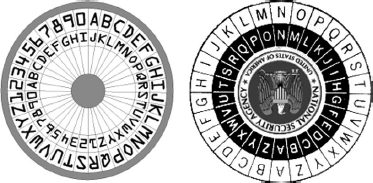

This level of encryption might seem rudimentary, but it is an important starting point for much that follows. For example, one way to visualize the Caesar cipher is as a pair of rings, one inside the other, as shown in Exhibit 7.4. Both circles contain the letters of the alphabet. If one is rotated relative to the other the result is a cipher wheel, something well suited to automation. Eventually this happened, at first mechanically, then electrically, and today digitally. Automation facilitates repetition, and messages encrypted with a substitution cipher can be more difficult to decipher if multiple different substitutions are used. Thus the code wheel earned a place in the seal of the NSA, the U.S. Government agency most influential in the development of encryption.

EXHIBIT 7.4 Code Wheels and the NSA Seal

7.2.2 More Cryptic Terminology.

The key or password for the Caesar cipher presented in the last section is the number of places the alphabet has been shifted, in this case three. Because this key must be kept private in order for the message to remain protected, it must be delivered to the recipient for the message to be decoded, or decrypted, back to plaintext. That is why the Caesar cipher is described as a private key algorithm and also a symmetrical encryption algorithm, the same private key being used to encrypt and decrypt the message. Algorithms of this type can be defeated by someone who has the key, an encrypted message, and knowledge of the algorithm used. This might sound like a statement of the obvious; however, as will be seen later in this chapter, there are encryption algorithms that use keys that can be openly exchanged without rendering the encrypted data accessible. Knowledge of the algorithm used can often be derived, or reverse-engineered, by analysis of its output.

Another seemingly obvious fact is that when a private key cipher is used in an effort to achieve confidentiality, one problem is swapped for another. The problem of exchanging messages while keeping the contents from unintended recipients is replaced by the problem of exchanging keys between sender and receiver without disclosing the keys. This new problem is known as the key-exchange problem. The key-exchange problem will be examined in more detail later.

7.2.3 Basic Cryptanalysis.

“The first people to understand clearly the principles of cryptography and to elucidate the beginnings of cryptanalysis were the Arabs.”11 By the fifteenth century, they had discovered the technique of letter frequency distribution analysis and had successfully decrypted a Greek message on its way to the Byzantine Emperor.12 In 1492, a man known as al-Kalka-shandi described this technique in an encyclopedia. He also described several cryptographic techniques, including substitution and transposition ciphers.13

Returning to the Caesar cipher, consider how this code could be broken using the science of cryptanalysis. When examined for a length of time, this particular code is fairly transparent. As soon as several letters are identified correctly, the rest fall into place. For example, because “the” is the most common three-letter word in the English language, testing “XLI” against “the” reveals that each letter of plaintext has a fixed relationship to the ciphertext: a shift of three to the right.

If that difference is applied to the rest of the message, the result is a piece of plaintext that is intelligible and thus assumed to be the correct solution to the problem. However, even in this simple example several sophisticated processes and assumptions are at work; they deserve closer attention before looking at more complex codes. First, the test of “the” against “XLI” assumes that the plaintext is English and that the attacker has some detailed knowledge of that language, such as the frequency of certain words. Second, it is assumed that the ciphertext follows the plaintext in terms of word breaks. Typically, this is not the case. Ciphertext usually is written in blocks of letters of equal length to further disguise it, as in:

Ciphertext: EHZDU HWKHL GHVRI PDUFK

When the recipient of the message decrypts it, the result, while not exactly easy reading, is nevertheless entirely intelligible:

Plaintext: bewar ethei desof march

Also note the convention of ignoring the case of individual letters and placing all plaintext in lowercase while all ciphertext is in capitals.

7.2.4 Brute Force Cryptanalysis.

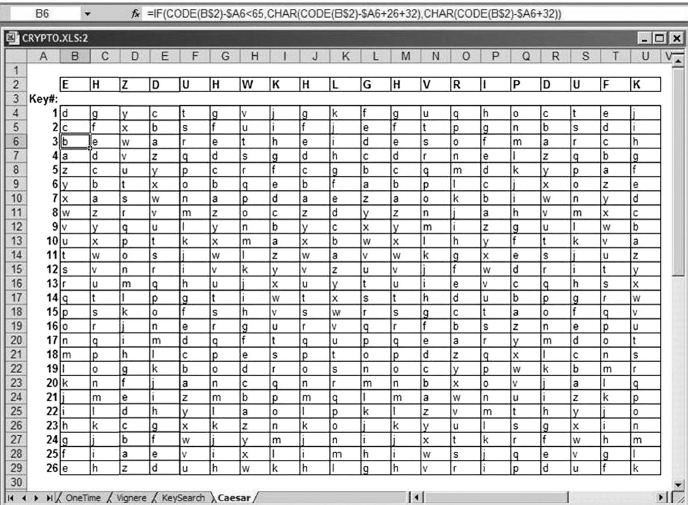

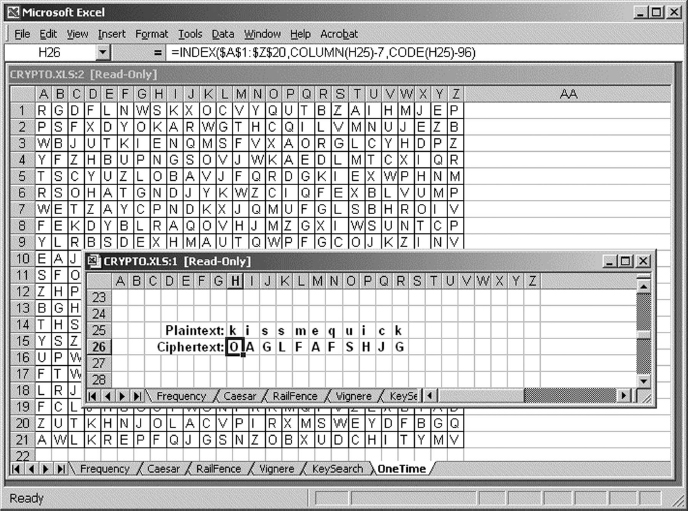

The next thing to note about the Caesar cipher is that, using the English alphabet, there are 26 possible keys. This means that someone intercepting the encrypted message could mount a standard form of attack known as brute force cryptanalysis. This method runs possible keys through the decryption algorithm until a solution is discovered. Statistically speaking, the correct key is reached after testing only half of all possible keys. In Exhibit 7.5, a spreadsheet table details a brute force attack on the Caesar ciphertext. In the example, the plaintext appears in line 6, Key #3.

EXHIBIT 7.5 Brute Force Attack on the Caesar Cipher

Note that three items of information are required for this attack, and all three of them are relevant to encryption on personal computers:

- A knowledge of the encryption algorithm used

- The number of possible keys

- The language of the plaintext

Using a computer in an office is somewhat different from sending messages on the field of battle (at least on a good day). Unlike an enemy spy, someone who is attempting to gain unauthorized access to data already has a fairly good idea of which algorithm is being used. (There are relatively few in use, and they often are directly associated with particular applications). This takes care of the first item. The primary obstacle to a brute force attack is the second item, number of keys. In the case of the Caesar cipher, the number of possible keys is relatively small, so the work involved in carrying out the attack can be completed very quickly, which is highly significant. Time is often the most important factor in practical cryptanalysis. Being able to decrypt messages within 24 hours is of little use if the information pertains to events that are measured in minutes, such as orders to buy and sell stock, or to launch air raids. If the cipher consisted entirely of random letter substitutions, like this:

Plaintext: abcdefghijklmnopqrstuvwxyz Ciphertext: UTWFRAQOYSEDCKJVBXGZIPHLNM

The number of possible keys (the keyspace) is now 26!, or ~4.03 × 1026, which looks even more daunting when written out:

403,291,461,126,606,000,000,000,000

Imagine a brute force attack using a computer that can perform 1 million decryptions per microsecond (considerably more number crunching than the average personal computer can perform). Using a single processor, it could take over 10 million years to execute a brute force attack on this code. Fortunately for the code breaker, there are other ways of cracking substitution ciphers, as discussed in a moment. The point is that, while brute force attacks are possible, they are not always practical.

Although it is true that by the central limit theorem of statistics, the most likely number of trials required to hit upon the correct key is one-half the total keyspace, the average reduction by a factor of 2 is negligible in the face of computational periods measured in years and the difficulty of identifying cleartext in the morass of incorrect decryptions.

Functionally, brute force attacks depend upon knowing which encryption algorithm is behind the ciphertext. Practically, they depend upon the feasibility of successes within an appropriate time frame. They also depend upon the third item of information in the list above: knowledge of the language of the plaintext. The solution to the Caesar cipher in Exhibit 7.5 tends to jump out because it is closer to plain English than any of the other solutions. However, without knowing what constitutes plaintext, a brute force attack will, at best, be inefficient, and, at worst, unsuccessful. This part of cryptanalysis, recognizing a positive result, is less amenable to automation than any other. The difficulty is compounded by encryption of purely numerical results where the correct cleartext can be impossible to determine without extensive additional knowledge.

7.2.5 Monoalphabetical Substitution Ciphers.

Both the Caesar cipher and the random substitution cipher shown are examples of monoalphabetic ciphers. This means that one letter of ciphertext stands for one letter of plaintext. This renders such codes susceptible to an attack quite different from brute force. Suppose a customs officer attempts to discover when and how an illegal weapons shipment will be entering the country. The following message is intercepted:

YZYGJ KZORZ OYXZR RKZRK XUXRJ XRZXU YKQQQ

The person who encoded this text clearly substituted new letters for the original letters of the message. To the experienced code breaker or cryptanalyst, the task of deciphering this message is quite a simple one. First count how many times each letter occurs in the text. This produces a list like this:

Ciphertext: R Z X Y K J U O G Frequency: 6 6 5 4 4 2 2 2 1

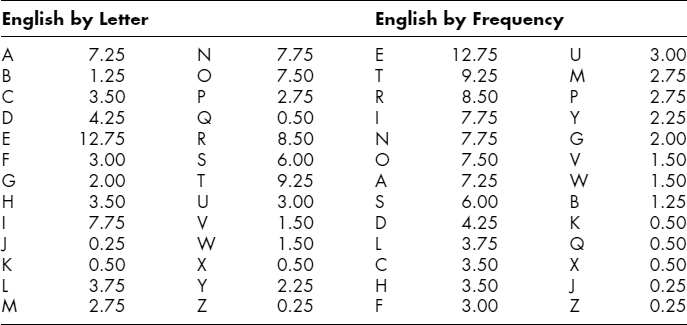

Note that the last three letters are discounted as they are merely filling out the five letter grouping. Next refer to a table of frequencies, which shows the relative frequency with which the letters of the alphabet occur in a specific language or dialect of that language. One such list is shown in Exhibit 7.6. This list was created for this example and proposes that the most commonly used letters in English in descending order of frequency are e, t, r, and so on. The actual order is more likely to be e, t, a, i, o, n, s, h, r, d, l, u, the order of keys on the English Linotype machine from the nineteenth century, although the precise order of frequencies can vary according to the region of origin or subject matter of the text.

Assuming that the original message is in English, a list that matches code letters to plaintext letters is easily derived.

Ciphertext: R Z X Y K J U O G Frequency: 6 6 5 4 4 2 2 2 1 Plaintext: e t r i n o a h s

EXHIBIT 7.6 Frequency Lists for English

Ciphertext: YZYGJ KZORZ OYXZR RKZRK XUXRJ XRZXU YKQQQ Plaintext: itiso nthet hirte enten rareo retra inqqq

This is readable as “it is on the thirteen ten rare ore train.” Although this example obviously was contrived to make a point, it clearly illustrates an important cryptographic tool that can quickly decipher something that at first seems to be very forbidding. The encryption in the previous example could have been based on a simple substitution cipher. For example, after using the password “TRICK” followed by the regular alphabet minus the letters in the password for the plaintext, the ciphertext is the alphabet written backward:

Plaintext: TRICKABDEFGHJLMNOPQSUVWXYZ Ciphertext: ZYXWVUTSRQPONMLKJIHGFEDCBA

Frequency analysis also works if the substitution is entirely random, as in the example shown earlier, the key for which is entirely random. The specialized tools, such as frequency tables, that are required to break codes point out a basic trade-off: If a basic level of protection is needed, it is easy to get but also easy to break, at least for an expert. The qualification “for an expert” is important because users of encryption need to keep its role in perspective. The salient questions are: Who can gain from decrypting the data, and what means do they have at their disposal? There is no point investing in powerful encryption hardware or software if those likely to attempt to read your files are not particularly sophisticated, dedicated, or well equipped. For example, a person who mails a postcard knows it can be read by anyone who sees it. Envelopes can be used to prevent this, hardly the ultimate in confidentiality, but widely used and relatively successful nonetheless.

7.2.6 Polyalphabetical Substitution Ciphers.

Even when the plaintext uses a wider range of letters than the contrived example, substitution ciphers can be cracked by frequency analysis. A powerful technique is to concentrate on the frequency of two-letter combinations, which are known as digraphs, the most common of which in English is “TH.” One way to counter frequency analysis is to use multiple substitutes for the more frequent letters. This cannot be done with a straightforward alphabetic coding. However, if using numbers for letters, it is possible to assign multiple numbers to some letters, such as 13 17 19 23 for E, which would help dilute the natural frequency of this letter. It would appear that supplying multiple substitutions, known as homophones, in proportion to the frequency of each letter would effectively counter frequency analysis. However, some of the underlying structure of the plaintext still survives, notably digraphs, which the cryptanalyst can use to crack the code.

In Europe during the Middle Ages, advances in cryptography were being made by the Papal States and Italian city-states to protect diplomatic messages. Then, in 1379, an Italian man named Gabriele de Lavinde created the first European manual on cryptography. “This manual, now in the Vatican archives, contains a set of keys for 24 correspondents and embraces symbols for letters, nulls, and several two-character code equivalents for words and names.”14 The nomenclature described by Lavinde's manual “was to hold sway over all Europe and America for the next 450 years.”15

Several other notable advances emerged in Europe during the period of Lavinde's manual. First, in 1470, Leon Battista Alberti published the first description of a cipher disk.16 Next, in 1563, Giambattista della Porta provided the first example of a digraphic cipher in which two letters are represented by one symbol.17

One method of decreasing the extent to which the structure of the plaintext is reflected in the ciphertext is to encrypt multiple letters of the plaintext. For example, “AR” might be encrypted as “CM.” This is the theory behind what is known as the Playfair cipher, which was invented in 1854 by a British scientist, Sir Charles Wheatstone, but that was named after his friend Baron Playfair who fought for its adoption by the British Foreign Office.18 Although the Playfair cipher remained in use through both world wars, it does not do enough to disguise the plaintext and cannot withstand a concerted frequency analysis.

7.2.7 The Vigenère Cipher.

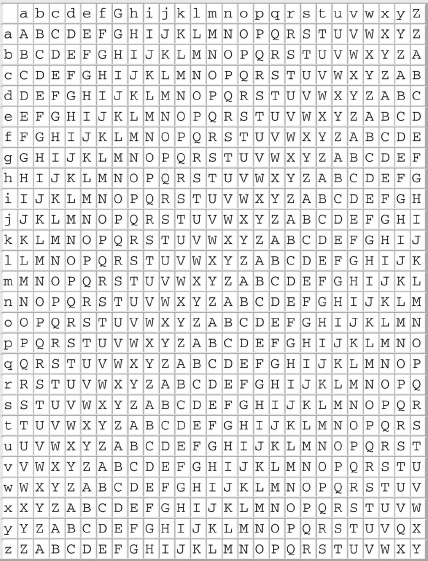

A particularly important technique in the evolution of polyalphabetic ciphers has its roots in the sixteenth century. In 1586, Blaise de vigenère published a square encryption/decryption table, named after him as the vigenère Square, and descriptions of the first plaintext and ciphertext autokey systems.19 The vigenère cipher involves a table of letters, like the one shown in Exhibit 7.7, that are used with a key, to provide different monoalphabetic substitutions as the encryption proceeds through the plaintext. Thus each letter of the ciphertext has a different relationship with the plaintext, like this:

Key: doomsdaydoomsdaydoomsdaydoomsday plaintext: sellentireportfolionowandbuygold ciphertext: VSZXWQTGJIAZVGYWCMDBFPFBOJIKUKLQ

The message is enciphered by looking at the row in the table that begins with the first letter of the key. Then go along that row until the column headed by the first letter of the plaintext. The ciphertext substitution is the letter at that intersection in the table.

EXHIBIT 7.7 Vigenère Table

Thus, row d, column s, yields V. Then proceed to the second letter, and so on. Note that the first time the letter e is encrypted the cipher is S, but the second time it is W. The two ls in sell are encoded as Z and X respectively, and so on.

Does this cipher completely obscure the structure of the plaintext? Stallings notes: “If two identical sequences of plaintext letters occur at a distance that is an integer multiple of the keyword length, they will generate identical ciphertext sequences.”20 This means that the cryptanalyst can determine the length of the keyword. Once this is done, the cipher can be treated as a number of monoalphabetic substitutions, that number being equal to the key length. Frequency tables are again brought into play, and the code can be cracked. The cryptographer's response to this weakness is to use a longer key, so that it repeats less often. In fact, one technique, autokey, invented by vigenère, is to form the key from the plaintext itself, together with one code word, like this

Key: doomsdaysellentireportfolionowan plaintext: sellentireportfolionowandbuygold ciphertext: VSZXWQTGJIAZVGYWCMDBFPFBOJILUKLQ

This system is very powerful, but it still can be attacked by statistical analysis based on frequencies, because the letters of the plaintext and key share roughly the same frequency distribution. The next level of defense is to use a keyword that is as long as the plaintext but bears no statistical relationship to it. This approach, which is of great cryptographic significance, was not hit upon until the twentieth century arrived, bringing with it binary code and global warfare.

7.2.8 Early-Twentieth-Century Cryptanalysis.

The advent of modern cryptography began with the invention and development of the electromagnetic telegraph system and the introduction of the Morse code. Samuel Morse brought a system of dots and dashes that allowed near real–time long-distance communication. He envisioned this system as a means of secure communications. It would be up to others to devise systems to encrypt telegraphic communications. Anson Stager, the supervisor of the U.S. Military Telegraph during the Civil War. Stager devised 10 ciphers for the Union Army that were never broken by the Confederacy.21

The use of telegraphic ciphers and codes continued into the two world wars. In fact, one of the most famous early successes of cryptanalysis prompted the entrance of the United States into World War I. When the war first started, the German transatlantic telegraph cable had been cut by the British, forcing all of Germany's international communications to route through the United Kingdom before being sent on to the Swedish or American transatlantic lines.22 In 1917, “British cryptographers deciphered a telegram from German Foreign Minister Arthur Zimmermann to the German Minister to Mexico, von Eckhardt.”23 It promised Mexico ownership over territory that belonged to the United States (California, e.g.), if Mexico joined the German cause and attacked the United States. The British informed President Wilson of their discovery, giving him a complete copy of the telegram, thus resulting in the United States declaring war on Germany.24 That telegram has become famous in the history of cryptanalysis as the Zimmermann Telegram.

World War II saw several Allied victories over the Axis powers by use of advanced cryptographic systems. Few of these victories are more widely known and celebrated than the cracking of the German Enigma cipher machine, described next:

Following the decryption of the Zimmerman Telegram during World War I and the effects that weak ciphers had on that war's outcome, Germany was looking for an unbreakable cipher and was interested in leveraging automation and the use of machinery to replace traditional paper and pencil techniques. The Enigma machine consisted of a basic keyboard, a display that would reveal the cipher text letter, and a scrambling mechanism such that each plain text letter entered as input via the keyboard was transcribed to its corresponding cipher text letter. The machine was modular in design and multiple scrambling disks were employed to thwart attempts at frequency analysis.25

A British cryptanalysis group, with the help of a group of Polish cryptanalysts, first broke the Enigma early in World War II, and some of the first uses of computers were for decoding Enigma ciphers intercepted from the Germans. Breaking Enigma was a major victory for the Allies, and in order to keep exploiting it, they kept the fact that they had cracked it a secret.26

Thus far, the encryption schemes or devices described have encrypted messages consisting of words and nothing more. However, the emergence of the computer, even in its initial rudimentary form, revolutionized cryptology “to an extent even greater than the telegraph or radio.”27 Most cryptologic advances since World War II have involved, or made use of, computers. In the last few decades, cryptographic algorithms have advanced to the point where computing them by hand would be unfeasible, and only computers can do the required mathematics.28 Relying on computers has broadened the kind of information that can benefit from encryption. Computers use a unique language that transforms all information stored into bits, each a 1 or a 0.29 “This, in effect, means that plaintext is binary in form, and can therefore be anything; a picture, a voice, an e-mail or even a video—it makes no difference, a string of binary bits can represent any of these.”30

7.2.9 Adding Up XOR.

In 1917, an engineer at AT&T, Gilbert Vernam, was working on a project to protect telegraph transmissions from the enemy. At that time, teletypewriters were used, based on a version of Morse code called Baudot code, after its French inventor. In Baudot code, each character of the alphabet is allotted five units, each of which is either an electrical current or absence of current, known as a mark or a space. For example, the letter a is represented by mark, mark, space, space, space. In binary terms, each unit constitutes a bit that is either 0 or 1 (the five-bit code for a would be 11000). This system of pulses allowed teletype machines to convert text to and from telegraph signals using a keyboard and punched paper tape for input (a hole represents a mark because it allows the reading device to make electrical contact and create a pulse, whereas a space is represented by leaving the paper intact). Anyone with a suitable machine could intercept and read the transmission.

The 32 possible combinations (25) in this code were assigned to the 26 letters plus six “shunts” that did various things like shift to capitals or go down to the next line. Vernam's brilliant idea was to use a tape of random characters in Baudot code as a key that could be electromechanically added to the plaintext. Kahn describes the method of addition like this:

If the key and plaintext pulses are both marks or both spaces, the ciphertext pulse will be a space. If the key pulse is a space and the plaintext pulse is a mark, or vice-versa, (in other words, if the two are different), the ciphertext will be a mark.31

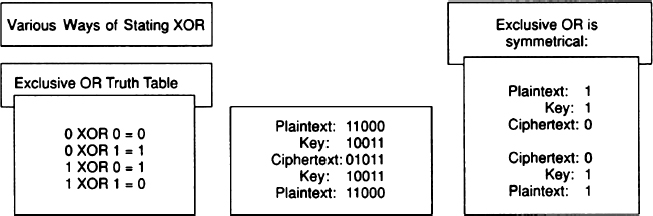

Today, this is known as Exclusive-Or, sometimes referred to as bit-wise XOR or just XOR for short (see Exhibit 7.8). XOR is widely used in computerized encryption schemes. Consider what happens when encoding the letter a using “B” as the key:

Plaintext: 1 1 0 0 0 (=a) Key: 1 0 0 1 1 (=B) Ciphertext: 0 1 0 1 1

EXHIBIT 7.8 Diagram of XOR

In the first column, 1 + 1 = 0, as indicated in Exhibit 7.8. To decipher the encrypted character simply perform the same operation, but add the ciphertext to the key:

Ciphertext: 0 1 0 1 1 Key: 1 0 0 1 1 (=B) Plaintext: 1 1 0 0 0 (=a)

At the time of its discovery, the significance of this method lay in its capacity for automation. The operator could feed the plaintext and key tapes into the teletype machine, and it would transmit an encrypted message with no further human input. No off-line preparation was required. Furthermore, as long as the receiver had the key tape, the teletype at the receiving end automatically printed out plaintext. This made Vernam's system the first to integrate encryption into the communication process, an essential feature of encryption systems for today's computer-based communications.

7.3 DES AND MODERN ENCRYPTION.

Although the use of XOR predated computers, the fact that it worked so well with binary code ensured that it would become an essential item in the modern cryptographer's toolkit. And so the focus of this chapter turns to modern cryptography and two of the most widely used cryptosystems today. The first is Data Encryption Standard (DES) and the second is Rivest, Shamir, Adleman (RSA).

7.3.1 Real Constraints.

As the preceding overview of the evolution of encryption suggests, major advances, which are few and far between, often are linked with the individuals who made them, such as vigenère, Playfair, and Vernam, none of whom had the benefit of computers. Today's computerized encryption schemes typically employ a number of classic techniques that, when combined, eliminate or minimize the short-comings of any single method. Several techniques will be discussed here, including transposition and rotors, that point the way to the most widely used encryption scheme to date: DES. First, however, consider the practical problems encountered by Vernam's otherwise brilliant scheme.

Vernam proposed a key that was a long series of random characters. This was coded on a loop of paper tape that eventually repeated (the tape held about 125 characters per foot). The length of the key made cryptanalysis of intercepted messages extremely difficult, but not impossible, because eventually the key repeated. With sufficient volume of ciphertext, the code would yield to frequency analysis. (Bear in mind that during time of war, or even military exercises, hundreds of thousands of words may be encrypted per day, providing a solid basis for cryptanalysis.)

7.3.2 One-Time Pad.

Several improvements then were suggested to avoid the impracticality of simply creating longer and longer key tapes. Another AT&T engineer, Lyman Morehouse, suggested using two key tapes of about eight feet in length, containing some 1,000 characters, to generate over 999,000 combinations of characters that could be fed into the encryption process as the key. This was an improvement in terms of practicality and security, but, as Major Joseph Mauborgne of the U.S. Army Signal Corps pointed out, heavy message traffic encrypted in this way still could be decoded. It was Mauborgne who realized that the only unbreakable cipher would use keys that are, as Kahn puts it “endless and senseless.”32 Thus he came up with what we know as the one-time system, the one unbreakable encryption scheme.

The one-time system sometimes is referred to as a one-time pad, because this is the way it has been deployed by intelligence agents in the field. The agent is issued a pad that aligns columns and rows of entirely random characters, as shown in Exhibit 7.9. The first letter of the plaintext is encrypted using the appropriate ciphertext from row 1, the second letter is encrypted from row 2, and so on. The result is ciphertext that contains no statistical relationship to the plaintext. When the message is encrypted the pad is destroyed. The recipient, who has a copy of the pad, uses it to reverse the process and decrypt the message.

The one-time pad essentially is a polyalphabetic substitution cipher, but with the same number of alphabets as there are characters in the message, thus defeating any kind of frequency analysis. A brute force attack is defeated by the fact that every possible result is as statistically significant as every other. As Kahn points out, a four-letter group of ciphertext could just as easily yield kiss, fast, slow, or any other possible four-letter combination.

EXHIBIT 7.9 One-Time Pad

So why is the unbreakable one-time system not in universal use? Well, it remains a favorite of intelligence agents in the field who have an occasional need to send short messages. However, for large-scale commercial or military encryption, it fails to solve the key size problem that Vernam's system brought to light. The key has to be as large as the total volume of encrypted information, and there is a constant demand for new keys. Furthermore, both sender and receiver have to hold and defend identical copies of this enormous key.

7.3.3 Transposition, Rotors, Products, and Blocks.

A completely different technique from substitution is transposition. Instead of substituting ciphertext characters for plaintext, the transposition cipher rearranges the plaintext characters. The simplest example is referred to as rail fence. For example, to encrypt “sell entire portfolio now and buy gold” each character is written on alternate lines, like this:

sletrprflooadugl elnieotoinwnbyod

which results in this ciphertext:

SLETRPRFLOOADUGLELNIEOTOINWNBYOD

So far, this does not present a serious challenge. More challenging is the next transposition into rows and columns that are numbered by akey (in this case, 37581426) so that the first set of ciphertext characters are under 1, the second under 2, and so on:

Key: 3 7 5 8 1 4 2 6

Plaintext: s e l l e n t i

r e p o r t f o

l i o n o w a n

d b u y g o l d

Ciphertext: EROGTFALSRLDNTWOLPOUIONDEEIBLONY

Although more complex, this transposition will still yield to cryptanalysis because it retains the letter frequency characteristics of the plaintext. The analyst also would look for digraphs and trigraphs while playing around with columns and rows of different length. (Kahn describes French code breakers during World War I literally cutting text into strips and sliding them up and down against each other to break German transposition ciphers.)

What makes transposition difficult to decipher is additional stages of encryption. For example, if the previous ciphertext is run through the system again, using the same key, all semblance of pattern seems to disappear.

Key: 3 7 5 8 1 4 2 6

Plaintext: e r o g t f a l

s r l d n t w o

l p o u i o n d

e e i b l o n y

Ciphertext: TNILAWNNESLEFTOOOLOILODYRRPEGDUB

The development of increasingly complex multiple-transposition ciphers pointed out the positive effects of multiple stages of encryption, which also apply to substitution ciphers. The prime examples of this are the rotor machines used by the Germans and Japanese in World War II. Some of the insights gained during the attack on German codes, such as Alan Turing's 1940 work on the application of information statistics to cryptanalysis, were considered so important that they remained classified for more than 50 years.

Although they eventually were defeated by Allied cryptanalysts, electromechanical systems such as Enigma were not only the most sophisticated precomputer encryption systems, but the effort to crack them was also a major catalyst in the development of computer systems themselves. When people started applying computer systems to code making rather than code breaking, they quickly hit upon the idea of chopping plaintext into pieces, or blocks, for easier handling. The term “block cipher” is used to describe ciphers that encrypt one block (e.g., 8 bytes of data) at a time, one block after another. Another result of computerizing the encryption process is a class of ciphers known as product ciphers. A product cipher has been defined as “a block cipher that iterates several weak operations such as substitution, transposition, modular addition/multiplication [such as XOR], and linear transformation.”33

The mathematics of product ciphers are beyond the scope of this chapter, but it is useful to note that “[n]obody knows how to prove mathematically that a product cipher is completely secure…[A] product cipher should act as a ‘mixing’ function which combines the plaintext, key, and ciphertext in a complex nonlinear fashion.”34 The parts of the product cipher that perform the rounds of substitution are referred to as S-boxes. The product cipher called Lucifer has two of these S-boxes, while DES encryption has eight S-boxes. The ability of a product cipher to produce truly random, nonlinear ciphertext depends on careful design of these S-boxes.

Examples of modern product ciphers include Lucifer (developed by IBM), DES (developed by IBM/NSA), LOKI (Brown, Pieprzyk, and Seberry), and FEAL (Shimizu and Miyaguchi). A class of product ciphers called Feistel ciphers operates on half of the ciphertext at each round, then swaps the ciphertext halves after each round. Examples of Feistel ciphers include Lucifer and DES, both of which are commercial systems, the subject of the next section of this chapter.

7.3.4 Data Encryption Standard.

Traditionally, the primary markets for code makers and computer makers have been the same: governments and banks. After World War II, computers were developed for both military and commercial purposes. By the mid-1960s, the leading computer maker was IBM, which could see that the growing role of electronic communications in commerce would create a huge market for reliable encryption methods. Over a period of years, mathematicians and computer scientists including Horst Feistel at the IBM research lab in Yorktown Heights, New York, developed a cipher called Lucifer that was sold to Lloyds of London in 1971 for use in a cash-dispensing system.35

The U.S. National Security Agency was in close touch with the Lucifer project, making regular visits to the lab (the constant flow of personnel between the NSA, IBM, and the mathematics departments of the major American universities tended to ensure that all new developments in the field were closely monitored). At roughly the same time, the National Bureau of Standards (NBS) was developing standard security specifications for computers used by the federal government. In 1973, the NBS invited companies to submit candidates for an encryption algorithm to be adopted by the government for the storage and transmission of unclassified information. (The government handles a lot of information that is sensitive but not sufficiently relevant to national security to warrant classification.)

IBM submitted a variation of its Lucifer cipher to the NBS, and after extensive testing by the NSA, this cipher was adopted as the nation's Data Encryption Standard (DES). The acronym actually refers to a document published as Federal Information Processing Standards Publication 46, or FIPS PUB 46 for short. This was published on January 15, 1977 and DES became mandatory for all “federal departments and agencies, for any…nonnational-security data.”36 The federal mandate also stated that commercial and private organizations were to be encouraged to use DES.37 As a result, DES became widely used, especially in the banking industry.38 The heart of DES is the Data Encryption Algorithm (DEA), which is described in a publication of the American National Standards Institute, titled American National Standard for Information Systems—Data Encryption Algorithm—Modes of Operation, 1983, referred to as ANSI X3.106-1983.

7.3.5 DES Strength.

DES became, and remained, the de facto standard for commercial encryption until the late 1990s, when doubts about its strength relative to the rapid advances in computer hardware and software led to a quest for an eventual replacement. However, DES is still widely deployed, so more detailed discussion of its use is needed before discussing its replacement. The first thing to note is that the only known method of deciphering data encrypted with DES without knowledge of the key is the use of brute force. This involves the computerized comparison of plaintext data with encrypted versions of the same data, using every possible key until both versions of the data match. With DES, the number of possible combinations is about 70 quadrillion. That is a very big number, and trying all those combinations within anything less than years requires relatively expensive hardware (or the carefully orchestrated application of large amounts of cheap hardware).

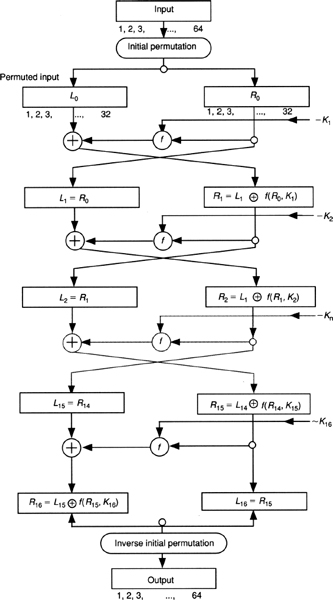

Technically speaking, the DEA is a combined substitution/transposition cipher, a product cipher that operates on blocks of data 64 bits, or 8 bytes, in length. Using 56 bits for the key produces a keyspace of 256, or 72, 057, 594, 037, 927, 940, a number in the region of 70 quadrillion. A diagram of DES is shown in Exhibit 7.10.

The difficulty of attacking DES can be increased fairly easily if double or triple encryption is used, but despite this, there has always been something of a cloud over DES. At the time the DEA was approved, two Stanford University professors who are preeminent in twentieth century cryptography, Martin Diffie and Whitfield Hellman, pointed out that the algorithm, as approved by the NBS, would be increasingly vulnerable to attack as computer equipment increased in power and came down in cost.

7.3.6 DES Weakness.

As the author George Sassoon writes, “Although both the U.S. Department of Commerce and IBM deny it vigorously, everyone in the know insists that the NSA enforced a halving of the DES key length to ensure that they themselves could break the ciphers even if nobody else could.” Although the NBS dismissed such criticisms, and the NSA flatly denied that they were behind any attempts to weaken the cipher, this opinion received some support from the NSA in 1986 when the agency announced it would no longer certify the DEA for nonclassified use, less than 10 years after the DES was approved. This move was prompted by the rapid development of parallel computers, which achieve amazing processing capabilities by using hundreds or even thousands of multiple processors, working in parallel. These machines offer enormous power at considerably less cost than traditional supercomputers. Perhaps the NSA could see the inevitability of something like the EFF DES Cracker, which was built in 1998 for less than $250,000 and broke a DES-encrypted message in fewer than three days.

The original Lucifer cipher used data blocks of 128 bits and a key of 112 bits. If this had been adhered to in the DEA, the difference in the number of possible key combinations would have been staggering. Although 256, the current keyspace, is a number greater than 7 with 16 zeroes behind it, 2112 is greater than 5 with 33 zeroes behind it. The practical consequence of this weakness in the DEA meant that the demand for stronger algorithms remained, and promising new ones emerged, such as Bruce Schneier's Blowfish.

EXHIBIT 7.10 Diagram of DES

There are still some positive aspects to DES that make it viable for some commercial uses. As was mentioned earlier, the cryptographic weakness of DES can easily be strengthened by double encryption, which doubles the difficulty of decryption, taking the task well into the realm of supercomputers and purpose-built, massively parallel machines. The fact that DES has been a standard for so long means that DES now is available in many forms, such as single-chip implementations that can be inserted into ROM sockets and integrated into all manner of hardware, such as expansion cards, PCMCIA cards, and smart cards.

7.4 PUBLIC KEY ENCRYPTION.

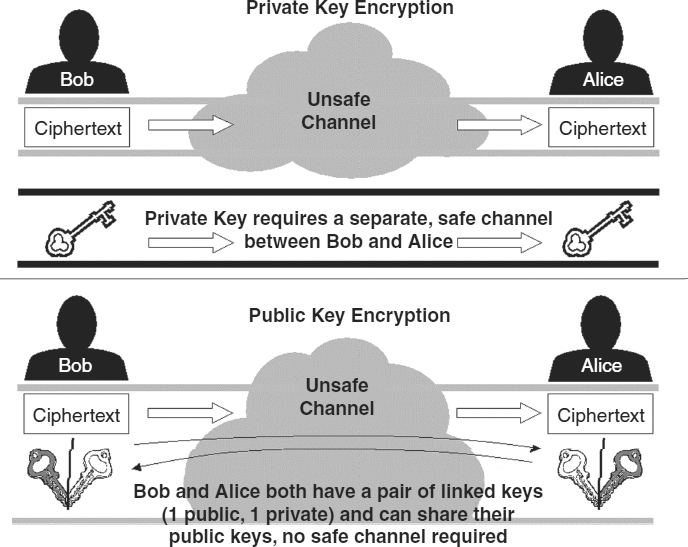

Even with a longer key, the DEA still would have a major weakness, one that it shares with all of the other private key encryption systems mentioned so far. That weakness is the need to keep the key secret. In this section we examine this problem, and the “public key” solutions that are now available.

7.4.1 Key-Exchange Problem.

When password-protected data are sent from one place to another, either electronically or by hand, the need to transmit the password to the recipient presents serious obstacles. In cryptography, these are known collectively as the key-exchange problem. This is the way it is described by the Crypt Cabal:39

If you want your friends to be able to send secret messages to you, you have to make sure nobody other than them sees the key….[This is] one of the most vexing problems of all prior cryptography: the necessity of establishing a secure channel for the exchange of the key. To establish a secure channel, one uses cryptography, but private-key cryptography requires a secure channel!

So, even when using very powerful private key systems, such as DES, password or key distribution is a major problem. After all, the reason for encrypting valuable information in the first place is because it is assumed someone is trying to steal it or tamper with it. This implies a motivated and skilled adversary. Such an adversary is likely to use every opportunity to discover the password that will unlock the information. The password is perhaps most at risk from such an adversary when it is passed from one person to another. Although it sounds like the stuff of Bond movies, it actually is a very real and practical problem that had to be faced in many areas of legitimate organized activity, from businesses to public institutions, even when a powerful DEA-based computerized encryption system became available.

suppose an Suppose an encrypted file of sensitive accounting data needs to get to the head office. How does the recipient know the password needed to access the file? The sender could make a phone call. But will it be overheard? How is the identity of the person at the other end to be verified? A courier could be dispatched with a sealed envelope. The password could be encrypted. But all of these channels present problems. How to guarantee that the courier is honest or that the envelope will arrive intact? And if the password is encrypted, it will need a password itself, which will have to be transmitted. The recipient of the file can be provided with the password before the message is encrypted, but this is no guarantee that the password will not be intercepted. There are ways of making matters more difficult for the attacker, but the ideal solution would be to use a key that was useless to the attacker. This possibility is diagrammed in Exhibit 7.11.

EXHIBIT 7.11 Comparison of Private and Public Key Encryption

7.4.2 Public Key Systems.

A public key encryption system offers encryption that does not depend on the decryption key remaining a secret. It also allows the receiver of keys and messages to verify the source. The first published description of a public key cryptosystem appeared in 1976, authored by Stanford University professor Martin Hellman and researcher Whitfield Diffie. Ralph Merkle independently arrived at a similar system.

Ralph Merkle first proposed the idea of public key cryptography in 1974, and Martin Hellman and Whitfield Diffie brought the same idea to the public forum in 1976.40 The idea was considered a seminal breakthrough, “for it had not occurred to anyone else in the long history of cryptology that the deciphering key could be anything other than the inverse of the enciphering key.”41 The Diffie-Hellman system employs a form of mathematics known as modular arithmetic. “Modular arithmetic is a way of restricting the outcome of basic mathematical operations to a set of integers with an upper bound.”42 An excellent example of this mathematical principle is found by examining a military clock:

Consider a clock on military time, by which hours are measured only in the range from zero to 23, with zero corresponding to midnight and 23 to 11o'clock at night. In this system, an advance of 25 hours on 3 o'clock brings us not to 28 o'clock, but full circle to 4 o'clock (because 25 + 3 = 28 and 28 − 24 = 4). In this case, the number 24, an upper bound on operations involving the measurement of hours, is referred to as a modulus. When a calculation involving hours on a clock yields a large number, we subtract the number 24 until we obtain an integer between 0 and 23, a process known as modular reduction. This idea can be extended to moduli of different sizes.43

The Diffie-Hellman protocol allows two users to exchange a symmetric key over an unsecure medium without having any prior shared secrets. The protocol has two publicly known and widely distributed system parameters: p, a large prime integer that is 1,024 bits in length,44 and g, an integer less than p. The two users wishing to communicate are referred to as Alice and Bob for simplicity's sake. They proceed in this way.

First, Alice generates a random private value a, and Bob generates a random private value b. Both a and b are [less than p]. Then they derive their public values using parameters p and g and their private values. Alice's public value is ga mod p and Bob's public value is gb mod p. They then exchange their public values. Finally, Alice computes gab = (gb)a mod p, and Bob computes gba = (ga)b mod p. Since gab = gba = k, Alice and Bob now have a shared secret key k.45

This protocol introduced a concept to cryptography known as the discrete log problem. “The discrete log problem is stated as follows: given g, p, and gx mod p, what is x?”46 It is generally accepted throughout the mathematical and cryptologic communities that the discrete log problem is difficult to solve, difficult enough for algorithms to rely on it for security.47

An algorithm to perform public key encryption was published in 1977 by Ronald Rivest of MIT, Adi Shamir of the Weizmann Institute in Israel, and Leonard Adleman of the University of Southern California. These three men formed the RSA Data Security Company, which was granted an exclusive license to the patent that MIT obtained on their algorithm. A large number of companies licensed software based on this algorithm, from AT&T to IBM and Microsoft. The RSA algorithm is currently at work in everything from online shopping to cell phones. Because it resolved the secret key dilemma, public key cryptography was hailed by many as a revolutionary technology, “representing a breakthrough that makes routine communication encryption practical and potentially ubiquitous,” according to Sci. Crypt FAQ, which states:

In a public-key cryptosystem, E_K can be easily computed from some public key X, which in turn is computed from K. X is published, so that anyone can encrypt messages. If decryption D_K cannot be easily computed from public key X without knowledge of private key K, but readily with knowledge of K, then only the person who generated K can decrypt messages.

The mathematical principles that make this possible are beyond the scope of this chapter. Somewhat more detail can be found in the RSA Laboratories' Frequently Asked Questions About Today's Cryptography, which is distributed by RSA Data Security, the company that markets products based on the RSA algorithm. In brief, public key encryption is possible because some calculations are difficult to reverse, something pointed out by Diffie and Hellman who first published the idea of public key encryption. Here is how RSA describes the calculations that make it possible (with minor clarification from the author):

Suppose Alice wants to send a private message, m, to Bob. Alice creates the ciphertext c by exponentiating:

c = me mod n

where e and n are Bob's public key. To decrypt, Bob also exponentiates:

m = cdmod n

where d is Bob's private key. Bob recovers the original message, m; the relationship between e and d ensures that Bob correctly recovers m. Because only Bob knows d, only Bob can decrypt.

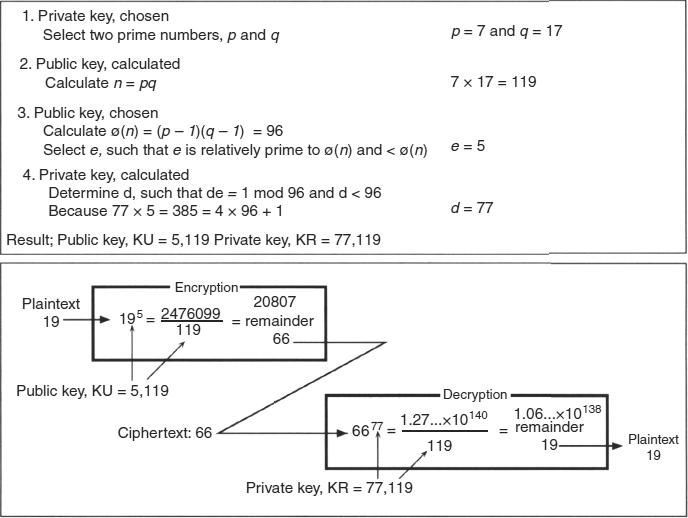

EXHIBIT 7.12 Public Key Diagram

This is diagrammed in Exhibit 7.12, which follows the scenario described. The lower part of the diagram uses numbers taken from an example given by Stallings. These numbers are much smaller than the actual numbers used by RSA. The point is that, given the ciphertext (c) and the public key (e, n) and knowledge of the algorithm, it is still impractical to decipher the message (m). This is because n is created by multiplying two prime numbers (normally represented as p and q) and e is derived from n combined with the secret key, d. To break the cipher, you need to factor a large number into a pair of prime numbers. How large? More than 150 digits in length (that is digits, not bits).

This cryptanalysis is very hard to do in a meaningful period of time, even with a very powerful computer. Large networks of computers have successfully factored a 100-digit number into two primes, but the RSA algorithm can use numbers even bigger if computer power and factoring algorithms start to catch up to the current implementations.

7.4.3 Authenticity and Trust.

The point of the public key cryptosystems is to provide a means of encrypting information that is not compromised by the distribution of passwords, but public key encryption does not solve all problems associated with key exchange. Because the keys are considered public knowledge, some means “must be developed to testify to authenticity, because possession of keys alone (sufficient to encrypt intelligible messages) is no evidence of a particular unique identity of the sender,” according to Sci. Crypt FAQ.

This has led to key-distribution mechanisms that assure listed keys are actually those of the given entities. Such mechanisms rely on a trusted authority, which may not actually generate keys but does employ some mechanism which guarantees that “the lists of keys and associated identities kept and advertised for reference by senders and receivers are ‘correct’”(Sci. Crypt FAQ). Another approach has been popularized by the program called Pretty Good Privacy, or PGP. This is the “Web of trust” approach that relies on users to distribute and track each other's keys and trust in an informal, distributed fashion.

Here is how RSA can be used to send evidence of the sender's identity in addition to an encrypted message. First, some information is encrypted with the sender's private key. This is called the signature and is included in the message sent under the public key encryption to the receiver. The receiver can “use the RSA algorithm in reverse to verify that the information decrypts sensibly, such that only the given entity could have encrypted the plaintext by use of the secret key” (Sci.Crypt FAQ).

What does “decrypts sensibly” mean? The answer involves something called a message digest, which is “a unique mathematical summary of the secret message” (Sci.Crypt FAQ). In theory, only the sender of the message could generate his or her valid signature for that message, thereby authenticating it for the receiver. Here is how RSA describes authentication, as diagrammed below in Exhibit 7.13.

Suppose Alice wants to send a signed document m to Bob. Alice creates a digital signature s by exponentiating: s = md mod n, where d and n belong to Alice's key pair. She sends s and m to Bob. To verify the signature, Bob exponentiates and checks that the message m is recovered: m = se mod n, where e and n belong to Alice's public key.

7.4.4 Limitations and Combinations.

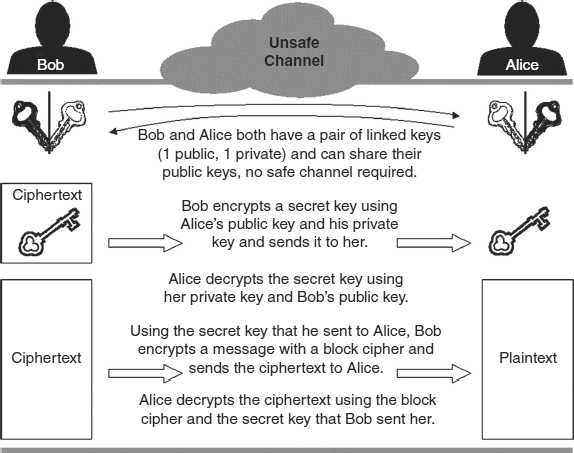

As mentioned earlier, many products use RSA today, including Microsoft Windows, Lotus Notes, Adobe Acrobat, Netscape Navigator, Internet Explorer, and many more. In most of these examples, RSA is used for its authentication capabilities rather than for large-scale data encryption. That is because public key systems have one very noticeable downside: They are slow. This is balanced by the fact that they are harder to break. According to RSA, DES generally is at least 100 times as fast as RSA when implemented in software. In hardware, DES is between 1,000 and 10,000 times as fast, depending on the implementations. RSA may narrow the gap in coming years as more specialized chips are developed. However, public key algorithms are unlikely to ever match the performance of private key ciphers such as DES. Fortunately there is a simple solution: Use a fast private key algorithm for the data encryption, but use a public key system to handle the key exchange and authentication, as diagrammed in Exhibit 7.14.

EXHIBIT 7.13 Authentication with RSA

EXHIBIT 7.14 Combining Public and Private Key Encryption

The private key encryption system might be DES or a system such as RC2 and RC4, both of which are available from RSA Data Security, or Schneier's Blowfish, which is freely available. Just as there are other private key systems besides DES, there are other public systems besides RSA. One method, called SEEK, is patented, trademarked, and marketed by Cylink of Sunnyvale, California. This method uses an alternative algorithm for public key distribution. Cylink manufactures a range of DES encryptors that use SEEK for key distribution.

7.5 PRACTICAL ENCRYPTION.

The primary market for encryption systems and devices is communications. However, the development of Internet commerce has resulted in a number of new and interesting crypto components that have considerable value for computer security.

7.5.1 Communications and Storage.

If you look at the commercial products on NIST's list of approved DES implementations, most are designed to protect information when it is being communicated, not when it is sitting on a machine for local use. This is understandable when you look at the development of computing, which has spread outward from “fortress mainframe.” Centralized data storage facilities lend themselves to physical access control. Encrypting data that stays behind walls and locked doors may be overkill in that scenario, particularly when there is a performance penalty involved.

Encryption was reserved for data in transit, between computers, across wires. This philosophy was extended to file servers on networks. File encryption on the server was not considered a priority as people assumed the server would be protected. Data encryption on stand-alone machines and removable media is a relatively recent development, particularly as more and more confidential data are packed into physically smaller and smaller devices. There are now many products with which to implement file encryption.

EXHIBIT 7.15 SSL 3.0 in Action

7.5.2 Securing the Transport Layer.

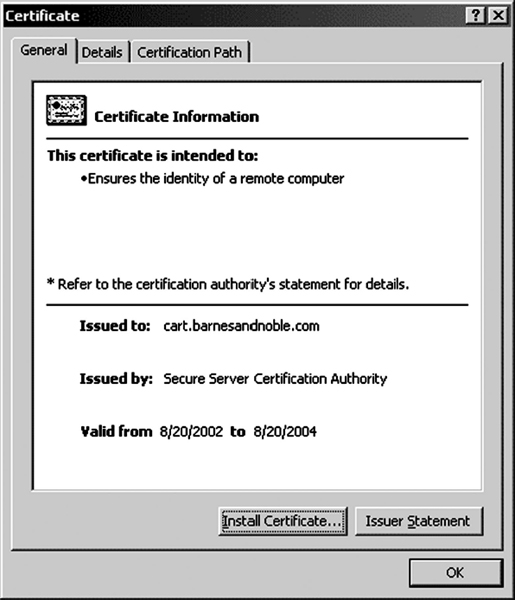

One of the most visible examples of encryption at work in computer security today is the security icon people see in their Web browser; see Exhibit 7.15 for examples of Netscape Navigator and Microsoft Internet Explorer. This is an example of something called transport layer security, which uses protocols that go by the name of SSL and TLS.

7.5.2.1 Popular Protocols.

SSLs stand for Secure Sockets Layer, the software encryption protocol developed by Netscape and originally implemented in Netscape Secure Server and the Netscape Navigator browser. SSL is also supported by Microsoft Internet Explorer and a number of other products. TLS stands for Transport Layer Security, the name given to an Internet standard based on SSL, by the IETF (as in Internet Engineering Task Force, RFC 2246). There are minor differences between SSLv3.0 and TLSv1.0 but no significant differences as far as security strength is concerned, and both protocols interoperate with each other.

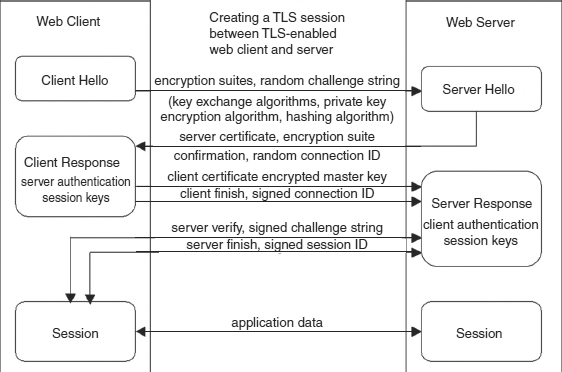

The TLS is a protocol, a standardized procedure for regulating data transmission between computers. It is actually composed of two layers of protocol. At the lowest level is the TLS Record Protocol, which is layered on top of some reliable transport protocol, typically the TCP in TCP/IP, the set of protocols that run the Internet. The TLS Record Protocol provides connection security that is both private (using symmetric cryptography for data encryption) and reliable (using a message integrity check). Above the TLS Record Protocol, encapsulated by it, is the TLS Handshake Protocol. This allows the server and client to authenticate each other, a major role for TLS in various forms of e-commerce, such as Internet banking. The TLS Handshake Protocol can also negotiate an encryption algorithm and cryptographic keys before any application protocol sitting on top of it, such as HTTP, transmits or receives its first byte of data (see Exhibit 7.16).

7.5.2.2 Properties of TLS.

In providing connection security, the TLS Handshake Protocol delivers three basic properties. The identity of the parties can be authenticated using public key cryptography (such as RSA). This authentication can be made optional, but typically it is required for at least one of the parties (e.g., the Yahoo Travel server authenticates itself to the user's browser client, but the user's client does not authenticate itself to the Yahoo Travel server, a distinction discussed in a moment).

The second and third basic properties of the TLS Handshake Protocol are that a shared secret can be securely negotiated, unavailable to eavesdroppers, even by an attacker who can place itself in the middle of the connection; and the protocol's negotiation is reliable. In the words of RFC 2246: “no attacker can modify the negotiation communication without being detected by the parties to the communication.”

EXHIBIT 7.16 Creating a TLS Session

TLS can use a variety of encryption algorithms. For the symmetric encryption that is part of the Record protocol, DES or RC4 can be used. The keys for this symmetric encryption are generated uniquely for each connection and are based on a secret negotiated by another protocol (such as the TLS Handshake Protocol). The record protocol includes a message integrity check using a keyed MAC, with secure hash functions such as SHA and MD5, used for MAC computations. The encryption suite to be used for a specific connection is specified during the initial exchange between client and server, as shown in Exhibit 7.16.

7.5.2.3 Tested in the Real World.

TLS/SSL has been widely used and extensively tested in the real world, and thoroughly probed by real cryptographers. Some of the caveats and limitations noted by these and other experts follow. The first is that neither a good standard nor a good design can guarantee a good implementation. For example, if TLS is implemented with a weak random number seed, or a random number generator that is not sufficiently random, the theoretical strength of the design will do nothing to protect the data that are thus exposed to potential compromise. (Although beyond the scope of this chapter, Pseudo-Random Number Generators, or PRNGs, play a vital part in many cryptographic operations, and they are surprisingly difficult to create; unless they closely simulate true randomness, an attacker will be able to predict the numbers they generate, thus defeating any scheme that relies on their “random” quality.)

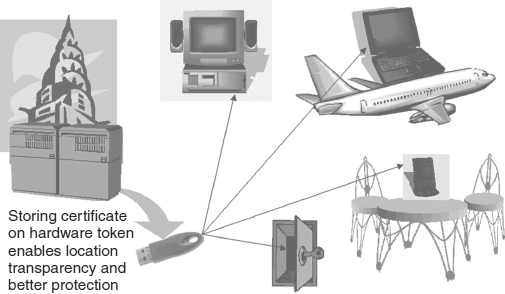

The second major caveat is that, if clients do not have digital certificates, the client side of the TLS session is not authenticated. This presents numerous problems. Most of today's “secure” Web transactions, from airline tickets booked at Yahoo Travel to shares traded at most online brokerages, represent a calculated risk on the part of the vendor. Although the client doing the buying is assured, by means of the merchant certificate, that the merchant at www.amazon.com really is Amazon, the merchant has no digital assurance that the client computer belongs to, or is being operated by, the person making the purchase. Of course, there are other assurances, such as the match between the credit card that the purchaser supplies and the other personal details that go along with it, such as billing address. But the merchant is still risking a charge-back and possibly other penalties for a fraudulent transaction.

In the case of larger and more sensitive financial transactions, the need to be assured of the client's identity is greater. A digital certificate is a step in the right direction, but it is a step many merchants have not yet taken, for several reasons. The first is the cost of issuing certificates to customers, and the second is the difficulty of getting those certificates onto their systems. Some merchants have decided that the cost and effort are worth it. For example, the Royal Bank of Scotland took this approach with its online banking system back in 1998.

There are other issues. The user needs to protect the certificate, even from such threats as hardware failure (user reformats the drive, loses the certificate) or unauthorized use (a family member uses the computer and thus has access to the certificate). Furthermore, the user needs to be able to move the certificate, for example, onto a laptop computer so that the bank account can be accessed while traveling. The obvious answer is to place the certificate on a robust removable medium (see Exhibit 7.17). Such media are generically referred to as hardware tokens. A standard for tokens has not yet emerged. Smart cards are an obvious choice, but card readers need to be deployed. There are alternatives, such as putting the certificate on a floppy disk or on a small key fob that plugs into a USB port.

7.5.2.4 Cost of Secured Transactions.