Chapter 18. Images and Signal Processing

18.1. Introduction

This chapter introduces the mathematics needed to understand what happens when we perform various operations on images like scaling, rotating, blurring, sharpening, etc., and how to avoid certain unpleasant artifacts when we do these operations. It’s a long chapter with lots of mathematics; we’ve done our best to keep it to a minimum without telling any lies. We begin with a very concise summary of the chapter, and gradually expand on the themes presented there.

The entire chapter can be regarded as an application of the Coordinate-System/Basis principle: Always use a basis that is well suited to your work. In this case, the objects we’re working with are not geometric shapes, as in Chapter 2, but images, or more accurately, real-valued functions on a rectangle or a line segment.

18.1.1. A Broad Overview

Even with the goal of minimal mathematics with no lies, it can be difficult to see the forest for the trees, so in this section we present an informal description of the keys ideas of this chapter. Much of what we say in this section is deliberately wrong. Usually there’s a corresponding true statement, which unfortunately has so many preconditions that it’s difficult to see the essential ideas. You should therefore consider this as a high-level guide to the remainder of the chapter.

We’ll be looking at the light arriving at one row of an image sensor, because almost all the interesting questions arise when we look at a single row. We’ll say that the amount of light arriving at position x is S(x). If we’re ray tracing, we might determine the value S(x) by tracing a ray starting at location x. If we’re using a real-world camera, the value S(x) is provided by nature. In either case, S is a real-valued function on an interval, and we’ll assume it’s continuous. So we’ll begin by looking at continuous functions on an interval.

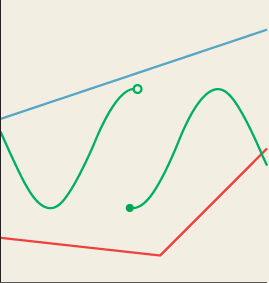

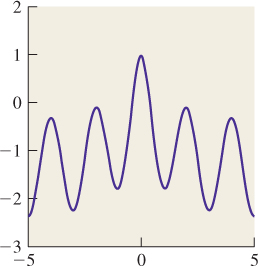

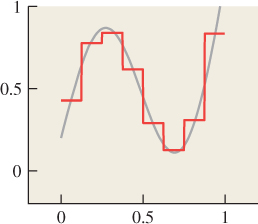

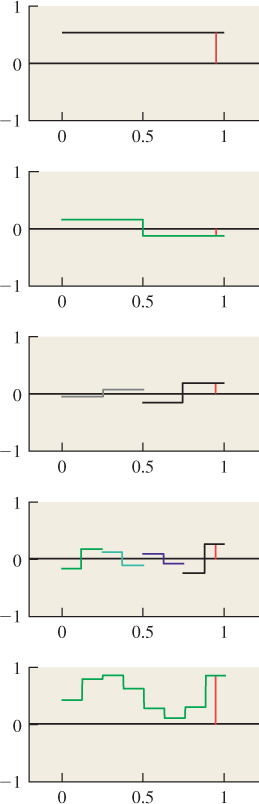

We can build a continuous function on [0, 2π] by summing up several periodic functions on that interval, as shown in Figure 18.1. Surprisingly, we can also (with the help of some integrals) start with a continuous function f on an interval and break it into a possibly infinite sum of periodic functions, in the reverse of the process shown in Figure 18.1. This decomposition is analogous to breaking a vector in R3 into three component vectors along the x-, y-, and z-axes. The coefficients of the component periodic functions completely determine f; the coefficients, listed in order of frequency, are called the “Fourier transform” of the function f. So, if f (x) = 2.1 cos(x) – 3.5 cos(2x) – 8 cos(3x), then its Fourier transform, denoted ![]() , is the function with

, is the function with ![]() (1) = 2.1,

(1) = 2.1, ![]() (2) = 3.5, and

(2) = 3.5, and ![]() (3) = –8. (Actually, decomposing the function f may involve both cosines and sines, but we’re going to ignore this.)

(3) = –8. (Actually, decomposing the function f may involve both cosines and sines, but we’re going to ignore this.)

Figure 18.1: Summing up periodic functions (in color) of different frequencies leads to more complicated functions, in this case the black (thickest) one.

The same idea works for functions on the real line: We can take a function f : R ![]() R and compute (with a lot of integration) a different function

R and compute (with a lot of integration) a different function ![]() : R

: R ![]() R with the property that

R with the property that ![]() (ω) tells us “how much f looks like a cosine of frequency ω,” just as the x-coordinate of a vector v in R3 tells us how much v “looks like” the unit vector along the x-axis. And if you tell me

(ω) tells us “how much f looks like a cosine of frequency ω,” just as the x-coordinate of a vector v in R3 tells us how much v “looks like” the unit vector along the x-axis. And if you tell me ![]() , I can recover f from it. So the f ⇔

, I can recover f from it. So the f ⇔ ![]() correspondence gives us two different ways to look at any function: The first (“the value representation”) tells the value of a function at each point; the second (“the frequency representation”) tells “how much it looks like a periodic function of frequency ω” for any ω.

correspondence gives us two different ways to look at any function: The first (“the value representation”) tells the value of a function at each point; the second (“the frequency representation”) tells “how much it looks like a periodic function of frequency ω” for any ω.

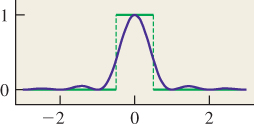

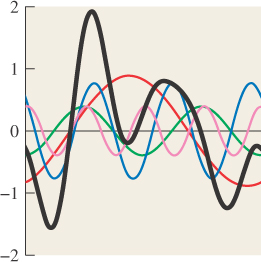

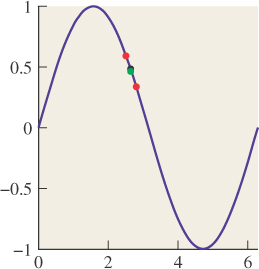

In the course of ray tracing, using one ray per pixel, traced from the pixel center, we’re taking the function S, defined on R, and evaluating it at the pixel centers, which we’ll assume are the integer points; that is, we’re looking at S(0), S(1), S(2), etc. It’s quite possible for two different functions S and T to have the same integer samples (see Figure 18.2). That’s reason for concern: The samples we’re collecting don’t uniquely determine the arriving light! Even so, when we display those samples on a (one-dimensional) LCD screen, we get a piecewise constant function (see Figure 18.3) that’s not very different from either S or T. And anyhow, our eyes tend to smooth out the transitions between adjacent display pixels, making the approximation even better.

Figure 18.2: The functions S (red) and T (green) have the same values at each integer point (black dots).

Figure 18.3: The light resulting from displaying samples of either the red or the green function on a 1D LCD screen, plotted in blue.

How serious a problem is the nonuniqueness of the preceding paragraph? Very. It’s what makes sloping straight lines on a black-and-white display look jagged, what makes wagon wheels appear to rotate backward in old movies, what makes scaled-down images look bad, and a whole host of other problems. It’s got a name: aliasing. We’ll now see where that name came from by looking at the samples of some very simple functions: the periodic functions from which all other functions can be created.

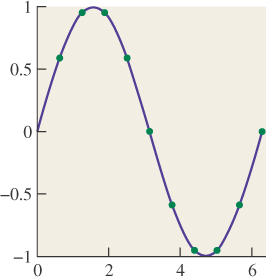

Let’s look at the interval [0, 2π], and consider ten equally spaced samples of the function x ![]() sin(x) on this interval, shown in Figure 18.4. From these samples, it’s pretty easy to reconstruct the original sine function. We can come very close to reconstructing it by just “connecting the dots,” for instance.

sin(x) on this interval, shown in Figure 18.4. From these samples, it’s pretty easy to reconstruct the original sine function. We can come very close to reconstructing it by just “connecting the dots,” for instance.

The same thing is true for the samples of x ![]() sin(2x) or (barely) x

sin(2x) or (barely) x ![]() sin(3x). But by the time we look at x

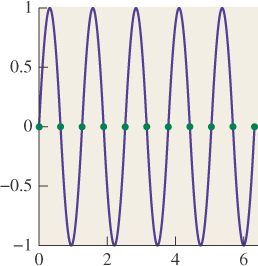

sin(3x). But by the time we look at x ![]() sin(5x) (see Figure 18.5), something odd happens: The samples are all zeroes. By looking at only the samples, we can’t tell the difference between sin(5x) and sin(0x). As Figure 18.6 shows, the same is true for sin(1x) and sin(11x).

sin(5x) (see Figure 18.5), something odd happens: The samples are all zeroes. By looking at only the samples, we can’t tell the difference between sin(5x) and sin(0x). As Figure 18.6 shows, the same is true for sin(1x) and sin(11x).

The means that if our arriving-light function S happened to be x ![]() sin(11x), we might think, from looking at the recorded samples, that it was sin(x) instead: The frequency-11 sine is masquerading as a frequency-1 sinusoid. It’s the fact that the sample values correspond to various different sinusoids that leads to the name “aliasing.” In general, if we take 2N equispaced samples, then sines of frequency k and k + 2N and k + 4N, etc., will all have identical samples. But if we restrict our function S to contain only frequencies strictly between –N and N, then the samples uniquely determine the function. The same idea works for functions defined on R rather than on an interval: If

sin(11x), we might think, from looking at the recorded samples, that it was sin(x) instead: The frequency-11 sine is masquerading as a frequency-1 sinusoid. It’s the fact that the sample values correspond to various different sinusoids that leads to the name “aliasing.” In general, if we take 2N equispaced samples, then sines of frequency k and k + 2N and k + 4N, etc., will all have identical samples. But if we restrict our function S to contain only frequencies strictly between –N and N, then the samples uniquely determine the function. The same idea works for functions defined on R rather than on an interval: If ![]() (ω) = 0 for ω ≥ ω0, then f can be reconstructed from its values at any infinite sequence of points with spacing π/ω0.

(ω) = 0 for ω ≥ ω0, then f can be reconstructed from its values at any infinite sequence of points with spacing π/ω0.

We can’t actually constrain the arriving-light function to not have high frequencies, however. If we photograph a picket fence from far away, the bright pickets against the dark grass can occur with arbitrarily high frequencies. The shorthand description of this situation is that “If your scene has high frequencies in it, then you’ll get aliasing when you render it.” The solution is to apply various tricks to remove the high frequencies before we take the samples (or in the course of sampling). Doing so in a way that’s computationally efficient and yet effective requires the deeper understanding that the rest of this chapter will give you.

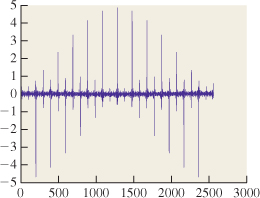

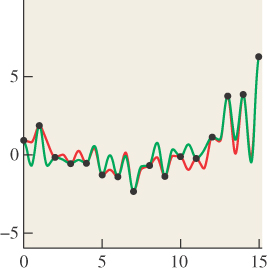

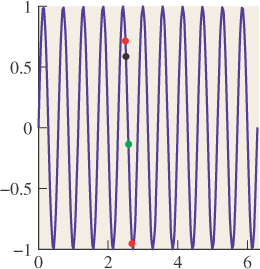

Here’s one example of a trick to remove high frequencies, just to give you a taste. Consider the sin(x) versus sin(11x) example we looked at earlier. We sampled these two functions at certain values of x. Let’s say one of them is x0. What would happen if you took your input signal S and instead of computing S(x0), you computed ![]() (S(x0) + S(x0 + r1) + S(x0 – r2)), where r1 and r2 are small random numbers, on the order of half the sample spacing of 2π/10? If the input signal S were S(x) = sin(x), the three values you’d average would all be very close to sin(x0); that is, the randomization would have little effect (see Figure 18.7). On the other hand, if the original signal was S(x) = sin(11x), then the three values you’d average would tend to be very different, and their average (see Figure 18.8) would generally be closer to zero than S(x0). In short, this method tends to attenuate high-frequency parts of the input, while retaining low-frequency parts.

(S(x0) + S(x0 + r1) + S(x0 – r2)), where r1 and r2 are small random numbers, on the order of half the sample spacing of 2π/10? If the input signal S were S(x) = sin(x), the three values you’d average would all be very close to sin(x0); that is, the randomization would have little effect (see Figure 18.7). On the other hand, if the original signal was S(x) = sin(11x), then the three values you’d average would tend to be very different, and their average (see Figure 18.8) would generally be closer to zero than S(x0). In short, this method tends to attenuate high-frequency parts of the input, while retaining low-frequency parts.

Figure 18.7: The original sample sin(x0) in black, with two nearby random samples, shown in red. The average (green) of the three heights is very nearly sin(x0).

Figure 18.8: Random samples (red) of x ![]() sin(11x) near x = x0 are quite different from the sample at x0 (black), so their average (green) is nearer to zero.

sin(11x) near x = x0 are quite different from the sample at x0 (black), so their average (green) is nearer to zero.

18.1.2. Important Terms, Assumptions, and Notation

The main ideas we’ll use in this chapter are convolution and the Fourier transform, which you may have encountered in algorithms courses or in the study of various engineering or mathematics problems.

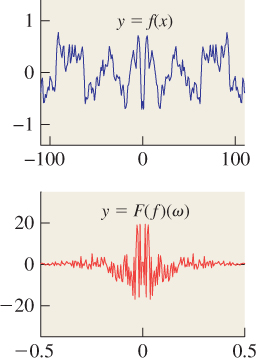

Fortunately, both convolution and Fourier transforms can be well understood in terms of familiar operations in graphics; we motivate the mathematics by showing its connection to graphics. Convolution, for instance, takes place in digital cameras, scanners, and displays. The Fourier transform may be less familiar, although the typical “graphical display” of an audio signal (see Figure 18.9), in which the amounts of bass, midrange, and treble are shown changing over time, shows, at each time, a kind of basic Fourier transform of a brief segment of the audio signal.

For us, the essential property of the Fourier transform is that it turns convolution of functions, which is somewhat messy, into multiplication of other functions, which is easy to understand and visualize.

The Fourier transformation (for functions on the real line) takes a function and represents it in a new basis; thus, this chapter provides yet another instance of the principle that expressing things in the right basis makes them easy to understand.

The same tools we use to understand images in this chapter will prove useful not only in analyzing image operations, however: They also appear in the study of the scattering of light by a surface, which can be interpreted as a kind of convolution operation [RH04], and in rendering, in which the frequency analysis of light being transported in a scene can yield insights into the nature of computations necessary to accurately simulate that light transport [DHS+05].

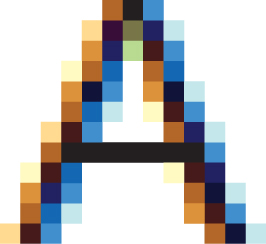

Before discussing convolution and other operations, we return to the topic of Section 9.4.2: the principle that you must know the meaning of every number in a graphics program. Before we discuss operations like convolution and Fourier transforms on images, we have to know what the images mean. The difficulty, which we discussed briefly in Chapter 17, is that in some cases we just don’t know. Alvy Ray Smith made a point of this in a paper titled “A Pixel Is Not A Little Square” [Smi95], in which he observes that the individual values in a pixel array do not in general represent the average of something over a small square on the image plane, and that algorithms that rely on this model of pixels are bound to fail in some cases. (More simply, he points out that a pixel does not represent a tiny square of constant value, even though the pixel may be displayed that way on an LCD screen!) As an extreme example, an object ID image contains, at each pixel, an identifier that tells which object is visible at that pixel. The pixel values in this case are not even necessarily numerical!

For this chapter only, to avoid messiness introduced by shifting by one-half in the x- and y-directions, we’re going to use display screen coordinates in which the display pixel indexed by (0, 0) has display coordinates that range from – ![]() to

to ![]() in both x and y, and the display pixel named (i, j) is a small square centered at (i, j) rather than at (i +

in both x and y, and the display pixel named (i, j) is a small square centered at (i, j) rather than at (i + ![]() , j +

, j + ![]() ). This means that pixel (i, j) is at x-location i and y-location j, and not in “row i and column j”; that is, we’re using geometric indexing rather than image indexing. Because this chapter contains no actual algorithms that depend on display pixel coordinates, this should cause no problems for you.

). This means that pixel (i, j) is at x-location i and y-location j, and not in “row i and column j”; that is, we’re using geometric indexing rather than image indexing. Because this chapter contains no actual algorithms that depend on display pixel coordinates, this should cause no problems for you.

For this chapter, we’re going to assume (initially) that images contain physical measurements of light, measured in physical units. For a digital camera, this might be something like the average radiance along a ray hitting a small rectangle on a CCD sensor, or perhaps an integral of that radiance over the area of that rectangle, or the total light energy that arrived at the rectangle while the shutter was open. (Some digital cameras will let you get such information when you store a photo in “raw” mode, and will even tell you when the sensor was oversaturated so that the stored value is a false measurement.) For a rendered image, the value stored at a pixel might be the radiance along a ray that passed through the single point at the center of the image area corresponding to the pixel, or an average of radiances of several rays through the pixel, etc. It might even be an average of samples from a region around the pixel center, where the regions for adjacent pixel centers overlap.

18.2. Historical Motivation

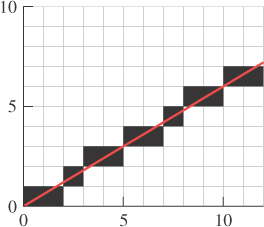

When graphics researchers first wanted to draw a line on a rectangular grid of pixels, the most obvious thing to do was to write the line in the form y = mx + b, and for each integer x-value, compute y = mx + b, which was usually not an integer, round it off to an integer y′, and put a mark at location (x, y′). This only works well if m is between –1 and 1; for greater slopes, it worked better to write x = my + b, that is, to swap the roles of x and y, but that’s not germane to this discussion. The kind of line produced with this method is shown in Figure 18.10, where we’ve drawn the line using little squares as pixel marks.

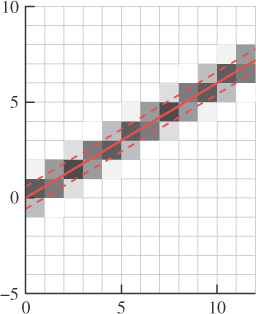

You can see that the line is fairly “jaggy”—it has jagged edges—but it’s hard to imagine how to avoid this if your only choice is to draw a black or a white square. Fortunately, display devices improved to display multiple gray levels. To avoid the staircase-like appearance of the jaggy line, we can fill in gray squares at the steps, or be even more sophisticated—we can set the gray-level for each square to be proportional to the amount that square overlaps a unit-width line, as shown in Figure 18.11. The resultant line, viewed close up like this, looks a bit odd. But seen from an appropriate distance (as in Figure 18.12), it looks very good—far better than the jaggy black-and-white line.

The “compute the overlap between the square and the thing you’re rendering” approach seems a bit ad hoc, but it also seems to work well in practice. The same idea, applied to text, produces fonts that are visually more appealing than the pure black-and-white fonts that arise when we use the “this pixel is black if the pixel center is within the character” approach (see Figures 18.13 and 18.14).

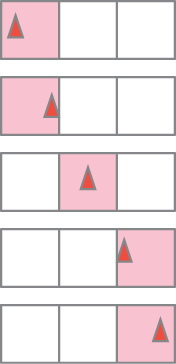

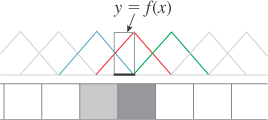

Merely computing overlaps with pixel squares isn’t a cure-all, as you can see by considering a sequence of images generated by a small moving object. Figure 18.15 shows a red triangle moving through three pixels of a “one-dimensional image” in the course of five “frames.” In the first and second frames, it’s completely in the first square, tinting it pink; in the fourth and fifth, it’s completely in the third. In frame three, it’s in the middle square. The result is that although the object is moving with uniform speed through the pixels, it appears to “rush” through the middle pixel, which is pink for only half as long as the pixels on either side.

To compensate for the differing amounts of time spent in each square, and thus the irregularity of the apparent motion, we can use a different strategy: Instead of measuring the area of the overlap between the object and the square, we can compute a weighted area overlap, counting area overlap near the square’s center as more important than area overlap near the edge of the square. While this approach does address the irregularity of the pure area-measuring approach, there remains another problem: As a small, dark object moves from left to right, the total brightness of the image varies. When the object is near the dividing line between two squares, neither is darkened much at all; when it’s near the middle of a square, that square is darkened substantially. The result is that the object appears to waver in brightness during the animation.

The somewhat surprising solution is to use a weighting function that says that an object contributes to the brightness of square i if it overlaps anywhere from the center of square i – 1 to the center of square i + 1. Figure 18.16 shows this in a side view. The object, shown as a small, black line segment, contributes both the left pixel, whose weighting function is shown in blue, and the center pixel, whose weighting function is shown in red, but not the right pixel, whose weighting function is shown in green. The left-pixel contribution is small, because the black line is near the edge of the “tent,” while the center-pixel contribution is larger. Notice that the sum of the weighting functions is the constant function 1, so no matter where we place the object, its total contribution to image brightness will be the same.

Figure 18.16: Weighted area measurement. The central red “tent-shaped” function is used as a weight for contributions to the center pixel.

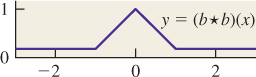

We can express the weighted-area-overlap approach to determining pixel values mathematically. Let’s suppose that x ![]() f(x) is the function shown in Figure 18.16 whose value is 1 for any point x within our object, and 0 elsewhere. And let’s suppose that the red tent-shaped function in Figure 18.16 is called x

f(x) is the function shown in Figure 18.16 whose value is 1 for any point x within our object, and 0 elsewhere. And let’s suppose that the red tent-shaped function in Figure 18.16 is called x ![]() g(x). Then the value we assign to the center pixel is given by

g(x). Then the value we assign to the center pixel is given by

The value assigned to the next pixel to the right, whose weighting function is just g shifted right one unit, is

A similar expression holds for every pixel: The values we’re computing are generated by multiplying f with a shifted version of g and then integrating. This operation—point-by-point multiplication of one function by a shifted version of another, followed by integration (or summation, in some cases)—appears over and over again in both graphics and mathematics and is called convolution, although we should warn you that the proper definition includes a negation so that we end up summing things of the form f(x)h(i – x); this negation leads both to convenient mathematical properties and to considerable confusion. Fortunately for us, in almost all our applications the functions that we convolve against have the property that h(x) = h(–x), so the negative sign has no impact.

In the next section, we’ll discuss various kinds of convolutions, their applications in graphics, and some of their mathematical properties.

The remainder of this chapter consists of applying ideas from signal processing to computer graphics. In that context, functions on the real line (or an interval) are often called signals (particularly when the parameter is denoted t so that we can think of f(t) as a value that varies with time). Convolving with a function like the “tent function” above, which is nonzero on just a small region, is called applying a filter or filtering, although the term can be used for convolution with any function.

18.3. Convolution

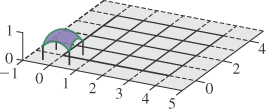

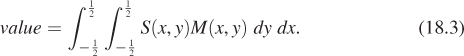

As we said already, convolution appears over and over again in graphics and the physical world. Nearly every act of “sensing” involves some sort of convolution, for instance. For example, consider one row of sensor pixels in an idealized digital camera. We’ll say that the light energy falling on the sensor at location (x, y) in one second is described as function S(x, y), and that S is independent of time. The camera shutter opens for one second, and each pixel accumulates arriving energy over that period of time, after which an accumulated value is recorded as the pixel’s value. But each pixel sensor—say, the one at pixel (0, 0)—has a responsivity function, (x, y) ![]() M(x, y), that tells how much pixel response there is for each bit of arriving light (see Figure 18.17). We’re being deliberately informal about units here because it’s the form of the computation we care about, not the actual values.

M(x, y), that tells how much pixel response there is for each bit of arriving light (see Figure 18.17). We’re being deliberately informal about units here because it’s the form of the computation we care about, not the actual values.

To determine the sensor pixel’s response to the incoming light, we multiply each bit of light S(x, y) by the responsivity M(x, y), and sum up over the entire pixel:

If we extend the definition of M to the whole plane of the sensor by defining M to be 0 outside the unit square corresponding to pixel (0, 0), we can rewrite this as

which may appear more complicated, but actually will result in simpler formulas elsewhere.

In a well-designed camera, the sensor responsivity should be the same for each pixel. What does this mean mathematically? It means, for instance, that for sensor pixel (2, 3), we’ll want to multiply S(x, y)M(x – 2, y – 3) and integrate, that is,

and in general, the formula for sensor pixel (i, j) will be

This expression has the form of a product of a function S with a shifted function M, integrated; the resultant value is a function of the shift amount, (i, j). That is the essence of a convolution, and indeed, Equation 18.6 is almost the definition of the convolution S ![]() M of the two functions. Two small adjustments are needed. First, since S and M are both functions on all of R2, their convolution is defined to be a function on all of R2. The values we’ve described above are the restriction of that function to the integer grid. Second, it’s very convenient to have a definition of convolution that makes f

M of the two functions. Two small adjustments are needed. First, since S and M are both functions on all of R2, their convolution is defined to be a function on all of R2. The values we’ve described above are the restriction of that function to the integer grid. Second, it’s very convenient to have a definition of convolution that makes f ![]() g = g

g = g ![]() f. For this to work out properly, there needs to be an extra negation; that is, we want Equation 18.6 to have the form

f. For this to work out properly, there needs to be an extra negation; that is, we want Equation 18.6 to have the form

We can arrange this by defining ![]() (x, y) = M(–x, –y). (For a typical sensor, the response function is symmetric, so

(x, y) = M(–x, –y). (For a typical sensor, the response function is symmetric, so ![]() and M are the same.) This final form is just a 2D analog of the 1D convolution. Simplifying to one dimension, we can now define the convolution of two functions f, g : R

and M are the same.) This final form is just a 2D analog of the 1D convolution. Simplifying to one dimension, we can now define the convolution of two functions f, g : R ![]() R:

R:

(a) Pause briefly and examine that definition carefully. It’s central to much of the remainder of this book.

(b) Perform the substitution s = t–x, ds = –dx in the integral of Equation 18.8 to confirm that (f ![]() g)(t) = (g

g)(t) = (g ![]() f)(t).

f)(t).

We can say that image capture by our digital camera consists of convolving the incoming light with the “flipped” sensor response function ![]() , and then restricting to the integer lattice Z × Z.

, and then restricting to the integer lattice Z × Z.

In almost all cases that we study, one of the functions f or g will be an even function, and hence the negation has no consequence at all. The two-dimensional convolution is defined very similarly. If f, g : R2 ![]() R are two functions on R2, then

R are two functions on R2, then

Convolution is also defined for two periodic functions of period P, but with the domain of integration replaced by any interval of length P.

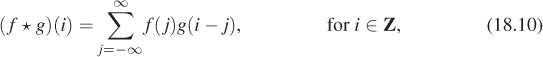

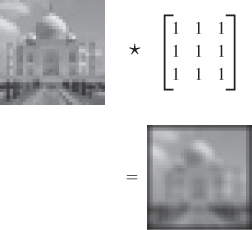

Convolution can also be applied to discrete signals, that is, to a pair of functions f, g : Z ![]() R; the definition is almost identical, except for the replacement of the integral with a summation:

R; the definition is almost identical, except for the replacement of the integral with a summation:

with an analogous definition for functions of two variables. If f, g : Z × Z ![]() R, then

R, then

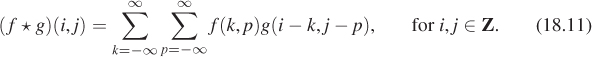

As an application of this kind of convolution, imagine that you have an image that is in very sharp focus, but you want to use it as a background for a composition in which it should appear out of focus, while the foreground objects should be in focus. One way to do this is to replace each pixel with an average of itself and its eight neighbors. Figure 18.18 shows the results on a small example. On a larger image, you might want to “blur” with a much larger block of ones, to achieve any noticeable effect. If we call f(i, j) the value of the original image pixel at (i, j), and let g(i, j) = 1 for –1 ≤ i, j ≤ 1, and 0 otherwise, then the blurred-image pixel at (i, j) is exactly (f ![]() g)(i, j). Notice, too, that the function g that we used in the blurring has the property that g(i, j) = g(–i, –j), that is, it’s symmetric about the origin, hence the negative sign in the definition of convolution is of no consequence.

g)(i, j). Notice, too, that the function g that we used in the blurring has the property that g(i, j) = g(–i, –j), that is, it’s symmetric about the origin, hence the negative sign in the definition of convolution is of no consequence.

The process we’ve just described is usually called filtering f with the filter g, where the function that’s nonzero only on a small region is called the “filter.” Because convolution is symmetric, the roles can be reversed, however, and we’ll have occasion to convolve with “filters” that are nonzero arbitrarily far out on the real line or the integers.

Consider convolving a grayscale image f with a filter g that’s defined by g(–1, –1) = g(–1, 0) = g(–1, 1) = –1, g(1, –1) = g(1, 0) = g(1, 1) = 1, and g(i, j) = 0 otherwise.

(a) Draw a plot of g.

(b) Describe intuitively where f ![]() g will be negative, positive, and zero. You might want to start out with some simple examples for f, like an all-gray image, or an image that’s white on its bottom half and black on the top, or white on the left half and black on the right, etc. Then generalize.

g will be negative, positive, and zero. You might want to start out with some simple examples for f, like an all-gray image, or an image that’s white on its bottom half and black on the top, or white on the left half and black on the right, etc. Then generalize.

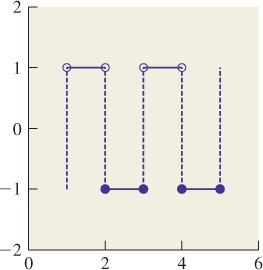

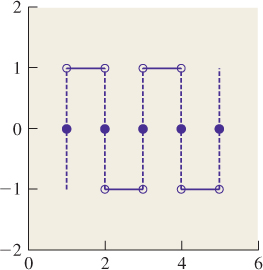

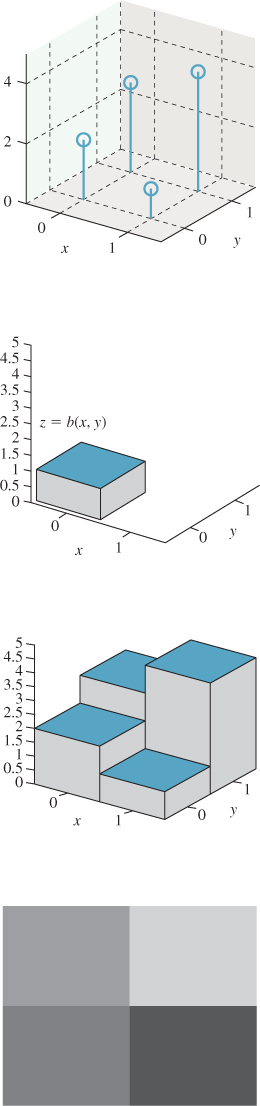

We’ve defined convolution for two continuum functions (i.e., functions defined on R) and for two discrete functions (i.e., defined on Z). There’s a third class of convolution that comes up in graphics: the discrete-continuum convolution. A familiar instance of this is display on a grayscale LCD monitor. Recall that for this chapter, the display pixel (i, j) is a small box centered at (i, j). Figure 18.19 shows the result of displaying a 2 × 2 image f (shown as a stem plot) with a “box” function b defined on R2 to produce a piecewise constant function on R2 representing emitted light intensity.

Figure 18.19: The values in a 2 × 2 grayscale image are convolved with a box function to get a piecewise constant function on a 2 × 2 square.

The emitted light at location (x, y) is given by

This doesn’t quite look like a convolution, because there’s no summation. But we can insert the summation without changing anything:

There’s no change because the box function is zero outside the unit box. In the early days of graphics, when CRT displays were common, turning on a single pixel didn’t produce a little square of light, it produced a bright spot of light whose intensity faded off gradually with distance. That meant that turning on pixel (4, 7) might cause a tiny bit of light to appear even at the area of the display we’d normally associate with coordinates (12, 23), for instance, or anywhere else. In that case, the summation in the formula for the light at position (x, y) was essential.

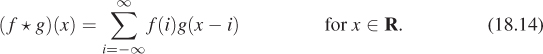

The general definition for the convolution of a discrete function f : Z ![]() R and a continuum function g : R

R and a continuum function g : R ![]() R is

R is

The result is a continuum function. We leave it to you to define continuous-discrete convolution, and to extend both definitions to the plane.

18.4. Properties of Convolution

As mentioned in Section 18.2, convolution has several nice mathematical properties. First, for all forms of convolution (discrete, continuous, or mixed) it’s linear in each factor, that is,

Second, it’s commutative, which we’ll show for the continuous case, answering the inline problem above:

Substituting s = t – x, ds = –dx, and x = t – s, we get

The proofs for the discrete and mixed cases are very similar.

Third, convolution is associative. The proof, which we omit, involves multiple substitutions.

Finally, continuous-continuous convolution has some special properties involving derivatives, such as f′ ![]() g = f

g = f ![]() g′ (under some fairly weak assumptions). It also generally increases smoothness: If f is continuous and g is piecewise continuous, then f

g′ (under some fairly weak assumptions). It also generally increases smoothness: If f is continuous and g is piecewise continuous, then f ![]() g is differentiable; similarly, if f is once differentiable, then f

g is differentiable; similarly, if f is once differentiable, then f ![]() g is twice differentiable. In general, if f is p-times differentiable and g is k-times differentiable, then f

g is twice differentiable. In general, if f is p-times differentiable and g is k-times differentiable, then f ![]() g is (p + k + 1)-times differentiable (again under some fairly weak assumptions).

g is (p + k + 1)-times differentiable (again under some fairly weak assumptions).

Alas, for a fixed function f, the map g ![]() f

f ![]() g is usually not invertible—you can’t usually “unconvolve.” We’ll see why when we examine the Fourier transform shortly.

g is usually not invertible—you can’t usually “unconvolve.” We’ll see why when we examine the Fourier transform shortly.

18.5. Convolution-like Computations

Convolution appears in other places as well. Consider the multiplication of 1231 by 1111:

1231

x1111

-----

1231

1231

1231

1231

-------

1367641

In computing this product, we’re taking four shifted copies of the number 1231, each multiplied by a different 1 from the second factor, summing them; this is essentially a convolution operation.

As another example, consider how a square occluder, held above a flat table, casts a shadow when illuminated by a round light source (see Figure 18.20). The brightness at a point P is determined by how much of the light source is visible from P. We can think of this by imagining the square is lit from each single point of the light source, casting a hard shadow on the surface, a shadow whose appearance is essentially a translated copy of the function f that’s one for points in the square and zero elsewhere (see Figure 18.21). We sum up these infinitely many hard-shadow pictures, with the result being a soft shadow cast by the lamp. This has the form of a convolution (a sum of many displaced copies of the same function). We can also consider the dual: Imagine each tiny bit of the rectangle individually obstructing the lamp’s light from reaching the table. The occlusion due to each tiny bit of rectangle is a disk of “reduced light”; when we sum up all these circular reductions, some table points are in all of them (the umbra), some table points are in just a few of the disks (the penumbra), and the remainder, the fully lit points, are visible to every point of the lamp. These two ways of considering the illumination arriving at the table—multiple displaced rectangles summed up, or multiple displaced disks summed up—correspond to thinking of f ![]() g as many displaced copies of f, weighted by values of g, or as many displaced copies of g, weighted by values of f.

g as many displaced copies of f, weighted by values of g, or as many displaced copies of g, weighted by values of f.

Figure 18.20: The square occluder casts a shadow with both umbra and penumbra when illuminated by a round light source.

Figure 18.21: The square shadows cast by the occluder when it’s illuminated from two different points of the light source (image lightened to better show shadows).

18.6. Reconstruction

Reconstruction is the process of recovering a signal, or an approximation of it, from its samples. If you examine Figure 18.4, for instance, you can see that by connecting the red dots, we could produce a pretty good approximation of the original blue curve. This is called piecewise linear reconstruction and it works well for signals that don’t contain lots of high frequencies, as we’ll see shortly.

We discussed earlier how the conversion of the light arriving at every point of a camera sensor into a discrete set of pixel values is modeled by a convolution, and how, if we display the image on an LCD screen, setting each LCD pixel’s brightness to the value stored in the image, we’re performing a discrete-continuous convolution, the discrete factor being the image and the continuous factor being a function that’s 1 at every point of a unit-width box centered on (0, 0) and 0 everywhere else. This second discrete-continuous convolution is another example of reconstruction, sometimes called sample and hold reconstruction.

The “take a photo, then display it on a screen” sequence (i.e., the sample-and-reconstruct sequence) is thus described by a sequence of convolutions. If taking a photo and displaying were completely “faithful,” the displayed intensity at each point would be exactly the same as the arriving intensity at the corresponding point of the sensor. But since the displayed intensity is piecewise constant, the only time this can happen is when the original lightfield is also piecewise constant (if, for instance, we were photographing a chessboard so that each square of the chessboard exactly matched one sensor pixel). In general, however, there’s no hope that sense-then-redisplay will ever produce the exact same pattern of light that arrived at the sensor. The best we can hope for is that the displayed lightfield is a reasonable approximation of the original. Since displays have limited dynamic range, however, there are practical limitations: You cannot display a photo of the sun and expect the displayed result to burn your retina.

18.7. Function Classes

There are several kinds of functions we’ll need to discuss in the next few sections. The first is the one used to mathematically model things like light arriving at an image plane, which is a continuous function of position. We can treat such a function as defined only on the image rectangle, R, or as being defined on the whole plane (which we’ll treat as R2). In either case, we require that the integral of the square of f is finite,1 that is,

1. Later we’ll consider complex-valued functions rather than real-valued ones. When we do so, we have to replace f(x) with | f(x)| in the integral.

where D is the domain on which the function is defined. (Functions satisfying this inequality are called square integrable; the interpretation, for many physically meaningful functions, is that they represent signals of finite total energy.) The domain D might be the rectangle R, the whole plane R2, the real line R, or some interval [a, b] when we’re discussing the one-dimensional situation. Functions that are square integrable form a vector space called L2, where we often write something like L2(R2) to indicate square-integrable functions on the plane. We say “f is L2” as shorthand for “f is square integrable.” The set of L2 functions on any particular domain is generally a vector space. It takes a little work to show that L2 is closed under addition, that is if f and g are L2, then so is f + g; but we’ll omit the proof, since it’s not particularly instructive.

A function x ![]() f(x) in L2(R) must “fall off” as x

f(x) in L2(R) must “fall off” as x ![]() ±∞, because if |f(x)| is always greater than some constant M > 0, then

±∞, because if |f(x)| is always greater than some constant M > 0, then ![]() , which goes to infinity as K

, which goes to infinity as K ![]() ∞.

∞.

The next class of functions is the discrete analog of L2: the set of all functions f : Z ![]() R such that

R such that

is denoted ![]() 2; these are called square summable.

2; these are called square summable.

There are two ways in which ![]() 2 functions arise. The first is through sampling of L2 functions. Sampling is formally defined in the next section, but for now note that if f is a continuous L2 function on R, then the samples of f are just the values f (i) where i is an integer, so sampling in this case amounts to restricting the domain from R to Z. The second way that

2 functions arise. The first is through sampling of L2 functions. Sampling is formally defined in the next section, but for now note that if f is a continuous L2 function on R, then the samples of f are just the values f (i) where i is an integer, so sampling in this case amounts to restricting the domain from R to Z. The second way that ![]() 2 functions arise is as the Fourier transform of functions in L2([a, b]) for an interval [a, b], which we’ll describe presently.

2 functions arise is as the Fourier transform of functions in L2([a, b]) for an interval [a, b], which we’ll describe presently.

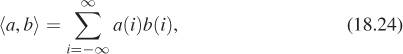

Finally, both ![]() 2 and L2 have inner products. For

2 and L2 have inner products. For ![]() 2(Z) we define

2(Z) we define

which is analogous to the definition in R3 of ![]() .

.

For L2(D), where D is either a finite interval or the real line, we define

This inner product on L2 has all the properties you might expect: It’s linear in each factor, and ![]() f, f

f, f![]() = 0 if and only if f = 0, at least if we extend the notion of f = 0 to mean that f is zero “almost everywhere,” in the sense that if we picked a random number t in the domain of f, then with probability 1, f (t) = 0. (In general, when we talk about L2, we say that two functions are equal if they’re equal almost everywhere.)

= 0 if and only if f = 0, at least if we extend the notion of f = 0 to mean that f is zero “almost everywhere,” in the sense that if we picked a random number t in the domain of f, then with probability 1, f (t) = 0. (In general, when we talk about L2, we say that two functions are equal if they’re equal almost everywhere.)

These inner-product definitions in turn let us define a notion of “length,” by defining ![]() , for f

, for f ![]() L2, and similarly for

L2, and similarly for ![]() 2. See Exercise 18.1 for further details.

2. See Exercise 18.1 for further details.

18.8. Sampling

The term sampling is much used in graphics, with multiple meanings. Sometimes it refers to choosing multiple random points Pi (i = 1, 2, ..., n) in the domain of a function f so that we can estimate the average value of f on that domain as the average of the values f (Pi) (see Chapter 30). Sometimes (as in the previous edition of this book) it’s used to mean “generating pixel values by some kind of unweighted or weighted averaging of a function on a domain,” the discrete nature of the pixel array being the motivation for the word “sampling.” In this chapter, we’ll use it in one very specific way. If f is a continuous function on the real line, then sampling f means “restricting the domain of f to the integers,” or, more generally, to any infinite set of equally spaced points (e.g., the even integers, or all points of the form 0.3 + n/2, for n ![]() Z).

Z).

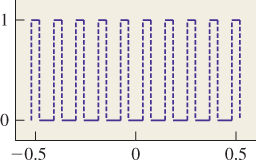

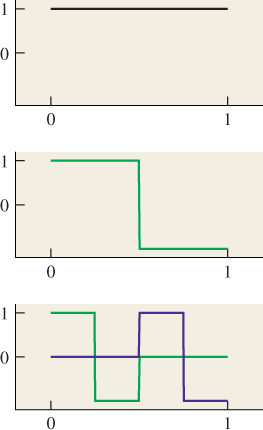

For discontinuous functions, the definition is slightly subtler; for those who’d rather ignore the details, it’s sufficient to say that if f is piecewise continuous, but has a jump discontinuity at the point x, then the sample of f at x is the average of the left and right limits of f at x. Thus, for a square wave (see Figures 18.22 and 18.23) that alternates between –1 and 1, the sample at any discontinuity is 0.

![]() The more general notion of sampling is motivated by the physical act of measurement. If we think of the variable in the function t

The more general notion of sampling is motivated by the physical act of measurement. If we think of the variable in the function t ![]() f (t) as time, then to measure f we must average its values over some nonzero period of time. If f is rapidly varying, then the shorter the period, the better the measurement. To define the sample of f at a particular time t0, we therefore mimic this measurement process. First, we consider points t0 – a and t0 + a, and define a function χt0,a : R

f (t) as time, then to measure f we must average its values over some nonzero period of time. If f is rapidly varying, then the shorter the period, the better the measurement. To define the sample of f at a particular time t0, we therefore mimic this measurement process. First, we consider points t0 – a and t0 + a, and define a function χt0,a : R ![]() R where χt0,a = 1 if t0 – a ≤ t ≤ t0 + a and 0 otherwise (see Figure 18.24). The function χt0,a serves the role of the shutter in a camera: When we multiply f by χt0,a, the values of f are “let through” only on the interval [t0 – a, t0 + a]. Next, we let

R where χt0,a = 1 if t0 – a ≤ t ≤ t0 + a and 0 otherwise (see Figure 18.24). The function χt0,a serves the role of the shutter in a camera: When we multiply f by χt0,a, the values of f are “let through” only on the interval [t0 – a, t0 + a]. Next, we let

U(a) is the “measurement” of f in the interval [t0 – a, t0 + a], in the sense that it’s the average value of f on that interval. Problem 18.2 relates this to convolution. Finally, we define the sample of f at t0 to be

that is, the limiting result of measuring f over shorter and shorter intervals. For a continuous function f, if a is small enough, then f (s) will be very close to f (t0) for any s ![]() [t0 – a, t0 + a], and the limit of U(a) is just f(t0)—the sample, defined by this measurement process, is exactly the value of f at t0 as we said above; the full proof depends on the mean value theorem for integrals. But for a discontinuous function like the square wave we saw above, the measurement process averages values to the left and right of t0, and the limit (in the case of a square wave) is the average of the upper and lower values. In cases messier than these simple ones, it can happen that the limit in Equation 18.27 can fail to exist, in which case the sample of f is not defined. We’ll never encounter such functions in practice, though.

[t0 – a, t0 + a], and the limit of U(a) is just f(t0)—the sample, defined by this measurement process, is exactly the value of f at t0 as we said above; the full proof depends on the mean value theorem for integrals. But for a discontinuous function like the square wave we saw above, the measurement process averages values to the left and right of t0, and the limit (in the case of a square wave) is the average of the upper and lower values. In cases messier than these simple ones, it can happen that the limit in Equation 18.27 can fail to exist, in which case the sample of f is not defined. We’ll never encounter such functions in practice, though.

18.9. Mathematical Considerations

The somewhat complex definition of sampling suggests that the mathematical details of L2 functions can be quite messy. That’s true, and making precise statements about sampling, convolution, Fourier transforms, etc., is rather difficult. Often the statement of the preconditions is so elaborate that it’s quite difficult to understand what a theorem is really saying. Rather than ignoring the preconditions or precision, which leads to statements that seem like nonsense except to the very experienced, and rather than providing the exact statements of every result, we’ll restrict our attention, to the degree possible, to “nice” functions (see Figure 18.25) that are either continuous, or very nearly continuous, in the sense that they have a discrete set of discontinuities, and on either side of a discontinuity they are continuous and bounded (i.e., there’s no “asymptotic behavior” like that of the graph of y = 1/x near x = 0). Figure 18.26 shows some functions that are not nice enough to study in this informal fashion, but which also don’t arise in practice.

Figure 18.26: “Not-nice” functions. The blue function is 0 except at points of the form p/2q, where its value is 1/2q.

The restriction to “nice” functions is enough to make most of the subtleties disappear. It’s also appropriate in the sense that we’re using these mathematical tools to discuss physical things, like the light arriving at a sensor. At some level, that light intensity is discontinuous—either another photon arrives or it doesn’t—but at the level at which we’re hoping to model the world, it’s reasonable to treat it as a continuous function of time and position.

We’re also typically studying the result of convolving the “arriving light” function with some other function like a box; the box is a nice enough function that convolving with it always yields a continuous function, and sampling this continuous function is easy—we just evaluate at the sample points. So the way we work with light tends to mean that we’re working with functions that “behave nicely” when we look closely at the mathematics.

For the remainder of this chapter, we’ll be considering things in one dimension: Our “images” will consist of just a row of pixels; our sensor will be a line segment rather than a rectangle, etc. We’ll sometimes illustrate the corresponding ideas in two dimensions (i.e., on ordinary images), but we’ll write the mathematics in one dimension only. So we’ll be working with a function f defined on the real line, and its samples defined on the integers. To make things simpler, we’ll restrict our attention to the case where f is an even function (see Figure 18.27), where f(x) = f(–x) for every x. Examples of even functions are the cosine, and the squaring function. Restricting to even functions lets us mostly avoid using complex numbers, while losing none of the essential ideas.

To review what we’ve done so far, we’ve defined convolution, and observed that many operations like display of images, capturing images by sensing (i.e., photography or rendering), blurring or sharpening of images, etc., can be written in terms of convolution, and that sampling at a point is defined by a limit of integrals, while sampling at all points is a limit of convolutions.

For the remainder of the chapter, we’re motivated by the question, “Suppose we have incoming light arriving at a sensor, and we want to make an image that best captures this arriving light for subsequent display or other uses; what should we store in the image array?” To answer this question, we need to do two things.

• Choose a new basis in which to represent images.

• Understand how convolution “looks” in this new basis.

The Fourier transform is how we’ll transform images into the new basis. And in the new basis, convolution of functions becomes multiplication of functions, which is much easier to understand and reason about.

18.9.1. Frequency-Based Synthesis and Analysis

Consider the interval H = (–![]() ,

, ![]() ], which we’ll use throughout this chapter; the letter “H” is mnemonic for “half.” By writing a sum like

], which we’ll use throughout this chapter; the letter “H” is mnemonic for “half.” By writing a sum like

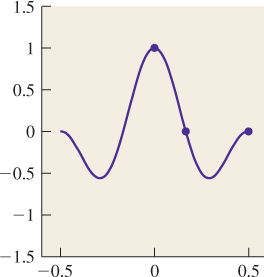

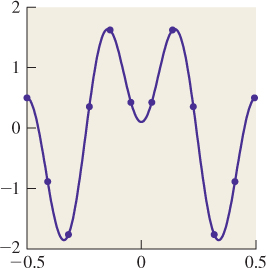

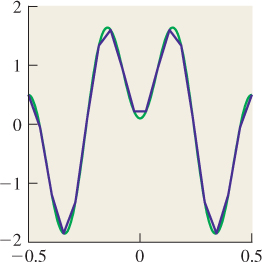

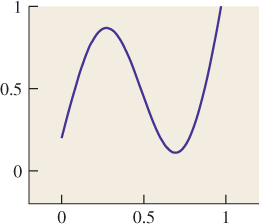

shown in Figure 18.28, we can produce an even function on that interval (i.e., one symmetric about the y-axis). In general, any sum of cosines of various integer frequencies will be an even function, because each component cosine is even. By changing how much of each frequency of cosine we mix in, we can get many different functions.

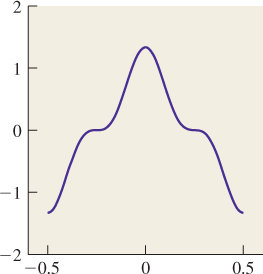

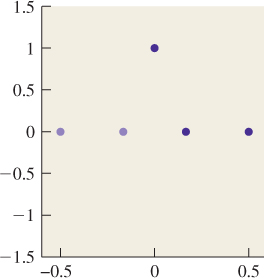

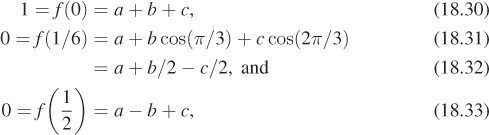

We can, for instance, find a combination of cos(0x), cos(2πx), and cos(4πx) that satisfies f(0) = 1, f(1/6) = 0, and f(![]() ) = 0. These constraints are shown in Figure 18.29; the shaded constraints on the left are there because the function is even, so its values on the left half of the real line must match those on the right half of the line.

) = 0. These constraints are shown in Figure 18.29; the shaded constraints on the left are there because the function is even, so its values on the left half of the real line must match those on the right half of the line.

We write

and then plugging in the constraints, we find that

from which we can determine that a = 0 and b = c = 1/2 (see Figure 18.30).

This is easy to generalize: If we’re given k constraints on the values of a function on the non-negative part of the interval I, then we can find a function written as a linear combination of cos(0x), cos(2πx), ..., cos(2π(k – 1)x) that satisfies those constraints. The proof relies on elementary properties of the cosine and sine.

Thus, we can “synthesize” various even functions by summing up cosines of many frequencies. We can even “direct” our synthesized function to have certain values at certain points, as in the second example above. We can synthesize odd functions by summing up sines of various frequencies as well, and by mixing sines and cosines, we can even synthesize functions that are neither even nor odd. Whatever function we synthesize, however, will be periodic of period 1, because each term in the sum is periodic with that period. Thus, if f is a sum of sines and cosines of different integer frequencies, we’ll have f(![]() ) = f (–

) = f (–![]() ).

).

More surprising, perhaps, is that any even continuous function f on the interval H that satisfies f(![]() ) = f(–

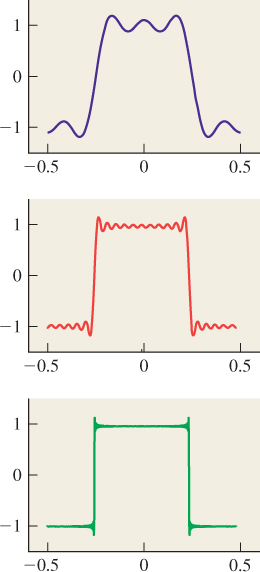

) = f(–![]() ) can be written as a sum of cosines of various integer frequencies. Even discontinuous functions can be almost written as such a sum. For instance, the square-wave function (see Figure 18.31) defined by

) can be written as a sum of cosines of various integer frequencies. Even discontinuous functions can be almost written as such a sum. For instance, the square-wave function (see Figure 18.31) defined by

can be almost expressed by the infinite sum

We say “almost expressed” because ![]() (±

(±![]() ) = 0—the average of the values of f to the left and right of ±

) = 0—the average of the values of f to the left and right of ±![]() —rather than being equal to f(±

—rather than being equal to f(±![]() ) = 1.

) = 1.

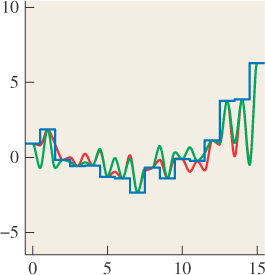

The sequence in Equation 18.35 can be approximated by taking a finite number of terms; a few of those approximations are shown in Figure 18.32.

Figure 18.32: The square wave approximated by 2, 10, and 100 terms. The slight overshoot in the approximations is called ringing.

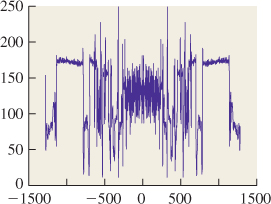

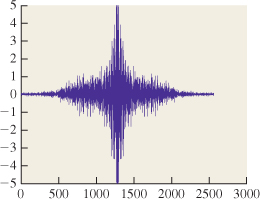

As a more graphically oriented example, let’s take one row of pixels from a symmetric image like that shown in Figure 18.33. We’ve actually taken half a row and flipped it over to get a perfectly symmetric line of 3,144 pixels, shown in Figure 18.34.

Figure 18.33: The Taj Mahal. Original image by Jbarta, at http://upload.wikimedia.org/wikipedia/commons/b/bd/Taj_Mahal,_Agra,_India_edit3.jpg.

If we write this function as a sum of cosines, the sum will have 3,144 terms, which is hard to read. It starts out as

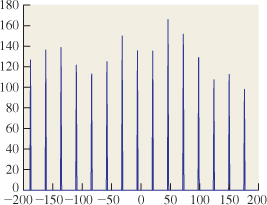

We can make an abstract picture of this summation by plotting the coefficients: At 0 we plot 129.28, at 1 we plot 5.67, at 2 we plot –2.34, etc. The result is shown in Figure 18.35, except that since the coefficient of cos(0x) is actually 141.8, we’ve adjusted the y-axis so that you can see the other details, thus hiding the large coefficient for the cos(0x) term. (That coefficient is just the average of all the pixel values.)

Figure 18.35: The first few coefficients for the sum representing the row of Taj Mahal pixels. We plot the coefficient of cos(2πkx) at position k.

Notice that as the frequencies get higher, the coefficients get smaller. In fact, in natural images, this “falling off with higher frequencies” is commonplace. Notice too that the function shown in Figure 18.34 has lots of variation at a very small scale, while the one shown in Figure 18.28 has variation only at a very large scale. If we made a plot for Figure 18.28 analogous to Figure 18.35, it would have only two nonzero values (at frequencies 0 and 1). In general, details at small scales mean there must be high frequencies in the image, just as we observed in Section 18.11. Since x ![]() cos(2πkx) has “features” at a scale of

cos(2πkx) has “features” at a scale of ![]() , in general any sum that stops at the cos(2πkx) term will have no features smaller than

, in general any sum that stops at the cos(2πkx) term will have no features smaller than ![]() . This kind of relationship between the pattern of coefficients and the appearance of the pixel-value plot is a powerful reasoning tool. We’ll next formalize it using the Fourier transform.

. This kind of relationship between the pattern of coefficients and the appearance of the pixel-value plot is a powerful reasoning tool. We’ll next formalize it using the Fourier transform.

But first, it’s useful to understand how the frequency decomposition of an image actually looks, so Figure 18.36 shows these to you, for a grayscale version of the Taj Mahal image (again, symmetrized). The frequency-0 part of the image is the average grayscale value; we’ve actually included this in both the middle-and high-frequency images to prevent having to use values less than 0.

18.10. The Fourier Transform: Definitions

In the next two sections we’ll define several different Fourier transforms, all of them closely related. We’ll only hint at the proofs of various claims, and instead rely mostly on suggestive examples. As motivation for you as you read these sections, here are the three main features of the Fourier transform that we’ll use in applications to computer graphics.

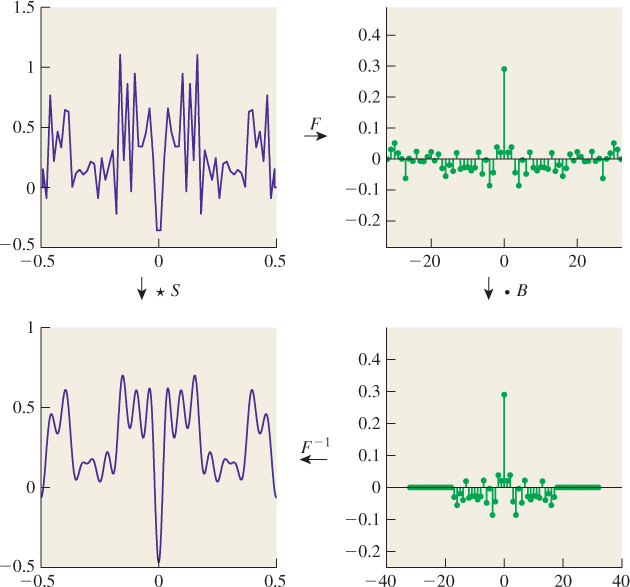

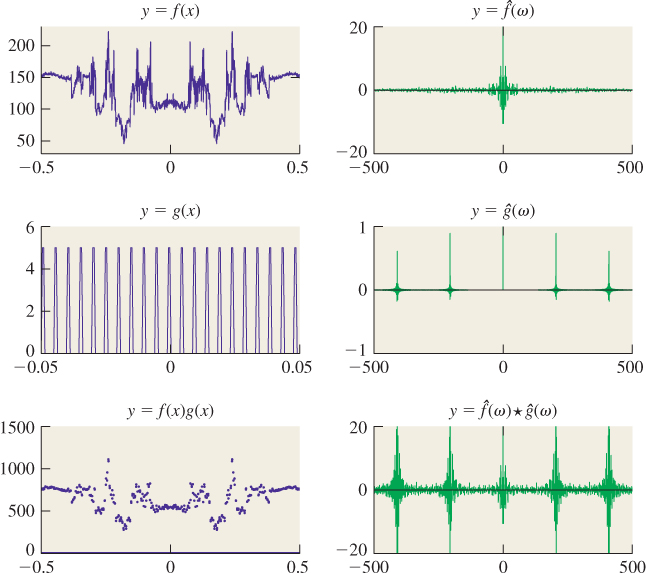

• The Fourier transform turns convolution into multiplication, and vice versa. If we write ![]() for the Fourier transform, this means that

for the Fourier transform, this means that

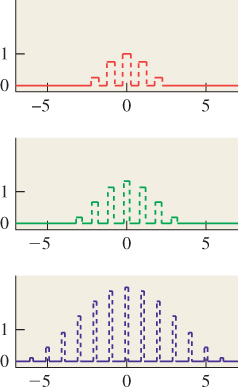

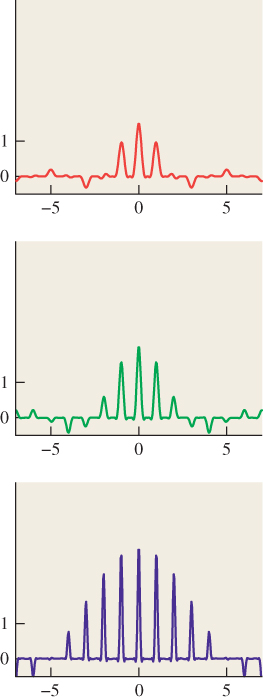

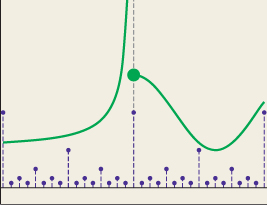

• If we define a function g like the one shown in Figure 18.37, with peaks that are equally spaced and very narrow, then the Fourier transform of g looks rather like g itself, except that the closer the spacing of the peaks in g, the wider the spacing of the peaks in the transform.

• Multiplying a function f by a function like g approximates “sampling f at equispaced points.” Thus, functions like g can be used to study the effects of sampling. Because of the convolution-multiplication duality, we’ll see that the sampled function has a Fourier transform that’s a sum of translated replicates of the original function’s transform.

18.11. The Fourier Transform of a Function on an Interval

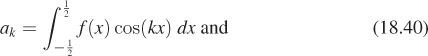

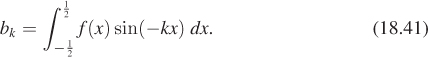

We hinted in Section 18.9.1 that if we had an even function in L2(H), then its Fourier transform was the set of coefficients used to write the function as a sum of cosines. In general, however, the Fourier transform is defined for any L2 function, not just even ones. The most basic definition is a little messy. For each integer k ≥ 0, define

Notice that b0 is always 0.

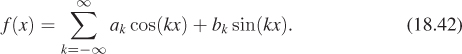

The sequences {ak} and {bk} are called the Fourier transform of f. If f is continuous and ![]() , then it turns out that

, then it turns out that

Surprisingly, the annoyance of having an unnecessary value (b0), the vagueness of “the Fourier transform consists of two sequences,” and the somewhat surprising appearance of the negative sign in the definition of bk can all be resolved by generalizing to complex numbers.

Instead of real-valued functions ![]() , we’ll consider complex-valued functions. And instead of considering the sine and cosine separately, we’ll define

, we’ll consider complex-valued functions. And instead of considering the sine and cosine separately, we’ll define

Show that (ek(x)+e–k(x))/2 = cos(2πkx), and (ek(x) – e–k(x))/(2i) = sin(2πkx), so that any function written as a sum of sines and cosines can also be written as a sum of eks, and vice versa.

The only other change is that the definition of the inner product must be slightly modified to

where ![]() is the complex conjugate. Making this change ensures that the inner product of f with f is always a non-negative real number so that its square root can be used to define the length ||f||.

is the complex conjugate. Making this change ensures that the inner product of f with f is always a non-negative real number so that its square root can be used to define the length ||f||.

With this inner product, the set of functions {ek : k ![]() Z} is orthonormal, that is,

Z} is orthonormal, that is,

the proof is an exercise in calculus and trigonometric identities.

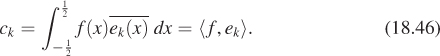

We define

It then turns out that for a continuous L2 function f satisfying ![]() ,

,

that is, computing the inner product of f with each basis element ek lets us write f as a linear combination of the ek’s. This is exactly analogous to the situation in R3, where a vector is the sum of its projections onto the three coordinate axes. The only difference here is that the sum is infinite, and so a proof is needed to establish that it converges.

The Fourier transform of f is now defined to be the sequence {ck : k ![]() Z}. With this revised definition, we see that the Fourier transform of f is just the list of coefficients of f when it’s written in a particular orthonormal basis. Such “lists of coefficients” form a vector space under term-by-term addition and scalar multiplication, and the Fourier transform is a linear transformation from L2 to this new vector space. Be sure you understand this: The Fourier transform is just a change of representation. It’s a very important one, though, because of the multiplication-convolution property.

Z}. With this revised definition, we see that the Fourier transform of f is just the list of coefficients of f when it’s written in a particular orthonormal basis. Such “lists of coefficients” form a vector space under term-by-term addition and scalar multiplication, and the Fourier transform is a linear transformation from L2 to this new vector space. Be sure you understand this: The Fourier transform is just a change of representation. It’s a very important one, though, because of the multiplication-convolution property.

The function f ![]() L2(H) is often referred to as being in the time domain, while its Fourier transform is said to be in the frequency domain. Since one is a function on an interval and the other is a function on the integers, the distinction between the two is quite clear. But for functions in L2(R), the Fourier transform is also in L2(R), and so being able to talk about the two domains is helpful. We’ll sometimes use “value domain” or “value representation” for the original function, and “frequency representation” for its Fourier transform, because f(x) tells us the value of f at x, while ck tells us how much frequency-k content there is in f.

L2(H) is often referred to as being in the time domain, while its Fourier transform is said to be in the frequency domain. Since one is a function on an interval and the other is a function on the integers, the distinction between the two is quite clear. But for functions in L2(R), the Fourier transform is also in L2(R), and so being able to talk about the two domains is helpful. We’ll sometimes use “value domain” or “value representation” for the original function, and “frequency representation” for its Fourier transform, because f(x) tells us the value of f at x, while ck tells us how much frequency-k content there is in f.

We mostly won’t care about the particular values ck in what follows, but we’ll want to be able to take a big-picture look at these numbers and say things like “For this function, it turns out that ck = 0 whenever |k| > 200,” or “The complex numbers ck get smaller and smaller as k gets larger.” (Recall that the “size” of a complex number z = a+bi is called its modulus, and is ![]() .) Because of this big-picture interest, rather than trying to plot ck for k

.) Because of this big-picture interest, rather than trying to plot ck for k ![]() Z, we instead plot |ck|. The advantage is that |ck| is a real number rather than a complex one, so it’s easier to plot. The plot of these absolute values is called the spectrum of f, and it tells us a lot about f. (The word “spectrum” arises from a parallel with light being split into all the colors of the spectrum.)

Z, we instead plot |ck|. The advantage is that |ck| is a real number rather than a complex one, so it’s easier to plot. The plot of these absolute values is called the spectrum of f, and it tells us a lot about f. (The word “spectrum” arises from a parallel with light being split into all the colors of the spectrum.)

The Fourier transform takes a function in L2(H) and produces the sequence of coefficients ck. It’s useful to think of this sequence as a function defined on the integers, namely k ![]() ck. In fact, the sum

ck. In fact, the sum

turns out to be the same as ![]() , which is finite because f is an L2 function. This means that k

, which is finite because f is an L2 function. This means that k ![]() ck is an

ck is an ![]() 2 function, and thus the Fourier transform takes L2(H) to

2 function, and thus the Fourier transform takes L2(H) to ![]() 2(Z). From now on we’ll denote the Fourier transform with the letter

2(Z). From now on we’ll denote the Fourier transform with the letter ![]() , so

, so

Notice that ![]() (f) is a function:

(f) is a function: ![]() (f)(k) is defined to be ck, the kth Fourier coefficient for f. For simplicity, we’ll sometimes denote the Fourier transform of f by

(f)(k) is defined to be ck, the kth Fourier coefficient for f. For simplicity, we’ll sometimes denote the Fourier transform of f by ![]() .

.

We’ll often use two properties of the Fourier transform.

• First, if f is an even function, then each ck is a real number (i.e., its imaginary part is 0). For even functions, we can therefore actually plot ck rather than |ck|; that’s what we did in Figure 18.35, although we only plotted it for k ≥ 0.

• Second, if f is real-valued (as are all the functions we care about, the real value being something like “the intensity of light arriving at this point”), then its Fourier transform is even, that is, ck = c–k for every k. That’s why we showed the plot of ck for k ≥ 0 in Figure 18.35: The values for k < 0 would have added no information.

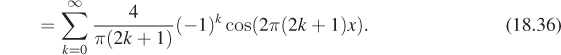

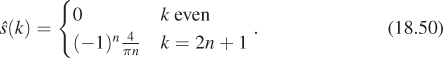

We have one example of the Fourier transform already: We wrote the square-wave function s, as a sum of cosines in Equation 18.35. From that sum, we can read off

The plot of ![]() is shown in Figure 18.38.

is shown in Figure 18.38.

18.11.1. Sampling and Band Limiting in an Interval

Now suppose that we have a function f on the interval H with ![]() (f)(k) = 0 for all |k| > k0. Such a function is said to be band-limited at k0. The function f can be written as a sum of sinusoidal functions, all of frequency less than or equal to k0. Since the “features” of a sinusoidal function of frequency k (the “bumps”) are of size

(f)(k) = 0 for all |k| > k0. Such a function is said to be band-limited at k0. The function f can be written as a sum of sinusoidal functions, all of frequency less than or equal to k0. Since the “features” of a sinusoidal function of frequency k (the “bumps”) are of size ![]() , the features of f must be no smaller than

, the features of f must be no smaller than ![]() . We can say that the function f is “smooth at the scale

. We can say that the function f is “smooth at the scale ![]() .” In a technical sense, f is completely smooth, but what we mean is that f has no bumpiness smaller than

.” In a technical sense, f is completely smooth, but what we mean is that f has no bumpiness smaller than ![]() .

.

Turning this notion around, suppose that the graph of f has a sharp corner, or a discontinuity. Then f cannot be band-limited—it must be made up of sinusoids of arbitrarily high frequencies! This is important: A function that’s discontinuous, or nondifferentiable, cannot be band-limited. The converse is false, however—there are plenty of smooth functions that contain arbitrarily high frequencies.

The set of all functions band-limited at k0 is a vector space—if we add two band-limited functions, we get another band-limited function, etc. The dimension of this vector space is 2k0 + 1, with coordinates provided by the numbers c0, c±1, ..., c±k0. (This is the dimension as a real vector space; each number cj has a real and an imaginary part, contributing two dimensions, except for c0, which is pure real.)

If we evaluate the function f at k0 + 1 equally spaced points in the interval H = (–![]() ,

, ![]() ] we get k0 + 1 complex numbers, which we can treat as 2k0 + 2 real numbers. If we ignore any one of these, we’re left with 2k0 + 1 real numbers. That is to say, we’ve defined a linear mapping from the band-limited functions to R2k0+1. This mapping turns out to be bijective. (The proof involves lots of trigonometric identities and some complex arithmetic.) What that tells us is somewhat remarkable:

] we get k0 + 1 complex numbers, which we can treat as 2k0 + 2 real numbers. If we ignore any one of these, we’re left with 2k0 + 1 real numbers. That is to say, we’ve defined a linear mapping from the band-limited functions to R2k0+1. This mapping turns out to be bijective. (The proof involves lots of trigonometric identities and some complex arithmetic.) What that tells us is somewhat remarkable:

If f is band-limited at k0, then any k0 + 1 equally spaced samples of f determine f uniquely. Conversely, if you are given values for k0 + 1 equally spaced samples (except for either the real or complex part of one value), then there’s a unique function f, band-limited at k0, that takes on those values at those points.

This is one form of the Shannon sampling theorem [Sha49] or simply sampling theorem. We can apply this to real-valued functions, whose Fourier transforms are even functions. This means that c–1 = c1, and c–2 = c2, etc. So, of the 2k0 + 1 degrees of freedom, we have only k0 + 1 degrees of freedom for a real-valued function. In this case, the sampling theorem says:

Suppose that f and g are real-valued functions on [–![]() ,

, ![]() ], and x0, ..., xk0 are k0 + 1 evenly spaced points in that interval, for example,

], and x0, ..., xk0 are k0 + 1 evenly spaced points in that interval, for example,

and yj = f(xj) for j = 0, ..., k0, and ![]() = g(xj).

= g(xj).

If yj = ![]() for all j, then f and g are equal, that is, a function band-limited at k0 is completely determined by k0 + 1 equally spaced samples. Furthermore, given any set of values

for all j, then f and g are equal, that is, a function band-limited at k0 is completely determined by k0 + 1 equally spaced samples. Furthermore, given any set of values ![]() , there is a unique function, f, band-limited at k0, with f(xj) = yj for every j.

, there is a unique function, f, band-limited at k0, with f(xj) = yj for every j.

Peeking ahead, this theorem is important because we generally build an image by taking equispaced samples of some function f, and we hope that the image really “captures” whatever information is in f. The sampling theorem says that if f is band-limited at some frequency, and if we take an appropriate number of samples for that frequency, then we can reconstruct f from the samples, that is, the image is a faithful representation of the function f.

This should make you ask, “Well, what happens if I take k0 samples of a real-valued function that’s not band-limited at k0? What band-limited function do those correspond to?” We’ll address this soon.

On the interval H = (–![]() ,

, ![]() ], consider the three points –

], consider the three points –![]() , 0, and

, 0, and ![]() .

.

(a) What real-valued function, f1, band-limited at k0 = 1, has values 1, 0, and 0 at these points? What functions f2 and f3 correspond to value sets 0, 1, 0 and 0, 0, 1? (You may want to use a computer algebra system to solve these parts.)

(b) Now find a band-limited function whose values at the three points are –![]() , 1, –

, 1, –![]() .

.

(c) What are the samples of x ![]() cos(4πx) at these three points? Does this contradict the sampling theorem?

cos(4πx) at these three points? Does this contradict the sampling theorem?

The sampling theorem can be read in reverse: If I’m taking samples with a spacing h between them, what’s the highest frequency I can tolerate in my signal if I want to be able to reconstruct it from the samples? The answer is that the wavelength of the signal must be greater than twice h. The frequency, known as the Nyquist frequency, is therefore π/h.

Suppose you prefer the convention that x ![]() sin(x) has frequency 1. What’s the Nyquist frequency if the sample spacing is h?

sin(x) has frequency 1. What’s the Nyquist frequency if the sample spacing is h?

18.12. Generalizations to Larger Intervals and All of R

If instead of functions on H = (–![]() ,

, ![]() ] we want to study functions on the interval (–M/2, M/2] of length M, we can make analogous definitions. The definition of the Fourier transform gets an extra factor of

] we want to study functions on the interval (–M/2, M/2] of length M, we can make analogous definitions. The definition of the Fourier transform gets an extra factor of ![]() ; the limits of integration change to ±M/2, and instead of using the function ek, for k

; the limits of integration change to ±M/2, and instead of using the function ek, for k ![]() Z, we must use

Z, we must use

The Fourier transform now sends L2(–M/2, M/2) to ![]() 2(

2(![]() Z), that is, functions on the set of all integer multiples of 1/M. Thus, as the interval we’re considering gets wider and wider (i.e., as M increases), the spacing between the frequencies involved in representing functions on that interval gets narrower and narrower.

Z), that is, functions on the set of all integer multiples of 1/M. Thus, as the interval we’re considering gets wider and wider (i.e., as M increases), the spacing between the frequencies involved in representing functions on that interval gets narrower and narrower.

It’s natural to “take a limit” and consider what happens when we let M ![]() ∞. It turns out that in addition to the Fourier transform defined for L2(–M/2, M/2), we can define a Fourier transform for L2(R).

∞. It turns out that in addition to the Fourier transform defined for L2(–M/2, M/2), we can define a Fourier transform for L2(R).

For f ![]() L2(R), we define F(f): R

L2(R), we define F(f): R ![]() R by the rule

R by the rule

where

We can think of ![]() (f)(ω) as telling “how much frequency ω stuff there is in f,” but this is a little misleading; it’s perhaps better to say that

(f)(ω) as telling “how much frequency ω stuff there is in f,” but this is a little misleading; it’s perhaps better to say that ![]() (f)(ω) says “how much f looks like a periodic function of frequency ω.”

(f)(ω) says “how much f looks like a periodic function of frequency ω.”

Just as in the case of finite intervals, if ![]() (f)(ω) = 0 for |ω| > ω0, we say that f is band-limited at frequency ω0.

(f)(ω) = 0 for |ω| > ω0, we say that f is band-limited at frequency ω0.

Before we leave the subject of Fourier transforms, there’s one last topic to cover: If we consider a periodic function h of period one, then h is definitely not in L2(R), because it doesn’t tend to zero at ±∞, so the integral of Equation 18.53 won’t generally converge. On the other hand, the corresponding integral over just one period of the function is the one used in defining the Fourier transform on an interval, Equation 18.46. Thus, we can use the interval formulation to talk about Fourier transforms for periodic functions as well.

![]() Roughly speaking, if we truncate a periodic function f of period one by setting f(x) = 0 for |x| > M, the L2(R) transform of the resultant function tends to be concentrated near integer points, and its value there tends to be proportional to M. As M gets larger, the concentration grows greater, until in the limit, the L2(R) transform is zero except at integer points, where it’s infinite. By dividing by M, at each stage we can convert the infinite values to finite ones, and they look just like the L2(H) transform of a single period of f.

Roughly speaking, if we truncate a periodic function f of period one by setting f(x) = 0 for |x| > M, the L2(R) transform of the resultant function tends to be concentrated near integer points, and its value there tends to be proportional to M. As M gets larger, the concentration grows greater, until in the limit, the L2(R) transform is zero except at integer points, where it’s infinite. By dividing by M, at each stage we can convert the infinite values to finite ones, and they look just like the L2(H) transform of a single period of f.

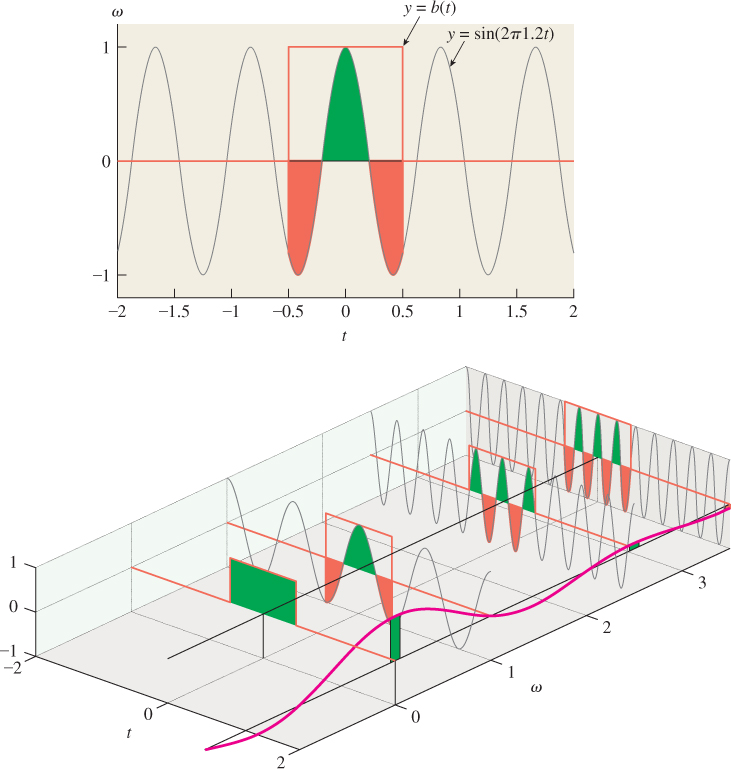

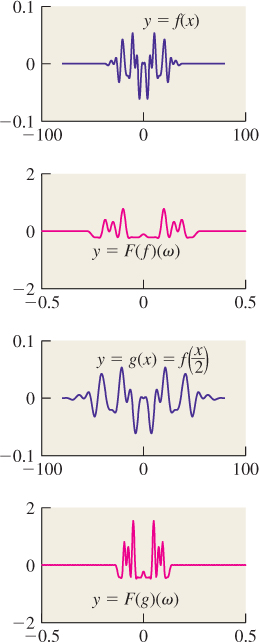

18.13. Examples of Fourier Transforms

18.13.1. Basic Examples

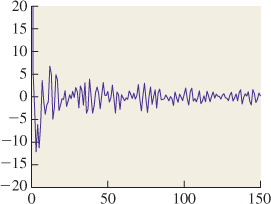

We’ve already seen (in Figures 18.34 and 18.35) the Fourier transform of one row of a natural image. The rapid falloff of ![]() (f)(ω) as ω grows is typical; in general, you can expect the Fourier transform to fall off like 1/ωa for some a > 1. For a synthetic image, which has sharp edges (e.g., a checkerboard), you might expect a 1/ω falloff, as we saw in the case of a square wave. In general, we’ll plot signals in blue and their transforms in magenta, although on a few occasions we’ll plot several signals on one axis and their transforms on another axis, using the same color for each signal and its transform. We’ll plot discrete signals—ones whose domain is Z—using stemplots.

(f)(ω) as ω grows is typical; in general, you can expect the Fourier transform to fall off like 1/ωa for some a > 1. For a synthetic image, which has sharp edges (e.g., a checkerboard), you might expect a 1/ω falloff, as we saw in the case of a square wave. In general, we’ll plot signals in blue and their transforms in magenta, although on a few occasions we’ll plot several signals on one axis and their transforms on another axis, using the same color for each signal and its transform. We’ll plot discrete signals—ones whose domain is Z—using stemplots.

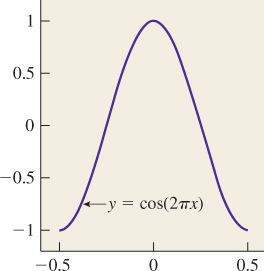

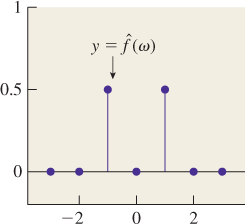

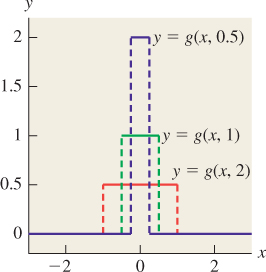

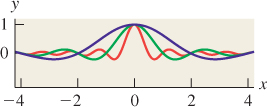

18.13.2. The Transform of a Box Is a Sinc

Let

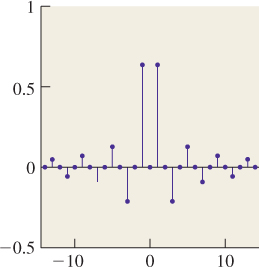

be a box function defined on the real line. Because it’s an even function and it’s real-valued, its Fourier transform will be even and real-valued. We can evaluate ![]() (b)(ω) directly from the definition.

(b)(ω) directly from the definition.

The calculation above works for all ω ≠ 0; for ω = 0, we have ![]() (b)(0) = 1, which you should verify by writing out the integral.

(b)(0) = 1, which you should verify by writing out the integral.