Chapter 32. Rendering in Practice

32.1. Introduction

In this chapter we show implementations of two renderers—a path tracer and a photon mapper—with some of the optimizations that make them worth using. Both approaches are currently in wide use, are fairly easy to understand, and form complete solutions to the rendering problem in the sense that they can be shown (under reasonable conditions) to provide consistent estimates of the values we seek (i.e., “properly” rendered images).

We’re not recommending these as ideal renderers. Rather, we treat them as case studies. They are rich enough to exhibit of the complexities and features of a modern renderer; they provide the foundation necessary for you to read research papers on rendering.

We assume that you’ve implemented the basic ray tracer described in Chapter 15. Much of this chapter also depends heavily on Chapters 30 and 31.

In the course of implementing these renderers, we describe ways to structure the representation of geometry in a scene, of scattering, and of samples that contribute to a pixel. These are not always in a form immediately recognizable from the mathematical formulation of the previous chapters, as you’ll know from Chapter 14.

In Section 32.8, we discuss the debugging of rendering programs, showing some example failures and their causes, and suggesting how you can learn to identify the kind of bug from the kind of visual artifacts you see.

32.2. Representations

As you build a ray-casting-based renderer, your choices of representations will have large-scale impacts. Is the scattering model you’ve chosen rich enough to represent the phenomena you wish to simulate? Is it easy to sample from a probability distribution proportional to ω ![]() fs(ωi,ω)|ω · n| for some fixed vector ωi? Is your scattering energy-conservative? Does your scene representation make ray-scene intersection fast and robust? Does your representation of luminaires make it easy to select points on a luminaire uniformly with respect to area?

fs(ωi,ω)|ω · n| for some fixed vector ωi? Is your scattering energy-conservative? Does your scene representation make ray-scene intersection fast and robust? Does your representation of luminaires make it easy to select points on a luminaire uniformly with respect to area?

For each of the questions above, describe how a basic ray tracer’s output or running time might be affected by the answer being “Yes” or “No.”

Beyond these choices, there are the practical matters of modeling. For instance, the scattering properties of a surface are usually defined or measured relative to the surface normal (and perhaps relative to a tangent basis as well), while we’ve treated the scattering model (or at least the bidirectional scattering distribution function or BSDF) as a function of a point in space and two direction vectors. In practice, of course, we trace a ray to find a point of some surface, and then find the BSDF at that point as a surface property, with its parameters being determined both by surface position and by various texture maps. We’ll begin by discussing this particular simplification.

32.3. Surface Representations and Representing BSDFs Locally

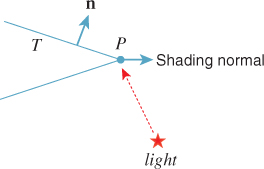

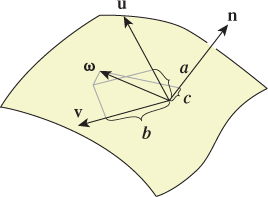

Consider a patch of surface so small that it may locally be considered flat, and a local coordinate frame at a point P, with unit normal vector n and unit tangent vectors u and v such that u, v, n is an orthonormal basis of 3-space, as shown in Figure 32.1. This decomposition of a surface into a tangent space and a normal space depends on local flatness; it’s problematic at edges and corners (like those of a cube), where it’s not obvious which directions should be called “tangent” or “normal.” This is a real problem for which graphics has yet to determine a definitive answer.

Figure 32.1: A local basis at a point P, consisting of mutually perpendicular unit vectors. The vector ω is shown being decomposed into a linear combination of these via dot products.

For any vector ω at P, we can easily represent ω = au + bv + cn, by computing dot products: a = ω · u, etc.

If ω, n, u, and v are expressed in world coordinates, and M is a 3 × 3 matrix whose rows are u, v, and n, then show that  Knowing this lets us convert from world coordinates to local tangent-plus-normal coordinates.

Knowing this lets us convert from world coordinates to local tangent-plus-normal coordinates.

Consider now the BSDF fs(P, ωi, ωo), which is a function of a surface point and two (unit) vectors at that point. If we write ωi = au + bv + cn and ωo = a′u + b′v + c′n, we can define a new function,

We can go further, however. Since ωi and ωo are unit vectors, we can express them in polar coordinates using φ for longitude and θ for latitude, corresponding to the standard use of θ for the angle between ω and n. This gives θ = cos–1(b) and φ = atan2(c, a), and we can write

The function ![]() r is what a gonioreflectometer actually measures. Notice that fs and

r is what a gonioreflectometer actually measures. Notice that fs and ![]() r are merely different representations of the same thing, like the rectangular- or polar-coordinate representations of a curve. (We discussed such shifts of representation in Chapter 14)

r are merely different representations of the same thing, like the rectangular- or polar-coordinate representations of a curve. (We discussed such shifts of representation in Chapter 14)

The function ![]() s has a form in which certain common properties of BSDFs can be easily expressed. For instance, the Lambertian bidirectional reflectance distribution function (BRDF) is completely independent of θo, φi, and φo. Because of this, the particular choice of u and v is irrelevant for the Lambertian BRDF: The dot products of ωi and ωo with u and v are only used in computing φi and φo.

s has a form in which certain common properties of BSDFs can be easily expressed. For instance, the Lambertian bidirectional reflectance distribution function (BRDF) is completely independent of θo, φi, and φo. Because of this, the particular choice of u and v is irrelevant for the Lambertian BRDF: The dot products of ωi and ωo with u and v are only used in computing φi and φo.

The Phong and Blinn-Phong BRDFs both depend on θi and θo, but their dependence on φi and φo is rather special: They depend only on the difference of φi – φo (indeed, on this difference taken mod 2π).

(a) Explain the claim that the Blinn-Phong BRDF depends only on the difference of φi and φo.

(b) Show that in fact it depends only on the magnitude of the difference: The sign is irrelevant.

This dependence on the difference in angles again means that the BSDF expressed in (θ, φ) terms, ![]() s, is independent of the choice of u and v: If we rotated these in the tangent plane by some amount α, then both φi and φo would change by α (and possibly by an additional 2π), and their difference (mod 2π) would remain invariant. BSDFs with this property are said to be isotropic, and they can be represented by functions of the three variables θi, θo, and φ = (φi – φo) mod 2π. The great majority of materials currently used in graphics are represented by BSDFs (indeed, BRDFs) that fall into this category; the exceptions (anisotropic materials) are things like brushed aluminum, in which the brushing direction introduces an anisotropy. Materials that are represented using subsurface scattering often have interior structure that makes them anisotropic as well, so the simplified representation is often inapplicable to those.

s, is independent of the choice of u and v: If we rotated these in the tangent plane by some amount α, then both φi and φo would change by α (and possibly by an additional 2π), and their difference (mod 2π) would remain invariant. BSDFs with this property are said to be isotropic, and they can be represented by functions of the three variables θi, θo, and φ = (φi – φo) mod 2π. The great majority of materials currently used in graphics are represented by BSDFs (indeed, BRDFs) that fall into this category; the exceptions (anisotropic materials) are things like brushed aluminum, in which the brushing direction introduces an anisotropy. Materials that are represented using subsurface scattering often have interior structure that makes them anisotropic as well, so the simplified representation is often inapplicable to those.

The preceding discussion has been in terms of the angles θi, φi, θo, and φo to emphasize that the BSDF is a function on a four-dimensional domain. In practice, however, it is the sines and cosines of these angles that most often enter into the computations, at least for analytically expressed BSDFs. (For tabulated BSDFs, we can tabulate based not on θi and θo, but on their cosines, so the same argument applies to those.) In practice, a BSDF implementation will typically take a point, P, and the two vectors ωi and ωo, and promptly express these vectors in terms of u, v, and n.

How does all this look in an implementation? Part of G3D’s implementation of a generalized Blinn-Phong model is shown in Listing 32.1.

There are several design choices here. The first is that a SurfaceElement is used to represent the intersection of a ray with a surface in the scene. Among other things, it has data members material and shading. The material stores things like the Phong exponent, the reflectivities in the red, green, and blue spectral regions, etc. The shading stores the intersection point, the texture coordinates there, and the surface normal there. (It may help, when reading expressions like p.shading.normal, to treat “shading” as an adjective. Thus, p.shading.normal is the shading normal, while p.geometric.normal is the geometric normal.)

Listing 32.1: Part of an implementation of Blinn-Phong reflectance.

1 Color3 SurfaceElement::evaluateBSDFfinite(w_i, w_o) {

2 n = shading.normal;

3 cos_i = abs(w_i.dot(n));

4

5 Color3 S(Color3::zero());

6 Color3 F(Color3::zero());

7 if ((material.glossyExponent != 0) && (material.glossyReflect.nonZero())) {

8 // Glossy

9

10 // Half-vector

11 const Vector3& w_h = (w_i + w_o).direction();

12 const float cos_h = max(0.0f, w_h.dot(n));

13

14 // Schlick Fresnel approximation:

15 F = computeF(material.glossyReflect, cos_i);

16 if (material.glossyExponent == finf())

17 S = Color3::zero()

18 } else {

19 S = F * (powf(cos_h, material.glossyExponent) * ...

20 }

21 }

22 ...

The surface normal is used immediately to compute cos θi, an example of expressing one of the two input vectors in the local frame of reference. The half-vector (direction() returns a unit vector) is computed from ωi and ωo, which are called w_i and w_o in the code. The Schlick approximation of the Fresnel term is computed and used to determine the glossy reflection. The remainder of the elided code computes the diffuse reflection. Missing from this code are the evaluations of the mirror-reflection term and of transmittance based on Snell’s law, each of which corresponds to an impulse in the scattering model. The splitting off of these impulse terms makes the computation of the reflected light much simpler. Recall that what we’ve been expressing as an integral, namely,

is really shorthand for a linear operator being applied to L, one that is defined in part by a convolution integrand like the one above, and in part by impulse terms like mirror reflectance, where for a particular value of ωi, the integrand is nonzero only for a specific direction ωo; the value of the “integral” is some constant (the impulse coefficient) times L(P, –ωi).

Trying to approximate terms like mirror reflectance by Monte Carlo integration is hopeless: We’ll never pick the ideal outgoing direction at random. Fortunately, these terms are easy to evaluate directly, so no approximation is needed. The SurfaceElement class therefore provides a method (see Listing 32.2) that returns all the impulses needed to evaluate the reflected radiance (in this case, the mirror-reflection impulse and the transmission impulse, although if we were rendering a birefringent material, there would be two transmissive impulses, so returning an array of impulses is natural).

G3D is designed around triangle meshes. The SurfaceElement class therefore contains some mesh-related items as well (see Listing 32.3).

Listing 32.2: A method that returns the impulse parts of a scattering model.

1 void getBSDFImpulses (Vector3& w_i, Array<Impulse>& impulseArray) {

2 const Vector3& n = shading.normal;

3

4 Color3 F(0,0,0);

5

6 if (material.glossyReflect.nonZero()) {

7 // Cosine of the angle of incidence, for computing

8 //Fresnel term

9 const float cos_i = max(0.001f, w_i.dot(n));

10 F = computeF(material.glossyReflect, cos_i);

11

12 if (material.glossyExponent == inf()) {

13 // Mirror

14 Impulse& imp = impulseArray.next();

15 imp.w = w_i.reflectAbout(n);

16 imp.magnitude = F;

17 ...

Listing 32.3: Further members of the SurfaceElement class.

1 class SurfaceElement {

2 public:

3 ...

4 struct Interpolated {

5 /** The interpolated vertex normal. */

6 Vector3 normal;

7 Vector3 tangent;

8 Vector3 tangent2;

9 Point2 texCoord;

10 } interpolated;

11

12 /** Information about the true surface geometry. */

13 struct Geometric {

14 /** For a triangle, this is the face normal. This is useful

15 for ray bumping */

16 Vector3 normal;

17

18 /** Actual location on the surface (it may be changed by

19 displacement or bump mapping later. */

20 Vector3 location;

21 } geometric;

22 ...

The vectors tangent and tangent2 correspond to u and v above; when the surface is modeled, these must be specified at every vertex. Using the derivative of texture coordinates is one way to generate these; if we have texture coordinates (ui, vi) at vertex i, we can find a linear approximation for u across the interior of the triangle, and from this determine a direction u in which u grows fastest. We can then define v as n×u to get an orthonormal basis. Note, however, that this is a per-triangle computation, and the computation of u is not guaranteed to be consistent across triangles. Indeed, because of the mapmaker’s dilemma (you can’t flatten the globe onto a single piece of paper preserving angles and distances), the consistent assignment of texture coordinates across a whole surface is generally impossible. It’s important that any anisotropic BRDF be used only in areas where u and v are defined consistently.

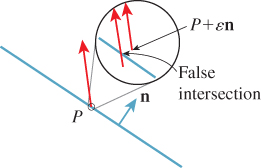

The vector geometric.normal is the triangle-face normal rather than the surface normal that it approximates. Depending on how the surface was originally modeled, these may be identical, or the surface normal may be some weighted combination of face normals, or it may be determined by some other method entirely. The triangle-face normal is useful in ray-tracing algorithms because if P is a point that’s supposed to lie on a triangle T (see Figure 32.2), it may be that a ray traced from P into the scene first hits the scene at a point of T, because a roundoff or representation error places P slightly to one side of T. By slightly displacing (bumping) P along the normal to T, that is, by replacing P with P+![]() n for some small

n for some small ![]() , we can avoid such false intersections. (Perhaps “nudging” would be a better term, to avoid conflict with the notion of bump-mapping, but “bumping” is the term used by G3D.) How large should

, we can avoid such false intersections. (Perhaps “nudging” would be a better term, to avoid conflict with the notion of bump-mapping, but “bumping” is the term used by G3D.) How large should ![]() be? A good rule of thumb is “no more than 1% of the size of the smallest object you expect to see in the scene.” This implicitly establishes a condition on your models: No significant object or feature should be less than 100 times the largest gap between two adjacent floating-point numbers of the size you will be using. For instance, if everything in your scene will have coordinates between −100 and 100, and you will use IEEE 32-bit floating-point numbers, then since the largest gap between two floating-point numbers near 100 is about 4 × 10–6, you should not expect to model any feature smaller than 4 × 10–4 units.

be? A good rule of thumb is “no more than 1% of the size of the smallest object you expect to see in the scene.” This implicitly establishes a condition on your models: No significant object or feature should be less than 100 times the largest gap between two adjacent floating-point numbers of the size you will be using. For instance, if everything in your scene will have coordinates between −100 and 100, and you will use IEEE 32-bit floating-point numbers, then since the largest gap between two floating-point numbers near 100 is about 4 × 10–6, you should not expect to model any feature smaller than 4 × 10–4 units.

If we’re tracing a ray P + td, we could “bump” P along the ray, that is, bump it slightly in the direction d. Argue that this is a bad idea by considering rays that are almost tangent to the surface.

32.3.1. Mirrors and Point Lights

If we allow point lights in the scene, then when we trace rays “from the eye,” we’ll hit the point light with probability zero, so that it’ll almost never happen in practice. Similarly, if we allow perfect mirrors, we cannot write the scattering operator as an integral to be approximated by naive sampling—we’ll essentially never sample the direction of the mirror-reflected ray. When we combine the two, things are even worse.

Consider a scene consisting of a smooth mirrored ball illuminated by a point light. If we ray-trace from the eye through the pixel centers, we’ll almost certainly miss the point light; if we ray-trace from the light, we’ll miss the pixel centers. But if we suppose that the point light is in the scene as a proxy for a spherical light of some small radius r, then we know that we should see a highlight on the mirrored ball.

Losing that highlight is perceptually significant, even though the highlight might appear at only a single pixel of the image. We have three choices: We can abandon the convenient fiction of a point light, we can adjust the BRDF to compensate for the abstraction, or we can choose some other method for estimating the radiance arriving at the eye from that location. In an ideal world, with infinite rendering resources, we’d choose to use tiny point lights and cast a great many rays. Within the context of ray tracing, we can clamp the maximum shininess (i.e., the specular exponent) when we are combining a BRDF with a direct luminaire in the reflection operator. This ensures that with sufficiently fine sampling, the point light will produce a highlight. Of course, it also slightly blurs the reflection of every other object in the scene. The difference in appearance between a specular exponent of 10,000 and ∞ tends to be unnoticeable in general, so this is an acceptable compromise. On the other hand, if the specular exponent is 10,000, a very fine sampling around the highlight direction is required, or else we’ll get high variance in our image. This leads us to the third alternative. It may make sense to separate out the impulse reflection of point lights (or even small lights) into a separate computation to avoid these sampling demands, but we will not pursue this approach here.

32.4. Representation of Light

In our theoretical discussion, we treated light as being defined by the radiance field (P, ω) ![]() L(P, ω): At any point P, in any direction ω, L(P, ω) represented the radiance along the ray through P in direction ω, measured with respect to a surface at P perpendicular to ω. When P is in empty space, this is a good abstraction. When P is a point exactly on a surface, there are two problems.

L(P, ω): At any point P, in any direction ω, L(P, ω) represented the radiance along the ray through P in direction ω, measured with respect to a surface at P perpendicular to ω. When P is in empty space, this is a good abstraction. When P is a point exactly on a surface, there are two problems.

1. The precise relationship between geometric modeling and physics has been left undefined. We haven’t said whether a solid is open (i.e., does not contain its boundary points, like an open interval) or closed; equivalently, we haven’t said whether a ray leaving from a surface point of a closed surface intersects that surface or not.

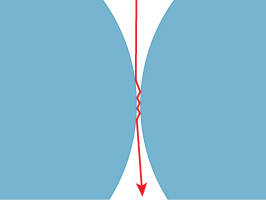

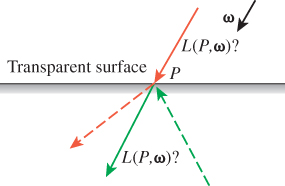

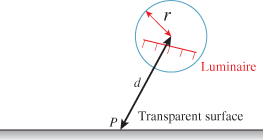

2. When P is a point on the surface of a transparent solid, like a glass sphere, and ω points into the solid (see Figure 32.4), there are two possible meanings for L(P, ω): the light arriving at P from distant sources, or the light traveling from P into the interior of the surface. Because of Snell’s law, material opacity, and internal reflection, these two are almost never the same.

We addressed the second problem in Chapter 26, by defining an incoming and outgoing radiance for each pair (P, ω), where P was a surface point and ω was a unit vector, by comparing ω with the normal n(P) to the surfaces; we also mentioned that Arvo’s division of the radiance field into field radiance and surface radiance accomplishes the same thing.

For the first problem, we will say that a point on the boundary of a solid is actually part of that solid, so a point P on the surface of a glass ball is actually part of the ball (and for a varnished piece of wood, a point on the boundary is treated as being in both the varnish and the wood). This means that a ray leaving P in the direction nP first intersects the ball at P. (As a practical matter, avoiding the intersection at t = 0 requires a comparison of a real number against zero, which is prone to floating-point errors, so including the first hit point is easier than avoiding it.) Thus, with this model of surface points, the notion of “bumping” is not merely a convenience for avoiding roundoff error problems, it’s a necessity.

32.4.1. Representation of Luminaires

32.4.1.1. Area Lights

Our simple scene model supports a very basic kind of area light: We represent area light sources with a polygon mesh (often a single polygon) and an emitted power Φ. At each point P of a polygon, light is emitted in every direction ω with ω · nP > 0; the radiance along all such rays, in all directions, is constant over the entire luminaire.

The radiance along each ray can be computed by dividing the luminaire’s power among the individual polygons by area; we thus reduce the problem to computing the radiance due to a single polygon of area A and power Φ. That radiance is ![]() , as we saw in Section 26.7.3.

, as we saw in Section 26.7.3.

We will need to sample points uniformly at random (with respect to area) on a single area light. To do so, we compute the areas of all triangles, and form the cumulative sums A1, A1 + A2, ..., A1 + ... + Ak = A, where k is the number of triangles. To sample at random, we pick a uniform random value u between 0 and A; we find the triangle i such that A1 + ... + Ai ≤ u, and then generate a point uniformly at random on that triangle (see Exercise 32.6).

We’ll also want to ask, for a given point P of the surface and direction ω with ω · nP > 0, what is the radiance L(P, ω)? For our uniformly radiating luminaires, this is a constant function, but for more general sources, it may vary with position or direction.

32.4.1.2. Point Lights

Point-light sources are specified by a location1 P and a power Φ. Light radiates uniformly from a point source in all directions. As we saw in Chapter 31, it doesn’t make sense to talk about the radiance from a point source, but it does make sense to compute the reflected radiance from a point source that’s reflected from a point Q of a diffuse surface. The result is

1. We only allow finite locations; extending the renderer to correctly handle directional lights is left as a difficult exercise.

If a point light hits a mirror surface or transmits through a translucent surface, we can then compute the result of its scattering from the next diffuse surface, etc. This eventually becomes a serious bookkeeping problem, and since point lights are merely a convenient fiction, we ignore it: We compute only diffuse scattering of point lights. Although addressing this properly in a ray-tracing-based renderer is difficult, we’ll see later that in the case of photon mapping, it’s quite simple.

One useful compromise for point-light sources is to say that for the purpose of emission directly toward the eye, the point source is actually a glowing sphere of some small radius, r, while when it’s used in the calculation of direct illumination, it’s treated as a point. This compromise, however, has the drawback that it requires the design of the class for representing lights to know something about the kinds of rays that will be interacting with it (i.e., an eye ray will be intersection-tested against a small sphere, while a secondary ray will never meet the light source at all), which violates encapsulation.

32.5. A Basic Path Tracer

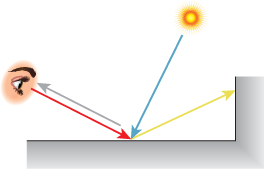

Recall the basic idea of ray tracing and path tracing: For each pixel of the image, we shoot several rays from the eye through the pixel area. A typical ray (the red one in Figure 32.5) hits the scene somewhere, and we compute the direct light arriving there (the nearly vertical blue ray), and how it scatters back along the ray toward the eye (gray). We then trace one or more recursive rays (such as the yellow ray that hits the wall), and compute the radiance flowing back along them, and how it scatters back toward the eye, etc. Having computed the radiance back toward the eye along each of the rays through our pixel, we take some sort of weighted average and call that the pixel value.

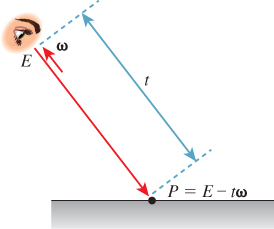

Because of the usual description of ray tracing (“Start from the eye, and follow a ray out into the scene until it hits a surface at some point P, and ...”), we’ll use the convention that the rays we discuss are always the result of tracing from the eye, that is, the first ray points away from the eye, the second ray points away from the first intersection toward a light or another intersection, etc. (see Figure 32.6).

Figure 32.6: The algorithm works from the eye toward the light source (red); photons travel in the opposite direction (blue).

On the other hand, the radiance we want to compute is the radiance that flows along the ray in the other direction. If the eye ray r starts at the eye, E, and goes in direction ω, meeting the scene at a point P, then we want to compute L(P, –ω), that is, we want to compute the radiance in the opposite of r’s direction. We’ll have various procedures like Radiance3 estimateTotalRadiance(Ray r, ...); such functions always return the radiance flowing in the direction opposite that of r.

32.5.1. Preliminaries

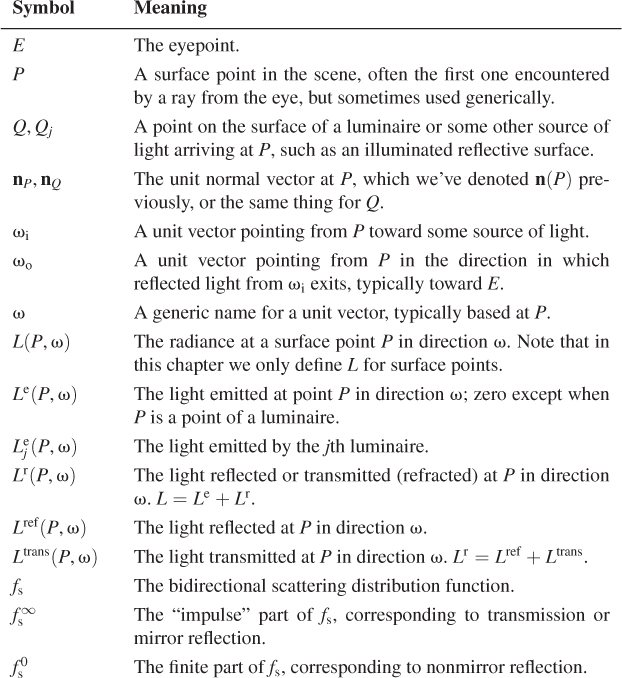

We begin with a very simple path tracer, in which the image plane is divided into rectangular areas, each of which corresponds to a pixel. If a ray toward the eye passes through the (i, j)th rectangle, we treat the radiance as a sample of the radiance arriving at that rectangle. Despite the simplicity of the path tracer, we’ll use a lot of symbols, which we list in Table 32.1; we’ll define each one as we encounter it.

Let’s suppose that there are k luminaires in the scene, each producing an emitted radiance field (Q, ω) ![]() Lej(Q, ω), (j = 1, ..., k) which for any point-vector pair (Q, ω) with Q on a surface and ω · nQ > 0 is zero, except for points on the jth luminaire, and directions ω in which the light emits radiance. Most often this radiance field will be Lambertian, that is, Lej(Q, ω) will be a constant for Q on the luminaire and any ω with ω · nQ > 0; it’s zero otherwise. But for now, we’ll just assume that it’s a general light field.

Lej(Q, ω), (j = 1, ..., k) which for any point-vector pair (Q, ω) with Q on a surface and ω · nQ > 0 is zero, except for points on the jth luminaire, and directions ω in which the light emits radiance. Most often this radiance field will be Lambertian, that is, Lej(Q, ω) will be a constant for Q on the luminaire and any ω with ω · nQ > 0; it’s zero otherwise. But for now, we’ll just assume that it’s a general light field.

Furthermore, let’s assume that all surfaces are opaque—the only scattering that takes place is reflection. The change to include transmission will be relatively minor.

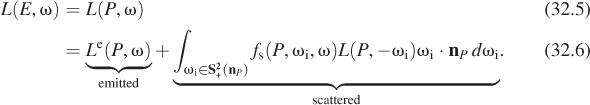

The rendering equation tells us that if P is the first point at which the ray t ![]() E – tω hits the geometry in the scene (see Figure 32.7), then

E – tω hits the geometry in the scene (see Figure 32.7), then

We can rewrite the second term as a sum by splitting the L in the integrand into two parts. As in Chapter 31, we let Lr = L – Le denote the reflected light (later, it will be reflected and refracted light) in the scene. At most surface points, Lr = L, because most points are not emitters. At emitters, however, Le is nonzero, so Lr and L differ. Thus,

Explain why, for a point Q on some luminaire and some direction ω, Lr(Q, ω) might be nonzero.

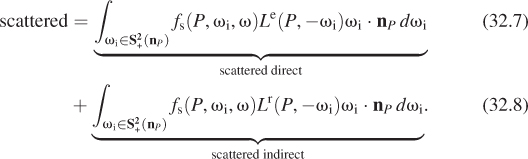

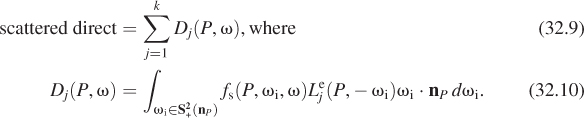

The first integral, representing scattered direct light, can be further expanded. We write ![]() as a sum of the illuminations due to the k individual luminaires, so that

as a sum of the illuminations due to the k individual luminaires, so that

Thus, Dj(P, ω) represents the light reflected from P in direction ω due to direct light from source j.

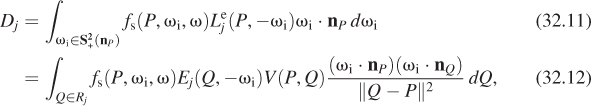

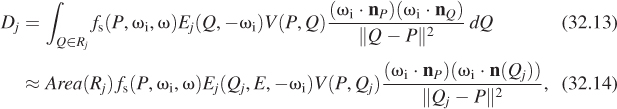

Rather than computing Dj by integrating over all directions ωi in ![]() , we can simplify by integrating over only those directions where there’s a possibility that Le(P, –ωi) will be nonzero, that is, directions pointing toward the jth luminaire. We do so by switching to an area integral over the region Rj constituting the jth luminaire; the change of variables introduces the Jacobian we saw in Section 26.6.5:

, we can simplify by integrating over only those directions where there’s a possibility that Le(P, –ωi) will be nonzero, that is, directions pointing toward the jth luminaire. We do so by switching to an area integral over the region Rj constituting the jth luminaire; the change of variables introduces the Jacobian we saw in Section 26.6.5:

where ωi = S(Q – P) is the unit vector from P toward Q, and we have introduced the visibility term V(P, Q) in case the point Q is not visible from P. (Note that this transformation converts our version of the rendering equation into the form written by Kajiya [Kaj86].) The preceding argument only works for area luminaires. In the case of a point luminaire, this integral must be computed by a limit as in Chapter 31.

For an area luminaire, we estimate the integral with a single-sample Monte Carlo estimate:

where Qj is a single point chosen uniformly with respect to area on the region Rj that constitutes the jth source.

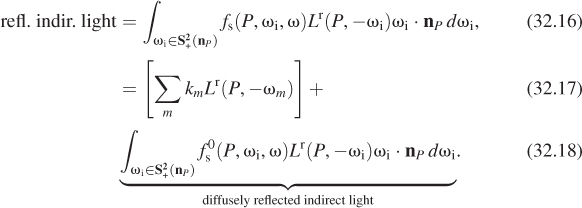

The second integral, representing the scattering of indirect light, can also be split into two parts, by decomposing the function ωi ![]() fs(P, ωi, ω) in the integrand into a sum,

fs(P, ωi, ω) in the integrand into a sum,

where ![]() represents the impulses like mirror reflection (and later, Snell’s law transmission), and

represents the impulses like mirror reflection (and later, Snell’s law transmission), and ![]() is the nonimpulse part of the scattering distribution (i.e., fs is a real-valued function rather than a distribution). Each impulse can be represented by (1) a direction (the direction ωi such that –ωi either reflects or transmits to ω at P), and (2) an impulse magnitude 0 ≤ k ≤ 1, by which the incoming radiance in direction –ωi is multiplied to get the outgoing radiance in direction ω. We’ll index these by the letter m (where m = 1 is reflection and m = 2 is transmission). Thus, we can write

is the nonimpulse part of the scattering distribution (i.e., fs is a real-valued function rather than a distribution). Each impulse can be represented by (1) a direction (the direction ωi such that –ωi either reflects or transmits to ω at P), and (2) an impulse magnitude 0 ≤ k ≤ 1, by which the incoming radiance in direction –ωi is multiplied to get the outgoing radiance in direction ω. We’ll index these by the letter m (where m = 1 is reflection and m = 2 is transmission). Thus, we can write

Finally, we can again estimate that last integral—the diffusely reflected indirect light—by a single-sample Monte Carlo estimate: We pick a direction ωi according to some probability density on the hemisphere (or the whole sphere, when we’re considering refraction as well as reflection), and estimate the integral with

Note that while the BRDF doesn’t literally make sense for an impulse-like mirror reflection, the computation we perform to compute mirror-reflected radiance has a form remarkably similar to that of Equation 32.19. We wrote it (Equation 32.17) in the form

where ω1 was the reflection of ωi (ω2 was the transmitted direction). The coefficient k1 plays the same role as the coefficient

of the radiance in the current case. In each case, we simply need our representation of the BRDF to be able to return the appropriate coefficient.

32.5.2. Path-Tracer Code

The central code in the path tracer is shown in Listing 32.4.

Listing 32.4: The core procedure in a path tracer.

1 Radiance3 App::pathTrace(const Ray& ray, bool isEyeRay) {

2 // Compute the radiance BACK along the given ray.

3 // In the event that the ray is an eye-ray, include light emitted

4 // by the first surface encountered. For subsequent rays, such

5 // light has already been counted in the computation of direct

6 // lighting at prior hits.

7

8 Radiance3 L_o(0.0f);

9

10 SurfaceElement surfel;

11 float dist = inf();

12 if (m_world->intersect(ray, dist, surfel)) {

13 // this point could be an emitter...

14 if (isEyeRay && m_emit)

15 L_o += surfel.material.emit;

16

17 // Shade this point (direct illumination)

18 if ( (!isEyeRay) || m_direct) {

19 L_o += estimateDirectLightFromPointLights(surfel, ray);

20 L_o += estimateDirectLightFromAreaLights(surfel, ray);

21 }

22 if (!(isEyeRay) || m_indirect) {

23 L_o += estimateIndirectLight(surfel, ray, isEyeRay);

24 }

25 }

26

27 return L_o;

28 }

The broad strokes of this procedure match the path-tracing algorithm fairly closely. Not shown is the outer loop that, for each pixel in the image, creates a ray from the eye through that pixel and then calls the pathTrace procedure (perhaps doing so multiple times per pixel and taking a [possibly weighted] average of the results).

The computation consists of five parts: finding where the ray meets the scene (and storing the intersection in a SurfaceElement called surfel), and then summing up emitted radiance, radiance due to direct lighting from area lights, radiance due to direct lighting from point lights, and a recursive term, all evaluated at the intersection point. The inclusion of each term is governed by a flag (m_emit, m_direct, m_indirect) that lets us experiment with the program easily when we’re debugging. If we turn off direct and indirect light, it’s really easy to tell whether the lamps themselves look correct, for instance.

Let’s look at the four terms individually. The emissive term simply takes the emitted radiance at the surface point, called surfel.geometric.position in the code, but which we’ll call P in this description, and adds it to the computed radiance. This assumes that the surface is a Lambertian emitter so that the outgoing radiance in every direction from P is the same. If instead of having a constant outgoing radiance, the emitted radiance depended on direction, we might have written:

1 if (includeEmissive) {

2 L_o += surfel.material.emittedRadianceFunction(-ray.direction);

3 }

where the emitted radiance function describes the emission pattern. Notice that we compute the emission in the opposite of the ray direction; the ray goes from the eye toward the surface, but we want to know the radiance from the surface toward the eye.

We add to this emitted radiance the reflection of direct light (i.e., light that goes from a luminaire directly to P, and that scatters back along our ray), and the reflection of indirect light (i.e., all light leaving the intersection point that’s neither emitted light nor scattered direct light).

To compute the direct lighting from point lights (see Listing 32.5), we determine a unit vector w_i from the surface to the luminaire, and check visibility; if the luminaire is visible from the surface, we use w_i in computing the reflected light. This follows a convention we’ll use consistently: The variable w_i corresponds to the mathematical entity ωi; the letter “i” indicates “incoming”; the ray ωi points from the surface toward the source of the light, and ωo points in the direction along which it’s scattered. This means that the variable w_i will be the first argument to surfel,evaluateBSDF(...), and often a variable w_o will be the second argument. This convention matters: While the finite part of the BRDF is typically symmetric in its two arguments, both the mirror-reflectance and transmissive portions of scattering are often represented by nonsymmetric functions.

Listing 32.5: Reflecting illumination from point lights.

1 Radiance3 App::estimateDirectLightFromPointLights(

2 const SurfaceElement& surfel, const Ray& ray){

3

4 Radiance3 L_o(0.0f);

5

6 if (m_pointLights) {

7 for (int L = 0; L < m_world->lightArray.size(); ++L) {

8 const GLight& light = m_world->lightArray[L];

9 // Shadow rays

10 if (m_world->lineOfSight(

11 surfel.geometric.location + surfel.geometric.normal * 0.0001f,

12 light.position.xyz())) {

13 Vector3 w_i = light.position.xyz() - surfel.shading.location;

14 const float distance2 = w_i.squaredLength();

15 w_i /= sqrt(distance2);

16

17 // Attenuated radiance

18 const Irradiance3& E_i = light.color / (4.0f * pif() * distance2);

19

20 L_o += (surfel.evaluateBSDF(w_i, -ray.direction()) * E_i *

21 max(0.0f, w_i.dot(surfel.shading.normal)));

22 debugAssert(radiance.isFinite());

23 }

24 }

25 }

26 return L_o;

27 }

There are three slightly subtle points highlighted in the code. The first is that we don’t ask whether the luminaire is visible from the surface point; as we discussed earlier, we have to ask whether it’s visible from a slightly displaced surface point, which we compute by adding a small multiple of the surface normal to the surface-point location. The second is that we make sure that the direction from P to the luminaire and the surface normal at P point in the same hemisphere; otherwise, the surface can’t be lit by the luminaire. This test might seem redundant, but it’s not, for two reasons (see Figure 32.8). One is that the surface point might be at the very edge of a surface, and therefore be visible to a luminaire that’s below the plane of the surface. The other is that the normal vector we use in this “checking for illumination” step is the shading normal rather than the geometric normal. Since we actually compute the dot product with the shading normal, this can result in smoothly varying shading over a not-very-finely tessellated surface.

This is another general pattern: During computations of visibility, we’ll use the geometric data associated with the surface element. But during computations of light scattering, we’ll use surfel.shading.location. In general, our representation of the surface point has both geometric and shading data: The geometric data is that of the raw underlying mesh, while the shading data is what’s used in scattering computations. For instance, if the surface is displacement-mapped, the shading location may differ slightly from the geometric location. Similarly, while the geometric normal vector is constant across each triangular face, the shading normal may be barycentrically interpolated from the three vertex normals at the triangular face’s vertices.

The third subtlety is the computation of the radiance. As we discussed in Chapter 31, if we treat the point luminaire as a limiting case of a small, uniformly emitting spherical luminaire, the outgoing radiance resulting from reflecting this light is a product of a BRDF term, a cosine, and a radiance that varies with the distance from the luminaire; we called that E_i in the program. (We’ve also, as promised, ignored specular scattering of point lights.)

When we turn our attention to area luminaires (see Listing 32.6), much of the code is identical. Once again, we have a flag, m_areaLights, to determine whether to include the contribution of area lights. To estimate the radiance from the area luminaire, we sample one random point on the source, that is, we form a single-sample estimate of the illumination. Of course, this has high variance compared to sampling many points on the luminaire, but in a path tracer we typically trace many primary rays per pixel so that the variance is reduced in the final image. When testing visibility, we again slightly displace the point on the source as well as the point on the surface. Other than that, the only subtlety is in the estimation of the outgoing radiance. Since our light’s samplePoint samples uniformly with respect to area, we have to do a change of variables, and include not only the cosine at the surface point but also the corresponding cosine at the luminaire point, and the reciprocal square of the distance between them. By line 23, we’ve used these ideas to estimate the radiance from the area light scattered at P, except for impulse scattering, because evaluateBSDF returns only the finite portion of the BSDF.

At line 26 we take a different approach for impulse scattering: We compute the impulse direction, and trace along it to see whether we encounter an emitter, and if so, multiply the emitted radiance by the impulse magnitude to get the scattered radiance.

Listing 32.6: Reflecting illumination from area lights.

1 Radiance3 App::estimateDirectLightFromAreaLights(const SurfaceElement& surfel,

const Ray& ray){

2 Radiance3 L_o(0.0f);

3 // Estimate radiance back along ray due to

4 // direct illumination from AreaLights

5 if (m_areaLights) {

6 for (int L = 0; L < m_world->lightArray2.size(); ++L) {

7 AreaLight::Ref light = m_world->lightArray2[L];

8 SurfaceElement lightsurfel = light->samplePoint(rnd);

9 Point3 Q = lightsurfel.geometric.location;

10

11 if (m_world->lineOfSight(surfel.geometric.location +

12 surfel.geometric.normal * 0.0001f,

13 Q + 0.0001f * lightsurfel.geometric.normal)) {

14 Vector3 w_i = Q - surfel.geometric.location;

15 const float distance2 = w_i.squaredLength();

16 w_i /= sqrt(distance2);

17

18 L_o += (surfel.evaluateBSDF(w_i, -ray.direction()) *

19 (light->power()/pif()) * max(0.0f, w_i.dot(surfel.shading.normal))

20 * max(0.0f, -w_i.dot(lightsurfel.geometric.normal)/distance2));

21 debugAssert(L_o.isFinite());

22 }

23 }

24 if (m_direct_s) {

25 // now add in impulse-reflected light, too.

26 SmallArray<SurfaceElement::Impulse, 3> impulseArray;

27 surfel.getBSDFImpulses(-ray.direction(), impulseArray);

28 for (int i = 0; i < impulseArray.size(); ++i) {

29 const SurfaceElement::Impulse& impulse = impulseArray[i];

30 Ray secondaryRay = Ray::fromOriginAndDirection(

31 surfel.geometric.location, impulse.w).bumpedRay(0.0001f);

32 SurfaceElement surfel2;

33 float dist = inf();

34 if (m_world->intersect(secondaryRay, dist, surfel2)) {

35 // this point could be an emitter...

36 if (m_emit) {

37 radiance += surfel2.material.emit * impulse.magnitude;

38 }

39 }

40 }

41 }

42 }

43 return L_o;

44 }

At this point we’ve computed the emissive term of the rendering equation, and the reflected term, at least for light arriving at P directly from luminaires. We now must consider light arriving at P from all other sources, that is, light from some point Q that arrives at P having been reflected at Q rather than emitted. Such light is reflected at P to contribute to the outgoing radiance from P back toward the eye. Once again, we estimate this incoming indirect radiance with a single sample. To do so, we use our path-tracing code recursively. We build a ray starting at (or very near) P, going in some random direction ω into the scene; we use our path tracer to tell us the indirect radiance back along this ray, and reflect this, via the BRDF, into radiance transported from P toward the eye. Of course, in this case, we must not include in the computed radiance the light emitted directly toward P—we’ve already accounted for that. We therefore set includeEmissive to false at line 24. Listing 32.7 show this.

Listing 32.7: Estimating the indirect light scattered back along a ray.

1 Radiance3 App::estimateIndirectLight(

2 const SurfaceElement& surfel, const Ray& ray, bool isEyeRay){

3 Radiance3 L_o(0.0f);

4 // Use recursion to estimate light running back along ray

5 // from surfel, but ONLY light that arrives from

6 // INDIRECT sources, by making a single-sample estimate

7 // of the arriving light.

8

9 Vector3 w_o = -ray.direction();

10 Vector3 w_i;

11 Color3 coeff;

12 float eta_o(0.0f);

13 Color3 extinction_o(0.0f);

14 float ignore(0.0f);

15

16 if (!(isEyeRay) || m_indirect) {

17 if (surfel.scatter(w_i, w_o, coeff, eta_o, extinction_o, rnd, ignore)) {

18 float eta_i = surfel.material.etaReflect;

19 float refractiveScale = (eta_i / eta_o) * (eta_i / eta_o);

20

21 L_o += refractiveScale * coeff *

22 pathTrace(Ray(surfel.geometric.location, w_i).bumpedRay(0.0001f *

23 sign(surfel.geometric.normal.dot( w_i)),

24 surfel.geometric.normal), false);

25 }

26 }

27 return L_o;

28 }

The great bulk of the work is done in surfel.scatter(), which takes a ray r arriving at a point and either absorbs it or determines an outgoing direction r′ for it, and a coefficient by which the radiance arriving along r′ (i.e., the radiance L(P, –r′)) should be multiplied to generate a single-sample estimate of the scattered radiance at P in direction –r.

Before examining the scatter() code, let’s review that description more closely. First, scatter() can be used either in ray/path tracing or in photon tracing. The second use is perhaps more intuitive: We have a bit of light energy arriving at a surface, and it is either absorbed or scattered in one or more directions. The scatter() procedure is used to simulate this process. If the absorption at the surface is, say, 0.3, then 30% of the time scatter() will return false. The other 70% of the time it will return true and set the value of ωo. Given the direction ωi toward the source of the light, the probability of picking a particular direction ωo (at least for surfaces with no mirror terms or transmissive terms) for the scattered light is roughly proportional to fs(ωi, ωo). In an ideal world, it would be exactly proportional. In ours, it’s generally not, but the returned coefficient contains a fs(ωi, ωo)/p(ωo) factor, where p(ωo) is the probability of sampling ωo, which compensates appropriately.

What happens if there is a mirror reflection? Let’s say that 30% of the time the incoming light is absorbed, 50% of the time it’s mirror-reflected, and the remaining 20% of the time it’s scattered according to a Lambertian scattering model. In this situation, scatter() will return false 30% of the time. Fifty percent of the time it will return true and set ωo to be the mirror-reflection direction, and the remaining 20% of the time ωo will be distributed on the hemisphere with a cosine-weighted distribution (i.e., with high probability of being emitted in the normal direction and low probability of being emitted in a tangent direction).

Let’s see this in practice, and look at the start of G3D’s scatter method in Listing 32.8.

Listing 32.8: The start of the scatter method.

1 bool SurfaceElement::scatter

2 (const Vector3& w_i,

3 Vector3& w_o,

4 Color3& weight_o, // coeff by which to multiply sample in path-tracing

5 float& eta_o,

6 Color3& extinction_o,

7 Random& random,

8 float& density) const {

9

10 const Vector3& n = shading.normal;

11

12 // Choose a random number on [0, 1], then reduce it by each kind of

13 // scattering’s probability until it becomes negative (i.e., scatters).

14 float r = random.uniform();

15

16 if (material.lambertianReflect.nonZero()) {

17 float p_LambertianAvg = material.lambertianReflect.average();

18 r -= p_LambertianAvg;

19

20 if (r < 0.0f) {

21 // Lambertian scatter

22 weight_o = material.lambertianReflect / p_LambertianAvg;

23 w_o = Vector3::cosHemiRandom(n, random);

24 density = ...

25 eta_o = material.etaReflect;

26 extinction_o = material.extinctionReflect;

27 debugAssert(power_o.r >= 0.0f);

28

29 return true;

30 }

31 }

32 ...

As you can see, the material has a lambertianReflect member, which indicates reflectance in each of three color bands;2 the average of these gives a probability p of a Lambertian scattering of the incoming light. If the random value r is less than p, we produce a Lambertian-scattered ray; if not, we subtract that probability from r and move on to the next kind of scattering.

2. We’ll call these “red,” “green,” and “blue” in keeping with convention, but with no important change in the implementation, we could record five or seven or 20 spectral samples.

Convince yourself that this approach has a probability p of producing a Lambertian-scattered ray.

The actual scattering is fairly straightforward: The cosHemiRandom method produces a vector with a cosine-weighted distribution in the hemisphere whose pole is at n. The method also returns the index of refraction (both real and imaginary parts) of the material on the n side of the intersection point, and a coefficient, (called weight_o here) that is precisely the number we’ll need to use when we do Monte Carlo estimation of the reflected radiance. (The returned value density is not the probability density, but a rather different value included for the benefit of other algorithms, and we ignore it.)

The remainder of the scattering code is similar. Recall that the reflection model we’re using is a weighted sum of a Lambertian, a glossy component, and a transmissive component, where the weights sum to one or less. If they sum to less than one, there’s some absorption. The weights are specified for R, G, and B, and the sum must be no more than one in each component.

Listing 32.9: Further scattering code.

1 Color3 F(0, 0, 0);

2 bool Finit = false;

3

4 if (material.glossyReflect.nonZero()) {

5

6 // Cosine of the angle of incidence, for computing Fresnel term

7 const float cos_i = max(0.001f, w_i.dot(n));

8 F = computeF(material.glossyReflect, cos_i);

9 Finit = true;

10

11 const Color3& p_specular = F;

12 const float p_specularAvg = p_specular.average();

13

14 r -= p_specularAvg;

15 if (r < 0.0f) { // Glossy (non-mirror) case

16 if (material.glossyExponent != finf()) {

17 float intensity = (glossyScatter(w_i, material.glossyExponent,

18 random, w_o) / p_specularAvg);

19 if (intensity <= 0.0f) {

20 // Absorb

21 return false;

22 }

23 weight_o = p_specular * intensity;

24 density = ...

25

26 } else {

27 // Mirror

28

29 w_o = w_i.reflectAbout(n);

30 weight_o = p_specular * (1.0f / p_specularAvg);

31 density = ...

32 }

33

34 eta_o = material.etaReflect;

35 extinction_o = material.extinctionReflect;

36 return true;

37 }

38 }

The glossy portion of the model has an exponent that can be any positive number or infinity. If it’s infinity, then we have a mirror reflection; otherwise, we have a Blinn-Phong-like reflection, which is scaled by a Fresnel term, F. Listing 32.9 shows this code.

Finally, we compute the transmissive scattering due to refraction, with the code shown in Listing 32.10. The only subtle point is that the Fresnel coefficient for the transmitted light is one minus the coefficient for the reflected light.

Listing 32.10: Scattering due to transmission.

1 ...

2 if (material.transmit.nonZero()) {

3 // Fresnel transmissive coefficient

4 Color3 F_t;

5

6 if (Finit) {

7 F_t = (Color3::one() - F);

8 } else {

9 // Cosine of the angle of incidence, for computing F

10 const float cos_i = max(0.001f, w_i.dot(n));

11 // Schlick approximation.

12 F_t.r = F_t.g = F_t.b = 1.0f - pow5(1.0f - cos_i);

13 }

14

15 const Color3& T0 = material.transmit;

16

17 const Color3& p_transmit = F_t * T0;

18 const float p_transmitAvg = p_transmit.average();

19

20 r -= p_transmitAvg;

21 if (r < 0.0f) {

22 weight_o = p_transmit * (1.0f / p_transmitAvg);

23 w_o = (-w_i).refractionDirection(n, material.etaTransmit,

material.etaReflect);

24 density = p_transmitAvg;

25 eta_o = material.etaTransmit;

26 extinction_o = material.extinctionTransmit;

27

28 // w_o is zero on total internal refraction

29 return ! w_o.isZero();

30 }

31 }

32

33 // Absorbed

34 return false;

35 }

The code in Listing 32.10 is messy. It’s full of branches, and there are several approximations and apparently ad hoc tricks, like the Schlick approximation of the Fresnel coefficient, and the setting of the cosine of the incident angle to be at least 0.001, embedded in it. This is typical of scattering code. Scattering is a messy process, and we must expect the code to reflect this, but the messiness also arises from the challenges of floating-point arithmetic on machines of finite precision. Perhaps a more positive view is that the code will be called many times with many different parameter values, and it’s important that it be robust regardless of this wide range of inputs.

There is an alternative, however. If we actually know microgeometry perfectly, and we know the index of refraction for every material, we can compute scattering relatively simply—there’s a reflection term and a transmission term, and what’s neither reflected nor transmitted is absorbed. As long as the microgeometry is of a scale somewhat larger than the wavelength of light that we’re scattering, this provides a complete model. Unfortunately, at present it’s an impractical one, for several reasons. First, representing microgeometry at the scale of microfacets requires either an enormous amount of data or, if it’s generated procedurally, an enormous amount of computation. Second, if we accurately represent microgeometry, then every surface becomes mirrorlike at a small enough scale. To get the appearance of diffuse reflection at a point P requires that thousands of rays hit the surface near P, each scattering in its own direction. Ray-tracing a piece of chalk suddenly requires a thousand rays per pixel instead of just one! Third, the exact index of refraction for many materials is unknown or hard to measure, especially the coefficient of extinction.

Our representation of scattering by summary statistics like the diffuse coefficient is a way to take this intractable model and make it workable, with only slight losses in fidelity, based on the observation that the precise microgeometry almost never matters in the final rendering; if we render 20 pieces of chalk with the same macrogeometry, they’ll all look essentially identical.

There’s a third alternative between these two: You can store measured BRDF data. Storing such data, at a reasonably fine level of detail, can be expensive. (If your material has a glossy highlight that resembles the one produced by a Phong exponent of 1000, then the BRDF drops from its peak value to half that value in about 7°, suggesting that you might need to sample at least every 2° to faithfully capture it, requiring about 17,000 samples.) Drawing a direction ω with probability proportional to ω ![]() fs(ωi, ω) is far more problematic, but it is feasible. You might think that you could have the best of both worlds by choosing an explicit parametric representation (e.g., spherical harmonics, or perhaps some generalized Phong-like model) and finding the best fit to the measured data. This is a fairly common practice in the film industry today, and it works well for some materials like metals, but it can produce huge errors for diffuse surfaces when you use common analytic models that fail to model subsurface effects accurately [NDM05]. Nonetheless, it’s currently an active area of research, one with considerable promise for simplifying the computation of the scattering integral.

fs(ωi, ω) is far more problematic, but it is feasible. You might think that you could have the best of both worlds by choosing an explicit parametric representation (e.g., spherical harmonics, or perhaps some generalized Phong-like model) and finding the best fit to the measured data. This is a fairly common practice in the film industry today, and it works well for some materials like metals, but it can produce huge errors for diffuse surfaces when you use common analytic models that fail to model subsurface effects accurately [NDM05]. Nonetheless, it’s currently an active area of research, one with considerable promise for simplifying the computation of the scattering integral.

32.5.3. Results and Discussion

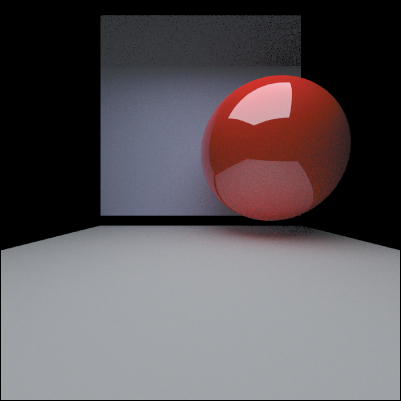

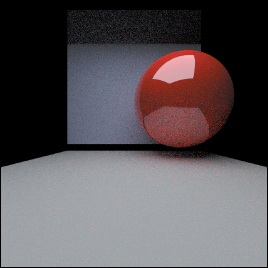

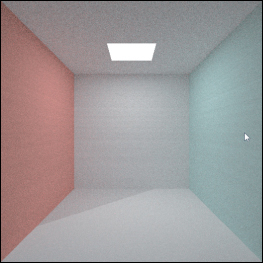

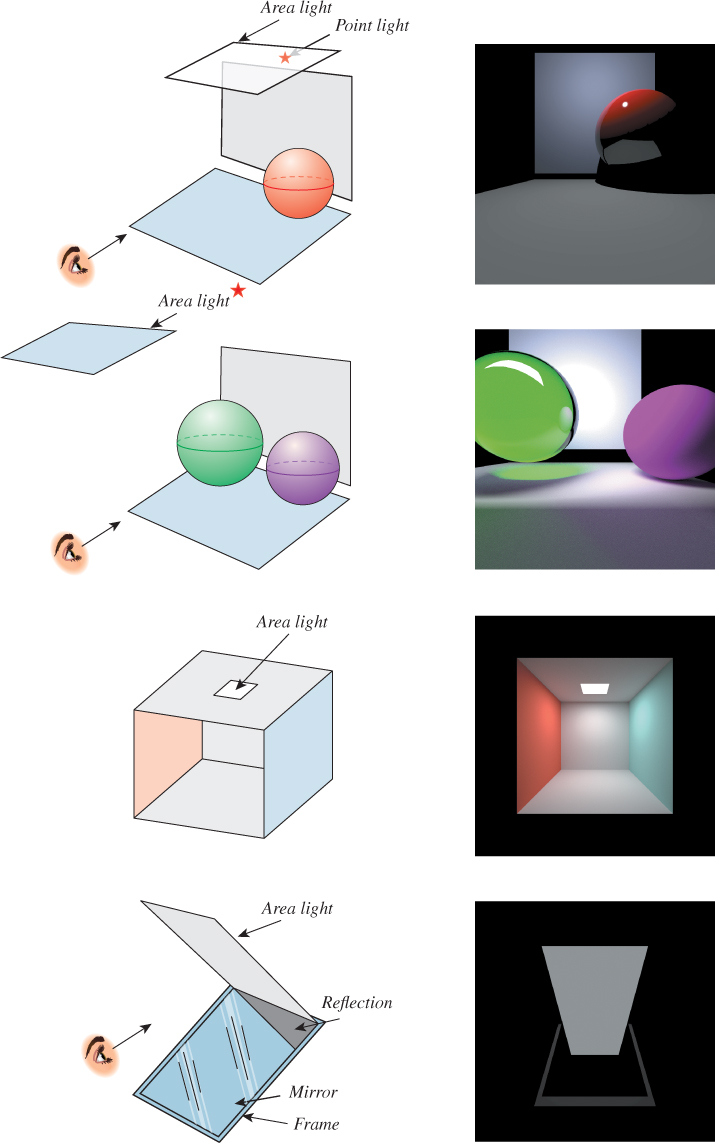

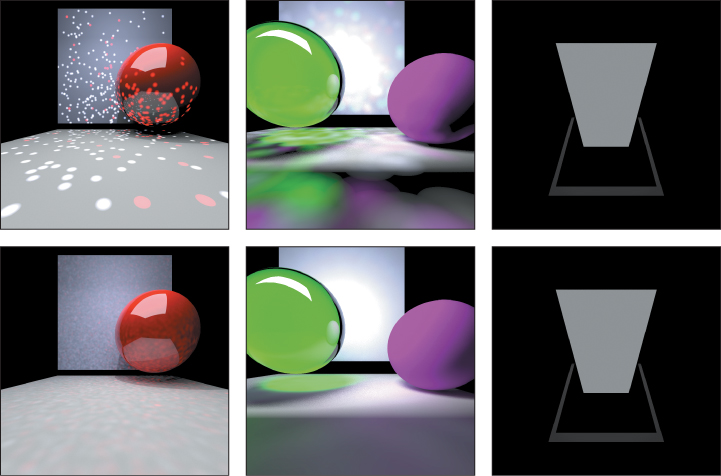

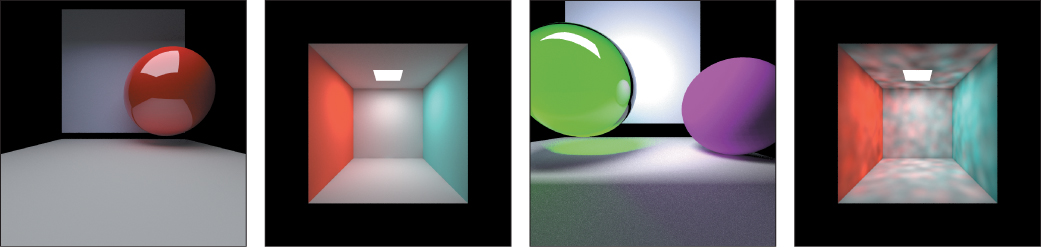

Figure 32.9 shows four simple scenes we’ll use in evaluating renderers, both drawn and ray-traced. The first, with its diffuse floor and back wall, and brightly colored semidiffuse sphere, provides a nice, simple test of bounding volume hierarchy, visibility, and rendering with reflection but not transmission. Since most of the scattering in the scene is diffuse, it only provides limited testing of scattering from multiple surfaces: We can’t visually check multiple interobject reflections the way we could in a scene with 12 mirrored spheres, for instance.

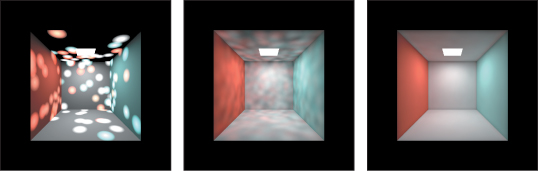

Figure 32.9: Four simple scenes, with their rendered versions shown to the right. The scene at the top is ray-traced (and hence the light comes from the point source; the area source is ignored); the three remaining scenes are path-traced to show the effects of the area luminaires.

In the second scene, we’ve added a transparent sphere whose refractive index is somewhat greater than that of air, to let us verify that transmissive scattering is working properly. (By the way, you can add a perfectly transmissive and completely nonreflective sphere with refractive index 1.0 to a scene, and it should make no difference to the scene’s appearance. Of course, if your renderer bounds the number of ray-surface interactions, it may have an effect on the rendering nonetheless.) The transparent sphere reflects some light from the other sphere, reflects some light onto it, and generates a diffuse pattern of light on the floor. None of these effects (color bleeding between diffuse surfaces, the caustic, or reflected light on the solid sphere) would be visible in a ray-traced version of the scene.

The third scene is the Cornell box, a standard test scene with diffuse surfaces, and an area light, in which color bleeding and multiple inter-reflections are evident in an accurate rendering, but are missing from the ray tracing.

The final scene consists of a large area light tilted toward the viewer, and a large mirror below it, also tilted toward the viewer, with a diffuse rectangle behind it to form a border for the mirror. The viewer sees not only the light, but also its reflection in the perfect mirror. Together these give the appearance of a single long continuous rectangle.

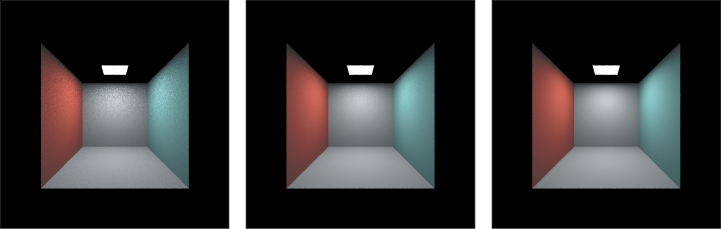

Figure 32.10 shows a path-traced version of the first scene. There are some obvious differences between this and the ray-traced scene. First, the area light, which we ignored in the ray tracing, has been included in the path tracing. Second, there’s noise in the path-traced image—everything looks somewhat speckled. We’ll return to this presently. Third, the shadows are softer. Light is reflecting from the floor onto the sphere, lighting the lower half somewhat, which in turn helps light the shadowed part of the floor. Fourth, there is color bleeding: The pinkish color on the floor and back wall is from light that’s reflected from the sphere.

The softened shadows and color bleeding are what you should expect when you consider how path tracing works. The noise, however, seems like a serious drawback. On the other hand, the ray-traced version exhibits aliasing, especially on the shadow edges. That’s because in the ray tracer, the rays from the eye through two nearby pixels end up reflecting from (or refracting through) the sphere in almost parallel directions. In the path tracer, there’s a coin toss: About 80% of the time a ray hitting the sphere, for instance, is reflected in a specific direction, and just as in ray tracing, nearby rays are refracted to nearby rays. But there’s also a 20% chance of absorption. If we trace, say, ten primary rays per pixel, it’s reasonable to expect seven, eight, nine, or ten of these rays to be reflected (i.e., from zero to four of them to be absorbed). That’ll lead to adjacent pixels having quite different radiance sums. To reduce this variance between adjacent pixels, we need to send quite a lot of primary rays (perhaps hundreds or thousands per pixel). You can even use the notion of confidence intervals from statistics to determine a number of samples so large that the fraction of absorbed rays is very nearly the absorption probability so that interpixel variation is small enough to be beneath the threshold of observation. In fact, Figure 32.10 was rendered with 100 primary rays per pixel, and despite this, the reflection of the floor in the red sphere appears slightly speckled. Figure 32.11 shows the speckle more dramatically.

32.6. Photon Mapping

Let’s now move on to a basic implementation of photon mapping. Recall that the main idea in photon mapping is to estimate the indirect light scattered from diffuse surfaces by shooting photons3 from the luminaires into the scene, recording where they arrive (and from what direction), and then reflecting them onward to be further recorded in subsequent bounces, eventually attenuated by absorption or by having the recursion depth-limited. When it comes time to estimate scattering at a point P of a diffuse surface, we search for nearby photons and use them as estimates of the arriving light at P, which we then push through the reflectance process to estimate the light leaving P.

3. Recall that a “photon” in photon mapping represents a bit of power emitted by the light, typically representing many physical photons.

Not surprisingly, much of the technology used in the path-tracing code can be reused for photon mapping. In our implementation, we have built a photon-map data structure based on a hash grid (see Chapter 37); as we mentioned in our discussion of photon mapping, any spatial data structure that supports rapid insertion and rapid neighborhood queries can be used instead.

We’ve defined two rather similar classes, EPhoton and IPhoton, to represent photons as they are emitted and when they arrive; the “I” in IPhoton stands for “incoming.” An EPhoton has a position from which it was emitted, and a direction of propagation, which are stored together in a propagation ray, and a power, representing the photon’s power in each of three spectral bands. An IPhoton, by contrast, has a position at which it arrived, a direction to the photon source from that position, and a power. Making distinct classes helps us keep separate the two distinct ways in which the term “photon” is used. In our implementation, an EPhoton is emitted, and its travels through the scene result in one or more IPhotons being stored in the photon map.

The basic structure of the code is to first build the photon map, and then render the scene using it. Listing 32.11 shows the building of the photon map: We construct an array ephotons of photons to be emitted, and then emit each into the scene to generate an array iphotons of incoming photons, and store these in the map m_photonMap.

Listing 32.11: The large-scale structure of the photon-mapping code.

1 main(){

2 set up image and display, and load scene

3 buildPhotonMap();

4 call photonRender for each pixel

5 display the resulting image

6 }

7

8 void App::buildPhotonMap(){

9 G3D::Array<EPhoton> ephotons;

10 LightList lightList(&(m_world->lightArray), &(m_world->lightArray2), rnd);

11 for (int i = 0; i < m_nPhotons; i++) {

12 ephotons.append(lightList.emitPhoton(m_nPhotons));

13 }

14

15 Array<IPhoton> ips;

16 for (int i = 0; i < ephotons.size(); i++) {

17 EPhoton ep = ephotons[i];

18 photonTrace(ep, ips);

19 m_photonMap.insert(ips);

20 }

21 }

The LightList represents a collection of all point lights and area lights in the scene, and can produce emitted photons from these, with the number of photons emitted from each source being proportional to the power of that source. Listings 32.12 and 32.13 show a little bit of how this is done: We sum the power (in the R, G, and B bands) for each light to get a total power emitted. The probability that a photon is emitted by the ith light is then the ratio of its average power (over all bands) to the average of the total power over all bands. These probabilities are stored in an array, with one entry per luminaire.

Listing 32.12: Initialization of the LightList class.

1 void LightList::initialize(void)

2 {

3 // Compute total power in all spectral bands.

4 foreach point or area light

5 m_totalPower += light.power() //totalPower is RGB vector

6

7 // Compute probability of emission from each light

8 foreach point or area light

9 m_probability.append(light.power().average() / m_totalPower.average());

10 }

With these probabilities computed, the only subtlety remaining is selecting a random point on the surface of an area light (see Listing 32.13). If the area light has some known geometric shape (cube, sphere, ...), we can use the obvious methods to sample from it (see Exercise 32.12). On the other hand, if it’s represented by a triangle mesh, we can first pick a triangle at random, with the probability of picking a triangle T proportional to the area of T, and then pick a point in that triangle uniformly at random. Exercise 32.6 shows that generating samples uniformly on a triangle may not be as simple as you think.

The areaLightEmit code uses cosHemiRandom to generate a photon in direction (x, y, z) with probability cos(θ), where θ is the angle between (x, y, z) and the surface normal. Why?

Listing 32.13: Photon emission in the LightList class.

1 // emit a EPhoton; argument is total number of photons to emit

2 EPhoton LightList::emitPhoton(int nEmitted)

3 {

4 u = uniform random variable between 0 and 1

5 find the light i with p0 + ... + pi-1 ≤ u < p0 + ... + pi.

6 if (i < m_nPointLights)

7 return pointLightEmit((*m_pointLightArray)[i], nEmitted, m_probability[i]);

8 else

9 return areaLightEmit((*m_areaLightArray)[i - m_nPointLights], nEmitted,

10 m_probability[i]);

11 }

12

13 EPhoton LightList::pointLightEmit(GLight light, int nEmitted, float prob){

14 // uniformly randomly select a point (x,y,z) on the unit sphere

15 Vector3 direction(x, y, z);

16 Power3 power = light.power() / (nEmitted * prob);

17 Vector3 location = location of the light

18 return EPhoton(location, direction, power);

19 }

20

21 EPhoton LightList::areaLightEmit(AreaLight::Ref light, int nEmitted, float prob){

22 SurfaceElement surfel = light->samplePoint(m_rnd);

23 Power3 power = light->power() / (nEmitted * prob);

24 // select a direction with cosine-weighted distribution around

25 // surface normal. m_rnd is a random number generator.

26 Vector3 direction = Vector3::cosHemiRandom(surfel.geometric.normal, m_rnd);

27

28 return EPhoton(surfel.geometric.location, direction, power);

29 }

What remains is the photon tracing itself (see Listing 32.14). We use a G3D helper class, the Array<IPhoton>, to accumulate incident photons. This class has a fastClear method that simply sets the number of stored values to zero rather than actually deallocating the array; this saves substantial allocation/deallocation overhead. The photonTraceHelper procedure keeps track of the number of bounces that the photon has undergone so far so that the bounce process can be terminated when this reaches the user-specified maximum. Note that in contrast to Jensen’s original algorithm, we store a photon at every bounce, whether it’s diffuse or specular. For estimating radiance at surface points with purely impulsive scattering models (e.g., mirrors), these photons will never be used, however, so there’s no impact on the results.

Once again, there are no real surprises in the program. The scatter method does all the work. We’ve hidden one detail here: The scatter method should be different from the one used in path tracing. If we’re only studying reflection, and only using symmetric BRDFs (i.e., all materials satisfy Helmholtz reciprocity), then the two scattering methods are the same. But in the case of asymmetric scattering (such as Fresnel-weighted transmission), it’s possible that the probability that a photon arriving at P in direction ωi scatters in direction ωo is completely different from the probability that one arriving in direction ωo scatters in direction ωi. Our surface really needs to provide two different scattering methods, one for each situation. In our code, we’ve used the same method for both. That’s wrong, but it’s also very common practice, in part because the effects of making the code right are (a) generally small, and (b) generally not something we’re perceptually sensitive to. You might want to spend a little while trying to imagine a scene in which the distinction between the two scattering rules matters.

Listing 32.14: Tracing photons, which is rather similar to tracing rays or paths.

1 void App::photonTrace(const EPhoton& ep, Array<IPhoton>& ips) {

2 ips.fastClear();

3 photonTraceHelper(ep, ips, 0);

4 }

5

6 /**

7 Recursively trace an EPhoton through the scene, accumulating

8 IPhotons at each diffuse bounce

9 */

10 void App::photonTraceHelper(const EPhoton& ep, Array<IPhoton>& ips, int bounces) {

11 // Trace an EPhoton (assumed to be bumped if necessary)

12 // through the scene. At each intersection,

13 // * store an IPhoton in "ips"

14 // * scatter or die.

15 // * if scatter, "bump" the outgoing ray to get an EPhoton

16 // to use in recursive trace.

17

18 if (bounces > m_maxBounces) {

19 return;

20 }

21

22 SurfaceElement surfel;

23 float dist = inf();

24 Ray ray(ep.position(), ep.direction());

25

26 if (m_world->intersect(ray, dist, surfel)) {

27 if (bounces > 0) { // don’t store direct light!

28 ips.append(IPhoton(surfel.geometric.location, -ray.direction(), ep.power()));

29 }

30 // Recursive rays

31 Vector3 w_i = -ray.direction();

32 Vector3 w_o;

33 Color3 coeff;

34 float eta_o(0.0f);

35 Color3 extinction_o(0.0f);

36 float ignore(0.0f);

37

38 if (surfel.scatter(w_i, w_o, coeff, eta_o, extinction_o, rnd, ignore)) {

39 // managed to bounce, so push it onwards

40 Ray r(surfel.geometric.location, w_o);

41 r = r.bumpedRay(0.0001f * sign(surfel.geometric.normal.dot( w_o)),

42 surfel.geometric.normal);

43 EPhoton ep2(r, ep.power() * coeff);

44 photonTraceHelper(ep2, ips, bounces+1);

45 }

46 }

47 }

One difference between photon propagation and radiance propagation is that at the interface between media with different refractive indices, the radiance changes (because a solid angle on one side becomes a different solid angle on the other), while for photons, which represent power transport through the scene, there is no such change. Thus, there’s no ηi/ηo factor in the photon-tracing code.

Having built the photon map, we must render a picture based on it. Our first version will closely resemble the path-tracing code, in the sense that we’ll break up the computation into direct and indirect light, and handle diffuse and impulse scattering individually. We’ll use a ray-tracing approach (i.e., recursively trace rays until some fixed depth); making the corresponding path-tracing approach is left as an exercise for the reader. The photon map is used only to estimate the diffusely reflected indirect light arriving at a point.

Computing the light arriving at pixel (x, y) of the image, using a ray-tracing and photon-mapping hybrid, is the job of the photonRender procedure, shown in Listing 32.15, along with some of the methods it calls.

Listing 32.15: Generating an image sample for pixel (x, y) from the photon map.

1 void App::photonRender(int x, int y) {

2 Radiance3 L_o(0.0f);

3 for (int i = 0; i < m_primaryRaysPerPixel; i++) {

4 const Ray r = defaultCamera.worldRay(x + rnd.uniform(),

5 y + rnd.uniform(), m_currentImage->rect2DBounds());

6 L_o += estimateTotalRadiance(r, 0);

7 }

8 m_currentImage->set(x, y, L_o / m_primaryRaysPerPixel);

9 }

10

11 Radiance3 App::estimateTotalRadiance(const Ray& r, int depth) {

12 Radiance3 L_o(0.0f);

13 if (m_emit) L_o += estimateEmittedLight(r);

14

15 L_o += estimateTotalScatteredRadiance(r, depth);

16 return L_o;

17 }

18

19 Radiance3 App::estimateEmittedLight(Ray r){

20 ...declarations...

21 if (m_world->intersect(r, dist, surfel))

22 L_o += surfel.material.emit;

23 return L_o;

24 }

To generate a measurement at pixel (x, y) we shoot m_primaryRaysPerPixel into the scene, and estimate the radiance returning along each ray. It’s possible that a ray hits a light source; if so, the source’s radiance (EmittedLight) must be counted in the total radiance returning along the ray, along with any light reflected from the luminaire.

We’ve ignored the case where the ray hits a point luminaire (point sources have no geometry in our scene descriptions, so such an intersection is never reported). There are two reasons for this. The first is that the intersection of the ray and the point light is an event with (mathematical) probability zero, so in an ideal program with perfect precision, it should never occur. This is a frequently used but somewhat specious argument: First, the discrete nature of floating-point numbers makes probability-zero events occur with very small, but nonzero, frequency. Second, models like point-lighting are usually taken as a kind of “limit” of nonzero-probability things (like small, disk-shaped lights); if the limit is to make any sense, the effect of the limiting case should be the limit of the effects in the nonlimiting cases. If the disk-shaped lights are given values that increase with the inverse square of the radius, then these nonlimiting cases may produce nonzero effects, which should show up in the limiting case as well.

The other, far more practical, reason for not letting rays hit point lights is that point lights are an abstract approximation to reality, used for convenience, and if you want them to be visible in your scene you can model them as small spherical lights. In our test cases, there are no point lights visible from the eye, so the issue is moot.

In general, not only might light be emitted by the place where the ray meets the scene, but also it may be scattered from there as well. The estimateTotalScatteredRadiance procedure handles this (see Listing 32.16) by summing up the direct light, impulse-scattered indirect light, and diffusely reflected direct light.

Listing 32.16: Estimating the total radiance scattered back toward the course of the ray r.

1 Radiance3 App::estimateTotalScatteredRadiance(const Ray& r, int depth){

2 ...

3 if (m_world->intersect(r, dist, surfel)) {

4 L_o += estimateReflectedDirectLight(r, surfel, depth);

5 if (m_IImp || depth > 0) L_o +=

6 estimateImpulseScatteredIndirectLight(r, surfel, depth + 1);

7 if (m_IDiff || depth > 0) L_o += estimateDiffuselyReflectedIndirectLight(r, surfel);

8 }

9 return L_o;

10 }

In each case, there’s a Boolean control for whether to include this aspect of the light. We’ve chosen to apply this control only to the first reflection (i.e., if m_IImp is false, then impulse-scattered indirect light is ignored only when it goes directly to the eye).

Listing 32.17: Impulse-scattered indirect light.

1 Radiance3 App::estimateImpulseScatteredIndirectLight(const Ray& ray,

2 const SurfaceElement& surfel, int depth){

3 Radiance3 L_o(0.0f);

4

5 if (depth > m_maxBounces) {

6 return L_o;

7 }

8

9 SmallArray<SurfaceElement::Impulse, 3> impulseArray;

10

11 surfel.getBSDFImpulses(-ray.direction(), impulseArray);

12 foreach impulse

13 const SurfaceElement::Impulse& impulse = impulseArray[i];

14

15 Ray r(surfel.geometric.location, impulse.w);

16 r = r.bumpedRay(0.0001f * sign(surfel.geometric.normal.dot( r.direction())),

17 surfel.geometric.normal);

18 L_o += impulse.magnitude* estimateTotalScatteredRadiance(r, depth + 1);

19

20 return L_o;

21 }