Chapter 31. Computing Solutions to the Rendering Equation: Theoretical Approaches

31.1. Introduction

In this chapter we discuss the theory of solving the rendering equation, concentrating on the mathematics of various approaches and on what kinds of approximations are involved in these approaches, deferring the implementation details to the next chapter. Fortunately, much of the mathematics can be understood by analogy with far simpler problems. When we render, we’re trying to compute values of L, the radiance field, or expressions involving combinations (typically integrals) of many values of L. Thus, the unknown is the whole function L. That’s in sharp contrast to the equations like

that we see in algebra class, where the unknown, x, is a single number. Nonetheless, such simple equations provide a useful model for the approximations made in the more complicated task of finding L; we discuss these first, and then go on to apply these ideas to rendering.

31.2. Approximate Solutions of Equations

There’s no hope of solving the rendering equation exactly for any scene with even a moderate degree of complexity. Instead, we are forced to approximate solutions. There are four common forms of approximation that are routinely used in graphics:

• Approximating the equation

• Restricting the domain

• Using statistical estimators

• And bisection/Newton’s method

Because the last of these is not used much in rendering, it’ll get brief treatment. The statistical approach, however, which now dominates rendering, will occupy much of the rest of the chapter.

We’ll discuss these in the context of a much simpler problem: Find a positive real number x for which

The numerical solution of this equation is x = 0.5265 ..., but let’s pretend that we don’t know that, and we’re restricted to computations easily done by hand, like addition, subtraction, multiplication, division, and finding integer powers of a real number.

31.3. Method 1: Approximating the Equation

Instead of solving 50x2.1 = 13, which would involve the extraction of a 2.1th root, we could solve a “nearby” equation like

which simplifies to ![]() , and get the answer x = 0.5. Since multiplication and exponentiation are both continuous, it should be no surprise that the solution to this slightly “perturbed” equation is quite close to the solution of the original (see Figure 31.1). Solving the perturbed equation is easy.

, and get the answer x = 0.5. Since multiplication and exponentiation are both continuous, it should be no surprise that the solution to this slightly “perturbed” equation is quite close to the solution of the original (see Figure 31.1). Solving the perturbed equation is easy.

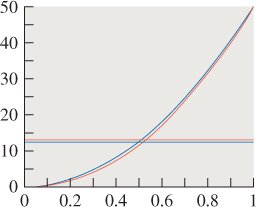

Figure 31.1: The graph of y = 50x2 (blue) is very close to that of y = 50x2.1 (just below it, in red); the x-coordinate of the intersection of the blue graph with the line y = 12.5 is very near that of the red graph with the line y = 13.

You might well complain that the word “nearby” was left undefined in the preceding paragraph. As a different example, consider solving

for x. The solution is x = 105. But if we alter the equation just a little, making the right-hand side 0 instead of 0.1, the solution becomes x = 0: A small perturbation in the equation led to a huge perturbation in the solution. Determining the sensitivity of the solution to perturbations in the equation is (for more complicated equations like the rendering equation) often extremely difficult; in practice, it’s done by saying things like, “It seems pretty obvious that the moonlight coming through my closed bedroom curtains wouldn’t look very different if the moon were oval rather than round.” In other words, it’s done by using domain expertise to decide which kinds of approximations are likely to produce only minor perturbations in the results.

An example of this in rendering is the approximation of reflection from an arbitrary surface by the Lambert reflection model, or the approximation of the “Is that light source visible from this point?” function, by the function that always says “yes.” The first leads to solutions where nothing looks shiny, and the second leads to solutions where there are no shadows; each is often a better approximation than an all-black image, and a poor approximation is frequently better than no solution at all.

31.4. Method 2: Restricting the Domain

Instead of trying to find a positive real number x satisfying

we can ask, “Is there a positive integer satisfying (or nearly satisfying) it?” (See Figure 31.2.) Such a domain restriction can simplify things enormously. In the case of this equation, we see that the left-hand side is an increasing function of x, and that when x = 1, its value is already 50. So any integer solution must lie between zero and one. We need only try these two possible solutions to see which one works (or, if none works, which is “best”). We quickly find that x = 0 gives 50x2.1 = 0, which is too small, and x = 1 gives 50, which is too large.

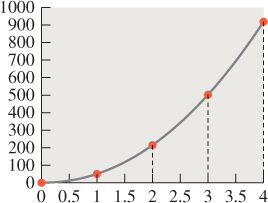

Figure 31.2: The graph of y = 50x2.1, restricted to x = 0, 1, 2, 3, 4, shown as a stem plot with small red circles, atop the graph on the whole real line (shown in gray).

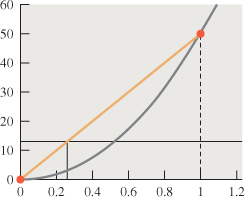

We then have two choices: We can report the “best” solution in the restricted domain (x = 0), or we can perhaps say, “The ideal solution lies somewhere between 0 and 1, much closer to 0 than to 1; linear interpolation gives x = 0.26 as a best-guess answer.” (See Figure 31.3.)

Figure 31.3: Because the value at x = 0 is too small, and at x = 1 it’s too large, we estimate the solution x by intersecting the connect-the-dots plot (orange) with the line y = 13 to get x = 0.26.

Our use of linear interpolation incorrectly assumes that the values of the left-hand side F(x) = 50x2.1 vary almost linearly as a function of x between x = 0 and x = 1, which is why the estimated answer isn’t very close to the true one. More generally, if the domain of some variable is D, and we restrict to a subset D′ ![]() D, then estimating a solution in D from approximate solutions in D′ requires that D′ is “large enough” that any point d of D lies near enough to points of D′ that F(d) can be well inferred from values of F at nearby points of D′.

D, then estimating a solution in D from approximate solutions in D′ requires that D′ is “large enough” that any point d of D lies near enough to points of D′ that F(d) can be well inferred from values of F at nearby points of D′.

We’ll see an example of domain restriction in rendering when we discuss radiosity. Note that methods 1 and 2 both violate the Approximate the Solution principle: they approximate the problem rather than the solution.

31.5. Method 3: Using Statistical Estimators

A third approach is to “estimate” the solution statistically, that is, find a way to produce a sequence of values x1, x2, ... such that each xi is a possible solution, and such that the average an of x1, x2, ..., xn gets closer and closer to a solution as n gets large.

In this case, we’re trying to solve

whose solution is

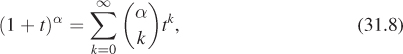

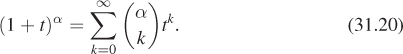

This can be easily evaluated on a computer, but we’re assuming we lack the ability to compute anything more complicated than an integer power of a real number. (When we look at the rendering equation, the corresponding statement will be, “Suppose we lack the ability to compute anything except an integral number of bounces of a ray of light,” which is very reasonable: It’s hard to imagine what it might mean to compute 2.1 bounces of a light ray!) We can still find a solution using the binomial theorem, which says that

where

is defined for any real number α and for k = 0, 1, .... We’ll be applying this to the case ![]() and

and ![]() , so that

, so that ![]() , so that evaluating Equation 31.8 will give us the value of the solution in Equation 31.7.

, so that evaluating Equation 31.8 will give us the value of the solution in Equation 31.7.

To do so requires summing an infinite series, however. The great insight is the realization that the sum of an infinite series can be estimated by looking at individual elements of the series.

31.5.1. Summing a Series by Sampling and Estimation

We now lay the foundations for all the Monte Carlo approaches to rendering, starting with a few simple applications of probability theory.

31.5.1.1. Finite Series

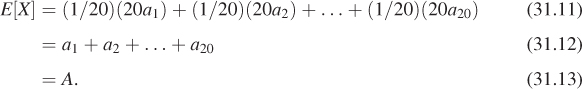

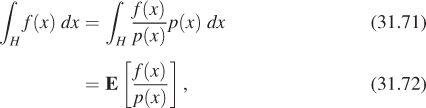

Suppose that we have a finite series

and we want to estimate the sum, A. We can do the following: Pick a random integer i between 1 and 20 (with probability 1/20 of picking each possible number), and let X = 20ai. Then X is a random variable. Its expected value is the weighted average of its values, with weights being the probabilities, that is,

We’ve got a random variable whose expected value is the sum we’re seeking! By actually taking samples of this random variable and averaging them, we can approximate the sum.

Suppose that all 20 numbers a1, a2, ... are equal. What’s the variance of the random variable X? How many samples of X do you need to take to get a good estimate of A in this case?

In general, the variance of X is related to how much the terms in the sequence vary: If all the terms are identical, then X has no variance, for instance. It’s also related to the way we chose the terms, which happens to have been uniform, but we’ll use nonuniform samples in other examples later. When we apply these ideas to rendering, we will end up sampling among various paths along which light can travel; the value being computed will be the light transport along the path. Since some paths carry a lot of light (e.g., a direct path from a light source to your eye) and some carry very little, a large variance is present; to make estimates accurate will require lots of samples, or some other approach to reducing variance. For a basic ray tracer, this means you may need to trace many rays per pixel to get a good estimate of the radiance arriving at a single image pixel.

31.5.1.2. Infinite Series

It’s tempting to generalize to infinite series A = a1 + a2 + ... in the obvious way: Pick a non-negative integer i, and let X = ai; make all choices of i equally probable, and then the expected value of X should be A. There are two problems with this, however. First, there’s the missing factor of 20. In the finite example, we multiplied each ai by 20 because the probability of picking it was 1/20. This means that in the infinite case, we’d need to multiply each ai by infinity, because the probability of picking it is infinitesimal. This doesn’t make any sense at all. Second, the idea of picking a positive integer uniformly at random sounds good, but it’s mathematically not possible. We need a slightly different approach, motivated by Equation 31.11, in which each term of the series is multiplied by the probability of picking that term (1/20) and by the inverse of that probability (20). All we need to do is abandon the idea of a uniform distribution.

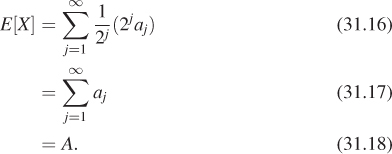

To sum the series

we can pick a non-negative integer j with probability 1/2j so that the probability of picking j = 1 is 1/2 and the probability of picking j = 10 is 1/210 = 1/1024. (This particular choice of probabilities was made because it’s easy to work with, and it’s obvious that the probabilities sum to 1, but any other collection of positive numbers that sum to 1 would work equally well.)

We then let

Just as before, the expected value of X is

And just as before, the variance in the estimate is related to the terms of the series. If aj happens to be 2-j, then the variance is zero and the estimator is great. If aj = 1/j2, then the variance is considerably larger, and we’ll need to average lots of samples to get a low-variance estimate of the result.

As we said, the particular choice we made in picking j—the choice to select j with probability 2-j—was simple, but we could have used some other probability distribution on the positive integers; depending on which distribution we choose, the estimator may have lower or higher variance.

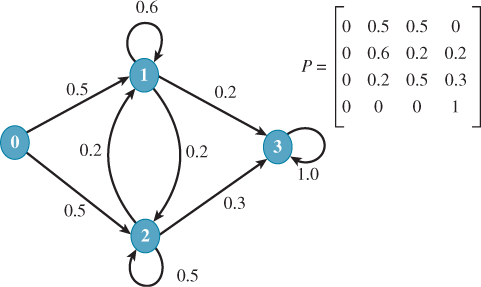

When it comes to applying this approach to rendering, the choice of j will become the choice of “how many bounces the light takes.” If we have a scene in which the albedo of every surface is about 50%, then we expect only about half as much light to travel along paths of length k + 1 as did along paths of length k.

In this case, assigning half the probability to each successive path length makes some sense. In general, picking the right sampling distribution is at the heart of making such Monte Carlo approaches work well.

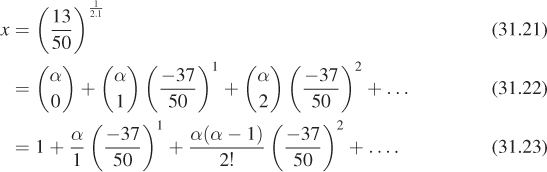

31.5.1.3. Solving 50x2.1 = 13 Stochastically

Applying these methods to our particular equation, we know that

and that in general we can transform the right-hand side of the equation using the binomial theorem

Doing so, with ![]() and

and ![]() , we get

, we get

Now, to estimate a solution, we pick a positive integer j with probability 2–j, and evaluate the jth term. As we wrote this chapter, we flipped coins and counted the number of flips until heads, generating the sequence 3, 3, 1; our three estimates of x are thus the third, third, and first terms of the series, multiplied by 8, 8, and 2, respectively:

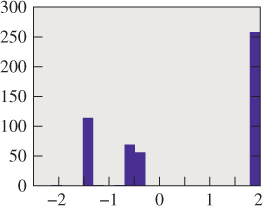

Recall that the correct solution to the problem is 0.5265. The average of our three samples is x = .3024, which admittedly is not a very good estimate of the solution. When we used 10,000 terms, the estimate was 0.5217, which is considerably closer (see Figure 31.4).

Figure 31.4: A histogram of 500 estimates of the root of 50x2.1 = 13; their average is quite near 0.5625.

You may be concerned that we’ve assumed we can write a power series for x, but that when we get to the rendering equation, such a rewrite may not be so easy. Fortunately, in the case of the rendering equation, the rewrite as an infinite series is actually quite easy, although estimating the sum of the resultant series still involves the same randomized approaches.

31.6. Method 4: Bisection

Our final approach to solving the equation is to find a value of x for which 50x2.1 is less than 13 (such as x = 0) and one for which it’s greater than 13 (such as x = 1). Since f(x) = 50x2.1 is a continuous function of x, it must take on the value 13 somewhere between x = 0 and x = 1. We can evaluate ![]() and find that it’s less than 13; we now know that the solution’s between

and find that it’s less than 13; we now know that the solution’s between ![]() and 1. Repeatedly evaluating f at the midpoint of an interval that we know contains the answer, and then discarding half the interval on the basis of the result, rapidly converges on a very small interval that must contain the solution.

and 1. Repeatedly evaluating f at the midpoint of an interval that we know contains the answer, and then discarding half the interval on the basis of the result, rapidly converges on a very small interval that must contain the solution.

This can be seen as a kind of binary search on the real line. There are also “higher order” methods, like Newton’s method, in which we start at some proposed solution x0 and say, “If f were linear, then we could write it as y = f(x0) + f′(x0)(x – x0).” But that function is zero at ![]() . So let’s evaluate f at x1 and see whether it’s any smaller there, and iterate. If x0 happens to be near a root of f, this tends to converge to a root quite fast. If it’s not (or if f′(x0) = 0), then it doesn’t work so well.

. So let’s evaluate f at x1 and see whether it’s any smaller there, and iterate. If x0 happens to be near a root of f, this tends to converge to a root quite fast. If it’s not (or if f′(x0) = 0), then it doesn’t work so well.

Despite the appeal of these approaches, there’s no easy analog in the case of functional equations (ones where the answer is a function rather than a number) like the rendering equation. There’s no simple way to generalize the notion of one number being between two others to the more general category of functions.

Nonetheless, bisection gets used a lot in graphics, and these four approaches to solving equations serve as archetypes for solving equations throughout the field.

31.7. Other Approaches

There are other approaches to equations that cannot be easily illustrated with our 50x2.1 = 13 example. For instance, you might say, “I can solve systems of two linear equations in two unknowns ...but only if the coefficients are integers rather than arbitrary real numbers.” In doing so, you’re not really solving the general problem (“two linear equations in two unknowns”), but it may be that the subclass of problems you can solve is interesting enough to merit attention. In graphics, for instance, early rendering algorithms could only work with a few point lights rather than arbitrary illumination; some later algorithms could only work on scenes where all surfaces were Lambertian reflectors, etc.

As a second example, you may arrive at a method of solution that’s too complex, and choose to approximate the method of solution rather than the original equation. For instance, the Monte Carlo approach used to sum an infinite series above might seem overly complicated, and you might choose to just sum the first four terms. This sounds laughable, but in practice it can often work quite well. Most basic ray tracers, for instance, trace secondary rays only to a predetermined depth, which amounts to truncating a series solution after a fixed number of terms.

31.8. The Rendering Equation, Revisited

Recall that a radiance field is a function on the set ![]() of all surface points in our scene, and that it takes a point, P

of all surface points in our scene, and that it takes a point, P ![]()

![]() , and a direction, ω, and returns a real number indicating the radiance along a ray leaving P in direction ω. Our model of the radiance field is a function L :

, and a direction, ω, and returns a real number indicating the radiance along a ray leaving P in direction ω. Our model of the radiance field is a function L : ![]() × S2

× S2 ![]() R.

R.

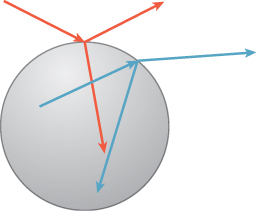

There is a subtlety here that we discussed in Section 29.4: There are some “sur-face points” that are part of two surfaces. For instance, if we have a solid glass sphere (see Figure 31.5), the point at the north pole of the sphere is really best thought of as two points: one on the “outside” and the other on the “inside.” Light traveling northward at the outer point is either reflected or transmitted, while light traveling northward at the inner point is arriving there and is about to be transmitted or reflected by the glass-air interface. As we suggested, we can enhance the notion of the light field to take three arguments—a point, a direction, and a normal vector that defines the “outside” for this point—but in the remainder of this chapter, we’re going to instead discuss only reflection (except at a few carefully indicated points), since (a) the two-points-in-one-place idea complicates the notation, which is complex enough already, and (b) the actual changes in the programs that we’ll see in Chapter 32 to account for transmission are relatively minor and straightforward. As for the matter of keeping two separate copies of the north pole, in practice, as we’ll discuss in Chapter 32, we’ll only keep a single copy of the geometry, and there will be no explicit representation of the light field; on the other hand, the meaning of an arriving light ray, and how it is treated, will depend on the dot product of its direction with the unit normal n, resulting in several if-else clauses in our programs.

Figure 31.5: Light from above the sphere both reflects and refracts, as does light in the inside of the sphere.

We’ll continue to write fs for the scattering function, however, but you’ll need to remember that in the case of transmission, some ω · n terms may need absolute-value signs on them.

The rendering equation characterizes the radiance field (P, ω) ![]() L(P, ω) in a scene by saying that the radiance at some surface point, in some direction, is a sum of (a) the radiance emitted at that point in that direction, and (b) all the incoming light at that point that is scattered in that direction. This equation has the form

L(P, ω) in a scene by saying that the radiance at some surface point, in some direction, is a sum of (a) the radiance emitted at that point in that direction, and (b) all the incoming light at that point that is scattered in that direction. This equation has the form

Recall the meaning of the terms:

• E is the emitted radiance field, with E(P, ω) = 0 unless P is a point of some luminaire, and ω is a direction in which that luminaire emits light from P.

• T(L) is the scattered radiance field due to L;T(L)(P, ωo) is the light scattered from the point P in the direction ωo when the radiance field for the whole scene is L.1

1. We use the letter T rather than S (for “scattering”) because S will be used later in describing various light paths.

To be specific, T is defined by

The critical feature of this expression, for our current discussion, is that L appears in the integral.

Note that if we solve Equation 31.27 for the unknown L, we don’t yet have a picture! We have a function, L, which can be evaluated at a bunch of places to build a picture. In particular, we might evaluate L(P, ω) for each pixel-center P and the corresponding vector ω from the pinhole of a pinhole camera through P (assuming a physical camera, in which the film plane is behind the pinhole).

What are the domain and codomain of T? In other words, what sort of object does T operate on, and what sort of result does it produce? The answer to the latter question is not “It produces real numbers.”

![]() The function T is a higher-order function: It takes in functions and produces new functions. You’ve seen other such higher-order functions, like the derivative, in calculus class, and perhaps have encountered programming languages like ML, Lisp, and Scheme, in which such higher-order functions are commonplace.

The function T is a higher-order function: It takes in functions and produces new functions. You’ve seen other such higher-order functions, like the derivative, in calculus class, and perhaps have encountered programming languages like ML, Lisp, and Scheme, in which such higher-order functions are commonplace.

![]() Let’s consider this integral from the computer science point of view. We have a well-defined problem we want to solve (“find L”), and we can examine how difficult a problem this is. First, for even fairly trivial scenes, it’s provable that there’s no simple closed-form solution. Second, observe that the domain of L is not discrete, like most of the things we see in computer science, but instead is a rather large continuum—there are three spatial coordinates and two direction coordinates in the arguments to L, so it’s a function of five real variables. (Note: In graphics, it’s common to call this a “five-dimensional function,” but it’s more accurate to say that it’s a function whose domain is five-dimensional.) In computer science terminology, we’d call a classic problem like a traveling salesman problem or 3-SAT “difficult,” because the only known way to solve such a problem is no simpler, in big-O terms, than enumerating all potential solutions. By comparison, because of the continuous domain, the rendering equation is even harder, because it’s infeasible even to enumerate all potential solutions. Your next thought may be to develop a nondeterministic approach to approximate the solution. That’s a good intuition, and it’s what most rendering algorithms do. But unlike many of the nondeterministic algorithms you’ve studied, while we can characterize the runtime of these randomized graphics algorithms, that in itself isn’t meaningful, because the errors in the approximation are unbounded in the general case: Because the domain is continuous, and we can only work with finitely many samples, it’s always possible to construct a scene in which all the light is carried by a few sparse paths that our samples miss.

Let’s consider this integral from the computer science point of view. We have a well-defined problem we want to solve (“find L”), and we can examine how difficult a problem this is. First, for even fairly trivial scenes, it’s provable that there’s no simple closed-form solution. Second, observe that the domain of L is not discrete, like most of the things we see in computer science, but instead is a rather large continuum—there are three spatial coordinates and two direction coordinates in the arguments to L, so it’s a function of five real variables. (Note: In graphics, it’s common to call this a “five-dimensional function,” but it’s more accurate to say that it’s a function whose domain is five-dimensional.) In computer science terminology, we’d call a classic problem like a traveling salesman problem or 3-SAT “difficult,” because the only known way to solve such a problem is no simpler, in big-O terms, than enumerating all potential solutions. By comparison, because of the continuous domain, the rendering equation is even harder, because it’s infeasible even to enumerate all potential solutions. Your next thought may be to develop a nondeterministic approach to approximate the solution. That’s a good intuition, and it’s what most rendering algorithms do. But unlike many of the nondeterministic algorithms you’ve studied, while we can characterize the runtime of these randomized graphics algorithms, that in itself isn’t meaningful, because the errors in the approximation are unbounded in the general case: Because the domain is continuous, and we can only work with finitely many samples, it’s always possible to construct a scene in which all the light is carried by a few sparse paths that our samples miss.

![]() One strategy for generating approximate solutions is to discretize the domain in some way so that we can bound the error. That’s also a good idea, because we might then be able to enumerate some sizable portion of the solution space. I can’t look at light transport for every point on a curved surface, but I can look at it for every vertex of a triangle-mesh approximation of that surface. Graphics isn’t unique in this. The moment you take computer science out of pure theory and start applying it to physics, you’ll find that problems are often of more than exponential complexity, and you often need to find good approximations that work well on the general case, even if you can’t bound the error in all cases.

One strategy for generating approximate solutions is to discretize the domain in some way so that we can bound the error. That’s also a good idea, because we might then be able to enumerate some sizable portion of the solution space. I can’t look at light transport for every point on a curved surface, but I can look at it for every vertex of a triangle-mesh approximation of that surface. Graphics isn’t unique in this. The moment you take computer science out of pure theory and start applying it to physics, you’ll find that problems are often of more than exponential complexity, and you often need to find good approximations that work well on the general case, even if you can’t bound the error in all cases.

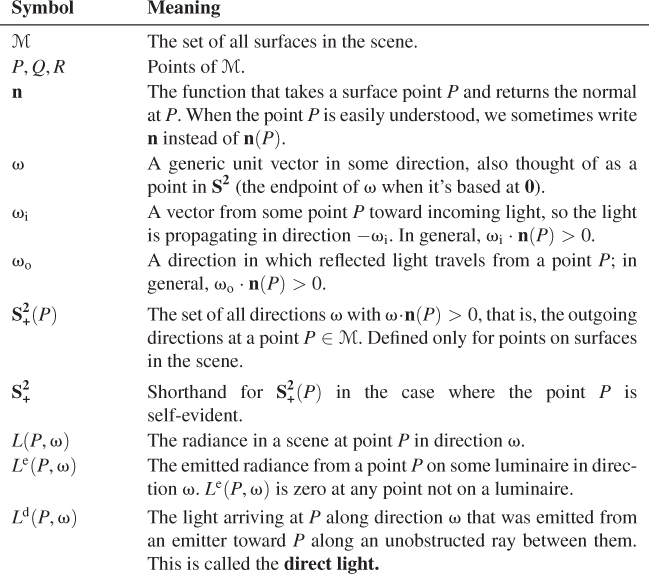

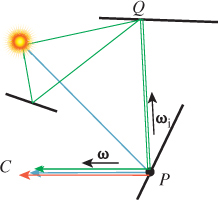

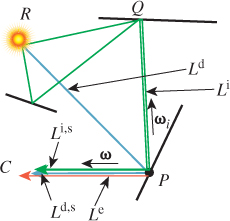

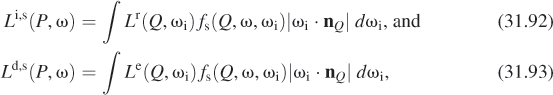

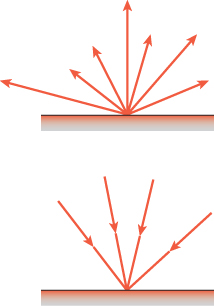

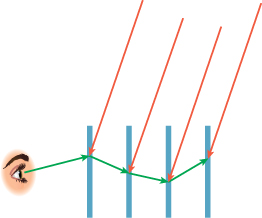

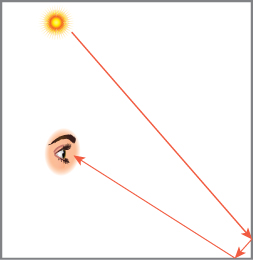

Recall from Chapter 29 the division of light in a scene into two categories (see Figure 31.6): At a point of a surface, light may be arriving from various points in the distance, a condition called field radiance. This light hits the surface and is scattered; the resultant outgoing radiance is called surface radiance.

Figure 31.6: Top: The surface radiance consists of all the light leaving a point of a surface. Bottom: The field radiance consists of all the incoming light.

It’s also helpful to divide radiance even further: The surface radiance at a point P can be divided into the emitted radiance there (nonzero only at luminaires) and the reflected radiance. These correspond to the two terms on the right-hand side of the rendering equation. Dually, the field radiance at P can be divided into direct lighting, Ld(P, ω) at P (i.e., radiance emitted by luminaires and traveling through empty space to P), and indirect lighting, Li(P, ω) at P (i.e., radiance from a point Q to a point P along the ray P – Q, but that was not emitted at Q). We’ll return to these terms in the next chapter.

Suppose that P and Q are mutually visible. How are the emitted and reflected radiance at Q, in the direction P – Q, related to the direct and indirect light at P, in the direction P – Q? Express these in terms of Ld, Li, Lr, and Le, being careful about signs. Use ω = S(P – Q) in expressing your answer.

Writing the rendering equation in the form of Equation 31.27 makes it clear that the scattering operator transforms one radiance field (L) into another (T(L)). Not only does it do so, but it does so linearly: If we compute T(L1 + L2), we get T(L1) + T(L2), and T(rL) = rT(L) for any real number r, as you can see from Equation 31.28. This linearity doesn’t arise from some cleverness in the formulation of the rendering equation. It’s a physically observable property, commonly called the principle of superposition in physics, and it’s extremely fortunate, for those hoping to solve the rendering equation, that it holds. Later, in Chapter 35, we’ll see this principle of superposition applying to forces and velocities and other things that arise in physically based animations, and once again it will simplify our work considerably.

We can rewrite the rendering equation in the form

or even

where I denotes the identity operator: It transforms L into L, and we’ve used TL to denote the application of the operator T to the radiance field L.

Much of the remainder of this chapter describes approaches to solving this equation. Remember as we examine such approaches that Le, the light emitted by each light source, is given as an input, as is the bidirectional reflectance distribution function (BRDF) at each surface point, so that the operator T can be computed. The unknown is the radiance field, L.

![]() The similarity of this formulation to the way eigenvalue problems are described in linear algebra is no coincidence. We’ll use many of the same techniques that you saw in studying eigenvalues as we look at solving the rendering equation.

The similarity of this formulation to the way eigenvalue problems are described in linear algebra is no coincidence. We’ll use many of the same techniques that you saw in studying eigenvalues as we look at solving the rendering equation.

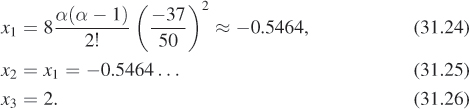

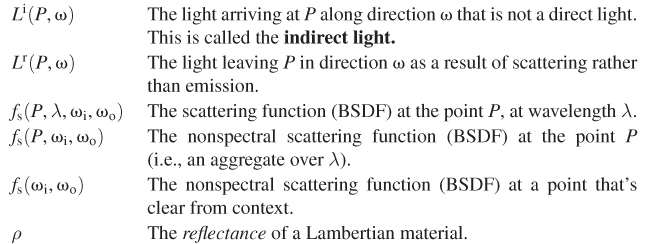

31.8.1. A Note on Notation

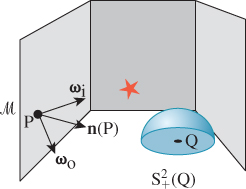

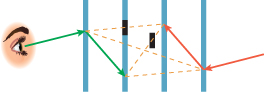

We summarize in Table 31.1 the notation we’ll use repeatedly throughout this chapter. Figure 31.7 gives the geometric situation to which items in this table refer.

Figure 31.7: Some standard notation. The vector ωi points toward the light source (indicated by a star).

Notice that the subscript “i” in ωi is set in Roman font rather than italics; that’s to indicate that it’s a “naming-style” subscript (like VIN to denote input voltage) rather than an “indexing-style” subscript (like bi, denoting the ith term in a sequence).

When we aggregate over λ, it’s important to decide once and for all whether this aggregate denotes a sum (perhaps an integral from λ = 400 nm to 750 nm), or an average; you can do either one in your code, but you must do it consistently.

Occasionally we will have several incoming vectors at a point P, and we’ll need to index them with names like ω1, ω2, .... When we want to refer to a generic vector in this list, we’ll use ωj, avoiding the subscript i to prevent confusion with the previous use. You will have to infer, from context, that these vectors are all being used to describe incoming light directions, that is, serving in the role of ωi.

As we discussed in Chapter 26, many terms, and associated units, are used to describe light. In an attempt to avoid problems, we’ll use just a few: power (in watts), flux (in watts per square meter), radiance (in watts per square meter steradian), and occasionally spectral radiance (in watts per square meter steradian nanometer).

31.9. What Do We Need to Compute?

Much of the work in rendering falls into a few categories:

• Developing data structures to make the ray-casting operation fast, which we discuss in Chapter 36

• Choosing representations for the function fs that are general enough to capture the sorts of reflectivity exhibited by a wide class of surfaces, yet simple enough to allow clever optimizations in rendering, which we’ve already seen in Chapter 27

• Determining methods to approximate the solution of the rendering equation

It is this last topic that concerns us in this chapter.

The rendering equation characterizes the function L that describes the radiance in a scene. Do we really need to know everything about L? Presumably radiance that’s scattered off into outer space (or toward some completely absorbing surface) is of no concern to us—it cannot possibly affect the picture we’re making. In fact, if we’re trying to make a picture seen from a pinhole camera whose pinhole is at some point C, the only values we really care about computing are of the form L(C, ω). To compute these we may need to compute other values L(P, η) in order to better estimate the values we care about.

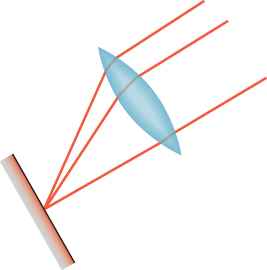

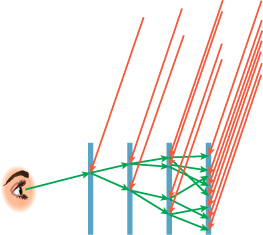

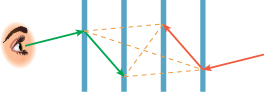

Suppose, however, that we want to simulate an actual camera, with a lens and with a sensor array like the CCD array in many digital cameras. To compute the sensor response at a pixel P, we need to consider all rays that convey light to P—rays from any point of the lens to any point of the sensor cell corresponding to P (see Figure 31.8).

Figure 31.8: Light along any ray from the lens to the sensor cell contributes to the measured value at that cell.

As we said in Chapter 29, light arriving along different rays may have different effects: Light arriving orthogonal to the film plane may provoke a greater response than light arriving at an angle, and light arriving near the center of a cell may matter more than light arriving near an edge—it all depends on the structure of the sensor. The measurement equation, Equation 29.15, says that

where Mij is a sensor-response function that tells us the response of pixel (i, j) to radiance along the ray through P in direction –ω.

One perfectly reasonable idealization is that the pixel area is a tiny square, and that Mij is 1.0 for any ray through the lens that meets this square, and 0 otherwise. Even with this idealization, however, the pixel value that we’re hoping to compute is an integral over the pixel area and the set of directions through the lens. Even if we assume a lens so tiny that the latter integral can be accurately estimated by a single ray (the pinhole approximation), there’s still an area integral to estimate.

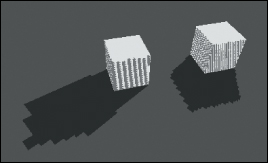

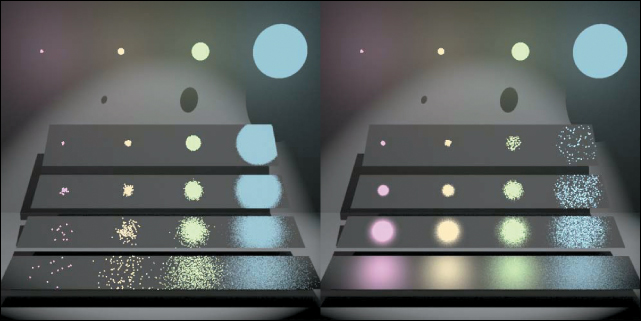

One very bad way to estimate this integral is with a single sample, taken at the center of the pixel region (i.e., the simplest ray-tracing model, where we shoot a ray through the pixel center). What makes this approach particularly bad are aliasing artifacts: If we’re making a picture of a picket fence, and the spacing of the pickets is slightly different from the spacing of the pixels, the result will be large blocks of constant color, which the eye detects as bad approximations of what should be in each pixel (see Figure 31.9).

Figure 31.9: Pixel-center samples of a picket-fence scene lead to large blocks of black-and-white pixels.

If we instead take a random point in each pixel, then this aliasing is substantially reduced (see Figure 31.10). Instead, we see salt-and-pepper noise in the image.

Figure 31.10: Random ray selection within each pixel reduces aliasing artifacts, but replaces them with noise.

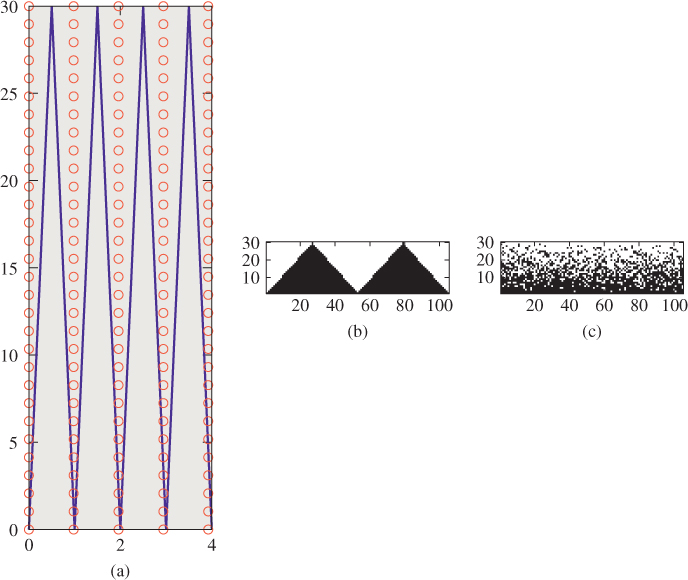

Because our visual system does not tend to see “edges” in such noise, but is very likely to see incorrect edges in the aliased image, the tradeoff of aliases for noise is a definite improvement (see Figure 31.11).

Figure 31.11: (a) A close-up view of a portion of a sawtooth-shaped geometry (note that each sawtooth occupies a little more than one unit on the x-axis) and the locations of pixel samples (small circles). (b) The resultant image. Even though there are 102 teeth in this 104-pixel-wide image, aliasing causes us to see just two. (c) When we take “jittered samples” (each sample is moved up to a half pixel both vertically and horizontally), the resultant image is noisy, but exhibits no aliasing.

This notion of taking many (randomized) samples over some domain of integration and averaging them applies in far more generality. We can integrate over wavelength bands (rather than doing the simpler RGB computations that are so common, which amount to a fixed-sample strategy). We can integrate over the lens area to get depth-of-field effects and chromatic aberration. For a scene that’s moving, we can simulate the effect of a shutter that’s open for some period of time by integrating over a fixed “time window.” All of these ideas were described in a classic paper by Cook et al. [CPC84], which called the process distributed ray tracing. Because of possible confusion with notions of distributed processing, we prefer the term distribution ray tracing which Cook now uses to describe the algorithm [Coo10]. We’ll discuss the particular sampling strategies used in distribution ray tracing in Chapter 32.

In short: To render a realistic image in the general case, we need to average, in some way, many values, each of which is L(P, ω) for some point P of the image plane and some direction ω ![]() S2.

S2.

31.10. The Discretization Approach: Radiosity

We’ll now briefly discuss radiosity—an approach that produces renderings for certain scenes very effectively—and then return to the more general scenes that require sampling methods and discuss how to effectively estimate the value L(P, ω) in the algorithms that work on those scenes.

The radiosity method for rendering differs from the methods we’ve seen in Chapter 15; in those methods, we started with the imaging rectangle and said, “We need to compute the light that arrives here, so let’s cast rays into the scene and see where they hit, and compute the light arriving at the hit point by various methods.” Whether we did this one pixel at a time or one light at a time or one polygon at a time was a matter of implementation efficiency. The key thing is that we said, “Start from the imaging rectangle, and use that to determine which parts of the light transport to compute.” A radically different approach is to simulate the physics directly: Start with light emitted from light sources, see where it ends up, and for the part that ends up falling on the imaging rectangle, record it. This approach was taken by Appel [App68], who cast light rays into the scene and then, at the image plane location of the intersection point (if it was visible), drew a small mark (a “+” sign). In areas of high illumination there were many marks; in areas of low illumination, almost none. By taking a black-and-white photograph of the result (which was drawn with a pen on plotter paper) and then examining the negative for the photograph, he produced a rendering of the incident light.

Radiosity takes a similar approach, concentrating first on the light in the scene, and only later on the image produced. Because the surfaces in the scene are assumed Lambertian, the transformation from a representation of the surface radiance at all points of the scene to a final rendering is relatively easy.

The radiosity approach has two important characteristics.

• It’s a solution to a subproblem, in the sense that it only applies to Lambertian reflectors, and is generally applied to scenes with only Lambertian emitters.

• It’s a “discretization” approach: The problem of computing L(P, ωo) for every P ![]()

![]() and

and ![]() is reduced to computing a finite set of values. The scene is partitioned into small patches, and we compute a radiosity value for each of these finitely many patches.

is reduced to computing a finite set of values. The scene is partitioned into small patches, and we compute a radiosity value for each of these finitely many patches.

The division into patches means that radiosity is a finite element method, in which a function is represented as a sum of finitely many simpler functions, each typically nonzero on just a small region. (The word “finite” here is in contrast to “infinitesimal”: Rather than finding radiance at every single point of the surface, each point being “infinitesimal,” we compute a related value on “finite” patches.)

Radiosity was the first method to produce images exhibiting color bleeding (in which a red wall meeting a white ceiling could cause the ceiling to be pink near the edge where they meet), and not requiring an “ambient term” in its description of reflection—a term included in scattering models (see Chapter 27) to account for all the light in a scene that wasn’t “direct illumination,” which had presented problems for years previously. Figure 31.12 shows an example.

Figure 31.12: A radiosity rendering of a simple scene. Note the color-bleeding effects. (Courtesy of Greg Coombe, “Radiosity on graphics hardware” by Coombe, Harris and Lastra, Proceedings of Graphics Interface 2004.)

The first step in radiosity is to partition all surfaces in the scene into small (typically rectangular) patches. The patches should be small enough that the illumination arriving at a patch is roughly constant over the patch so that the light leaving the patch will be too, and hence can be represented by a single value. This “meshing” step has a large impact on the final results, which we’ll discuss presently. For now, let’s just assume the scene surfaces are partitioned into many small patches. We’ll use the letters j and k to index the patches, and use Aj to indicate the area of patch j, Bj to denote a value proportional to the radiance leaving any point of patch j, in any outgoing direction2 ωo, and nj to indicate the normal vector at any point of patch j.

2. By “outgoing direction,” we mean that ωo · nj > 0; the radiance is independent of direction because the surfaces are assumed Lambertian.

Each patch j is assumed to be a Lambertian reflector, so its BRDF is a constant function,

where ρj is the reflectivity and P is any point of the patch. Furthermore, each luminaire is assumed to be a “Lambertian” emitter of constant radiance, that is, Le(P, ωo) is a constant for P in patch j and ωo an outgoing vector at P.

This simple form for scattering and the assumption about constant emission together mean that the rendering equation can be substantially simplified.

For the moment, let’s make four more assumptions. The first is that the scene is made up of closed 2-manifolds, and no 2-manifold meets the interior of any other (e.g., two cubes may meet along an edge or face, but they may not interpenetrate). This also means that we don’t allow two-sided surfaces (i.e., a single polygon that reflects from both sides)—these must be modeled as thin, solid panels instead.

For the other three, we let P and P′ be points of patch i and Q and Q′ be points of patch j, and ni and nj be the patch normal vectors. Then we assume the following.

• The distance between P and Q is well approximated by the distance from the center Cj of patch j to the center Ck of patch k.

• nj · (P –Q) ≈ nj(P′ –Q′), that is, any two lines between the patches are almost parallel.

• If nj · nk < 0, then every point of patch j is visible from patch k, and vice versa. So, if two patches face each other, then they are completely mutually visible.

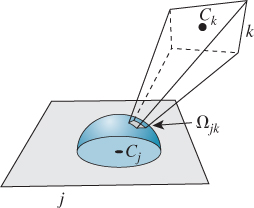

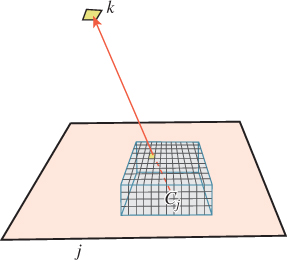

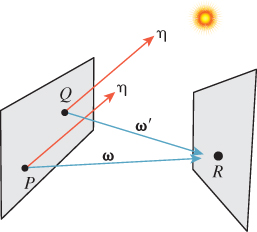

We need one more definition: We let Ωjk denote the solid angle of directions from patch j to patch k (see Figure 31.13), assuming that they are mutually visible; if they’re not, then Ωjk is defined to be the empty set.

Figure 31.13: Patch k is visible from patch j; when it’s projected onto the hemisphere around Cj, we get a solid angle called ωjk.

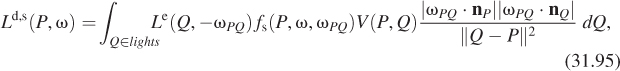

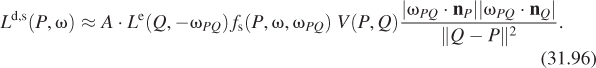

Now let’s use these assumptions to simplify the rendering equation. Let’s start with a point P in some patch j. The rendering equation says that for a direction ωo with ωo · nj > 0, that is, an outgoing direction from patch j,

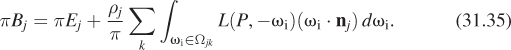

We now introduce some factors of π to simplify the equation a bit. We let Bj = L(P, ωo)/π. Since L(P, ωo) is assumed independent of the outgoing direction ωo, the number Bj does not have ωo as a parameter. Similarly, we define Ej = Le(P, ωo)/π. And substituting fr(P, ωi, ωo) = ρj/π, we get

The inner integral, over all directions in the positive hemisphere, can be broken into a sum over directions in each Ωjk, since light arriving at patch j must arrive from some patch k. The equation thus becomes

The radiance in the integral is radiance leaving patch k, and is therefore just πBk. Substituting, and rearranging the constant factors of π a little, we get

Dividing through by π, we get

The coefficient of Bk inside the summation is called the form factor fjk for patches j and k. So the equation becomes

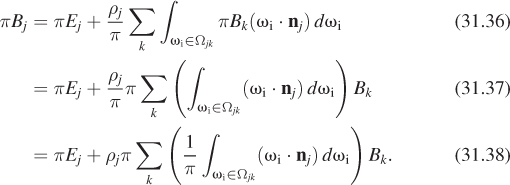

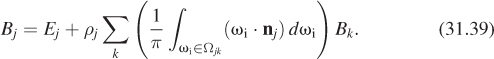

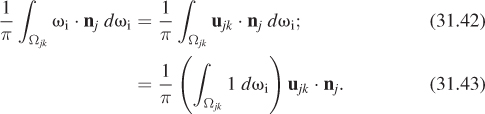

which is called the radiosity equation. Before we try to solve it, let’s look at the form factor more carefully. For patches j and k, it is

Using the second assumption (that all rays from patch k to patch j are essentially the same) we see that the vector ωi can be replaced by ujk = S(Ck – Cj), the unit vector pointing from the center of patch j to the center of patch k. Since ujk · nj is a constant, it can be factored out of the integral.

The form factor can then be written:

The remaining integral is just the measure of the solid angle Ωjk, which is the area Ak of patch k, divided by the square of the distance between the patches (i.e., by ||Cj–Ck||2), using the third assumption and scaled down by the cosine of the angle between nk and ujk (by the Tilting principle). Thus, the form factor becomes

(a) The form of Equation 31.44 makes it evident that fjk/Ak = fkj/Aj. Explain why, if j and k are mutually visible, exactly one of the two dot products is negative.

(b) Suppose that patch k is enormous and occupies essentially all of the hemisphere of visible directions from patch j. What will the value of fjk be, approximately?

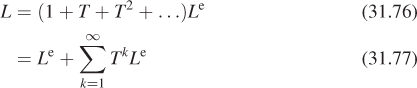

If we compute all the numbers fjk and assemble them into a matrix, which we multiply by a diagonal matrix D(ρ) whose jth diagonal entry is ρj, and we assemble the radiosity values Bj and emission values Ej into vectors b and e, then the radiosity equation, under the assumptions listed above, becomes

This can be simplified (just like the integral form of the rendering equation) to

which is just a simple system of linear equations (albeit possibly with many unknowns).

Standard techniques from linear algebra can be used to solve this equation (see Exercise 31.2).![]() The existence of a solution depends on the matrix D(ρ)F being “small” compared to the identity, that is, having all eigenvalues less than one. This is a consequence of our assumption that all the reflectivities were less than one (and your computation of the largest possible form factor). Note that we do not suggest solving the equation by inverting the matrix; in general that’s O(n3), while approximation techniques like Gauss-Seidel work extremely well (and much faster) in practice.

The existence of a solution depends on the matrix D(ρ)F being “small” compared to the identity, that is, having all eigenvalues less than one. This is a consequence of our assumption that all the reflectivities were less than one (and your computation of the largest possible form factor). Note that we do not suggest solving the equation by inverting the matrix; in general that’s O(n3), while approximation techniques like Gauss-Seidel work extremely well (and much faster) in practice.

Computing fjk is a once-per-scene operation. Once the matrix F is known, we can vary the lighting conditions (the vector e) and then recompute the emitted radiance at each patch center (the vector b) quite quickly.

Once we know the vector b, how do we create a final image, given a camera specification? We can create a scene consisting of rectangular patches, where patch j has value Bj, and then rasterize the scene from the point of view of the camera. Instead of computing the lighting at each pixel, we use the value stored for the surface shown at that pixel: if that pixel shows patch j, we store the value πBj at that pixel. The resultant radiance image is a radiosity rendering of the scene.

This, however, is rarely done as described; such a radiosity rendering looks very “blocky,” while we know from experience that totally Lambertian environments tend to have very smoothly varying radiance. Instead of rendering the computed radiance values directly, we usually interpolate them between patch centers, using some technique like bilinear interpolation, or even some higher-order interpolation. This is closely analogous to the approach discussed in Section 31.4, in which we solved an equation on the integers and then interpolated to guess a solution on the whole real line. In this case, we’ve found a piecewise-constant function (represented by the vector b) that satisfies our discretized approximation of the rendering equation, but we’re displaying a different function, one that’s not piecewise constant.

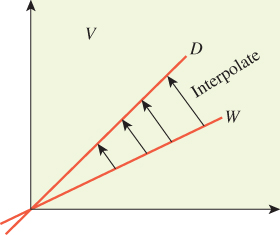

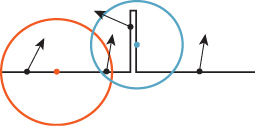

![]() What we’ve done is to take the space V of all possible surface radiance fields, and consider only a subset W of it, consisting of those that are piecewise constant on our patches. We’ve approximated the equation and found a solution to this in W; we’ve then transformed this solution (by linear interpolation) into a different subspace D consisting of all piecewise-linear radiance fields. If D and W are “similar enough,” then this is somewhat justified (see Figure 31.14).

What we’ve done is to take the space V of all possible surface radiance fields, and consider only a subset W of it, consisting of those that are piecewise constant on our patches. We’ve approximated the equation and found a solution to this in W; we’ve then transformed this solution (by linear interpolation) into a different subspace D consisting of all piecewise-linear radiance fields. If D and W are “similar enough,” then this is somewhat justified (see Figure 31.14).

Figure 31.14: Schematically, the space V of all surface-radiance fields contains a subspace W of piecewise constant fields, and another subspace D of piecewise linear fields. There’s a map from W to D defined by linear interpolation.

One way to address this apparent contradiction is to not assume that the radiance is piecewise constant, and instead assume it’s piecewise linear, or piecewise quadratic, and do the corresponding computations. Cohen and Wallace [CWH93] describe this in detail.

The computation of form factors is the messiest part of the radiosity algorithm. One approach is to render the entire scene, with a rasterizing renderer, five times, projecting onto the five faces of a hemicube, (the top half of a cube as shown in Figure 31.15). Rather than storing a radiance value at each pixel, you store the index k of the face visible at that pixel. You can precompute the projected solid angle for each “pixel” of a hemicube face once and for all; to compute the projected solid angle subtended by face k, you simply sum these pixel contributions over all pixels storing index k. For this to be effective, the hemicube images must have high enough resolution that a typical patch projects to hundreds of hemicube “pixels”; as scene complexity grows (or as we reduce the patch size to make the “constant radiance on each patch” assumption more correct), this requires hemicube images with increasingly higher resolution.

Figure 31.15: We project the scene onto a hemicube around P; since patch k is visible from P through the pixel shown, the hemicube image at that pixel stores the value k.

In solving the radiosity equation, Equation 31.46, some approximation techniques do not use every entry of F; it therefore makes sense to compute entries of F on the fly sometimes, perhaps caching them as you do so. Various approaches to this are described in great detail by Cohen and Wallace [CWH93].

Before we leave the topic of radiosity, we should mention four more things.

First, in our development, we assumed that patches were completely mutually visible; the hemicube approach to computing form factors removes this requirement. On the other hand, the hemicube approach does assume that the solid angle subtended by patch k from the center of patch j is a good representation of the solid angle subtended at any other point of patch j. That’s fine when j and k are distant, but when they’re nearby (e.g., one is a piece of floor and another is a piece of wall, and they share an edge) the assumption is no longer valid. The form-factor computation must then be written out as an integral over all points of the two patches, and even for simple geometries it has, in general, no simple expression. Schroeder [SH93] expresses this form factor in terms of (fairly) standard functions, but the expression is too complex for practical use.

Second, meshing has a large impact on the quality of a radiosity solution; in particular, if there are shadow edges in the scene, the final quality is far better if the mesh edges are aligned with those shadow edges, or if the patches near those edges are very small (so that they can effectively represent the rapid transition in brightness near the edge). Lischinski et al. [LTG92] describe approaches to precomputing meshes that are well adapted to representing the rapid transitions that will appear during a radiosity computation.

A different approach to the meshing problem is to examine, for each patch, the assumption of constant irradiance across the patch. We do this by evaluating the irradiance at the corners of the patch and comparing them. If the difference is great enough, we split the patch into two smaller patches and repeat, thus engaging in progressive refinement. This approach is not guaranteed to work: It’s possible that, for some patch, the irradiance varies wildly across the patch but happens to be the same at all corners; in this case, we should subdivide, but we will not. One thing that’s fortunate about this approach is that when we subdivide, there’s relatively little work to do: We need to compute the form factors for the newly generated subpatches and remove the form factors for the patch that was split. We also need to take the current surface-radiance estimate for the split patch and use it to assign new values to the subpatches; it suffices to simply copy the old value to the subpatches, although cleverer approaches may speed convergence.

Third, although radiosity, as we have described it, treats only pure-Lambertian surfaces and emitters, one can generalize it in many directions: Instead of assuming that outgoing radiance is independent of direction, one can build meshes in both position and direction (i.e., subdivide the outgoing sphere at point Ci into small patches, on each of which the radiance is assumed constant); this allows for more general reflectance functions, but it increases the size of the computation enormously. Alternatively, one can represent the hemispherical variation of the emitted light in some other basis, such as spherical harmonics; an expansion in spherical harmonics is the higher-dimensional analogue of writing a periodic function using a Fourier series. Ramamoorthi et al. [RH01] have used this approach in studying light transport. In each case, specular reflections are difficult to handle: We either need very tiny patches on the sphere’s surface, or we need very high-degree spherical harmonics, both of which lead to an enormous increase in computation. One approach to this problem is to separate out “the specular part” of light transport into a separate pass. Hybrid radiosity/ray-tracing approaches [WCG87] attempt to combine the two methods, but this approach to rendering has largely given way to stochastic approaches in recent years.

Fourth, we’ve been a little unfair to radiosity. The simplification of the rendering equation under the assumption that all emittance and reflectance is Lambertian is the true “radiosity equation.” It’s quite separate from the division of the scene into patches, and the resultant matrix equation. Nonetheless, the two are often discussed together, and the matrix form is often called the radiosity equation. Cohen and Wallace’s first chapter discusses radiosity in full generality, and treats the discretization approach we’ve described as just one of many ways to approximate solutions to the equation.

31.11. Separation of Transport Paths

The distinction between diffuse and specular reflections is so great that it generally makes sense to handle them separately in your program. For instance, if a surface reflects half its light in the mirror direction, absorbs 10%, and scatters the remaining 40% via Lambert’s law, your code for computing an outgoing scattering direction from an incoming direction will look something like that in Listing 31.1. This is the algorithmic version of the discussion in Section 29.6, and it involves the ideas of mixed probabilities discussed in Chapter 30.

Listing 31.1: Scattering from a partially mirrorlike surface.

1 Input: an incoming direction wi, and the surface normal n

2 Output: an outgoing direction wo, or false if the light is absorbed

3

4 function scatter(...):

5 r = uniform(0, 1)

6

7 if (r > 0.5): // this is mirror scattering

8 wo = -wi + 2 * dot(wi, n) * n;

9 else if (r > 0.1): // diffuse scattering

10 wo = sample from cosine-weighted upper hemisphere

11 else: // absorbed

12 return false;

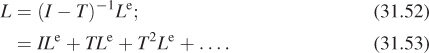

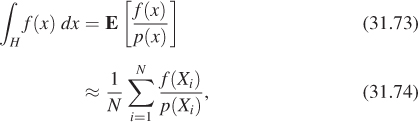

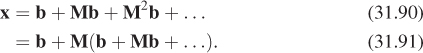

31.12. Series Solution of the Rendering Equation

The rendering equation, written in the form

is an equation of the form

that’s familiar from linear algebra, except that in place of a vector x of three or four elements, we have an unknown function, L; instead of a target vector b, we have a target function, the emitted radiance field Le; and instead of the linear transformation being defined by multiplication by a matrix M, it’s defined by applying some linear operator. ![]() Roughly speaking, the difference is between the finite-dimensional problems familiar from linear algebra, and the infinite-dimensional problems that arise when working with spaces of real-valued functions. (Indeed, the radiosity approximation amounts to a finite-dimensional approximation of the infinite-dimensional problem, so the radiosity equation ends up being an actual matrix equation.)

Roughly speaking, the difference is between the finite-dimensional problems familiar from linear algebra, and the infinite-dimensional problems that arise when working with spaces of real-valued functions. (Indeed, the radiosity approximation amounts to a finite-dimensional approximation of the infinite-dimensional problem, so the radiosity equation ends up being an actual matrix equation.)

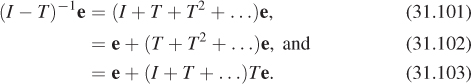

For the moment, let’s pretend that the problem we care about really is finite dimensional: that L is a vector of n elements, for some large n, and so is Le, while I – T is an n × n matrix. The solution to the equation is then

In general, computing the inverse of an n × n matrix is quite expensive and prone to numerical error, particularly for large n. But there’s a useful trick. We observe that

Verify Equation 31.50 by multiplying everything out. Remember that matrix multiplication isn’t generally commutative. Why is it OK to swap the order of multiplications in this case?

Suppose that as k gets large, Tk+1 gets very small (i.e., all entries of Tk+1 approach zero). Then the sum of all powers of T ends up being the inverse of (I – T), that is, in this special case we can in fact write

Multiplying both sides by Le, we get

In words, this says that the light in the scene consists of that emitted from the luminaires (ILe), plus the light emitted from luminaires and scattered once (TLe), plus that emitted from luminaires and scattered twice (T2Le), etc.

Our fanciful reasoning, in which we assumed everything was finite-dimensional, has led us to a very plausible conclusion. In fact, the reasoning is valid even for transformations on infinite-dimensional spaces. The only restriction is that T2 must be interpreted as “apply the operator T twice” rather than “square the matrix T.”

We did have to assume that Tk ![]() 0 as k gets large, however. When T is a matrix, this simply means that all entries of Tk go toward zero as k gets large. For a linear operator on an infinite dimensional space, the corresponding statement is that TkH goes to zero as k gets large, where H is an arbitrary element of the domain of T. (In our case, this means that for any initial emission values, if we trace the light through enough bounces, it gets dimmer and dimmer.)

0 as k gets large, however. When T is a matrix, this simply means that all entries of Tk go toward zero as k gets large. For a linear operator on an infinite dimensional space, the corresponding statement is that TkH goes to zero as k gets large, where H is an arbitrary element of the domain of T. (In our case, this means that for any initial emission values, if we trace the light through enough bounces, it gets dimmer and dimmer.)

We’ll assume, from now on, that the scattering operator T has the property that Tk ![]() 0 as k

0 as k ![]() ∞ so that the series solution of the rendering equation will produce valid results.

∞ so that the series solution of the rendering equation will produce valid results.

Of course, the series solution has infinitely many terms to sum up, each of them expensive to compute, so it’s not, as written, a practical method for rendering a scene. On the other hand, as we’ve already seen with radiosity, there are practical approximations to be made based on this series solution.

31.13. Alternative Formulations of Light Transport

We’ve described light transport in term of the radiance field, L, which is defined on R3 × S2 or ![]() × S2, where

× S2, where ![]() is the set of all surface points in a scene. (Since radiance is constant along rays in empty space, knowing L at points of

is the set of all surface points in a scene. (Since radiance is constant along rays in empty space, knowing L at points of ![]() determines its values on all of R3.) And we’ve used the scattering operator, which transforms an incoming radiance field to an outgoing one in writing the rendering equation. But there are alternative formulations.

determines its values on all of R3.) And we’ve used the scattering operator, which transforms an incoming radiance field to an outgoing one in writing the rendering equation. But there are alternative formulations.

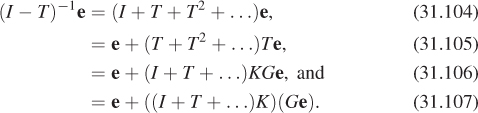

Arvo [Arv95] describes light transport in terms of two separate operators. The first operator, G, takes the surface radiance on ![]() and converts it to the field radiance, essentially by ray casting: Surface radiance leaving a point P in a direction ω becomes field radiance at the point Q where the ray first hits

and converts it to the field radiance, essentially by ray casting: Surface radiance leaving a point P in a direction ω becomes field radiance at the point Q where the ray first hits ![]() . The second operator, K, takes field radiance at a point P and combines it with the BRDF at P to produce surface radiance (i.e., it describes single-bounce scattering locally). Thus, the transport operator T can be expressed as T = K ο G.

. The second operator, K, takes field radiance at a point P and combines it with the BRDF at P to produce surface radiance (i.e., it describes single-bounce scattering locally). Thus, the transport operator T can be expressed as T = K ο G.

Kajiya [Kaj86] takes a different approach in which light directly transported from any point P ![]() M to any point Q

M to any point Q ![]() M is represented by a value I(P, Q); if P and Q are not mutually visible, then I(P, Q) is zero. Kajiya calls the quantity I the unoccluded two-point transport intensity. (The letters I, ρ, M, and g used in this and the following section will not be used again; we are merely explaining the correspondence between his notation and ours.) Kajiya’s version of the BRDF is not expressed in terms of a point and two directions, but rather in terms of three points; he writes ρ(P, Q, R) for the amount of light from R to Q that’s scattered toward the point P. His “emitted light” function also has points as parameters rather than point-direction parameters:

M is represented by a value I(P, Q); if P and Q are not mutually visible, then I(P, Q) is zero. Kajiya calls the quantity I the unoccluded two-point transport intensity. (The letters I, ρ, M, and g used in this and the following section will not be used again; we are merely explaining the correspondence between his notation and ours.) Kajiya’s version of the BRDF is not expressed in terms of a point and two directions, but rather in terms of three points; he writes ρ(P, Q, R) for the amount of light from R to Q that’s scattered toward the point P. His “emitted light” function also has points as parameters rather than point-direction parameters: ![]() (P, Q) is the amount of light emitted from Q in the direction of P. Kajiya’s quantities exclude various cosines that appear in our formulation of the rendering equation, including them instead as part of the integration (his integrals are over the set

(P, Q) is the amount of light emitted from Q in the direction of P. Kajiya’s quantities exclude various cosines that appear in our formulation of the rendering equation, including them instead as part of the integration (his integrals are over the set ![]() of all surfaces in the scene, while ours are usually over hemispheres around a point; the change-of-variables formula introduces the necessary cosines, as described in Section 26.6.4). Kajiya’s formulation of the rendering equation is therefore

of all surfaces in the scene, while ours are usually over hemispheres around a point; the change-of-variables formula introduces the necessary cosines, as described in Section 26.6.4). Kajiya’s formulation of the rendering equation is therefore

where g(P, Q) is a “geometry” term that in part determines the mutual visibility of P and Q: It’s zero if P is occluded from Q. Expressing this in terms of operators, he writes

where M is the operator that combines I with ρ in the integral. The series solution then becomes

This formulation has the advantage that the computation of visibility is explicit: Every occurrence of g represents a visibility (or ray-casting) operation.

31.14. Approximations of the Series Solution

As we mentioned, summing an infinite series to solve the rendering equation is not really practical. But several approximate approaches have worked well in practice. We follow Kajiya’s discussion closely.

The earliest widely used approximate solution consisted (roughly) of the following:

• Limiting the emission function to point lights

• Computing only one-bounce scattering (i.e., paths of the form LDE)

That is to say, the approximation to Equation 31.56 used was

where ![]() 0 denotes the use of only point lights. (The first term has

0 denotes the use of only point lights. (The first term has ![]() , because it was possible to render directly visible area lights.) Note that the second term should have been gMg

, because it was possible to render directly visible area lights.) Note that the second term should have been gMg![]() 0, that is, it should have accounted for whether the illumination could be seen by the surface (i.e., was the surface illuminated?). But such visibility computations were too expensive for the hardware, with the result that these early pictures lacked shadows.

0, that is, it should have accounted for whether the illumination could be seen by the surface (i.e., was the surface illuminated?). But such visibility computations were too expensive for the hardware, with the result that these early pictures lacked shadows.

Note that since ![]() 0 consisted of a finite collection of point lights, the integral that defines M became a simple sum.

0 consisted of a finite collection of point lights, the integral that defines M became a simple sum.

As an approach to solving the rendering equation, this involves many of the methods described in Section 31.2: The restriction to a few point lights amounts to solving a subproblem. The truncation of the series amounts to approximating the solution method rather than the equation. The transport operator, M, used in the early days was also restricted: All surfaces were Lambertian, although this was soon extended to include specular reflections as well.

Improving the algorithm to use g![]() 0 instead of

0 instead of ![]() 0 (i.e., including shadows) was a subject of considerable research effort, with two main approaches: exact visibility computations, and inexact ones. Exact visibility computations are discussed in Chapter 36.

0 (i.e., including shadows) was a subject of considerable research effort, with two main approaches: exact visibility computations, and inexact ones. Exact visibility computations are discussed in Chapter 36.

A typical inexact approach consists of rendering a scene from the point of view of the light source to produce a shadow map: Each pixel of the shadow map stores the distance to the surface point closest to the light along a ray from the light to the surface. Later, when we want to check whether a point P is illuminated by the light, we project P onto the shadow map from the light source, and check whether it is farther from the light source than the distance value stored in the map. If so, it’s occluded by the nearer surface and hence not illuminated. This approach has many drawbacks, the main one being that a single sample at the center of a shadow map pixel is used to determine the shadow status of all points that project to that pixel; when the view direction and lighting direction are approximately opposite, and the surface normal is nearly perpendicular to both, this can lead to bad aliasing artifacts (see Figure 31.16).

Figure 31.16: Aliasing produced by a low-resolution shadow map. The aliasing on the shadows is the problem; the stripes on the cubes themselves arise from a different problem. (Courtesy of Fabien Sanglard.)

By the way, the approaches used in the early days of graphics were not, at the time, seen as approximate solutions to the rendering equation. They were practical “hacks,” sometimes in the form of applications of specific observations (e.g., Lambert’s law for reflection from a diffuse surface) to more general situations than appropriate, and sometimes were approximations to the phenomena that were observed, without any particular reference to the underlying physics. When you read older papers, you’ll seldom see units like watts or meters; you’ll also on rare occasions notice an extra cosine or a missing one. Be prepared to read carefully and think hard, and trust your own understanding.

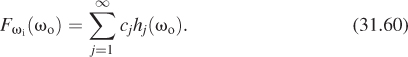

31.15. Approximating Scattering: Spherical Harmonics

We’ve discussed patch-based radiosity, in which the field radiance is approximated by a piecewise constant function; one can also think of this as an attempt to write the field radiance in a particular basis for a subspace of all possible field-radiance functions, in this case the basis consisting of functions that are identically one on some patch j, and zero everywhere else. Linear combinations of these functions are the piecewise constant functions used in radiosity.

A similar approach is to represent the surface radiance at a point (which is a function on the hemisphere of incoming directions) in some basis for the space of functions on the sphere. Assuming we limit ourselves to continuous functions, such a basis is provided by spherical harmonics, h1, h2, ..., which are the analog, for S2, of the Fourier basis functions sin(2πnx) (n = 1, 2, ...) and cos(2πnx) (n = 0, 1, 2, ...) on the unit circle. The first few spherical harmonics, in xyz-coordinates, are proportional to 1, x, y, z, xy, yz, zx, and x2 – y2, with the constant of proportionality chosen so that each integrates to one on the sphere. In spherical polar coordinates, they can be written 1, cos θ, sin θ, sin φ, sin 2θ, sin θ sin φ, cos θ cos φ, and cos 2θ. Like the Fourier basis functions on the circle, they are pairwise orthogonal: The integral of the product of any two distinct harmonics over the sphere is zero. Figure 31.17 shows the first few harmonics, plotted radially. The plot of h1, which is the constant function 1, yields the unit sphere.

Figure 31.17: The first few spherical harmonics. For each point on the unit sphere (1, θ, φ) in spherical polar coordinates, we plot a point (r, θ, φ), where r = | hj(θ, φ)|. The absolute value avoids problems where negative values get hidden, but is slightly misleading.

To be clear: If you have a continuous function f : S2 ![]() R, you can write f as a sum3 of spherical harmonics:

R, you can write f as a sum3 of spherical harmonics:

3. ![]() Limiting to a finite sum gives an approximation to the function; if f is discontinuous, then the sum converges to f only in regions of continuity.

Limiting to a finite sum gives an approximation to the function; if f is discontinuous, then the sum converges to f only in regions of continuity.

The coefficients cj depend on f, of course, just as when we wrote a function on the unit circle as a sum of sines and cosines, the coefficients of the sines and cosines depended on the function. In fact, they’re determined the same way: by computing integrals.

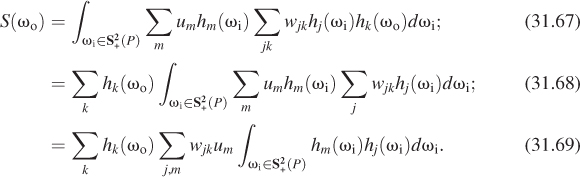

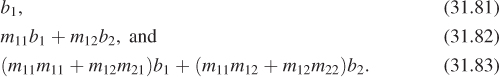

The cosine-weighted BRDF at a fixed point P is a function of two directions ωi and ωo, that is, the expression

defines a map ![]() . So the preceding statement about representing functions on S2 via harmonics does not directly apply. But we can approximate the cosine-weighted BRDF

. So the preceding statement about representing functions on S2 via harmonics does not directly apply. But we can approximate the cosine-weighted BRDF ![]() at P with spherical harmonics in a two-step process. To simplify notation, we’ll omit the argument P for the remainder of this discussion.

at P with spherical harmonics in a two-step process. To simplify notation, we’ll omit the argument P for the remainder of this discussion.

First, we fix ωi and consider the function ωo ![]()

![]() (ωi, ωo); this function on S2—let’s call it Fωi—can be expressed in spherical harmonics:

(ωi, ωo); this function on S2—let’s call it Fωi—can be expressed in spherical harmonics:

If we chose a different ωi, we could repeat the process; this would get us a different collection of coefficients {cj}. We thus see that the coefficients cj depend on ωi; we can think of these as functions of ωi and write

Now each function ωi ![]() cj(ωi) is itself a function on the sphere, and can be written as a sum of spherical harmonics. We write

cj(ωi) is itself a function on the sphere, and can be written as a sum of spherical harmonics. We write

Substituting this expression into Equation 31.61, we get

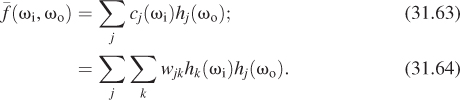

The advantage of this form of the expression is that when we evaluate the integral at the center of the rendering equation, namely,

both L and fs are expressed in the spherical harmonic basis. This will soon let us evaluate the integral very efficiently. Note, however, that in expressing the BRDF as a sum of harmonics, we were assuming that the BRDF was continuous; this either rules out any impulses (like mirror reflection), or requires that we replace all equalities above by approximate equalities.

Unfortunately, while L may be expressed with respect to the global coordinate system, fs is usually expressed in a coordinate system whose x- and z-directions lie in the surface, and whose y-direction is the normal vector. Transforming L’s spherical-harmonic expansion into this local coordinate system requires some computation; it’s fairly straightforward for low-degree harmonics, but it gets progressively more expensive for higher degrees. If we write the field radiance L at point P in spherical harmonics in this local coordinate system (absorbing a minus sign as we do so),

then the central integral takes the form

The integral in this expression is 0 if j and m differ, and 1 if they’re the same. So the entire expression simplifies to express the surface radiance in direction ωo as

The inner sum can be seen as a matrix product between the row vector u of the coefficients of the field radiance and the matrix of coefficients for the cosine-weighted BRDF. The product vector provides the coefficients for the surface radiance in terms of spherical harmonics in the local coordinate system.

At the cost of expressing the BRDF and field radiance in terms of spherical harmonics, we’ve converted the central integral into a matrix multiplication. This is another instance of the Basis principle: Things are often simpler if you choose a good basis. In particular, if you’re likely to be integrating products, a basis like the spherical harmonics, in which the basis functions are pairwise orthogonal, is especially useful.

If the field radiance can be assumed to be independent of position (e.g., if most light comes from a partly overcast sky), then the major cost in this approach is transforming the spherical harmonic expression for field radiance in global coordinates to local coordinates. If not, there’s the further problem of converting surface radiance at one point, expressed in terms of spherical harmonics there, into field radiance at other points, expressed in terms of spherical harmonics at those points.

This approach, which we’ve only sketched here, has been developed thoroughly by Ramamoorthi [RH01]. There are several important challenges.

• There is a conversion of harmonic decompositions in different coordinate systems, which we’ve already described.

• We replaced the work of integration with the work of matrix multiplication; that’s only a good idea if the matrix size is not too large. Unfortunately, the more “spiky” a function is, the more terms you need in its spherical-harmonic series to approximate it well (just as we need high-frequency sines and cosines to approximate rapidly changing functions in one dimension). Since many BRDFs are indeed spiky (mirror reflectance is a particularly difficult case), a good approximation may require a great many terms, making the matrix multiplication expensive, especially if the matrix is not sparse.

• We’ve ignored a critical property of irradiance at surfaces: It’s nonzero only on the upper hemisphere. That makes the equator generally a line of discontinuity, and fitting spherical harmonics to discontinuities is difficult: A good fit requires more terms.