Chapter 36. Visibility Determination

36.1. Introduction

Determining the visible parts of surfaces is a fundamental graphics problem. It arises naturally in rendering because rendering objects that are unseen is both inefficient and incorrect. This problem is called either visible surface determination or hidden surface removal, depending on the direction from which it is approached.

The two distinct goals for visibility are algorithm correctness and efficiency. A visibility algorithm responsible for the correctness of rendering must exactly determine whether an unobstructed line of sight exists between two points, or equivalently, the set of all points to which one point has an unobstructed line of sight. The most intuitive application is primary visibility: Solve visibility exactly for the camera. Doing so will only allow the parts of the scene that are actually visible to color the image so that the correct result is produced. Ray casting and the depth buffer are by far the most popular methods for ensuring correct visibility today.

A conservative visibility algorithm is designed for efficiency. It will distinguish the parts of the scene that are likely visible from those that are definitely not visible, with respect to a point. Conservatively eliminating the nonvisible parts reduces the number of exact visibility tests required but does not guarantee correctness by itself. When a conservative result can be obtained much more quickly than an exact one, this speeds rendering if the conservative algorithm is used to prune the set that will be considered for exact visibility. For example, it is more efficient to identify that the sphere bounding a triangle mesh is behind the camera, and therefore invisible to the camera, than it is to test each triangle in the mesh individually.

Backface culling and frustum culling are two simple and effective methods for conservative visibility testing; occlusion culling is a more complex refinement of frustum culling that takes occlusion between objects in the scene into account. Sophisticated spatial data structures have been developed to decrease the cost of conservative visibility testing. Some of these, such as the Binary Space Partition (BSP) tree and the hierarchical depth buffer, simultaneously address both efficient and exact visibility by incorporating conservative tests into their iteration mechanism. But often a good strategy is to combine a conservative visibility strategy for efficiency with a precise one for correctness.

![]() The Culling Principle

The Culling Principle

It is often efficient to approach a problem with one or more fast and conservative solutions that narrow the space by culling obviously incorrect values, and a slow but exact solution that then needs only to consider the fewer remaining possibilities.

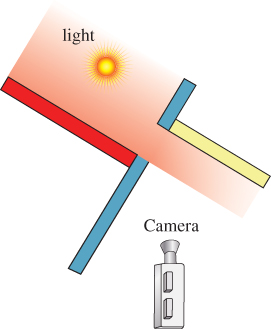

Primary visibility tells us which surfaces emit or scatter light toward a camera. They are the “last bounce” locations under light transport and are the only surfaces that directly affect the image. However, keep in mind that a global illumination renderer cannot completely eliminate the points that are invisible to the camera. This is because even though a surface may not directly scatter light toward the camera, it may still affect the image. Figure 36.1 shows an example in which removing a surface that is invisible to the camera changes the image, since that surface casts light onto surfaces that are visible to the camera. Another example is a shadow caster that is not visible, but casts a shadow on points that are visible to the camera. Removing the shadow caster from the entire rendering process would make the shadow disappear. So, primary visibility is an important subproblem that can be tackled with visibility determination algorithms, but it is not the only place where we will need to apply those algorithms.

Figure 36.1: The yellow wall is illuminated only by light reflected from the hidden red polygon. Removing it will cause the yellow wall to be illuminated only by light from the blue surface.

The importance of indirect influence on the image due to points not visible to the camera is why we define exact visibility as a property that we can test for between any pair of points, not just between the camera and a scene point. A rendering algorithm incorporating global illumination must consider the visibility of each segment of a transport path from the source through the scene to the camera. Often the same algorithms and data structures can be applied to primary and indirect visibility. For example, the shadow map from Chapter 15 is equivalent to a depth buffer for a virtual camera placed at a light source.

There are of course nonrendering applications of algorithms originally introduced for visibility determination. Collision detection for the simulation of fast-moving particles like bullets and raindrops is often performed by tracing rays as if they were photons. Common modeling intersection operations such as cutting one shape out of another are closely related to classic visibility algorithms for subdividing surfaces along occlusion lines.

The motivating examples throughout this chapter emphasize primary visibility. That’s because it is perhaps the most intuitive to consider, and because the camera’s center of projection is often the single point that appears in the most visibility tests. For each example, consider how the same principles apply to general visibility tests. As you read about each data structure, think in particular about how many visibility tests at a point are required to amortize the overhead of building that data structure.

In this chapter, we first present a modern view of visibility following the light transport literature. We formally frame the visibility problem as an intersection query for a ray (“What does this ray hit first?”) and as a visibility function on pairs of points (“Is Q visible from P?”). We then describe algorithms that can amortize that computation when it is performed conservatively over whole primitives for efficient culling. This isn’t the historical order of development. In fact, the topic developed in the opposite order.

Historically, the first notion of visibility was the question “Is any part of this triangle visible?” That grew more precise with “How much of this triangle is visible?” which was a critical question when all rendering involved drawing edges on a monochrome vector scope or rasterizing triangles on early displays and slow processors. With the rise of ray tracing and general light transport algorithms, a new visibility question was framed on points. That then gave a formal definition for the per-primitive questions, which expanded under notions of partial coverage to the framework encountered today. Of course, classic graphics work on primitives was performed with an understanding of the mathematics of intersection and precise visibility. The modern notion is just a redirection of the derivation: working up from points and rays with the rendering equation in mind, rather than down from surfaces under an ad hoc illumination and shading model.

36.1.1. The Visibility Function

Visible surface determination algorithms are grounded in a precise definition of visibility. We present this formally here in terms of geometry as the basis for the high-level algorithms. While it is essential for defining and understanding the algorithms, this direct form is rarely employed.

A performance reason that we can’t directly apply the definition of visibility is that with large collections of surfaces in a scene, exhaustive visibility testing would be inefficient. So we’ll quickly look for ways to amortize the cost across multiple surfaces or multiple point pairs.

A correctness concern with direct visibility is that under digital representations, the geometric tests involved in single tests are also very brittle. In general, it is impossible to represent most of the points on a line in any limited-precision format, so the answer to “Does this point occlude that line of sight?” must necessarily almost always be “no” on a digital computer. We can escape the numerical precision problem by working with spatial intervals—for example, line segments, polygons, and other curves—for which occlusion of a line of sight is actually representable, but we must always implicitly keep in mind the precision limitation at the boundaries of those intervals. Thus, the question of whether a ray passes through a triangle if it only intersects the edge is moot. In general, we can’t even represent that intersection location in practice, so our classification is irrelevant. So, beware that everything in this chapter is only valid in practice when we are considering potential intersections that are “far away” from surface boundaries with respect to available precision, and the best that we can hope for near boundaries is a result that is spatially coherent rather than arbitrarily changing within the imprecise region.

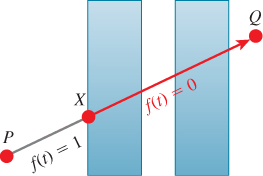

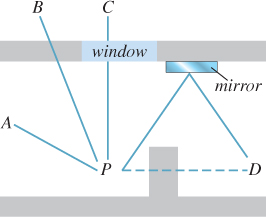

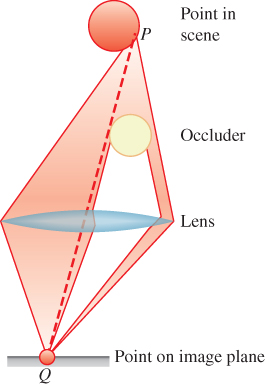

Given points P and Q in the scene, let visibility function V(P, Q) = 1 if there is no intersection between the scene and the open-ended line segment between P and Q, and V(P, Q) = 0 otherwise. This is depicted in Figure 36.2. Sometimes it is convenient to work with the occlusion function H(P, Q) = 1 – V(P, Q). The visibility function is necessarily symmetric, so V(P, Q) = V(Q, P).

Figure 36.2: V(P, A) = 1 because there is no occluder. V(P, B) = 0 because a wall is in the way. V(P, C) = 0 because, even though P can see C through the window, the window is an occluder as far as mathematical “visibility” is concerned. Likewise, V(P, D) = 0, even though P sees a reflection of D in the mirror.

Note that the “visibility” in “visibility function” refers strictly to geometric line-of-sight visibility. If P and Q are separated by a pane of glass, V(P, Q) is zero because a nonempty part of the scene (the glass pane) is intersected by the line segment between P and Q. Likewise, if an observer at Q has no direct line of sight to P, but can see P through a mirror or see its shadow, we still say that there is no direct visibility: V(P, Q) = 0.

Let X be the first scene point encountered along a ray with origin P in direction ![]() . Point X partitions the ray into two visibility ranges. To see this, define f to be visibility as a function of distance from Q, that is, let

. Point X partitions the ray into two visibility ranges. To see this, define f to be visibility as a function of distance from Q, that is, let ![]() . Between X and P, 0 ≤ t ≤ |X ≈ P|, there is visibility, so f(t) = 1 as shown in Figure 36.3. Beyond X, t > ||X – P||. For that domain there is no visibility because X is an occluder, so f(t) = 0.

. Between X and P, 0 ≤ t ≤ |X ≈ P|, there is visibility, so f(t) = 1 as shown in Figure 36.3. Beyond X, t > ||X – P||. For that domain there is no visibility because X is an occluder, so f(t) = 0.

Evaluating the visibility function is mathematically equivalent to finding that first occluding point X, given a starting point P and ray direction ![]() . The first occluding point along a ray is the solution to a ray intersection query. Chapter 37 presents data structures for efficiently solving ray intersection queries. It is not surprising that a common way to evaluate V(P, Q) is to solve for X. If X exists and lies on the line segment PQ, then V(P, Q) = 0; otherwise, V(P, Q) = 1.

. The first occluding point along a ray is the solution to a ray intersection query. Chapter 37 presents data structures for efficiently solving ray intersection queries. It is not surprising that a common way to evaluate V(P, Q) is to solve for X. If X exists and lies on the line segment PQ, then V(P, Q) = 0; otherwise, V(P, Q) = 1.

Some rendering algorithms explicitly evaluate the visibility function between pairs of points by testing for any intersection between a line segment and the surfaces in a scene. Examples include direct illumination shadow tests in a ray tracer and transport path testing in bidirectional path tracing [LW93, VG94], and Metropolis light transport [VG97].

Others solve for the first intersection along a ray instead, thus implicitly evaluating visibility by only generating the visible set of points. Examples include primary and recursive rays in a ray tracer and deferred-shading rasterizers. These are all algorithms with explicit visibility determination. They resolve visibility before computing transport (often called “shading” in the real-time rendering community) between points, thus avoiding the cost of scattering computations for points with no net transport. Simpler renderers compute transport first and rely on ordering to implicitly resolve visibility. For example, a naive ray tracer might shade every intersection encountered along a ray, but only retain the radiance computed at the one closest to the ray origin. This is equivalent to an (also naive) rasterization renderer that does not make a depth prepass. Obviously it is preferable to evaluate visibility before shading in cases where the cost of shading is relatively high, but whether to evaluate that visibility explicitly or implicitly greatly depends on the particular machine architecture and scene data structure. For example, rasterization renderers prefer a depth prepass today because the memory to store a depth buffer is now relatively inexpensive. Were the cost of a full-screen buffer very expensive compared to the cost of computation (as it once was, and might again become if resolution or manufacturing changes significantly), then a visibility prepass might be the naive choice and some kind of spatial data structure again dominate rasterization rendering.

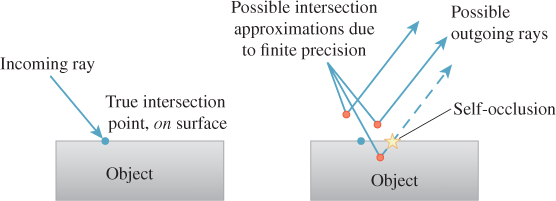

Observe that following conventions from the literature, we defined the visibility function on the open line segment that does not include its endpoints. This means that if the ray from P to Q first meets scene geometry at a point X different from P, then V(P, X) = 1. This is a convenient definition given that the function is typically applied to points on surfaces. Were we to consider the closed line segment, then there would never be any visibility between the surfaces of a scene—they would all occlude themselves. We would have to consider points slightly offset from the surfaces in light transport equations.

In practice, the distinction between open and closed visibility only simplifies the notation of transport equations, not implementations in programs. That is because rounding operations implicitly occur after every operation when working with finite-precision arithmetic, introducing small-magnitude errors (see Figure 36.5). So we must explicitly phrase all applications of the visibility function and all intersection queries with some small offset. This is often called ray bumping because it “bumps” the origin of the visibility test ray a small distance from the starting surface. Note that the bumping must happen on the other end as well. For example, to evaluate V(Q, P), attempt to find a scene point X = Q+S(Q – P)t for < t < |P–Q|–![]() . If and only if there is no scene point satisfying that constraint, then V(Q, P) = 1. Failing to choose a suitably large

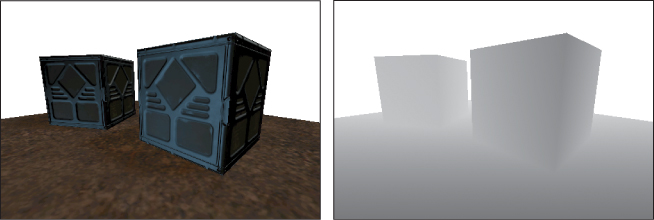

. If and only if there is no scene point satisfying that constraint, then V(Q, P) = 1. Failing to choose a suitably large ![]() value can produce artifacts such as shadow acne (i.e., self-shadowing), speckled highlights and reflections, and darkening of the indirect components of illumination shown in Figure 36.4. The noisy nature of these artifacts arises from the sensitivity of the comparison operations to the small-magnitude representation error in the floating-point values.

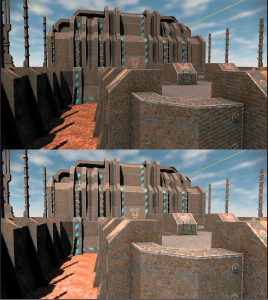

value can produce artifacts such as shadow acne (i.e., self-shadowing), speckled highlights and reflections, and darkening of the indirect components of illumination shown in Figure 36.4. The noisy nature of these artifacts arises from the sensitivity of the comparison operations to the small-magnitude representation error in the floating-point values.

Figure 36.4: (Top) Self-occlusion from insufficient numerical precision or offset values causes the artifacts of shadow acne and speckling in indirect illumination terms such as mirror reflections. (Bottom) The same scene with the shadow acne removed.

Figure 36.5: Finite precision leads to self-occlusions. “Bumping” the outgoing ray biases the representation error in a direction less likely to produce artifacts by favoring the points above the surface as the ray origin.

36.1.2. Primary Visibility

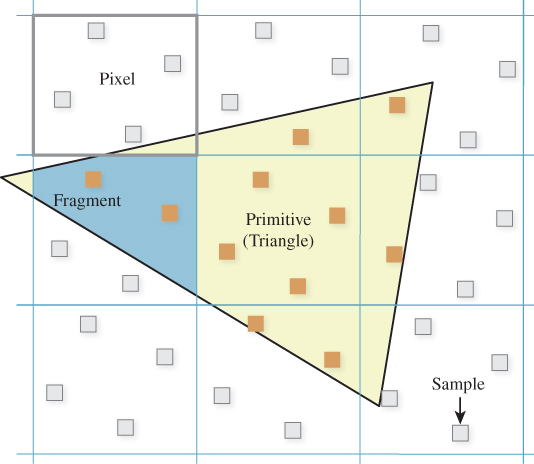

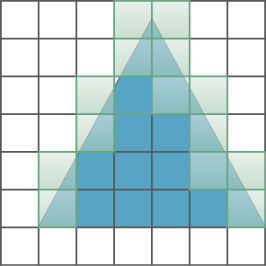

Primary visibility (a.k.a. eye ray visibility, camera visibility) is visibility between a point on the aperture of a camera and a point in the scene. To render an image, one visibility test must be performed per light ray sample on the image plane. In the simplest case, there is one sample at the center of each pixel. Computing multiple samples at each pixel often improves image quality. See Section 36.9 for a discussion of visibility in the presence of multiple samples per pixel.

A pinhole camera has a zero-area aperture, so for each sample point on the image plane there is only one ray along which light can travel. That is the primary ray for that point on the image plane. Consider three points on the primary ray: sample point Q on the imager, the aperture A, and a point P in the scene. Since there are no occluding objects inside the camera, V(Q, P) = V(A, P).

Since the visibility function evaluations or intersection queries at all samples share a common endpoint of the pinhole aperture, there are opportunities to amortize operations across the image. Ray packet tracing and rasterization are two algorithms that exploit this technique. For more details, see Chapter 15, which develops the amortized aspect of rasterization and presents the equivalence of intersection queries under rasterization and ray tracing.

36.1.3. (Binary) Coverage

Coverage is the special case of visibility for points on the image plane. For a scene composed of a single geometric primitive, the coverage of a primary ray is a binary value that is 1 if the ray intersects the primitive between the image plane and infinity, and 0 otherwise. This is equivalent to the visibility function applied to the primary ray origin and a point infinitely far away along the primary ray.

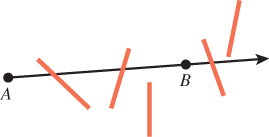

For a scene containing multiple primitives, primitives may occlude each other in the camera’s view. The depth complexity of a ray is the number of times it intersects the scene. At any given intersection point P, the quantitative invisibility [App67] is the number of other primitive intersections that lie between the ray origin and P. Figure 36.6 shows examples of each of these concepts.

Figure 36.6: The quantitative invisibility of two points is the number of surface intersections on the segment between them. The quantitative invisibility of B with respect to A is 2 in this figure. The depth complexity of a ray is the total number of surface intersections along the ray. The ray from A through B has depth complexity 3 in this figure.

For some applications, backfaces—surfaces where the dot product of the geometric normal and the ray direction is positive—are ignored when computing depth complexity and quantitative invisibility. The case where the intersection of the ray and a surface is a segment (e.g., Figure 36.7) instead of a finite number of points is tricky. We’ve already discussed that the existence of such an intersection is already suspect due to limited precision, and in fact for it to occur at all with nonzero probability requires us to have explicitly placed geometry in just the right configuration to make such an unlikely intersection representable. Of course, humans are very good at constructing exactly those cases, for example, by placing edges at perfectly representable integer coordinates and aligning surfaces with axes in ways that could never occur in data measured from the real world. We note that for a closed polygonal model, it is common to ignore these line-segment intersections, but the definition of depth complexity and quantitative invisibility for this case varies throughout the literature.

In the case of multiple primitive intersections, we say that the coverage is 1 at the first intersection and 0 at all later ones to match the visibility function definition.

At the end of this chapter, in Section 36.9, we extend binary coverage to partial coverage by considering multiple light paths per pixel.

36.1.4. Current Practice and Motivation

Most rendering today is on triangles. The triangles may be explicitly created, or they may be automatically generated from other shapes. Some common modeling primitives that are reducible to triangles are subdivision surfaces, implicit surfaces, point clouds, lines, font glyphs, quadrilaterals, and height field models.

Ray-tracing renderers solve exact visibility determination by ray casting (Section 36.2): intersecting the model with a ray to produce a sample. Data structures optimized for ray-triangle intersection queries are therefore important for efficient evaluation of the visibility function. Chapter 37 describes several of these data structures. Backface culling (Section 36.6) is implicitly part of ray casting.

Hardware rasterization renderers today tend to use frustum culling (Section 36.5), frustum clipping (Section 36.5), backface culling (Section 36.6), and a depth buffer (Section 36.3) for per-sample visible surface determination. Those methods provide correctness, but they require time linear in the number of primitives. So relying on them exclusively would not scale to large scenes. For efficiency it is therefore necessary to supplement those with conservative methods for determining occlusion and hierarchical methods for eliminating geometry outside the view frustum in sublinear time.

A handful of applications rely on the painter’s algorithm (Section 36.4.1) of simply drawing everything in the scene in back-to-front order and letting the ordering resolve visibility. This is neither exact nor conservative1, but it has the benefit of extreme simplicity. It is used almost exclusively for 2D graphical user interface rendering to handle overlapping windows. The primary 3D application of the painter’s algorithm today is for rasterization of translucent surfaces, although recent trends favor more accurate per-sample stochastic and lossy-volumetric alternatives [ESSL10, LV00, Car84, SML11, JB10, MB07].

1. . . . nor how artists actually paint—for example, sometimes the sky is painted after foreground objects—but the name is now both a technical term and appropriately evocative.

Many applications combine multiple visibility determination algorithms. For example, a hybrid renderer might rasterize primary and shadow rays but perform ray casting for visibility determination on other global illumination paths. A realtime hardware rasterization renderer might augment its depth buffer with hierarchical occlusion culling or precomputed conservative visibility. Many games rely on those techniques, but they include a ray-casting algorithm for visibility determination used to determine line of sight for character AI logic and physical simulation.

36.2. Ray Casting

Ray casting is a direct process for answering an intersection query. As previously shown, it also computes the visibility function: V(P, Q) = 1, if P is the result of the intersection query on the ray with origin Q and direction (P – Q)/|P – Q|; and V(P, Q) = 0 otherwise. Chapter 15 introduced an algorithm for casting rays in scenes described by arrays of triangles, and showed that the same algorithm can be applied to primary and indirect (in that case, shadow) visibility.

The time cost of casting a ray against n triangles in an array is O(n) operations. If V(P, Q) = 1, then the algorithm must actually test every ray-triangle pair. If V(P, Q) = 0, then the algorithm can terminate early when it encounters any intersection with the open segment PQ. In practice this means that computing the visibility function by ray casting may be faster than solving the intersection query; hence, resolving one shadow ray may be faster than finding the surface to shade for one ray from the camera. For a dense scene with high depth complexity, the performance ratio between them may be significant.

Terminating on any intersection still only makes ray casting against an array of surfaces faster by a constant, so it still requires O(n) operations for n surfaces. Linear performance is impractical for large and complex scenes, especially given that such scenes are exactly those in which almost all surfaces are not visible from a given point. Thus, there are few cases in which one would actually cast rays against an array of surfaces, and for almost all applications some other data structure is used to achieve sublinear scaling.

Chapter 37 describes many spatial data structures for accelerating ray intersection queries. These can substantially reduce the time cost of visibility determination compared to the linear cost under an array representation. However, building such a structure may only be worthwhile if there will be many visibility tests over which to amortize the build time. Constants vary with algorithms and architectures, but for more than one hundred triangles or other primitives and a few thousand visibility tests, building some kind of spatial data structure will usually provide a net performance gain.

We now give an example of how a ray-primitive intersection query operates within the binary space partition tree data structure. The issues encountered in this example apply to most other spatial data structures, including bounding volume hierarchies and grids.

36.2.1. BSP Ray-Primitive Intersection

The binary space partition tree (BSP tree) [SBGS69, FKN80] is a data structure for arranging geometric primitives based on their locations and extents. Chapter 37 describes in detail how to build and maintain such a structure, and some alternative data structures. The BSP tree supports finding the first intersection between a ray and a primitive. The algorithm for doing this often has only logarithmic running time in the number of primitives. We say “often” because there are many pathological tree structures and scene distributions that can make the intersection time linear, but these are easily avoidable for many scenes. This logarithmic scaling makes ray casting practical for large scenes.

The BSP tree can also be used to compute the visibility function. The algorithm for this is nearly identical to the first-intersection query. It simply terminates with a return value of false when any intersection is detected, and returns true otherwise.

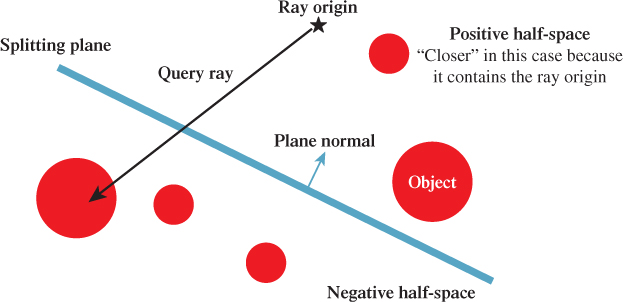

There are a few variations on the BSP structure. For the following example, we consider a simple one to focus on the algorithm. In our simple tree, every internal node represents a splitting plane (which is not part of the scene geometry) and every leaf node represents a geometric primitive in the scene. A plane divides space into two half-spaces. Let the positive half-space contain all points in the plane and on the side to which the normal points. Let the negative half-space contain all points in the plane and on the side opposite to which the normal points. Figure 36.8 shows one such plane (for a 2D scene, so the “plane” is a line). Both the positive and negative half-spaces will be subdivided by additional planes when creating a full tree, until each sphere primitive is separated from the others by at least one plane.

Figure 36.8: The splitting plane for a single internal BSP node divides this scene composed of five spheres into two half-spaces.

The internal nodes in the BSP tree have at most two children, which we label positive and negative. The construction algorithm for the tree ensures that the positive subtree contains only primitives that are in the positive half-space of the plane (or on the plane itself) and that the negative subtree contains only those in the negative half-space of the plane. If a primitive from the scene crosses a splitting plane, then the construction algorithm divides it into two primitives, split at that plane.

Listing 36.1 gives the algorithm to evaluate the visibility function in this simple BSP tree. The recursive intersects function performs the work. Point Q is visible to point P if no intersection exists between the line segment PQ and the geometry in the subtree with node at its root. When node is a leaf, it contains one geometric primitive, so intersects tests whether the line-primitive intersection is empty. Chapter 7 describes intersection algorithms that implement this test for different types of geometric primitives, and Chapter 15 contains C++ code for ray-triangle intersection.

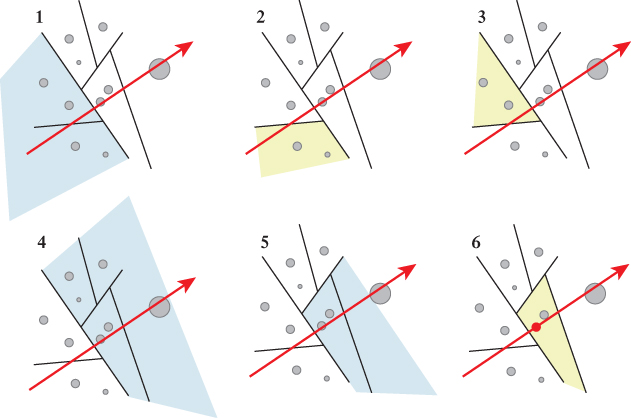

Figure 36.9 visualizes the algorithm’s iteration through a 2D tree for a scene consisting of disks.

Figure 36.9: Tracing a ray through a scene containing disks stored in a 2D BSP tree. Highlighted portions of space correspond to the node at which the algorithm is operating in each step. Iteration proceeds depth-first, preferring to descend into the geometrically closer of the two children at each node.

If node is an internal node, then it contains a splitting plane that creates two half-spaces. We categorize the child nodes corresponding to these as being closer and farther with respect to P. Figure 36.8 shows an example classification at an internal node. With an eye toward reusing this algorithm’s structure for the related problem of finding the first intersection, we choose to visit the closer node first. That is because if there is any intersection between segment PQ and the scene in closer, it must be closer to P than every intersection in farther [SBGS69].

If PQ lies entirely in one half-space, the result of the intersection test for the current node reduces to the result of the test on that half-space. Otherwise, there is an intersection if one is found in either half-space, so the algorithm recursively visits both.

Listing 36.1: Pseudocode for visibility testing in a BSP tree.

1 function V(P, Q):

2 return not intersects(P, Q, root)

3

4 function intersects(P, Q, node):

5 if node is a leaf:

6 return (PQ intersects the primitive at the node)

7

8 closer = node.positiveChild

9 farther = node.negativeChild

10

11 if P is in the negative half-space of node:

12 // The negative side of the plane is closer to P

13 swap closer, farther

14

15 if intersects(P, Q, closer):

16 // Terminate early because an intersection was found

17 return true

18

19 if P and Q are in the same half-space of node:

20 // Segment PQ does not extend into the farther side

21 return false

22

23 // After searching the closer side, recursively search

24 // for an intersection on the farther side

25 return intersects(P, Q, farther)

The visibility testing code assumes that there’s a closer and a farther half-space. What will this code do when the splitting plane contains the camera point P? Will it still perform correctly? If not, what modifications are needed?

In the worst case the routine must visit every node of the tree. In practice this rarely occurs. Typically, PQ is small with respect to the size of the scene and the planes carve space into convex regions that do not all lie along the same line. So we expect a relatively tight depth-first search with runtime proportional to the height of the tree.

There are many ways to improve the performance of this algorithm by a constant factor. These include clever algorithms for constructing the tree and extending the binary tree to higher branching factors. For sparse scenes, alternative spatial partitions can be advantageous. The convex spaces created by the splitting planes often have a lot of empty space compared to the volumes bounded by the geometric primitives within them in a BSP tree. A regular grid or bounding volume hierarchy may increase the primitive density within leaf nodes, thus reducing the number of primitive intersections performed.

Where BSP tree iteration is limited by memory bandwidth, substantial savings can be gained by using techniques for compressing the plane and node pointer representation [SSW+06].

36.2.2. Parallel Evaluation of Ray Tests

The previous analysis considered serial processing on a single scalar core. Parallel execution architectures change the analysis. Tree search is notoriously hard to execute concurrently for a single query. Near the root of a tree there isn’t enough work to distribute over multiple execution units. Deeper in the tree there is plenty of work, but because the lengths of paths may vary, the work of load balancing across multiple units may dominate the actual search itself. Furthermore, if the computational units share a single global memory, then the bandwidth constraints of that memory may still limit net performance. In that case, adding more computational units can reduce net performance because they will overwhelm the memory and reduce the coherence of memory access, eliminating any global cache efficiency.

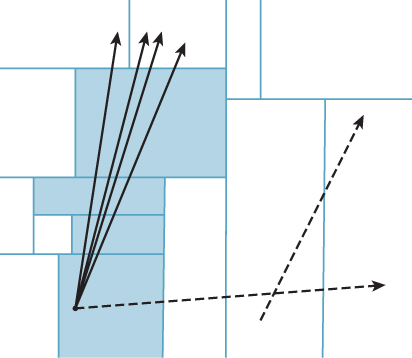

There are opportunities for scaling nearly linearly in the number of computational units when performing multiple visibility queries simultaneously, if they have sufficient main memory bandwidth or independent on-processor caches. In this case, all threads can search the tree in parallel. Historically the architectures capable of massively parallel search have required some level of programmer instruction batching, called vectorization. Also called Single Instruction Multiple Data (SIMD), vector instructions mean that groups of logical threads must all branch the same way to achieve peak computational efficiency. When they do branch the same way, they are said to be branch-coherent; when they do not, they are said to be divergent. In practice, branch coherence is also a de facto requirement for any memory-limited search on a parallel architecture, since otherwise, executing more threads will require more bandwidth because they will fetch different values from memory.

There are many strategies for BSP search on SIMD architectures. Two that have been successful in both research and industry practice are ray packet tracing [WSBW01, WBS07, ORM08] and megakernel tracing [PBD+10]. Each essentially constrains a group of threads to descend the same way through the tree, even if some of the threads are forced to ignore the result because they should have branched the other way (see Figure 36.10). There are some architecture-specific subtleties about how far to iterate before recompacting threads based on their coherence, and how to schedule and cache memory results.

Figure 36.10: Packets of rays with similar direction and origin may perform similar traversals of a BSP tree. Processing them simultaneously on a parallel architecture can amortize the memory cost of fetching nodes and leverage vector registers and instructions. Rays that diverge from the common traversal (illustrated by dashed lines) reduce the efficiency of this approach.

Adding this kind of parallelism is more complicated than merely spawning multiple threads. It is also hard to ignore when considering high-performance visibility computations. At the time of this writing, vector instructions yield an 8x to 32x peak performance boost over scalar or branch-divergent instructions on the same processors. Fortunately, this kind of low-level visibility testing is increasingly provided by libraries, so you may never have to implement such an algorithm outside of an educational context. From a high level, one can look at hardware rasterization as an extreme optimization of parallel ray visibility testing for the particular application of primary rays under pinhole projection.

36.3. The Depth Buffer

A depth buffer [Cat74] is a 2D array parallel to the rendered image. It is also known as a z-buffer, w-buffer, and depth map. In the simplest form, there is one color sample per image pixel, and one scalar associated with each pixel representing some measure of the distance from the center of projection to the surface that colored the pixel.

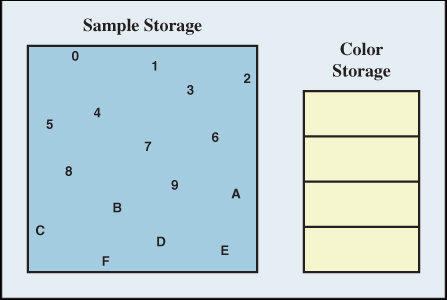

To reduce the aliasing arising from taking a single sample per pixel, renderers frequently store many color and depth samples within a pixel. When resolving to an image for display, the color values are filtered (e.g., by averaging them), and the depth values are discarded. A variety of strategies for efficient rendering allow more independent depth samples than color samples, separating “shading” (color) from “coverage” (visibility). This section is limited to discussions of a single depth sample per pixel. We address strategies for multiple samples in Section 36.9.

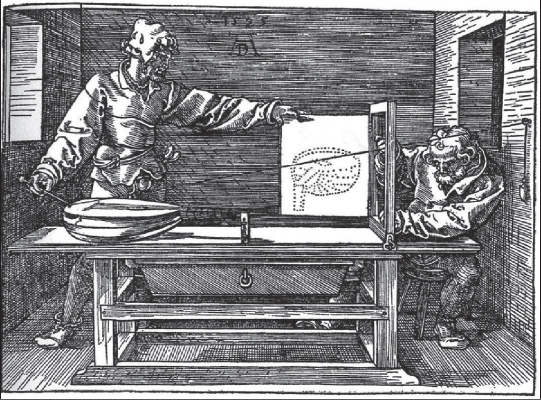

Figure 36.11 reproduces Dürer’s etching of himself and an assistant manually rendering a musical instrument under perspective projection. We have seen and referred to this classic etching before. In it, one man holds a pen at the location where a string crosses the image plane to dot a canvas that corresponds to our color buffer. The pulley on the wall is the center of projection and the string corresponds to a ray of light. Now note the plumb bob on the other side of the pulley. It maintains tension in the string. Dürer’s primary interest was the 2D image produced by marking intersections of that string with the image plane. But, as we noted in Chapter 3, this apparatus can in fact measure more than just the image. Consider what would happen if the artist were to annotate each point he marked on the image plane with the length of string between the plumb bob and the pulley corresponding to that point. He would then record a depth buffer for the scene, encoding samples of all visible three-dimensional geometry.

Dürer’s artist had little need of a depth buffer for a single image. The physical object in front of him ensured correct visibility. However, given two images with depth buffers, he could have composited them into a single scene with correct visibility at each point. At each sample, only the nearer depth value (which in this case means a longer string below the pulley) could be visible in the combined scene. Our rendering algorithms work with virtual objects and lack the benefit of automatic physical occlusion. For simple convex or planar primitives such as points and triangles we know that each primitive does not occlude itself. This means we can render a single image plus depth buffer for each primitive without any visibility determination. The depth buffer allows us to combine rendering of multiple primitives and ensure correct visibility at each sample point.

The depth buffer is often visualized with white values in the distance and black values close to the camera, as if black shapes were emerging from white fog (see Figure 36.12). There are many methods for encoding the distance. The end of this section describes some that you may encounter. Depth buffers are commonly employed to ensure correct visibility under rasterization. However, they are also useful for computing shadowing and depth-based post-processing in other rendering frameworks, such as ray tracers.

There are three common applications of a depth buffer in visibility determination. First, while rendering a scene, the depth buffer provides implicit visible surface determination. A new surface may cover a sample only if its camera-space depth is less than the value in the depth buffer. If it is, then that new surface overwrites the color in the depth buffer and its depth value overwrites the depth in the depth buffer. This is implicit visibility because until rendering completes it is unknown what the closest visible surface is at a sample, or whether a given surface is visible to the camera. Yet when rendering is complete, correct visibility is ensured.

Second, after the scene is rendered, the depth buffer describes the first scene intersection for any ray from the center of projection through a sample. Because the position of each sample on the image plane and the camera parameters are all known, the depth value of a sample is the only additional information needed to reconstruct the 3D position of the sample that colored it.

Third, after the scene is rendered, the depth buffer can directly evaluate the visibility function relative to the center of projection. For camera-space point Q, V((0, 0, 0), Q) = 1 if and only if the depth value at the projection of Q is less than the depth of Q.

The second and third applications deserve some more explanation of why one would want to solve visibility queries after rendering is already completed. Many rendering algorithms make multiple passes over the scene and the framebuffer. The ability to efficiently evaluate ray intersection queries and visibility after an initial pass means that subsequent rendering passes can be more efficient. One common technique exploiting this is the depth prepass [HW96]. In that pass, the renderer renders only the depth buffer, with no shading computations performed. Such a limited rendering pass may be substantially more efficient than a typical rendering pass, for two reasons. First, fixed-function circuitry can be employed because there is no shading. Second, minimal memory bandwidth is required when writing only to the depth buffer, which is often stored in compressed form [HAM06].

Note that a depth buffer must be paired with another algorithm such as rasterization for finding intersections of primary rays with the scene. Chapter 15 gives C++ code for ray casting and rasterization implementations of that intersection test. The rasterization implementation includes the code for a simple depth buffer. That implementation assumes that all polygons lie beyond the near clipping plane (see Chapter 13 for a discussion of clipping planes). This is to work around one of the drawbacks of the depth buffer: It is not a complete solution for visibility. Polygons need to be clipped against the near plane during rasterization to avoid the projection singularity at z = 0. The depth buffer can represent depth values behind the camera; however, rasterization algorithms are awkward and often inefficient to implement on triangles before projection. As a result, most rasterization algorithms pair a depth buffer with a geometric clipping algorithm. That geometric algorithm effectively performs a conservative visibility test by eliminating the parts of primitives that lie behind the camera before rasterization. The depth buffer then ensures correctness at the screen-space samples.

The depth buffer has proved to be a powerful solution for screen-space visibility determination. It is so powerful that not only has it been built in dedicated graphics circuitry since the 1990s, but it has also inspired many image-space techniques. Image space is a good place to solve many graphics problems because solving at the resolution of the output avoids excessive computation. In exchange for a constant memory factor overhead, many algorithms can run in time proportional to the number of pixels and sublinear to, if not independent of, the scene complexity. That is a very good algorithmic tradeoff. Furthermore, geometric algorithms are susceptible to numerical instability as infinitely thin rays and planes pass near one another on a computer with finite precision. This makes rasterization/image-space methods a more robust way of solving many graphics problems, albeit at the expense of aliasing and quantization in the result.

If there are T triangles in the scene and P pixels in the image, under what conditions on T and P would you expect image-space methods to be a good approach to visibility or related problems?

Image-space algorithms seem like a panacea. Describe a situation in which the discrete nature of image-space data makes it inappropriate for solving a problem.

36.3.1. Common Depth Buffer Encodings

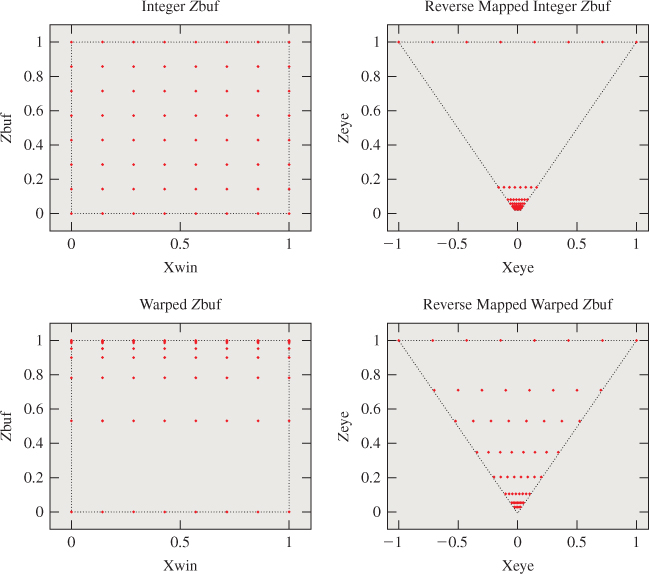

Broadly speaking, there are two common choices for encoding depth: hyperbolic in camera-space z, and linear in camera-space z. Each has several variations for scaling conventions within the mapping. All have the property that they are monotonic, so the comparison z1 < z2 can be performed as m(z1) < m(z2) (perhaps with negation) so that the inverse mapping is not necessary to implement correct visibility determination.

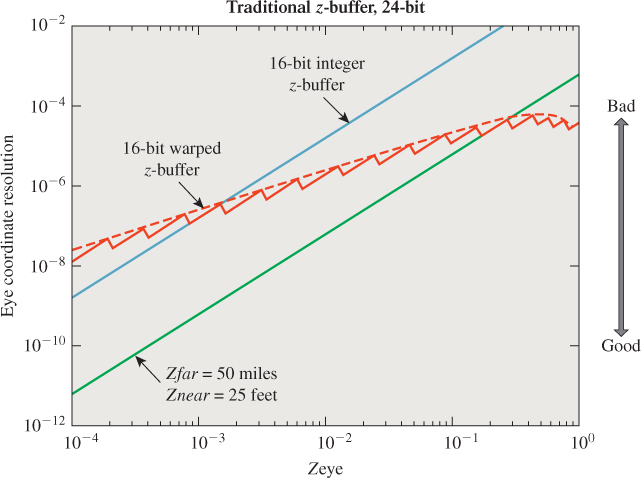

There are many factors to weigh in choosing a depth encoding. The operation count of encoding and decoding (for depth-based post-processing) may be significant. The underlying numeric representation, that is, floating point versus fixed point, affects how the mapping ultimately reduces to numeric precision. The dominant factor is often the relative amount of precision with respect to depth. This is because the accuracy of the visibility determination provided by a depth buffer is limited by its precision. If two surfaces are so close that their depths reduce to the same digital representation, then the depth buffer is unable to distinguish which is closer to the ray origin or camera. This means that the visibility determination will be arbitrarily resolved by primitive ordering or by small roundoff errors in the intersection algorithm. The resultant artifact is the appearance of individual samples with visibility results inconsistent with their neighbors. This is called z-fighting. Often z-fighting artifacts reveal the iteration order of the rasterizer or other intersection algorithm, which tends to cause regular patterns of small bias in depth. Different mappings and underlying numerical representation for depth vary the amount of precision throughout the scene. Depending on the kind of scene and rendering application, it may be desirable to have more precision close to the camera, uniform precision throughout, or possibly even high precision at some specific depth. Akeley and Su give an extensive and authoritative treatment [AS06] of this topic. We summarize the basic ideas of the common mappings here and show Figures 36.13 and 36.14 by them to give a sense of the impact of representation on precision throughout the frustum.

Figure 36.13: The points in (x, z bufferValue) space that are exactly representable under fixed-point, reverse-mapped fixed-point, and floating-point schemes. Fixed-point representations result in wildly varying depth precision with respect to screen-space x (or y).

Figure 36.14: Comparison of precision versus depth for various z-buffer representations: 24-bit fixed point (green) is obviously strictly more accurate than 16-bit fixed point (blue); 16-bit floating point is more accurate than 16-bit fixed point when far from the camera (on the right), but has less precision very near to the camera (on the left). The blue and green curves are lines in log-log space, but would appear as hyperbolas in a linear plot. The red floating-point line is jagged because floating-point spacing is uniform within a single exponent and then jumps at the next exponent; the red curve is a smoothed trendline.

Following (arbitrary) OpenGL conventions, for the following definitions let z be the position on the camera-space z-axis of the point coloring the sample. It is always a negative value. Let the far and near clipping planes be at zf = −f and zn = − n.

36.3.1.1. Hyperbolic

The classic graphics choice describes a hyperbolically scaled normalized value arising from a projection matrix. This is typically called the z-buffer because it stores the z-component of points after multiplication by an API-specified perspective projection matrix and homogeneous division. This representation is also known as a warped z-buffer because it distorts world-space distances.

The OpenGL convention maps −n to 0, −f to 1, and values in between hyperbolically by

Direct3D maps to the interval [−1, 1] by

These mappings assign relatively more precision close to the near plane (where z-fighting artifacts may be more visible), have a normalized range that is appropriate for fixed-point implementation, and are expressible as a matrix multiplication followed by a homogeneous division. The amount of precision close to the near plane is based on the relative distance of the near and far planes from the center of projection. As the near plane moves closer to the center of projection, all precision rapidly shifts toward it, giving poor depth resolution deep in the scene.

A complementary or reversed hyperbolic [LJ99] encoding maps the far plane to the low end of the range and the near plane to the high end. For a fixed-point representation this is usually undesirable because nearby objects would receive higher depth representation errors, but under a floating-point representation this assigns nearly equal accuracy throughout the scene.

Another advantage of the nonlinear depth range is that it is possible to take the limit of the mapping as f ![]() ∞ [Bli93]. This allows a representation of depth within an infinite frustum using finite precision.

∞ [Bli93]. This allows a representation of depth within an infinite frustum using finite precision.

For n = 1m, f = 101m, compute the range of z-values within the view frustum that map to [0, 0.9] under the OpenGL projection matrix. Repeat the exercise for n = 0.1m. How would this inform your choice of near and far plane locations? What is the drawback of pushing the near plane farther into the scene?

This was the preferred depth encoding until fairly recently. It was preferred because it is mathematically elegant and efficient in fixed-function circuitry to express the entire vertex transformation process as a matrix product. However, the widespread adoption of programmable vertex transformations and floating-point buffers in consumer hardware has made other formats viable. This reopened a classic debate on the ideal depth buffer representation. Of course, the ideal representation depends on the application, so while this mapping may no longer be preferred for some applications, it remains well suited for others. More than storage precision is at stake. For example, algorithms that expect to read world-space distances from the depth buffer pay some cost to reconstruct those values from warped ones, and the precision of the world-space value and cost of recovering it may be significant considerations.

Linear The terms linear z, linear depth, and w-buffer describe a family of possible values that are all linear in z. The “w” refers to the w-component of a point after multiplication by a perspective projection matrix but before homogeneous division.

These representations include the direct z-value for convenience; the positive “depth” value –z; the normalized value (z + n)/(n – f) that is 0 at the near plane and 1 at the far plane; and 1 – (z + n)/(n – f), which happens to have nice precision properties in floating-point representation [LJ99]. In fixed point these give uniform world-space depth precision throughout the camera frustum, which makes z-fighting consistent in depth and can simplify the process of assigning decal offsets and other “epsilon” values. Linear depth is often conceptually (and computationally!) easier to work with in pixel shaders that require depth as an input. Examples include soft particles [Lor07] and screen-space ambient occlusion [SA07].

36.4. List-Priority Algorithms

The list-priority algorithms implicitly resolve visibility by rendering scene elements in an order where occluded objects have higher priority, and are thus hidden by overdraw later in the rendering process. These algorithms were an important part of the development of real-time mesh rendering.

Today list-priority algorithms are employed infrequently because better alternatives are available. Spatial data structures can explicitly resolve visibility for ray casts. For rasterization, the memory for a depth buffer is now fast and inexpensive. In that sense, brute force image-space visibility determination has come to dominate rasterization. But the depth buffer also supports an intelligent algorithmic choice. Early depth tests and early depth rendering passes avoid the inefficiency of overdrawing samples, and today’s renderers spend significantly more time shading samples than resolving visibility for them because shading models have grown very sophisticated. So a list-priority visibility algorithm that increases shading time is making the expensive part of rendering more expensive. Despite their current limited application, we discuss three list-priority algorithms.

However, the implicit and refreshingly simple approach of implicit visibility by priority is a counterpoint to the relative complexity of something like hierarchical occlusion culling. There are also some isolated applications, especially graphics for nonraster output, where list priority may be the right approach. We find that the painter’s algorithm is embedded in most user interface systems, and is often encountered even in sophisticated 3D renderers for handling issues like transparency when memory or render time is severely limited.

Some list-priority algorithms are heuristics that often generate a correct ordering, but fail in some cases. Others produce an exact ordering, which may require splitting the input primitives to resolve cases such as Figure 36.15. There’s an important caveat for the algorithms that produce exact results: If we are going to do the work of subdivision, we can achieve more efficient rendering by simply culling all occluded portions, rather than just overdrawing them later in rendering. This was a popular approach in the 1970s. Area-subdivision algorithms such as Warnock’s Algorithm [War69] and the Weiler-Atherton Algorithm [WA77] eliminate culled areas in 2D. There are similar per-scanline 1D algorithms such as those by Wylie et al. [WREE67], Bouknight [Bou70], Watkins [Wat70], and Sechrest and Greenberg [SG81] that use an active edge table to maintain the current-closest polygon along a horizontal line. These were historically extended to single-line depth buffers [Mye75, Cro84]. The obvious trend ensued, and today all of these are largely ignored in favor of full-screen depth buffers. This is a cautionary tale for algorithm development in the long run, since the simplicity found in the depth buffer and painter’s algorithm leads to better performance and more practical implementation than decades of sophisticated visibility algorithms.

36.4.1. The Painter’s Algorithm

Consider a possible process for an artist painting a landscape. The artist paints the sky first, and then the mountains occluding the sky. In the foreground, the artist paints trees over the mountains. This is called the painter’s algorithm in computer graphics. Occlusion and visibility are achieved by overwriting colors due to distant points with colors due to nearer points. We can apply this idea to each sample location because at each sample there is always a correct back-to-front ordering of the points directly affecting it. In this case, the algorithm is potentially inefficient because it requires sorting all of the points, but it gives a correct result.

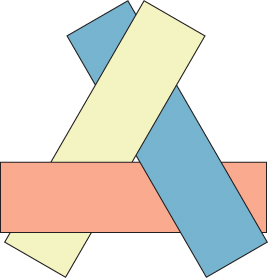

For efficiency, the painter’s algorithm is frequently applied to whole primitives, such as triangles. Here it fails as an algorithm. While primitives larger than points can often be ordered so as to give correct visibility, there are situations where this cannot be done. Figure 36.15 shows three triangles for which there is no correct back-to-front order. Here the “algorithm” is merely a heuristic, although it can be an effective one. If we allow subdividing primitives where their projections cross, then we can achieve an ordering. This is discussed in the following section.

Figure 36.15: Three convex polygons that cannot be rendered properly using the painter’s algorithm due to their mutual overlaps. At each point, a strict depth ordering exists, but there is no correct ordering of whole rectangles.

Despite its inability to generate either correct or conservative results in the general case, the painter’s algorithm is employed for some niche applications in computer graphics and is a useful concept in many cases. It is trivially simple, requires no space, and can operate out of core (provided the sort is implemented out of core). It is the predominant visibility algorithm in 2D user interfaces and presentation graphics. In these systems, all objects are modeled as lying in planes parallel to the image plane. For that special case, the primitives can always be ordered, and the ordering is trivial to achieve because those planes are typically parallel to the image plane.

When we render with a depth buffer and an early depth test, it is advantageous to encounter proximate surfaces before distant ones. Distant surfaces will then fail the early depth test where they are occluded, and not require shading. A reverse painter’s algorithm improves efficiency in that case: Render from front to back. A depth prepass eliminates the need for ordering. During the prepass itself the ordering provides a speedup. Surprisingly, for many models a static ordering of primitives can be precomputed that provides a good front-to-back ordering from any viewpoint [SNB07]. This allows the runtime performance without the runtime cost.

The painter’s algorithm is often employed for translucency, which can be modeled as fractional visibility values between zero and one, as done in OpenGL and Direct3D. Compositing translucent surfaces from back to front allows a good approximation of their fractional occlusion of each other and the background in many cases. However, stochastic methods yield more robust results for this case at the expense of noise and a larger memory footprint. See Section 36.9 for a more complete discussion.

36.4.2. The Depth-Sort Algorithm

Newell et al.’s depth-sort algorithm [NNS72] extends the painter’s algorithm for polygons to produce correct output in all cases. It operates in four steps.

1. Assign each polygon a sort key equal to the camera-space z-value of the vertex farthest from the viewport.

2. Sort all polygons from farthest to nearest according to their keys.

3. Detect cases where two polygons have ambiguous ordering. Subdivide such polygons until the pieces have an explicit ordering, and place those in the sort list in the correct priority order.

4. Render all polygons in priority order, from farthest to nearest.

The ordering of two polygons is considered ambiguous under this algorithm if their z-extents and 2D projections (i.e., their homogeneous clip-space, axis-aligned bounding boxes) overlap and one polygon intersects the plane of the other.

36.4.3. Clusters and BSP Sort

Consider a scene defined by a set of polygons and a viewer using a pinhole projection model. Schumacker [SBGS69] noted that a plane passing through the scene that does not intersect any polygons divides them into two sets. Those polygons on the same side of the plane as the viewer must be strictly closer to the viewer, and thus cannot be occluded by those polygons on the farther side. He grouped polygons into clusters, and recursively subdivided them when suitable partition planes could not be found. Within each cluster he precomputed a viewer-independent ordering (see later work by Sander et al. [SNB07] on a related problem), and employed a special-purpose rasterizer that followed these orderings.

Fuchs, Kedem, and Naylor [FKN80] generalized these ideas into the binary space partition tree. We have already discussed in this chapter how BSP trees can solve visible surface determination by accelerating ray-primitive intersection. They can also be applied in the context of a list-priority algorithm, and that was their original motivating application. (We will shortly see two more applications of BSP trees to visibility: portals and mirrors, and precomputed visibility.)

The same logic found in the ray-intersection algorithm applies to the list-priority rendering with a BSP tree; we are conceptually performing ray intersection on all possible view rays. Listing 36.2 gives an implementation that sorts all polygons from farthest to nearest, given a previously computed BSP tree. We can look at this as a variation on the depth-sort algorithm where we are guaranteed to never encounter the case requiring subdivision. We never need to subdivide during traversal because the tree’s construction already performed subdivision at partition planes between nearby polygons.

Listing 36.2: The list-priority algorithm for rendering polygons in a BSP tree with root node as observed by a viewer at P.

1 function BSPPriorityRender(P, node):

2 if node is a leaf:

3 render the polygon at node

4 return

5

6 closer = node.positiveChild

7 farther = node.negativeChild

8

9 if P is in the negative half-space of node:

10 swap closer, farther

11

12 BSPPriorityRender(P, farther)

13 BSPPriorityRender(P, closer)

36.5. Frustum Culling and Clipping

Assume that we rely on an exact method like a depth buffer or Newell et al.’s depth-sort algorithm for correct visibility under rasterization. To avoid writing to illegal or incorrect memory addresses, assume that we perform 2D scissoring to the viewport. This just means that the rasterizer may generate (x, y) locations that are outside the viewport, but we only allow it to write to memory at locations inside the viewport. We can also scissor in depth: No sample may be written whose depth indicates that it is behind the camera or past the far plane.

Recall that Chapter 13 showed that a rectangular viewport with near and far planes parallel to the image plane defines a volume of 3D space called the view frustum. This is a pyramid with a rectangular base and the top cut off. Clipping to the sides of the view frustum in 3D corresponds to clipping the projection of primitives to the viewport, and produces equivalent results to simply scissoring in 2D.

Scissoring alone ensures correctness, but it may lead to poor efficiency. For example, most primitives that are rasterized may fail the scissor test. There are three common approaches to increasing efficiency in this case that are related to the view frustum.

• Frustum culling: Eliminate polygons that are entirely outside the frustum, for efficiency.

• Near-plane clipping: Clip polygons against the near plane to enable simpler rasterization algorithms and avoid spending work on samples that fail depth scissoring.

• Whole-frustum clipping: Clip polygons to the side and far planes, for efficiency.

In general, it is a good strategy to use scissoring and clipping to complement each other. Use each only for the case where it has high efficiency and low implementation complexity. For example, use a coarse culling based on the view frustum followed by clipping to the near plane and scissoring in 2D. For primitives whose projections are small compared to the viewport, this leads to the scissor test usually passing, which means that most parts of most rasterized primitives for which significant computation is performed are usually on the screen.

36.5.1. Frustum Culling

Eliminating polygons outside the view frustum is simple. One 3D algorithm for this tests each vertex of a polygon against each plane bounding the view frustum. Assume that the planes are oriented so that the view frustum is the intersection of the six positive half-spaces. If there exists some plane for which all vertices of a polygon are in the negative half-space, then that polygon must lie entirely outside the view frustum and can be culled. For small polygons, it may not be efficient to perform this test on each polygon. For example, if a polygon affects at most one sample, then a 3D bounding box test on a single point yields the same result. So frustum culling may be performed on a bounding box hierarchy.

A drawback of the 3D frustum culling algorithm just described is that it may be too conservative. Polygons that are outside the view frustum but near a corner or edge may intersect multiple planes.

36.5.2. Clipping

36.5.2.1. Sutherland-Hodgman 2D Clipping

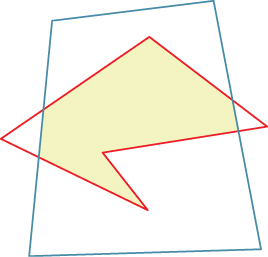

There are many clipping algorithms. Perhaps the simplest is the 2D variation of Sutherland’s and Hodgman’s [SH74] algorithm. It clips one (arbitrary) source polygon against a second, convex boundary polygon (see Figure 36.16). The algorithm proceeds by incrementally clipping the source against the line through each edge of the boundary polygon, as shown in Listing 36.3.

Figure 36.16: The red input polygon is clipped against the convex blue boundary polygon; the result is the boundary of the yellow shaded area.

Construct an example input polygon which, when clipped against the unit square by the Sutherland-Hodgman algorithm, produces a polygon with degenerate edges (i.e., edges that meet at a vertex v with an exterior angle of 180°).

For viewport clipping, Sutherland-Hodgman is applied to a projected polygon and the rectangle of the viewport. For projected polygons that have significant area outside the viewport, clipping to the viewport is an efficient alternative to scissor-testing each sampled point. The algorithm is further useful as a general geometric operation in many contexts, including modeling shapes in the first place.

Listing 36.3: Pseudocode for Sutherland-Hodgman clipping in 2D.

1 // The arrays are the vertices of the polygons.

2 // boundaryPoly must be convex.

3 function polyClip(Point sourcePoly[], Point boundaryPoly[]):

4 for each edge (A, B) in boundaryPoly:

5 sourcePoly = clip(sourcePoly, A, Vector(A.y-B.y, B.x-A.x))

6 return sourcePoly

7

8 // True if vertex V is on the "inside" of the line through P

9 // with normal n. The definition of inside depends on the

10 // direction of the y-axes and whether the winding rule is

11 // clockwise or counter-clockwise.

12 function inside(Point V, Point P, Vector n):

13 return (V - P).dot(n) > 0

14

15 // Intersection of edge CD with the line through P with normal n

16 function intersection(Point C, Point D, Point P, Vector n):

17 distance = (C - P).dot(n) / n.length()

18 t = (D - C).length()

19 return D* t + C* (1 - t)

20

21 // Clip polygon sourcePoly against the line through P with normal n

22 function clip(Point sourcePoly[], Point P, Vector n):

23 Point result[];

24

25 // Add the last point, if it is inside

26 D = sourcePoly[sourcePoly.length - 1]

27 Din = inside(D, P, n)

28 if (Din): result.append(D)

29

30 for (i = 0; i < sourcePoly.length; ++i) :

31 C = D, Cin = Din

32

33 D = sourcePoly[i]

34 Din = inside(D, P, n)

35

36 if (Din != Cin): // Crossed the line

37 result.append(intersection(C, D, P, n))

38

39 if (Din): result.append(D)

40

41 return result

The algorithm produces some degenerate edges, which don’t matter for polygon rasterization but can cause problems when we apply the same ideas in other contexts.

36.5.2.2. Near-Plane Clipping

The 2D Sutherland-Hodgman algorithm generalizes to higher dimensions. To clip a polygon to a plane, we walk the edges finding intersections with the plane. We can do this for the whole view frustum, processing one plane at a time. Consider just the step of clipping to the near plane for now, however.

In camera space, the intersections between polygon edges and the near plane are easy to find because the near plane has a simple equation: z = –n. In fact, this is exactly the same problem as clipping a polygon by a line, since we can project the problem orthogonally into either the xz- or yz-plane. We interpolate vertex attributes that vary linearly across the polygon linearly to the new vertices introduced by clipping, as if they were additional spatial dimensions. Listing 36.4 gives the details in pseudocode for clipping a polygon specified by its vertex list against the plane z = zn, where zn < 0.

Listing 36.4: Clipping of the polygon represented by the vertex array against the near plane z = zn.

1 function clipPolygon(inVertices, zn):

2 outVertices = []

3 Let start = inputVertices.last();

4 for end in inputVertices:

5 if end.z <= zn:

6 if start.z > zn:

7 // We crossed into the frustum

8 outVertices.append( clipLine(start, end, zn) )

9

10 // the endpoint of this edge is in the frustum

11 outVertices.append( end )

12

13 elif start.z <= zn:

14 // We crossed out of the frustum

15 outVertices.append( clipLine(start, end, zn) )

16

17 start = end

18

19 return outVertices

20

21

22 function clipLine(start, end, zn):

23 a = (zn - start.z) / (end.z - start.z)

24 // This holds for any vertex properties that we

25 // wish to linearly interpolate, not just position

26 return start * a + end * (1 - a)

36.5.3. Clipping to the Whole Frustum

Having clipped against the near plane, we are guaranteed that for every vertex in the polygon z < 0. This means that we can project the polygon into homogeneous clip space, mapping the frustum to a cube through perspective projection as described in Chapter 13.

We could continue with Sutherland-Hodgman clipping for the other frustum planes in 3D before projection. However, the side planes are not orthogonal to an axis the way that the near plane is, so clipping to those planes takes more operations per vertex. In comparison, after perspective projection every frustum plane is orthogonal to some axis, so clipping is just as efficient for a side plane as it was for the near plane. That is, clipping is again a 2D operation. Clipping to the far plane can be performed either before or after projection.

When clipping in Cartesian 3D space against the near plane, we were able to linearly interpolate per-vertex attributes such as texture coordinates. In homogeneous clip space, those attributes do not vary linearly along an edge, so we cannot directly linearly interpolate. However, the relationship is nearly as simple.

In practice, we don’t need all of those operations for each edge clipping operation. Instead, we can project every attribute by u′ = –u/z when projecting position. Then we can perform all clipping on the u′ attributes as if they were linear and all operations were 2D. Recall that rasterization needs to interpolate attributes in a perspective-correct fashion, so it operates on the u′ attributes along a scan line anyway (see The Depth Buffer). Only at the per-sample “shading” step do we return to the original attribute space, by computing u = –u′z with the hyperbolically interpolated z-value. Thus, in practice, the clipping (and rasterization) cost for 3D attributes is the same as for 2D attributes, and all of the 2D optimization techniques such as finite differences can be applied to the u′-values.

36.6. Backface Culling

The back of an opaque, solid object is necessarily hidden from direct line of sight from an observer. The object itself occludes the view rays. Culling primitives that lie on the backs of objects can therefore conservatively eliminate about half of the scene geometry. As pointed out previously, backface culling is a good optimization when computing the visibility function, but not for the entire rendering pipeline. When we consider the entire rendering pipeline, the image may be affected by points not directly visible to the camera, such as objects seen by their reflections in mirrors and shadows cast by objects outside the field of view. So, while backface culling is one of the first tools that we reach for when optimizing visibility, it is important to apply it at the correct level. One can occasionally glimpse errors arising from programs culling at the wrong stage, such as shadows disappearing when their caster is not in view.

Although backface culling could be applied to parametric curved surfaces, it is typically performed on polygons. That is because a test at a single point on the polygon indicates whether the entire polygon lies on the front or back of an object, so it is very efficient to test polygons. A curve may require tests at multiple points or an analytic test over the entire surface to make the same determination.

We intuitively recognize the back of an object—it is what we can’t see!—but how can we distinguish it geometrically? Consider a closed polygonal mesh with no self-intersections, and an observer at point Q that lies outside the polyhedron defined by the mesh. Let P be the vertex of a polygon and ![]() be the normal to the polygon (specifically, the true geometric normal to the polygon, not some implied surface normal at the vertex to be used in smooth shading). The polygon defines a plane that passes through P and has normal

be the normal to the polygon (specifically, the true geometric normal to the polygon, not some implied surface normal at the vertex to be used in smooth shading). The polygon defines a plane that passes through P and has normal ![]() . We say that the polygon is a frontface with respect to Q if Q lies in the positive half-plane of the polygon and that the polygon is a backface if Q lies in the negative half-plane. If Q lies exactly in the plane, then the polygon lies on the contour curve dividing the front and back of the object.

. We say that the polygon is a frontface with respect to Q if Q lies in the positive half-plane of the polygon and that the polygon is a backface if Q lies in the negative half-plane. If Q lies exactly in the plane, then the polygon lies on the contour curve dividing the front and back of the object.

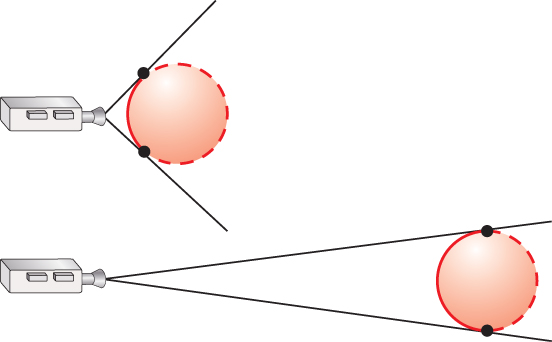

The result will be the same for the polygon regardless of which vertex we choose for a polygon, and regardless of the field of view of the camera. However, Figure 36.17 shows that an object close to the camera tends to have more backfaces than the same object far from the camera, and that this is a general phenomenon, at least for convex objects.

Figure 36.17: Two cameras facing to the right, toward spheres. The long lines depict the rays from the center of projection to the silhouette of the sphere, which is where the backfacing and frontfacing surfaces meet. The top camera is near a sphere, so most of the sphere’s surface is backfacing. The bottom camera is distant from its sphere, so only about half of the sphere’s surface is backfacing.

Prove that for a triangle and a viewer, the backface classification is independent of which vertex we consider.

Most ray tracers and rasterizers perform backface culling on whole triangles before progressing to per-sample intersection tests. This is a more effective strategy for rasterizers because they can amortize the backface test (and the corresponding cost of reading the triangle into memory) over the whole triangle. A ray tracer generally must perform the test once per sample.

Backface culling assumes opaque, solid objects. If the ray starting point Q (e.g., the viewpoint for a primary ray) is inside the volume bounded by a mesh, then it is not conservative to cull backfaces. It also isn’t obvious what the result of visibility determination should be in such a situation because it does not correspond to a physically plausible scene. If an object is composed of a material that transmits light, then a geometric “visibility” test does not correspond to a light transport visibility test.

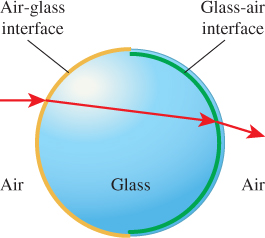

If we abuse geometric visibility in this case and apply backface culling, the back surface will disappear. In practice one can model a transmissive object with coincident and oppositely oriented surfaces. For example, a glass ball consists of a spherical air-glass interface oriented outward from the center of the ball and a glass-air interface oriented inward. Backface culling remains conservative under this model. Along a path that does not experience total internal refraction, light originating outside the ball first interacts with an air-glass frontface to enter the ball and then with a glass-air frontface to exit again, as shown in Figure 36.18.

Figure 36.18: Backface culling allows a ray to intersect the correct one of the two coincident air-glass and glass-air interfaces of a glass ball surrounded by air.

36.7. Hierarchical Occlusion Culling

If no part of a box is visible from a point Q that is outside of the box, and if the box is replaced with some new object that fits inside it, then no part of that object can possibly be visible either. This observation holds for any shape, not just a box. This is the key idea of occlusion culling. It seeks to conservatively identify that a complex object is not visible by proving that a geometrically simpler bounding volume around the object is also not visible. It is an excellent strategy for efficient conservative visibility determination on dynamic scenes.

How much simpler should the bounding volume be? If it is too simple, then it may be much larger than the original object and will generate too many false-positive results (i.e., the bounding volume will often be visible even when the actual object is not). If it is too complex, then we gain little net efficiency even if it is a good predictor. The natural solution is to divide and conquer. Create a Bounding Volume Hierarchy (BVH; see Chapter 37) and walk its tree. If a node is not visible, then all of its children must not be visible. This is one form of hierarchical occlusion culling.

A ray tracer with a hierarchical spatial data structure effectively performs occlusion culling, although the term is not typically applied to that case. For example, a ray cast through a BVH corresponds exactly to the algorithm from the previous paragraph.

For rasterization, occlusion culling is only useful if we can test visibility for the bounding volumes substantially faster than we can for the primitives themselves. There are two implementation strategies, commonly built directly into rasterization hardware, that support this.

The first is a special rasterization operation called an occlusion query [Sek04] that invokes no shading or changes to the depth buffer. Its only output is a count of the number of samples that would have passed the depth buffer visibility test. It can be substantially faster than full rasterization because it requires no output bandwidth to the framebuffer and no synchronous access to the depth buffer for updates, interpolates no attributes except depth, and launches no shading operations. Chapter 38 shows that those are often the expensive operations in rasterization, so eliminating them decreases the cost of rasterization substantially.

Occlusion culling with an occlusion query issues several queries asynchronously from the main rendering thread. When the result of a query is available, it then renders the corresponding object if and only if one or more pixels passed the occlusion query. This is made hierarchical by recursively considering bounds on smaller parts of the scene when a visible bound is observed.