The ensemble module of sklearn contains an implementation of gradient boosting trees for regression and for both binary and multiclass classification. The GradientBoostingClassifier initialization code that follows illustrates the key tuning parameters introduced previously, alongside those familiar from standalone decision tree models. The notebook gbm_tuning_with_sklearn contains the code examples for this section.

The available loss functions include the exponential loss, which leads to the AdaBoost algorithm, and the deviance, which corresponds to logistic regression and yields probabilistic outputs. The friedman_mse node quality measure is a variation on the mean squared error that includes an improvement score (see GitHub references for links to original papers). The following code shows these settings:

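    from sklearn.ensemble import GradientBoostingClassifier

    gb_clf = GradientBoostingClassifier(loss='deviance',               # deviance = logistic reg; exponential: AdaBoost
                                                                       # (newer scikit-learn releases name this 'log_loss')
                                        learning_rate=0.1,             # shrinks the contribution of each tree
                                        n_estimators=100,              # number of boosting stages
                                        subsample=1.0,                 # fraction of samples used to fit base learners
                                        criterion='friedman_mse',      # measures the quality of a split
                                        min_samples_split=2,           # min. samples required to split a node
                                        min_samples_leaf=1,            # min. samples required at a leaf
                                        min_weight_fraction_leaf=0.0,  # min. fraction of sum of weights
                                        max_depth=3,                   # opt value depends on interaction
                                        min_impurity_decrease=0.0,     # min. impurity decrease required to split
                                        min_impurity_split=None,       # dropped in later scikit-learn releases
                                        max_features=None,             # features considered per split (None = all)
                                        max_leaf_nodes=None,           # None = no limit on leaf nodes
                                        warm_start=False,              # True reuses the previous fit and adds stages
                                        presort='auto',                # dropped in later scikit-learn releases
                                        validation_fraction=0.1,       # share held out for early stopping
                                        tol=0.0001)                    # tolerance for early stopping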
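To make the two loss options concrete, the following minimal sketch fits the classifier twice on synthetic data: once with the default loss and once with `loss='exponential'`. The data from `make_classification`, the train/test split, and all variable names are assumptions for illustration and do not come from the notebook. The default loss is left implicit because its name differs across scikit-learn versions.

```python
from sklearn.datasets import make_classification
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import train_test_split

# Synthetic binary classification data (an assumption for illustration only)
X, y = make_classification(n_samples=500, n_features=10, n_informative=5,
                           random_state=42)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=42)

# Compare the default (logistic) loss with the exponential (AdaBoost-style) loss
loss_options = {'default': {}, 'exponential': {'loss': 'exponential'}}

scores = {}
for name, params in loss_options.items():
    clf = GradientBoostingClassifier(learning_rate=0.1,
                                     n_estimators=100,
                                     subsample=1.0,
                                     max_depth=3,
                                     random_state=42,
                                     **params)
    clf.fit(X_train, y_train)
    # Mean accuracy on the held-out data
    scores[name] = clf.score(X_test, y_test)

print(scores)
```

On a real dataset, the relative ranking of the two losses may well differ, so the comparison is best repeated under cross-validation.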

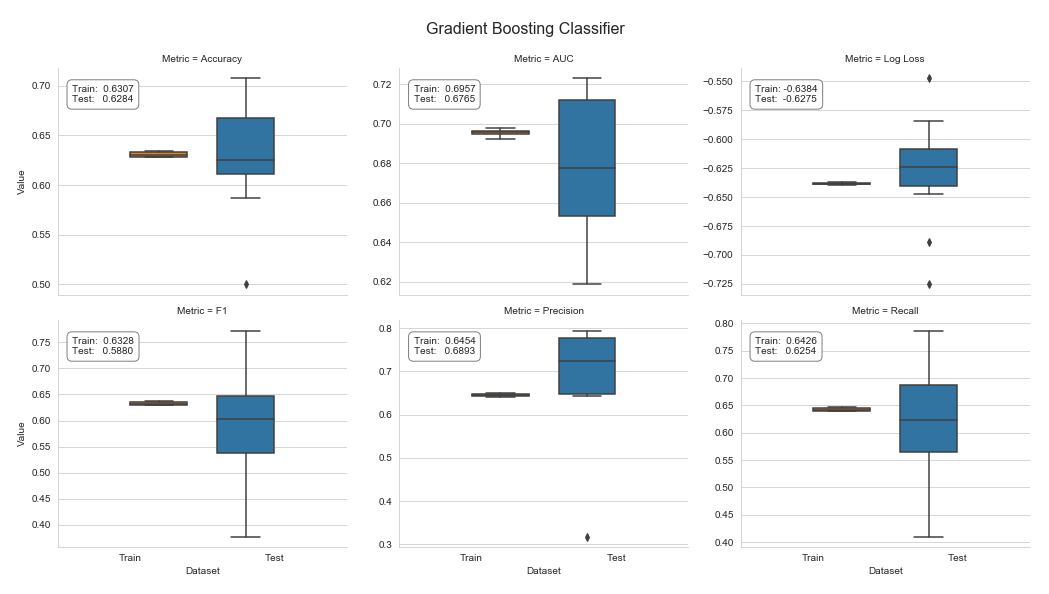

Similar to AdaBoostClassifier, this model cannot handle missing values. We'll again use 12-fold cross-validation to obtain errors for classifying the directional return for rolling one-month holding periods, as shown in the following code:

    gb_cv_result = run_cv(gb_clf, y=y_clean, X=X_dummies_clean)
    gb_result = stack_results(gb_cv_result)
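The `run_cv` and `stack_results` helpers are defined in the notebook and are not reproduced here. As a rough, hypothetical stand-in, the sketch below drops rows with missing values and then scores the classifier on 12 sequential splits using scikit-learn's `TimeSeriesSplit` and `cross_val_score`. The synthetic data, the column names, and the choice of splitter are all assumptions; the notebook's own cross-validation scheme may differ.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingClassifier
from sklearn.model_selection import TimeSeriesSplit, cross_val_score

rng = np.random.default_rng(0)

# Hypothetical feature matrix with a few missing values (illustration only)
X = pd.DataFrame(rng.normal(size=(650, 5)),
                 columns=[f'f{i}' for i in range(5)])
X.iloc[::50, 0] = np.nan

# Hypothetical directional label: 1 if a noisy signal is positive, else 0
noise = rng.normal(scale=0.5, size=len(X))
y = ((X['f1'] + noise) > 0).astype(int)

# The text notes that the model cannot handle missing values,
# so incomplete rows are dropped before fitting
mask = X.notna().all(axis=1)
X_clean, y_clean = X[mask], y[mask]

# 12 sequential train/test splits that respect the time ordering of the rows
cv = TimeSeriesSplit(n_splits=12)
clf = GradientBoostingClassifier(n_estimators=50, random_state=0)
cv_scores = cross_val_score(clf, X_clean, y_clean, cv=cv, scoring='accuracy')

print(cv_scores.mean())
```

Each split trains only on rows that precede its test fold, which avoids leaking future information into the fit.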

Parsing and plotting the result shows a slight improvement over the AdaBoostClassifier, even with default parameter values, as shown in the following screenshot: