CHAPTER 12

Assuring Usability of Healthcare IT

Andre Kushniruk, Elizabeth Borycki

In this chapter, you will learn how to

• Describe what usability is and why it is important in healthcare IT

• Employ usability engineering methods in designing and implementing healthcare IT

• Ensure health information systems operate safely

• Define human factors and human–computer interaction

• Factor in human cognition while assisting in the design of healthcare information systems

• Implement the principles of good user interface design in healthcare IT

Around the world, and particularly in the United States at present, there is a move toward widespread adoption of electronic health record (EHR) systems and related information technologies. These systems allow physicians to electronically store and retrieve patient data, document care, place orders, and access computerized decision support in the form of automated reminders and alerts about patient conditions. The ultimate goal is to electronically link individual physicians and health professionals to local, regional, and national patient data repositories. With that purpose in mind, the Health Information Technology for Economic and Clinical Health (HITECH) program was created as a component of the 2009 American Recovery and Reinvestment Act to provide financial incentives to physicians for using these systems in a clinically meaningful way, commonly called meaningful use.1 One expectation is that the incentives will lead to an increased use of EHRs and other HIT-related technologies by health professionals. Adoption of EHRs by end users is highly dependent upon how easy such systems are to use and how well the technology supports and facilitates the work activities of the healthcare professionals. Additionally, it has become increasingly clear that there is wide variation in how different types of user interfaces (UIs) affect a clinician’s workflow, activities, and decision making. In this chapter, we will discuss a range of human factors related to designing better and more effective HIT systems from the perspective of the end user. The field of usability engineering will be introduced, and practical methods for ensuring that EHR systems are useful, usable, and safe will be described.

Besides EHRs, a wide variety of health information systems and healthcare information technology have been developed that promise to streamline and modernize healthcare. Examples of these systems include clinical decision support systems (CDSSs) along with other systems designed for use by health consumers and patients for storing and accessing their own health information, such as personal health records (PHRs). However, despite the great potential of these advances, acceptance of HIT systems by end users has been problematic.2 Issues related to users finding some systems difficult to use and the need for increasing user input in the design of HIT systems have been identified as barriers to the widespread adoption of HIT systems by physicians, nurses, and other healthcare professionals. Problems encountered by users of HIT systems related to poor usability have been cited as contributing to the failure of a growing number of HIT projects and initiatives.3 On the other hand, designing HIT systems that are user friendly and truly support healthcare workers and their work will lead to greater adoption of HIT systems and improved healthcare processes. This chapter will discuss some practical approaches and methods for improving the ease of use of HIT systems through the assessment of usability.

Usability of Healthcare IT

In response to the increasing complexity of user interfaces and systems, the field of usability engineering emerged in the software industry during the early 1990s. The methods that have emerged from usability engineering have been applied to improve human–computer interaction (HCI) in a wide range of fields, including HIT. Usability is a concept that can be considered as a measure of how easy it is to use an information system, how efficient and effective the system is, and how easy it is to learn to use the system.4 In addition, usability, broadly defined, also considers the safety of healthcare IT (how safe it is to use a healthcare system) as well as the concept of user enjoyment (how enjoyable it is to use a system). Good usability is important in HIT, and some earlier systems have been rejected by end users because of poor usability. In recent years, usability has become a major issue in HIT; it has become important to avoid designing systems that are difficult to use and hard to learn and that could potentially lead to inadvertent inefficiencies and medical error.

Usability Engineering Approaches

The two main approaches in usability engineering are usability testing and usability inspection.5 Usability testing involves evaluating the usability of user interfaces, prototypes, or fully operational systems by observing representative end users (e.g., physicians or nurses) as they interact with the system or user interface under study to carry out representative tasks (e.g., using an EHR system or decision support system). For example, usability testing of an EHR system might involve observing physicians interacting with a new EHR system as they enter or retrieve patient data. Users are typically video recorded as they interact with the system (see Figure 12-1), and the computer screens are captured (which can be done with low-cost screen-recording software). During usability testing, users are often asked to “think aloud” or verbalize their thoughts while using the system to carry out tasks.6

Figure 12-1 Health professional being video recorded while carrying out tasks using an EHR system during a usability testing session

TIP Usability refers to the ease of use of a system in terms of efficiency, effectiveness, enjoyment, learnability, and safety.

The data collected from usability testing sessions, such as video recordings of users interacting with a system (including audio recordings of user verbalizations and computer screen recordings), can be played back and analyzed to identify the following:

• User problems in interacting with the system under study

• Potential inefficiencies in the user-system interaction and user interface

• Potential impact on user workflow and work activities

• Recommendations for improving the user interface and underlying system functionality6

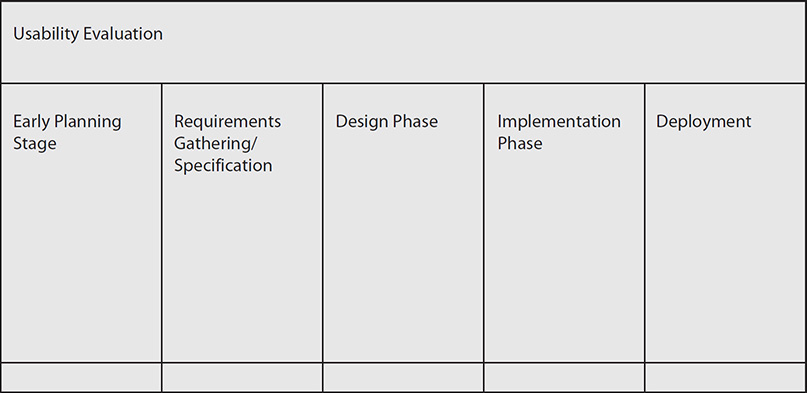

Usability testing has been found to be critically important in designing, implementing, deploying, and customizing HIT systems to ensure they are efficient, effective, enjoyable to use, easy to learn, and safe to use. Furthermore, the approach can be applied across the entire systems development life cycle (SDLC) of healthcare information systems, as illustrated in Figure 12-2. The SDLC provides a timeline or life cycle for considering when and where HIT may be evaluated and improved applying usability testing.

Figure 12-2 Usability evaluation in relation to the SDLC

In the early planning stages of the SDLC, usability testing can be applied to early system designs and user interface ideas, using mock-ups or sketches of potential system ideas or screen layouts that can then be shown to users for feedback. This method can also be used to help make selections among different possible candidate systems in situations where an organization, such as a hospital, is deciding among several commercial systems. In this case, potential candidate systems can be required to undergo usability testing, with information from the testing used to help decide on a system to buy. Moving to the requirements-gathering phase of the SDLC, usability testing can be used to gain information about deficiencies with systems currently in place. Also, during requirements gathering, mock-ups can be shown to potential end users to obtain feedback and refined requirements. During the design phase of healthcare IT products, usability testing can be used to obtain user feedback and input in response to partially working user interfaces in iterative cycles of user testing, with the results from the usability testing fed back into system redesign. Once a healthcare IT system is ready for implementation, the usability testing approach can also be used to obtain information about the need for potential refinement of the system or user interface and about any potential safety issues before it is released in a healthcare setting. Finally, once the system has been widely deployed, usability testing can be employed to determine whether the system needs to be modified, customized, or even replaced in order to continue to meet users’ information and work needs.

Another main approach to conducting usability evaluations of healthcare IT is known as usability inspection.7 Usability inspection differs from usability testing in that end users are not observed using the system under study; instead, one or more trained usability inspectors “step through” or inspect a healthcare information system. In doing these inspections, one approach is to compare the user interface against a set of principles or heuristics for good interface design in order to identify violations of the principles or heuristics. This approach is known as heuristic evaluation. A popular set of heuristics for this analysis was developed by Jakob Nielsen and has been widely used in the design and evaluation of healthcare IT systems.5 The following list summarizes the heuristics:

• Visibility of system status: The status of the system should be apparent to the user.

Example: The users should know whether patient information that was entered by them was successfully saved in the EHR.

• Match between the system and the real world: The terminology in the system should match that of the user.

Example: The medical terminology used in an EHR should match the terms that physicians using the system use in their work.

• User control and freedom: The system should support undo and redo operations.

Example: When learning how to use an EHR, the user can explore the system’s functions and features without worrying about making changes that can’t be revoked.

• Consistency and standards: The interface should be consistent throughout.

Example: Differing modules in an EHR should use consistent formatting and labels.

• Error prevention: The system should help users prevent errors.

Example: An alert should pop up when a physician writes an order for a medication the patient is allergic to when using a physician order entry system.

• Recognition rather than recall: Users should not have to remember things.

Example: Users should be able to select a medication from a list rather than trying to remember the medication formats accepted by the system.

• Flexibility and efficiency of use: The interface should accommodate the needs of the user.

Example: An EHR should support nurse and physician views of relevant patient information.

• Aesthetic and minimalist design: Simple and minimalist interfaces are often easiest to use.

Example: The main screens on an EHR may be simple with the option of going to more advanced screens with more options.

• Help users recognize, diagnose, and recover from errors: The system should help users recognize and fix errors.

Example: If the user misses a field on a data entry form, then that field is highlighted in red, and a message is provided to the user that highlighted fields are missing information.

• Help documentation: The system should provide adequate help and documentation.

Example: If the user of an EHR needs help in using the technology, there should be online help available for them to refer to.

• Chunking: Information should be chunked appropriately in packets of seven plus or minus two.

Example: A menu containing options for using an EHR should not contain more than nine items (otherwise, it should be broken up into more than one menu).

• Style: Prominent and important information should appear at the top of the screen.

Example: Drug allergy information should appear at the top of the screen.
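The chunking heuristic in the list above lends itself to a small illustration. The following is a minimal sketch in Python, assuming a flat list of menu options and an upper limit of nine items per menu (seven plus two); the function name `chunk_menu` and the sample menu are hypothetical and do not come from any particular EHR product.

```python
from typing import List

MAX_MENU_ITEMS = 9  # upper bound of "seven plus or minus two"


def chunk_menu(options: List[str], max_items: int = MAX_MENU_ITEMS) -> List[List[str]]:
    """Split a flat list of menu options into submenus of at most max_items."""
    if max_items < 1:
        raise ValueError("max_items must be at least 1")
    return [options[i:i + max_items] for i in range(0, len(options), max_items)]


# Hypothetical EHR menu with 12 options, for illustration only.
ehr_menu = [f"Option {n}" for n in range(1, 13)]
submenus = chunk_menu(ehr_menu)
for index, submenu in enumerate(submenus, start=1):
    print(f"Menu {index}: {len(submenu)} items")
```

This sketch splits purely by position. In practice, a designer would more likely group options by meaning (for example, by clinical function) and use the limit only as a check on each group's size.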

Results from conducting heuristic evaluations typically consist of a summary of the number and type of violations of usability heuristics identified in the analysis. This information is then fed back into the system and user interface customization and redesign process to improve the user interface and system.8 For a comprehensive set of usability guidelines providing detailed guidance on development of web-based applications, refer to the excellent book, Research-Based Web Design and Usability Guidelines (https://www.usability.gov/sites/default/files/documents/guidelines_book.pdf).9
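As a rough illustration of what such a summary might look like, the following Python sketch tallies inspector findings by heuristic. The `Violation` record, its fields, and the sample findings are all assumptions made for this example; they are not data from a real evaluation or a standard reporting format.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Violation:
    heuristic: str   # name of the heuristic that was violated
    screen: str      # where the inspector saw the problem
    note: str        # short description written by the inspector


def summarize(violations):
    """Return (heuristic, count) pairs, most frequently violated first."""
    counts = Counter(v.heuristic for v in violations)
    return counts.most_common()


# Invented findings for illustration; not data from any real evaluation.
findings = [
    Violation("Visibility of system status", "Medication entry", "No confirmation after save"),
    Violation("Consistency and standards", "Lab results", "Date format differs from other modules"),
    Violation("Visibility of system status", "Order entry", "No progress indicator while submitting"),
]

summary = summarize(findings)
for heuristic, count in summary:
    print(f"{heuristic}: {count}")
```

Keeping the screen and the inspector's note alongside each count lets the redesign team trace a frequently violated heuristic back to the specific screens that need attention.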

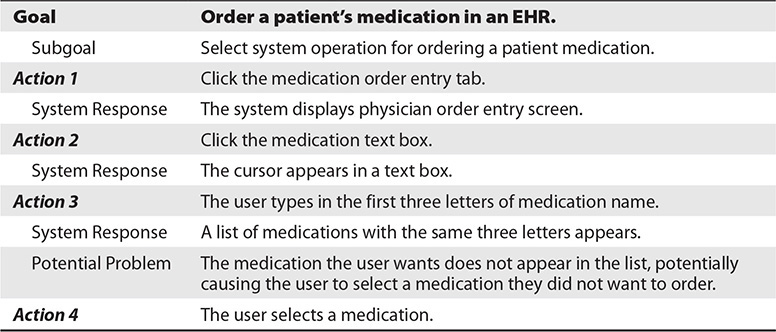

A second form of usability inspection is known as the cognitive walk-through.7 In conducting a cognitive walk-through, the analyst considers the profile of potential end users: what is the level of computer expertise of the typical user, and what is their prior experience with this type of HIT? This method first involves specifying a task to be analyzed using a healthcare IT system, such as entering medications into a medication list in an EHR system. The analyst then systematically steps through the user interface to carry out the specified task. In doing so, the analyst notes and records the following:

• User goals (entering a medication)

• User steps (recording exactly what the user types or clicks)

• The system’s responses to each user step

• Potential user problems in carrying out the task specified

Table 12-1 Example of a Cognitive Walk-through for a Physician User Ordering a Medication

Table 12-1 gives an example of a cognitive walk-through. The results can be used to identify the following:

• Inefficient sequences requiring too many user steps

• Inappropriate or hard-to-understand system feedback

• Potential usability problems

• Problems in achieving goals required to complete a healthcare-related task using the HIT system under study
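One way to picture the record an analyst keeps during a cognitive walk-through is as a simple data structure holding the goal, each user step, the system's response, and any potential problem noted. The Python sketch below is an assumption about how such a record could be organized; the class names and the sample steps are invented for illustration and do not reproduce Table 12-1.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class WalkthroughStep:
    user_action: str                 # exactly what the user types or clicks
    system_response: str             # what the system does in reply
    problem: Optional[str] = None    # potential user problem, if any


@dataclass
class Walkthrough:
    goal: str
    steps: List[WalkthroughStep]

    def problems(self) -> List[str]:
        """List the potential problems the analyst noted, in step order."""
        return [s.problem for s in self.steps if s.problem is not None]

    def step_count(self) -> int:
        """Number of user steps; a long sequence may signal inefficiency."""
        return len(self.steps)


# Invented example, not the content of Table 12-1.
walkthrough = Walkthrough(
    goal="Enter a medication into the medication list",
    steps=[
        WalkthroughStep("Click 'Medications' tab", "Medication list is displayed"),
        WalkthroughStep("Click 'Add'", "Blank entry form opens",
                        problem="Button is not visible without scrolling"),
        WalkthroughStep("Type drug name and press Enter", "Form closes with no message",
                        problem="No feedback that the entry was saved"),
    ],
)

print(walkthrough.step_count(), "steps;", len(walkthrough.problems()), "potential problems")
```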

In general, conducting usability inspections of healthcare IT is faster and may cost less than doing usability testing, which involves setting up observations of real users of a healthcare IT system. However, usability inspection, although a powerful method for predicting user errors, cannot fully predict what users will actually do when faced with HIT in the hospital or clinical setting. Therefore, the two approaches (usability inspection and usability testing) are often used in conjunction, with usability inspection identifying potential errors to improve design and subsequent usability testing with users determining whether there are serious user problems. Selecting the most appropriate usability evaluation method for a particular system will depend on considerations of time and expense, with usability inspection methods requiring less investment of both. However, to ensure that systems meet user needs, inspection should never replace observing or interviewing the end users of those systems. Furthermore, the costs and efforts associated with conducting usability testing of HIT systems have been shown to be reasonable, and the approach can be done in a highly cost-effective way. For example, usability testing of HIT applications does not require expensive usability laboratories but rather can be conducted “on location” or “in situ” within the healthcare organizations where the system will be deployed. Data collection costs have also been shown to decrease when low-cost video cameras and inexpensive computer screen-recording software such as HyperCam are used.10, 11

Usability and HIT Safety

In recent years, a growing body of literature on commercially available HIT and EHR systems indicates that some system features and poorly designed user interfaces may actually introduce and cause health professionals to make new types of medical errors. These errors are often not detected until the system has been released and is being used in complex clinical settings. These errors are referred to as technology-induced errors.12 Furthermore, without proper testing with end users before releasing systems, it has been shown that some HIT systems may be dangerous to use, leading to potential harm to patients. Even systems that may have been tested to ensure they pass traditional software testing during their development may be found to “facilitate” or “induce” errors when used in real healthcare settings, in real work situations, and under real-world conditions that were not fully tested during commercial development.

TIP Healthcare information systems need to be extensively tested before going live to prevent technology-induced error.

Use Case 12-1: Simulation Testing the System

A hospital has decided to implement a medication administration system, which will allow physicians to enter medication orders for patients electronically.13 Nurses may access the system to see what medications they should administer to their patients, including which medication to give, the dosage, and so on. The system is also linked to the medication barcoding system, which allows the nurse to scan the patient’s wristband to verify that the patient is the right one and to scan the medication label to verify that the medication is correct. It was expected that the system would make giving medications in the hospital safer. However, simulation testing before the system was released found that in emergency situations the system could itself become a hazard. For example, the testing showed that during emergencies there was not enough time for the nurse to go through all the checking procedures that the system required. Furthermore, it was found that if one nurse was using the system for a patient and was called away, all other users were “locked” out from viewing that patient’s data on the computer. Based on this prerelease testing, it was decided to add an emergency override function that users could invoke under serious time constraints, in order to ensure system safety under all conditions. In addition, the system was modified to allow more than one nurse or physician to access a patient record in an emergency.
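The two changes described in this use case can be pictured with a small Python sketch. It is purely illustrative: the class `PatientRecordSession`, its methods, and the audit log are assumptions made for this example and do not describe how the hospital's system was actually built. The sketch keeps the normal one-user-at-a-time rule but lets an emergency request bypass it, recording each override for later review.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class PatientRecordSession:
    patient_id: str
    editor: Optional[str] = None                        # user holding the normal lock
    emergency_users: Set[str] = field(default_factory=set)
    audit_log: List[str] = field(default_factory=list)

    def open(self, user: str, emergency: bool = False) -> bool:
        """Try to open the record; return True if access is granted."""
        if emergency:
            # Override: grant access even if another user holds the lock,
            # and log it so the override can be reviewed afterwards.
            self.emergency_users.add(user)
            self.audit_log.append(f"EMERGENCY access by {user}")
            return True
        if self.editor is None or self.editor == user:
            self.editor = user
            return True
        return False  # normal rule: one user at a time

    def close(self, user: str) -> None:
        """Release whatever access this user holds on the record."""
        if self.editor == user:
            self.editor = None
        self.emergency_users.discard(user)


# Hypothetical users and patient identifier, for illustration only.
session = PatientRecordSession("patient-001")
first = session.open("nurse_a")                       # granted, takes the lock
blocked = session.open("nurse_b")                     # refused, nurse_a holds the lock
override = session.open("nurse_b", emergency=True)    # granted and logged
print(first, blocked, override, session.audit_log)
```

Logging every override is a design choice made here on the assumption that an organization would want to review emergency access afterwards; the use case itself does not say how overrides were tracked.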

Previous work has shown that HIT systems may have a number of consequences on clinical performance and cognition, which may be inadvertent and in some cases negative. For example, work by Kushniruk and colleagues has shown that certain designs of screen layouts in EHRs may lead clinical users to become “screen-driven,” where they are overly guided by the order of information on the computer screen, thus relying on how information is presented on the screen to drive their interviews of patients and ultimately their clinical decision making.6 Furthermore, suboptimal design of user interfaces can lead to errors that could potentially lead to patient harm. For example, screens that are cluttered and, as a consequence, obscure important information about drug allergies could potentially contribute to a physician giving patients medication to which they are allergic. Other examples include errors resulting from the inability of the user to navigate or move through the clinical user interface in order to find critical information, especially when needed during a patient emergency, where time is critical.

To ensure HIT safety, a variety of new methods and approaches to more fully testing healthcare information systems are beginning to appear. For example, clinical simulations are an extension of usability testing, whereby representative users are observed using a system to carry out representative tasks, much as is done in usability testing.12 However, this type of testing is typically conducted under highly realistic conditions that closely simulate the real working environment in which a system being developed will be deployed. Once a system has been deployed, the reporting of potential technology-induced errors is also beginning to receive attention from both healthcare IT vendors and governmental organizations concerned with healthcare IT safety. Along these lines, error-reporting systems are beginning to be developed where end users can create anonymous online reports about errors that may be related to HIT that can be collected, published, and used to refine and improve the safety of commercially available HIT.13

Human Factors and Human–Computer Interaction in Healthcare

Human factors is the field of study focused on understanding the human elements of systems, where systems may be defined as software, medical devices, computer technology, and organizations.1 Usability engineering can be considered a subfield of the more general human factors area. The objective of studying human factors in healthcare is to optimize overall system performance and improve healthcare processes and outcomes. Two main areas covered by human factors are cognitive ergonomics, which deals with mental processes of participants in systems, and physical ergonomics, which deals with the physical activity within a system and the physical arrangement of the system.14 Human–computer interaction (HCI) is a subdiscipline of human factors that focuses on understanding how humans use computers, so that systems can be designed to align with and support human information-processing activities.4 HCI looks specifically at the user’s interaction with a system, such as through a keyboard or a touch screen, as well as how the interaction fits within the user’s larger work context. Also important are the multitude of cognitive and social experiences that surround the use of an information system or technology. The four major components of HCI are

• The user of the technology

• The task or job the user is trying to carry out via the technology

• The particular context of use of the technology

• The technology itself

Each of these components can be further analyzed and broken down for improvement and optimization. In healthcare there are multiple users of a given system who vary widely in their expectations, from physician to pharmacist to nurse. A given role can then be further defined by the focus of the clinician, for example general practice versus surgical specialty physicians. The task or job each user carries out using the technology covers a wide spectrum, from recording basic patient data to viewing and making clinical choices based on recommendations from a decision support system about a patient’s treatment. The context in which technology is used might vary from the routine to complex or urgent situations and conditions that may involve more than one user or participant. Finally, healthcare technology itself may range from desktop applications for entering and retrieving patient data to mobile devices and “telehealth” applications used in remote patient monitoring. The four components of HCI listed earlier are useful in considering which aspects of user interactions within healthcare systems might need to be improved, modified, or redesigned in order to optimize the user experience. An underlying goal of HCI is to consider all four dimensions to improve HIT and healthcare systems so that users can carry out tasks safely, effectively, efficiently, and enjoyably.

User Interface Design and Human Cognition

The user interface is the component of a HIT system that communicates with a given clinician, healthcare worker, or healthcare consumer as they connect with that technology. The objective of user interaction with HIT systems is typically to carry out healthcare-related work tasks, where patient information can be manipulated, accessed, or created to support clinical decision making and work activities. Anyone using healthcare technology does so in the context of performing tasks related to their role that involve access to, manipulation of, or creation of data. From this perspective, HCI involves shared information processing between the machine and the end user. Given that dynamic, an understanding of human cognition and what users can and can’t be expected to do using HIT systems, in a variety of contexts (i.e., emergent versus non-emergent), underlies the success or failure of the user-HIT interface. For example, an important aspect of human cognition that can be applied to improving user interactions with systems includes understanding the limitations of human ability to process large amounts of complex data. Strategies for reducing cognitive load (i.e., the amount of perception, attention, and thinking required to understand something) associated with task performance and improving the quality and relevance of information displayed on computers to end users are needed in order to ensure the effectiveness and efficiency of HIT systems.

Importance of Considering Cognitive Psychology

To understand how to design, develop, and implement effective healthcare IT, an understanding of key areas of cognitive psychology is relevant.15 Cognitive psychology is the field that studies human reasoning and information processing (information seeking and decision making), including studies of perception, attention, memory, learning, and skill development. For example, studies of how clinicians gain experience and expertise in their area of work are relevant for designing user interfaces that better match the information processing of end users in that work area. Further, understanding the limitations of human memory has led user interface experts to develop simple heuristics, or “rules of thumb,” that can be used to help guide the design of user interfaces and computer screens for HIT. For example, studies in cognitive psychology indicate that people can generally remember seven discrete pieces of information, plus or minus two, with seven-digit telephone numbers a good example.4 This has led to the recommendation that the number of items in a computer menu follow that same guideline. In addition, studies of users learning how to use HIT have particular relevance to improving the adoption of complex HIT applications. A number of reports have shown that some applications are difficult to use and time-consuming to learn and master in real-world healthcare settings. Work on characterizing and reducing the time and effort needed to learn such systems has considerable importance in deploying HIT systems, where users may be faced with the need to learn how to use multiple varied information systems and HIT in complex and stressful environments. Knowledge gleaned from the study of perception and attention is also highly relevant for HIT and has led to the following development guidelines about how to display information to users on a computer screen:

• Present information in a logical and meaningful way.

• Provide attention-getting visual cues, and highlight important or critical information.

• Help users focus their attention on important information.

• Make information needed in decision making easy to find and process.

• Avoid overloading the user with large amounts of irrelevant information.

Approaches to Cognition and HCI

The field of HCI has been influenced by two primary models of how humans interact with information technology and how they carry out tasks using computer systems. In the 1960s and 1970s, the concept of the human as the “information processor” borrowed from advances in computer science and used the metaphor of computer systems to describe human attention, information processing, and reasoning. For example, psychologists working in the area of HCI borrowed concepts such as “memory stores” and “processing units” from computer science in order to describe how humans think and process information. Five areas of focus as a result of this work are

• Describing the computer user’s goals

• Describing how their goals were translated into intentions to carry out a task using computer systems

• Describing how user intentions became plans for executing an action using a computer system

• Describing how computer operations are actually performed by the user

• Describing how users interpret feedback from the computer system once the action has been completed16
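The five areas above can also serve as a simple coding scheme for observed user problems. The Python sketch below is one hypothetical way an analyst might tag each observation with the stage at which it occurred and then count problems per stage; the `Stage` names and the sample observations are invented for illustration.

```python
from collections import Counter
from enum import Enum


class Stage(Enum):
    GOAL = "forming a goal"
    INTENTION = "translating the goal into an intention"
    PLAN = "planning the action"
    EXECUTION = "performing the operation"
    INTERPRETATION = "interpreting system feedback"


# Invented observations for illustration: (stage, analyst note).
observations = [
    (Stage.EXECUTION, "Typed the wrong command to open the order screen"),
    (Stage.INTERPRETATION, "Did not understand the error message"),
    (Stage.INTERPRETATION, "Missed the confirmation that the order was saved"),
]

# Count how many observed problems fall into each stage.
problems_by_stage = Counter(stage for stage, _ in observations)
for stage in Stage:
    print(f"{stage.value}: {problems_by_stage[stage]}")
```

A cluster of problems at one stage suggests where to look first; in this invented data, most problems arise when users interpret feedback, which would point toward the system's messages rather than its input controls.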

Using the method of studying user interactions, it is then possible to understand where users are having problems in using a complex healthcare information system. Examples include the user having problems translating their intentions into computer actions by typing in the right command and having trouble interpreting error messages returned by the system as feedback to the user. While this model has been useful in analyzing HCI at a detailed level, it has been criticized for focusing only on the individual user interacting with an individual computer system in isolation, not as part of the rich and complex social context of healthcare work. As a result, more recent approaches to modeling HCI have appeared that focus on “distributed cognition” where information processing is shared among a number of people and systems in order to carry out real tasks in real social settings.15 In an operating room, analyzing and understanding how to use a new surgical information system requires a thorough understanding of all the participants in the procedure, their roles, and their interactions with the system. Although understanding how one individual user interacts with such a system is important, it does not provide a complete picture of how the system interfaces with the activities of all the participants, including the patient. Interactions between the information-processing roles of the doctor, the patient, and the computer system used by the doctor are all important in understanding the impact of EHRs on the physician-patient relationship (see Use Case 12-2).17

Considering work activity as a distributed process provides a broader framework for understanding where HIT matches user needs and provides support for the goal of completing complex healthcare activities involving multiple participants. Somewhat unique to healthcare, it is important to note that many work tasks are complex, are often time-sensitive, and may involve considerable uncertainty and urgency. Work domain analysis is an approach used to analyze technology in these complex situations and has proven to be an effective starting point in characterizing the impact of HIT. This approach involves answering the following questions:

• Who are the classes of users in the design and testing of HIT?

• What are the tasks each class of user carries out using a technology?

• Are there situational aspects of doing the task, and did the designer consider that the task might involve time constraints or urgency?

• What are the potential problems users might encounter when using the HIT, and how can they be fixed?

All of these questions should be considered both before and after a HIT application has been implemented in a hospital or clinic.

Use Case 12-2: Observing Users Interacting with an EHR System

Observing users interacting with a system has become widely used to understand the impact of HIT on human cognition and work processes. In one such study of the introduction of a new EHR in a diabetes clinic, the vendor of the system wanted to know how the design of the system, including the way its computer screens were organized and what information it displayed to the user, would affect the interaction of the physician with the patient. This was a key consideration because the EHR was intended to be used by the physician while interviewing the patient.17 In this study, the physician’s interaction with the system was recorded while interviewing a “simulated patient” (a study collaborator who played the part of a patient). One observation of the study noted that physicians using the EHR struggled with finding the screens containing the questions they wanted to ask the patient and documenting the response. A number of physicians eventually dropped out of the study and did not adopt the system. Of those who remained, some changed their patient interviewing strategy and style and became “screen driven,” asking questions based on the order of information on the screens. When interviewed about their use of the EHR, these physicians were not consciously aware that the design of the system and the layout of information had led to changes in the way they interacted with patients. The vendor used the study results to improve and streamline user training with specific examples of how to use the system while interacting with the patient.

Technological Advances in HIT and User Interfaces

A key dimension of HCI is the human dimension: the cognition, knowledge, skills, and understanding of the user interacting with a computer. The technology itself is another important consideration of HCI in healthcare. The design and build of computer-user interfaces have advanced significantly over the past several decades and continue to evolve rapidly. Until the 1970s, the typical user interaction with healthcare computer systems involved commands recalled from memory and typed on a keyboard to create and edit patient files and access data from laboratory and pharmacy systems. The subsequent development of graphical user interfaces (GUIs) greatly improved user interaction with those systems, and by the 1980s, those same enhancements appeared in personal and work computers. This major breakthrough in the design of user interfaces essentially extended the use of computers from only highly computer-literate end users to the larger population of work professionals and eventually the general public. The Apple Macintosh popularized the mouse, icons, and direct manipulation of objects on the computer screen as an alternative to typing commands on a keyboard. Operating systems such as macOS and Microsoft Windows replicated familiar office objects such as files, folders, calendars, and spreadsheets on the computer desktop and allowed easy manipulation via keyboard and mouse. The use of this desktop metaphor simplified user interactions with systems and allowed users to learn more rapidly how to use computer systems.4 The World Wide Web popularized the hyperlink, providing links to text, images, and other media via a mouse click on the screen. In healthcare IT, these advances in organizing and displaying information on computer screens have had a major impact on how end users interact with healthcare information systems and on the types of user interfaces developed for HIT.

As graphical user interfaces spread through other industries, EHR vendors moved from difficult-to-use command-line environments such as Microsoft DOS to more user-friendly GUI-based systems running on Microsoft Windows and macOS.4 Improvements in EHR user interfaces now facilitate easy access to and entry of information in a variety of source systems such as laboratory, radiology, and pharmacy. Internet and web technologies have also extended EHR development beyond the Windows and macOS operating systems.

Mobile devices such as smartphones and tablet computers, the increased use of the Web, and social media applications such as Facebook and Twitter have started a new trend in the development of collaborative/cooperative user interfaces designed to support the distributed work activities of multiple users, both health professionals and more recently patients. One example of this is the web site PatientsLikeMe (www.patientslikeme.com). Now users may be

• Located in the same place at the same time

• Geographically apart but still communicating at the same time (using video or web conferencing to discuss a patient case)

• Communicating asynchronously from different locations at different points in time (using secure e-mail to communicate with the patient or another colleague)

User interfaces are becoming increasingly important in areas such as telehealth and distance medical consultations and are providing new types of interactions that may involve multiple synchronous or asynchronous users interacting to carry out a complex task from different locations, such as conducting remote patient consultations using video conferencing. Skills and expertise in this area of HCI will become increasingly important as software/hardware for supporting collaborative healthcare activities becomes more prevalent.18

Input and Output Devices and the Visualization of Healthcare Data

Along with the advances in operating systems and user interfaces, improvements continue to be made in the development of more effective input and output devices for HIT. Additionally, advances in the visualization of health data are taking place, such as designing new ways for representing complex health data, supporting analysis of patient trends by health professionals, and understanding aggregated patient, health professional, and organizational data from many sources. Principles for the display of health information on computer screens include grouping information in a clinically meaningful way, avoiding overload and simplifying access to critical patient data by limiting what is presented, and standardizing information displays where possible.4
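The display principles above (group information in a clinically meaningful way, and limit what is presented) can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the result records, the group names, the "abnormal" flag, and the function build_summary_view are hypothetical and do not come from any particular EHR.

```python
from collections import defaultdict

# Hypothetical result records; the groups and the "abnormal" flag are illustrative only.
results = [
    {"group": "Chemistry", "name": "Glucose", "abnormal": True},
    {"group": "Chemistry", "name": "Sodium", "abnormal": False},
    {"group": "Hematology", "name": "Hemoglobin", "abnormal": False},
    {"group": "Hematology", "name": "Platelets", "abnormal": True},
]


def build_summary_view(items, max_per_group=3):
    """Group results by clinical category and cap how many each group displays."""
    grouped = defaultdict(list)
    for item in items:
        grouped[item["group"]].append(item)

    view = {}
    for group in sorted(grouped):
        # Abnormal results sort first (False < True, so the flag is negated);
        # sorted() is stable, so the original order is kept within each class.
        ordered = sorted(grouped[group], key=lambda item: not item["abnormal"])
        view[group] = [item["name"] for item in ordered[:max_per_group]]
    return view


summary = build_summary_view(results, max_per_group=1)
```

With a limit of one item per group, each group shows only its abnormal result. Whether abnormal results should be prioritized this way is a design decision that would need to be tested with clinicians.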

A variety of approaches, styles, and technologies have been developed to support the input of health data into computer systems. Keyboards are still the most common way of entering healthcare data into a computer system, but other devices are seeing increased use. These include the computer mouse, trackballs, electronic pens, handwriting recognition, and touch screens. Voice recognition technology, used within the EHR to capture spoken information in certain sections, is also seeing increased adoption. Each of these approaches has advantages and disadvantages, and their usefulness varies considerably depending on the context of their use in different healthcare settings. Touch screens may be useful for allowing patients to enter data in a physician’s waiting room, while in infection-prone areas of a hospital they may not be recommended. Voice recognition (VR) has been useful in physicians’ private offices for dictating narrative portions of a patient’s history in the EHR and patient referral letters. Most VR systems work best when users are taught how to use the program, including how to incorporate it into their workflows and how to “train” it to their speech patterns. Increasingly, with networked speech files accessible from any computer and more accurate microphones, acute-care settings are leveraging the same tools.

Data entry options vary widely. They include online forms that take direct data entry in specific fields, question-and-answer dialogs that prompt the user, drop-down and other menus, direct manipulation of objects on the screen using pointing devices such as a mouse, and voice recognition, as described earlier. Across all methods, data entered in the EHR by clinicians range from complete free text entered via a keyboard to highly structured data where options are limited to well-defined terms and phrases the user can select from. Free-text examples include comments about how a medication might be used and the narrative in clinician notes where the patient’s own words are captured. Allergies, medications, and other patient histories are more commonly captured using structured vocabularies with some options for text entry as well. Most EHRs try to find a balance of data entry methods that facilitates complete and adequate documentation without preventing users from performing the task in a timely fashion, in the way they documented before using a computer. More precisely, clinical data may be entered into fields of systems such as EHRs as free-form text (text typed in a text box in an unrestricted way), as semistructured data (where the format of data entered is somewhat structured by the computer system), or as completely structured or coded data (where the user must select only from the options the system displays, such as a list of medical diagnoses). For example, some EHRs will allow data on a patient’s history of present illness to be entered as free text with no restrictions imposed by the computer system on what the user types.

However, as electronic information in computer systems becomes more widely shared, structured or coded data using standard medical terms will be needed.19 For example, in systems employing coded data input, a physician might enter into a text box a diagnosis of “diabetes,” which will result in a menu listing agreed-upon forms of diabetes, such as “diabetes I” and “diabetes II,” from which the user must select. This approach allows for the selection of coded and structured data that is sharable and understandable across systems and end users. However, some users may feel that the approach restricts their ability to express themselves and that the choices offered by the system, if not carefully selected, do not fully match their information needs or the particular medical or healthcare terminology or vocabulary they use. An anecdotal complaint from clinicians who use electronic tools to document their notes, and from those who receive copies of those notes, is that the notes all look similar, contain more data than is needed, and don’t tell the patient’s story as well as the dictated or handwritten notes that were more common before electronic records. This tension between free-text entry and coded data entry still exists, and some recent work has been conducted in developing user interfaces for healthcare that automatically extract coded standardized medical terms from free text.20
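The coded data entry interaction described above, in which typing “diabetes” produces a list of agreed-upon terms from which the user must select, can be sketched as follows. This is a minimal illustration under stated assumptions, not the design of any real EHR: the vocabulary, the codes (DX-001 and so on), and the functions suggest_terms and record_diagnosis are all hypothetical, and the codes are not taken from any real terminology standard.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CodedTerm:
    code: str   # hypothetical local identifier, not a real terminology code
    label: str  # display text shown in the pick list


# Hypothetical vocabulary; the diabetes labels follow the example in the text.
VOCABULARY = [
    CodedTerm("DX-001", "diabetes I"),
    CodedTerm("DX-002", "diabetes II"),
    CodedTerm("DX-003", "hypertension"),
]


def suggest_terms(typed_text, vocabulary=VOCABULARY):
    """Return the coded terms whose label contains the typed text (case-insensitive)."""
    query = typed_text.strip().lower()
    if not query:
        return []
    return [term for term in vocabulary if query in term.label.lower()]


def record_diagnosis(typed_text, chosen_index, vocabulary=VOCABULARY):
    """Store the term the user picked; anything not on the displayed list is rejected."""
    options = suggest_terms(typed_text, vocabulary)
    if not 0 <= chosen_index < len(options):
        raise ValueError("selection must come from the displayed options")
    return options[chosen_index]


options = suggest_terms("diabetes")          # the menu shown to the physician
diagnosis = record_diagnosis("diabetes", 1)  # the physician picks the second entry
```

Because only a code and its agreed-upon label are stored, the entry can be shared and interpreted consistently across systems. The cost, as noted above, is that the user cannot record anything the vocabulary does not offer.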

The visualization of healthcare data has also become an important area of research and development. There are many ways of visualizing health data, ranging from textual data to graphical displays including charts and histograms to three-dimensional images. Workstations capable of integrating complex images with text and graphics are becoming more common, and multimedia capabilities are becoming more standard in healthcare user interfaces. Experimental approaches to data visualization have led to user interfaces that use 3D graphics to reconstruct views of the body and to allow for remote robotically controlled surgeries and other medical procedures. One such system, known as the da Vinci system, has allowed for surgery at a micro level: the surgeon operates using a computer display that allows fine-tuned control of miniaturized surgical instruments, an approach that is less invasive than traditional surgery and that has greatly improved medical outcomes.21

Other trends in the area of input and display of healthcare data are related to the concepts of ubiquitous and pervasive computing. Ubiquitous computing in healthcare refers to user interfaces that are mostly “invisible” and that the user may be largely unaware of. Examples include wearable computing, such as giving patients wired electronic shirts containing sensors that can detect cardiac problems and automatically alert healthcare professionals of impending heart-related events, often outside the normal acute-care environment. The move to pervasive healthcare includes the possibility for interaction with HIT throughout our daily lives.22 Examples of pervasive health include mobile health (“mHealth”) applications that allow healthcare professionals, patients, and the general public to access the latest evidence-based health information using cell phones, smartphones, tablets, and other devices. According to recent studies and reports, this trend will continue,22 and in many countries the use of mobile devices for accessing the Internet and for text messaging has surpassed the use of desktop computers. In the area of healthcare, many new HIT applications are being developed for supporting the promotion of health and for linking health professionals and patients through a range of innovative mobile applications. From an HCI perspective, increased use of mHealth affords new opportunities for communicating and receiving health information from a wider range of settings than previously possible.

Finally, customizable and adaptive user interfaces to HIT applications are an active area of work. End users have criticized many healthcare user interfaces and systems as inflexible, given the wide range of types of healthcare users and the even wider range of contexts in which they use HIT. Work on customizable and adaptable user interfaces, and on the broader information systems behind them, holds considerable promise for addressing this criticism.

Approaches to Developing User Interfaces in Healthcare

Recommended approaches to the development of user interfaces for HIT include considering the design of user interfaces within the context of a “holistic” systems approach, where user input into the design and implementation is considered along the continuum from initial conception of interface metaphor to development, testing, and implementation.4 User-centered design of HIT involves the following principles:

• Focus early on the users’ needs and work situations.

• Conduct a task analysis where the details of the users’ information needs and environment are identified.

• Carry out continual testing and evaluation of systems with users.

• Design iteratively, whereby there are cycles of development and testing with end users.

Along these lines, rapid prototyping of complex HIT user interfaces is recommended. The process begins with sharing early mock-ups (typically sketches or computer drawings of planned user interfaces) with potential end users to obtain specific feedback on the interface design, the features offered, and potential alterations or revisions to the interface. As the system and user interface evolve during HIT development, continual user input and feedback through ongoing user testing are recommended.4 In later stages, the testing of prototypes (partially working early versions of a system or user interface) and emerging user interfaces with end users may involve conventional methods, such as periodically holding focus groups with potential users (where groups of users are shown prototypes or early system versions and asked to react to and comment on them) or conducting individual interviews with future users to obtain their feedback on the design. Methods from the emerging area of usability engineering were described in detail earlier in this chapter.

Methods for Assessing HIT in Use

Early stages in the development of healthcare user interfaces for HIT include the creation of an initial product description and a description of the context of use of the system or user interface. Here, the capabilities of the system from the end user’s perspective and the requirements of the end user are defined. It is at this stage that a number of requirements engineering methods can be employed. This may involve conducting walk-throughs or observations of settings in which the technology is or will be deployed. One method that has been used is known as shadowing, where one or more healthcare professionals are followed during a normal day to see how they perform their work, how HIT fits (or could fit) into their practice, and where problems and errors occur with current workflow and tools. A wide range of methods can be used at this stage to obtain information about users and their work, including ethnographic observational study of the work environment, time-motion studies (where the times to complete healthcare tasks with or without HIT are recorded), and interviews with health professionals about their information-processing needs. At this stage, analyses can be conducted to describe how users carry out their healthcare-related tasks and where HIT could be inserted to improve their work processes.4 This analysis may involve observing users as they carry out real or simulated tasks of increasing complexity using HIT, including those related to managing medications: medication dispensing, patient and order verification and administration, and complex intravenous therapies.
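As an illustration of how data from a time-motion study might be summarized, the following minimal sketch compares mean task completion times with and without HIT. The observations, task names, condition labels, and the function mean_time_by_condition are hypothetical and chosen only for illustration; a real study would also need to consider sample size, variability, and differences among the observed users.

```python
from statistics import mean

# Hypothetical observer-recorded times (in seconds) for one task under two conditions.
observations = [
    {"task": "medication order", "condition": "without HIT", "seconds": 90},
    {"task": "medication order", "condition": "without HIT", "seconds": 110},
    {"task": "medication order", "condition": "with HIT", "seconds": 70},
    {"task": "medication order", "condition": "with HIT", "seconds": 80},
]


def mean_time_by_condition(records):
    """Average the recorded completion times for each (task, condition) pair."""
    grouped = {}
    for record in records:
        key = (record["task"], record["condition"])
        grouped.setdefault(key, []).append(record["seconds"])
    return {key: mean(times) for key, times in grouped.items()}


summary = mean_time_by_condition(observations)
difference = (summary[("medication order", "without HIT")]
              - summary[("medication order", "with HIT")])
```

In this made-up data set the task takes 25 seconds less on average with HIT. Such a difference is only a starting point for analysis, since a faster task is not necessarily a safer or more complete one.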

After completing studies of end users in their work environments as described earlier, initial user interface designs and specifications are created. Similar to the previous analogies of files and folders and how those have been replicated in basic personal computing, medical analogies such as rooms can be employed to streamline and optimize workflow using other user interface options. This approach has been used in hospital bed scheduling systems, where users can click different “rooms” displayed on the computer screen and visually increase their size to focus on a particular patient bed. Decisions also need to be made about the following aspects of user interface design:

• Determining the functionality of the interface and what users will be allowed to do

• Designing the layout and sequence of computer screens that users will see

• Specifying the style of interaction with the user

• Selecting an appropriate prototyping and user interface programming tool

• Planning and scheduling continual iterative user testing

While there are a number of guiding principles that have been used for both the design and testing of healthcare information systems, several of which were described earlier in the section on usability engineering, a recurring theme in the design and development of applications such as EHRs has been a lack of agreed-upon national or industry user interface standards for these types of systems. A vendor may choose one type of user metaphor, lay out screen sequences, and organize patient information in a way that is quite different from another vendor’s, thus making it difficult for users of multiple electronic records to remember how to use each system. Attempts at developing standards for use in the design and implementation of healthcare IT user interfaces are underway, such as the Common User Interface (CUI) project of the National Health Service (NHS) in the United Kingdom, carried out in collaboration with Microsoft.23 Its work has led to a number of nationally endorsed guidelines and recommendations for displaying health information data in an EHR in a way that is more intuitive and friendly for the user and less likely to lead to error.

Ongoing user interface review and improvement are important as technology and healthcare evolve. As described earlier, user-centered design recommends continual user input and feedback as the user interface is being developed and as soon as possible after a stable version of the user interface is available. Along these lines, an approach to the design and implementation of healthcare IT known as participatory design has emerged, whereby users are more closely involved in the development of healthcare user interfaces, in effect serving as “user consultants” and active members of the design and implementation team who provide their expertise on what would, or would not, work from the user’s perspective. Similarly, proponents of “socio-technical design” argue that consideration of the social impact of systems, such as the effects of a new system on physician or nurse work practices and social interactions, is as important as obtaining technical requirements.24 They further argue that lack of consideration of socio-technical issues will likely lead to lack of system acceptance and, ultimately, system failure, a pattern that has been reported in the literature regarding HIT successes and failures.

Challenges and Future Issues

In recent years, the issue of usability of HIT has come to the fore in the United States and worldwide. Along the lines described in this chapter, the American Medical Informatics Association (AMIA) has developed recommendations in four areas of importance: “(1) human factors in HIT research, (2) HIT policy, (3) industry recommendations, (4) recommendations for the clinician end user of EHR software.”25 From a usability and human factors perspective, there is a need to develop standardized use cases for designing and testing HIT, best practices for implementation, improved adverse event reporting systems, and evaluation procedures for ensuring the usability and safety of HIT. In addition, the American Medical Association (AMA) has identified eight usability-related priorities: (1) enhancing physicians’ ability to provide high-quality patient care, (2) supporting team-based care, (3) promoting care coordination, (4) offering configurable and modular HIT products, (5) reducing cognitive load when using systems, (6) promoting interoperability across different healthcare settings, (7) facilitating engagement among patients, mobile technologies, and EHRs, and (8) incorporating user feedback into product development design and improvements.26

Chapter Review

The success of HIT depends on a number of human factors and requires that the systems and technologies that we develop are both useful and usable. The usability of HIT has become a critical issue in developing HIT that will work effectively and be willingly adopted by end users. Usability is defined as a measure of how easy it is to use an information system in terms of its efficiency, effectiveness, enjoyment, learnability, and safety. A number of practical methods for evaluating HIT usability were described in this chapter. They included usability testing, which involves observing users of HIT carrying out tasks using a technology, and usability inspection (heuristic evaluation and the cognitive walk-through), which involves trained analysts “stepping through” or analyzing HIT systems and their user interfaces in order to identify potential usability problems and issues. In the chapter, the relationship between poor usability and increased chances for medical error was discussed. Along these lines, it has been increasingly recognized that some HIT systems, if poorly designed, can pose a safety hazard. Approaches for more effectively testing the safety and effectiveness of HIT systems before widespread release were described. It is important that the HIT system that is developed and deployed in healthcare settings be shown to be not only effective and efficient but also safe.

Human factors is a broad area of study that includes consideration of a wide range of human elements within healthcare systems. Human–computer interaction (HCI) is a subfield of human factors that deals with understanding how humans use computers so that better systems can be designed. There are a number of dimensions of HCI, including the technology itself, the user of the technology, the task at hand, and the context of use of the technology. It is important to consider all of these dimensions when designing and deploying HIT. Design of user interfaces in healthcare can benefit from an understanding of the capabilities and limits of human cognition. For example, knowledge about how information is best displayed to healthcare professionals borrows from fundamental work in cognitive psychology and HCI. Indeed, to design effective user interfaces, human aspects related to the processing of information, training, and the potential for introduction of error must be considered. On the technological side, user interfaces are advancing rapidly. Breakthroughs have included the development of GUIs, new input and output devices, and advances in the visualization of healthcare data. Further advances include the widespread use of mobile devices and increased use of the Web and social media, allowing for access to healthcare data from many locations and supporting collaborative work practices involving multiple users. In addition, approaches to developing more effective user interfaces to HIT are also evolving, with the advent of user-centered design methods and participatory design, both of which promote increased user involvement in all stages of HIT design, implementation, and testing.

Questions

To test your comprehension of the chapter, answer the following questions and then check your answers against the list of correct answers that follows the questions.

1. Human factors can be defined as what?

A. The study of making more effective user interfaces to computer-based systems

B. The field that examines human elements of systems

C. The group of methods that can be used to make systems more usable

D. The study of technology-induced errors

2. User-centered design involves which of the following?

A. An early focus on the user and their needs

B. Continued testing of system design with users

C. Iterative feedback into redesign

D. Participation of users as members of the design team

E. All of the above

F. A, B, C, D

G. A, B, C

3. Which of the following is not a user interface metaphor?

A. The desktop metaphor

B. The document metaphor

C. The patient chart metaphor

D. The command-line metaphor

4. What are the main methods from usability engineering?

A. Participatory design

B. Cognitive walk-through

C. Heuristic inspection

D. Usability testing

E. B, C, D

F. A, B, D

5. Which of the following is not one of Nielsen’s heuristics?

A. Allow for error prevention.

B. Support recognition rather than recall.

C. Use bright colors to be aesthetically pleasing.

D. Allow for flexibility and efficiency of use.

6. Which of the following is not true?

A. Usability testing can be conducted inexpensively in hospitals.

B. Usability testing should be conducted only by human factors engineers.

C. Lack of user input in design and testing is one of the biggest causes of system implementation failure.

D. Nurses and physicians should be involved in systems design.

7. What can technology-induced errors arise from?

A. Programming errors

B. Systems design flaws

C. Inadequate requirements gathering

D. Poorly planned systems implementation

E. A, B, C, D

F. A, C

8. What are the four main components of HCI?

A. Software, task, subtask, human factors engineer

B. Technology, task, user, context of use

C. Software, hardware, user, outcome

D. User, user interface, human factors engineer, usability testing lab

Answers

1. B. Human factors broadly examines human elements of systems, where systems represent physical, cognitive, and organizational artifacts that people interact with (e.g., computers).

2. G. User-centered design involves: (1) an early focus on users and their needs, (2) continued testing of system design with users, and (3) iterative feedback into redesign.

3. D. The command line is not a metaphor; it does not represent some other object in the world.

4. E. The main methods used in usability engineering are: (1) the cognitive walk-through, (2) heuristic inspection, and (3) usability testing.

5. C. Using bright colors is not one of Nielsen’s heuristics.

6. B. Usability testing can be conducted by professionals with varied backgrounds and not just by human factors engineers. Usability engineering methods have become more widely known and have been simplified and used by different types of IT and health professionals.

7. E. Programming errors, systems design flaws, inadequate requirements gathering, and poorly planned systems implementation have all been identified as factors that cause technology-induced error in healthcare.

8. B. The four main components of HCI are the technology itself, the task, the user of the technology, and the context of use of the technology.

References

1. HealthIT.gov. (2013). EHR incentives and certification. Accessed on February 11, 2017, from https://www.healthit.gov/providers-professionals/ehr-incentives-certification.

2. Carayon, P. (Ed.). (2012). Handbook of human factors and ergonomics in health care and patient safety. CRC Press.

3. Kushniruk, A., & Borycki, E. (Eds.). (2008). Human, social and organizational aspects of health information systems. IGI Global.

4. Preece, J., Sharp, H., & Rogers, Y. (2007). Interaction design: Beyond human-computer interaction, second edition. John Wiley & Sons.

5. Nielsen, J. (1993). Usability engineering. Academic Press.

6. Kushniruk, A., & Patel, V. (2004). Cognitive and usability engineering methods for the evaluation of clinical information systems. Journal of Biomedical Informatics, 37, 56–76.

7. Nielsen, J., & Mack, R. L. (1994). Usability inspection methods. John Wiley & Sons.

8. Zhang, J., Johnson, T., Patel, V., Paige, D., & Kubose, T. (2003). Using usability heuristics to evaluate patient safety of medical devices. Journal of Biomedical Informatics, 36, 23–30.

9. Usability.gov. (2012). Research-based web design and usability guidelines. Accessed on June 20, 2012, from https://www.usability.gov/sites/default/files/documents/guidelines_book.pdf.

10. Kushniruk, A., & Borycki, E. (2006). Low-cost rapid usability engineering: Designing and customizing usable healthcare information systems. Healthcare Quarterly, 9, 98–100, 102.

11. Rubin, J., & Chisnell, D. (2008). Handbook of usability testing: How to plan, design, and conduct effective tests. John Wiley & Sons.

12. Borycki, E., & Kushniruk, A. W. (2005). Identifying and preventing technology-induced error using simulations: Application of usability engineering techniques. Healthcare Quarterly, 8, 99–105.

13. Kushniruk, A., Borycki, E., Kuwata, S., & Kannry, J. (2006). Predicting changes in workflow resulting from healthcare information systems: Ensuring the safety of healthcare. Healthcare Quarterly, 9, 114–118.

14. Shortliffe, E., & Cimino, J. (Eds.). (2006). Biomedical informatics: Computer applications in health care and biomedicine. Springer.

15. Norman, D., & Draper, S. W. (Eds.). (1986). User centered system design. LEA.

16. Patel, V. L., Kushniruk, A. W., Yang, S., & Yale, J. F. (2000). Impact of a computer-based patient record system on data collection, knowledge organization and reasoning. Journal of the American Medical Informatics Association, 7, 569–585.

17. Baecker, R. M. (1992). Readings in groupware and computer-supported cooperative work: Assisting human-human collaboration. Morgan Kaufmann.

18. Patel, V., & Kaufman, D. (2006). Cognitive science and biomedical informatics. In E. Shortliffe and J. Cimino (Eds.), Biomedical informatics: Computer applications in health care and biomedicine. Springer.

19. Patel, V. L., & Kushniruk, A. W. (1998). Interface design for health care environments: The role of cognitive science. Proceedings of the AMIA Symposium, 29–37.

20. Da Vinci Surgery. (2012). The da Vinci surgical system. Accessed on June 20, 2012, from www.davincisurgery.com/davinci-surgery/davinci-surgical-system/.

21. Bardram, J. E., Mihailidis, A., & Wan, D. (2007). Pervasive computing in healthcare. CRC Press.

22. Microsoft Health. (2012). Microsoft health common user interface. Accessed on June 20, 2012, from www.mscui.net.

23. Berg, M. (1999). Patient care information systems and health care work: A sociotechnical approach. International Journal of Medical Informatics, 55, 87–101.

24. Middleton, B., Bloomrosen, M., Dente, M. A., Hashmat, B., Koppel, R., Overhage, J. M., … Zhang, J. (2013). Enhancing patient safety and quality of care by improving the usability of electronic health record systems: Recommendations from AMIA. Journal of the American Medical Informatics Association, 20(e1), e2–e8.

25. American Medical Association. (2014). Improving care: Priorities to improve electronic health record usability. Accessed on June 20, 2012, from https://www.aace.com/files/ehr-priorities.pdf.