CHAPTER 25

Framework for Privacy, Security, and Confidentiality

Dixie B. Baker*

In this chapter, you will learn how to

• Explain the relationship between dependability and healthcare quality and safety

• Identify and explain five guidelines for building dependable systems

• Present an informal assessment of the healthcare industry with respect to these guidelines

The healthcare industry is in the midst of a dramatic transformation that is motivated by costly administrative inefficiencies, a payment system that rewards doctor visits rather than healthy outcomes, and a hit-or-miss approach to diagnosis and treatments. This transformation is driven by a number of factors, most prominently the skyrocketing cost of healthcare in the United States; the exposure of patient-safety problems related to care; advances in genomics and “big data” analytics; and an aging, socially networked population that expects the healthcare industry to effectively leverage information technology to manage costs, improve health outcomes, advance medical science, and engage consumers as active participants in their own health.

The U.S. Health Information Technology for Economic and Clinical Health (HITECH) Act, enacted in 2009 as part of the American Recovery and Reinvestment Act (ARRA), provided major structural changes; funding for research, technical support, and training; and financial incentives designed to significantly accelerate this transformation.1 The HITECH Act codified the Office of the National Coordinator (ONC) for Health Information Technology (HIT) and assigned it responsibility for developing a nationwide infrastructure that would facilitate the use and exchange of electronic health information, including policy, standards, implementation specifications, and certification criteria. In enacting the HITECH Act, Congress recognized that the meaningful use and exchange of electronic health records (EHRs) were key to improving the quality, safety, and efficiency of the U.S. healthcare system.

At the same time, the HITECH Act recognized that as more health information was recorded and exchanged electronically to coordinate care, monitor quality, measure outcomes, and report public health threats, the risk to personal privacy and patient safety would be heightened. This recognition is reflected in the fact that four of the eight areas the HITECH Act identified as priorities for the ONC specifically address risks to individual privacy and information security:1

• Technologies that protect the privacy of health information and promote security in a qualified electronic health record, including for the segmentation and protection from disclosure of specific and sensitive individually identifiable health information, with the goal of minimizing the reluctance of patients to seek care (or disclose information about a condition) because of privacy concerns, in accordance with applicable law, and for the use and disclosure of limited data sets of such information

• A nationwide HIT infrastructure that allows for the electronic use and accurate exchange of health information

• Technologies that as a part of a qualified electronic health record allow for an accounting of disclosures made by a covered entity (as defined by the Health Insurance Portability and Accountability Act of 1996) for purposes of treatment, payment, and healthcare operations

• Technologies that allow individually identifiable health information to be rendered unusable, unreadable, or indecipherable to unauthorized individuals when such information is transmitted in the nationwide health information network or physically transported outside the secured, physical perimeter of a healthcare provider, health plan, or healthcare clearinghouse

The HITECH Act resulted in the most significant amendments to the Health Insurance Portability and Accountability Act (HIPAA) Security and Privacy Rules since the rules became law.2 In addition, the HITECH Act’s EHR certification and “meaningful use” incentive program produced the most dramatic increase in the adoption and use of certified EHR technology the United States had ever witnessed.3

Capitalizing on this widespread adoption of EHR technology, the Patient Protection and Affordable Care Act (ACA) enacted in 2010 changed the basis of payment from services rendered (e.g., visit, procedure) to the effectiveness of the outcomes attributable to those services, as captured in EHR data.4 Because care may span multiple organizations, the ACA encouraged providers to participate in networks called accountable-care organizations (ACOs) through which outcome measures are reported. The ACA significantly increased the number of individuals covered by health insurance, while at the same time increasing privacy risks for individuals and security risks for healthcare organizations.

Because coverage was associated with individuals’ Social Security numbers (SSNs), employers and providers were required to collect, store, and report SSNs, one of the most valuable individual identifiers. Thus, the healthcare organizations exchanging SSNs, along with sensitive health information, became very attractive targets for intruders. As the financial payout for medical records on the black market increased, the number of cyberattacks against healthcare systems and medical devices also increased,5 and the number of breaches of unprotected health information soared.6 Although the certified EHR technology that providers adopted was tested against a set of standards and criteria, the certification process for security mechanisms included only conformance testing and did not include any assurance evaluation that would measure the degree to which these systems could defend against, withstand, or recover from a concerted cyberattack. Thus the U.S. health system lacked the security and resilience architecture and functional components necessary to withstand a critical health infrastructure attack.7

As noted by former National Coordinator David Blumenthal, “Information is the lifeblood of modern medicine. Health information technology is destined to be its circulatory system. Without that system, neither individual physicians nor healthcare institutions can perform at their best or deliver the highest-quality care.”8 To carry Dr. Blumenthal’s analogy one step further, at the heart of modern medicine lies “trust.” Caregivers must trust that the technology and information they need will be available when they are needed at the point of care. They must trust that the information in an individual’s EHR is accurate and complete and that it has not been accidentally or intentionally corrupted, modified, or destroyed. Consumers must trust that their caregivers will keep their most private personal and health information confidential and will disclose and use it only to the extent necessary and in ways that are legal, ethical, and authorized consistent with the consumer’s personal expectations and preferences. Above all else, both providers and consumers must trust that the technology and services they use will “do no harm.”

The medical field is firmly grounded in a tradition of ethics, patient advocacy, care quality, and human safety. Health professionals are well indoctrinated on clinical practice that respects personal privacy and that protects confidential information and life-critical information services. The American Medical Association (AMA) Code of Medical Ethics commits physicians to “respect the rights of patients, colleagues, and other health professionals, and shall safeguard patient confidences and privacy within the constraints of the law,”9 and the American Nurses Association’s (ANA’s) Code of Ethics for Nurses with Interpretive Statements includes a commitment to “promote, advocate for, and strive to protect the health, safety, and rights of the patient.”10 Fulfilling these ethical obligations is the individual responsibility of each healthcare professional, who must trust that the information technology she relies upon will help and not harm patients and will protect the patient’s private information, despite the fact that digitizing information and transmitting it beyond the boundaries of the healthcare organization introduce risk to information confidentiality and integrity.

Recording, storing, using, and exchanging information electronically does indeed introduce new risks. As anyone who has used e-mail, texting, or social media knows, very little effort is required to instantaneously make private information accessible to millions of people throughout the world. We also know that disruptive and destructive software and nefarious human intruders skulk around the Internet of Things and insert themselves into our laptops, tablets, and smartphones, eager to capture our passwords, identities, credit card numbers, and health information. The question is whether these risks are outweighed by the many benefits enabled through HIT, such as the capability to receive laboratory results within seconds after a test is performed; to continuously monitor a patient’s condition remotely, without requiring him to leave his home; or to align treatments with outcomes-based protocols and decision-support rules personalized according to the patient’s condition, family history, and genome.

The ANA Code of Ethics acknowledges the challenges posed by “rapidly evolving communication technology and the porous nature of social media” and stresses that “nurses must maintain vigilance regarding postings, images, recordings, or commentary that intentionally or unintentionally breaches their obligation to maintain and protect patients’ rights to privacy and confidentiality.”10 Along with these challenges come opportunities to engage patients in protecting their own health and the health of their families. Consumer health technology is growing rapidly. A 2016 study found that approximately 24 percent of consumers were using mobile apps to track health and wellness, 16 percent were using wearable sensors, and 29 percent used electronic personal health records, a trend expected to continue.11 For healthcare professionals, this trend offers a huge opportunity to engage patients as key players in the care team, helping to measure health indicators and monitor compliance with preventive and interventional strategies.

As HIT assumes a central role in the provision of care and in healthcare decision-making, healthcare providers increasingly must trust HIT to provide timely access to accurate and complete health information and to offer personalized clinical decision support based on that information, while assuring that individual privacy is continuously protected. Legal and ethical obligations, as well as consumer expectations, drive requirements for assurance that data and applications will be available when they are needed, that private and confidential information will be protected, that data will not be modified or destroyed other than as authorized, that systems will be responsive and usable, and that systems designed to perform health-critical functions will do so safely. These are the attributes of trustworthy HIT. The Markle Foundation’s Connecting for Health collaboration identified privacy and security as technology principles fundamental to trust: “All health information exchange, including in support of the delivery of care and the conduct of research and public health reporting, must be conducted in an environment of trust, based upon conformance with appropriate requirements for patient privacy, security, confidentiality, integrity, audit, and informed consent.”12

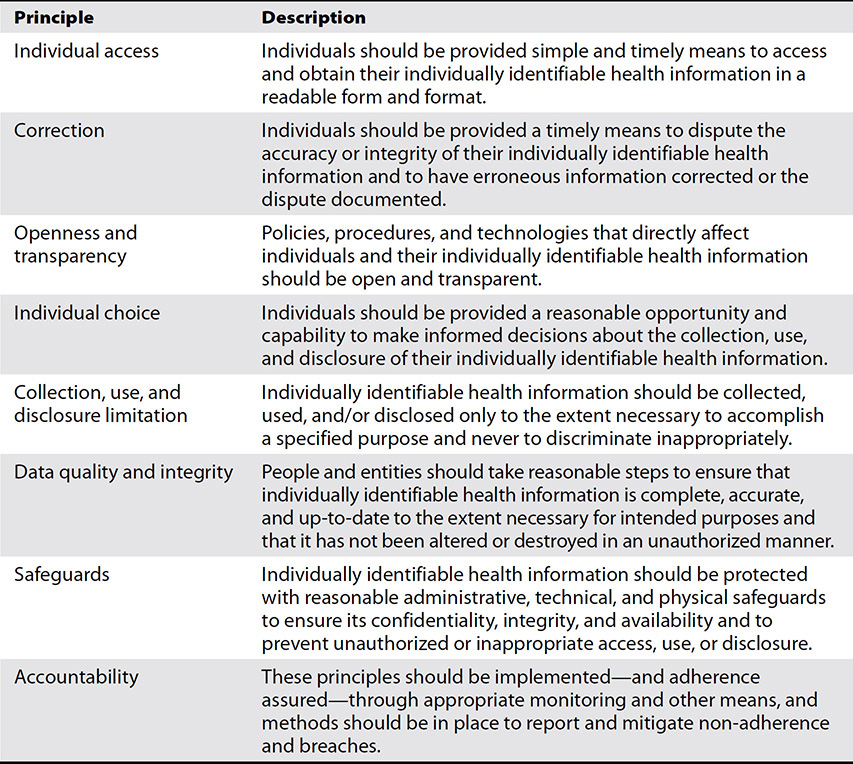

Many people think of “security” and “privacy” as synonymous. Indeed, these concepts are related—security mechanisms can help protect personal privacy by assuring that confidential personal information is accessible only by authorized individuals and entities. However, privacy is more than security, and security is more than privacy. A key component of privacy is autonomy—the right to decide for oneself whether and how one’s health information is collected, used, and shared. Healthcare privacy principles were first articulated in 1973 in a U.S. Department of Health, Education, and Welfare report (entitled “Records, Computers, and the Rights of Citizens”) as “fair information practice principles.”13 The Markle Foundation’s Connecting for Health collaboration updated these principles to incorporate the new risks created by a networked environment in which health information routinely is electronically captured, used, and exchanged.12 Other national and international privacy and security principles have also been developed that focus on individually identifiable information in an electronic environment (including but not limited to health). Based on these works, the ONC developed a Nationwide Privacy and Security Framework for Electronic Exchange of Individually Identifiable Health Information that identified eight principles intended to guide the actions of all people and entities that participate in networked, electronic exchange of individually identifiable health information.14 These principles, described in Table 25-1, essentially articulate the “rights” of individuals to openness, transparency, fairness, and choice in the collection and use of their health information.

Table 25-1 Eight Principles for Private and Secure Electronic Exchange of Individually Identifiable Health Information14

Whereas privacy has to do with an individual’s right to be left alone, security deals with protection, some of which supports that right. Security mechanisms and assurance methods are used to protect the confidentiality and authenticity of information, the integrity of data, and the availability of information and services, as well as to provide an accurate record of activities and accesses to information. Security mechanisms help assure the enforcement of access rules expressing individuals’ privacy preferences and sharing consents. While these mechanisms and methods are critical to protecting personal privacy, they are also essential in protecting patient safety and care quality, and in engendering trust in electronic systems and information. For example, if laboratory results are corrupted during transmission or historical data in an EHR is overwritten, healthcare professionals are likely to lose confidence that the HIT can be trusted to help them provide quality care. If a sensor system designed to track wandering Alzheimer’s patients shuts down without alerting those depending upon it, patients’ lives are put at risk!

Trustworthiness is an attribute of each technology component and of integrated collections of technology components, including software components that may exist in “clouds,” in smartphones, or in automobiles. Trustworthiness is very difficult to retrofit, as it must be designed and integrated into the technology or system and conscientiously preserved as the technology evolves. Discovering that an operational EHR system cannot be trusted generally indicates that extensive—and expensive—changes to the system are needed. In this chapter, we introduce a framework for achieving and maintaining trustworthiness in HIT.

When Things Go Wrong

Although we would like to be able to assume that computers, networks, and software are as trustworthy as our toasters and refrigerators, unfortunately that is not the case. When computers, networks, and software fail in such a way that critical services and data are not available when they are needed, confidential information is disclosed, or health data are corrupted, personal privacy and safety are imperiled.

In 2013, the Sutter Health System, in Northern California, experienced an outage affecting all 24 hospitals across the network. The outage was attributed to an “issue with the software that manages user access to the EHR”15—demonstrating that even technology designed to protect patients can cause security and safety risks. The “issue” resulted in a failure of both primary and back-up systems, and for one full day, nurses, physicians, and hospital staff had no access to patient information—including vital medication data, orders, and medical histories. Information available from back-up media was outdated by two to three days. Several days before the incident, the system had been purposely brought down for eight hours for a planned system upgrade—an unacceptable period of planned downtime for a “trustworthy” safety-critical system.15

Some might surmise that the risk of such outages might be mitigated by migrating systems to the cloud, where storage and infrastructure resources are made available upon demand, and services are provided in accordance with negotiated service-level agreements (SLAs). However, today’s cloud environments are equally susceptible to outages. Even the largest global cloud computing infrastructures can be brought down by seemingly minor factors—in some cases factors intended to provide security. One such example is a major crash of Microsoft’s Azure Cloud that occurred in February 2013, when a Secure Sockets Layer (SSL) certificate used to authenticate the identity of a server was allowed to expire. The outage brought down cloud storage for 12 hours and affected secure web traffic worldwide. Over 50 different Microsoft services reported performance problems during the outage.16

Use Case 25-1: Stolen IDs Can Put Patient Safety at Risk

Identity theft is a felonious and seriously disruptive invasion of personal privacy that also can cause physical harm. In 2006, a 27-year-old mother of four children in Salt Lake City received a phone call from a Utah social worker notifying her that her newborn had tested positive for methamphetamines and that the state planned to remove all of her children from her home. The young mother had not been pregnant in more than two years, but her stolen driver’s license had ended up in the hands of a meth user who gave birth while registered at the hospital using the stolen identity. After a few tense days of urgent phone calls with child services, the victim was allowed to keep her children. She hired an attorney to sort out the damages to her legal and medical records. Months later, when she needed treatment for a kidney infection, she carefully avoided the hospital where her stolen identity had been used. But her caution did no good—her electronic record, with the identity thief’s medical information intermingled, had circulated to hospitals throughout the community. The hospital worked with the victim to correct her charts to avoid making life-critical decisions based on erroneous information. The data corruption damage could have been far worse had the thief’s baby not tested positive for methamphetamines, bringing the theft to the victim’s attention.17

One’s personal genome is the most uniquely identifying of health information, and it contains information not only about the individual, but about her parents and siblings as well. One determined and industrious 15-year-old used his own personal genome to find his biological father, who was his mother’s anonymous sperm donor.18 Even whole genome sequences that claim to be “anonymized” can disclose an individual’s identity, as demonstrated by a research team at Whitehead Institute for Biomedical Research.19 As genomic data are integrated into EHRs and routinely used in clinical care, the potential for damage from unauthorized disclosure and identity theft significantly increases.

In July 2009, after noting several instances of computer viruses affecting the United Kingdom’s National Health Service (NHS) hospitals, a British news broadcasting station conducted a survey of the NHS trusts throughout England to determine how many of their systems had been infected by computer viruses. Seventy-five percent replied, reporting that over 8,000 viruses had penetrated their security systems, with 12 incidents affecting clinical departments, putting patient care at risk and exposing personal information. One Scottish trust was attacked by the Conficker virus, which shut down computers for two days. Some attacks were used to steal personal information, and at a cancer center, 51 appointments and radiotherapy sessions had to be rescheduled.20 The survey seemed to have little effect in reducing the threat—less than a year later, NHS systems were victimized by the Qakbot data-stealing worm, which infected over a thousand computers and stole massive amounts of information.21

For decades, healthcare organizations and individuals have known that they need to fortify their desktop computers and laptops to counter the threat of computer malware. However, as medical device manufacturers have integrated personal computer technology into medical devices, they have neglected to protect against malware threats. The U.S. Department of Veterans Affairs (VA) reported that between 2009 and 2013, malware infected at least 327 medical devices in VA hospitals, including X-ray machines, lab equipment, equipment used to open blocked arteries, and cameras used in nuclear medicine studies. In one reported outbreak, the Conficker virus was detected in 104 devices in a single VA hospital. A VA security technologist surmised that the malware most likely was brought into the hospital on infected thumb drives used by vendor support technicians to install software updates.22 Infections from malware commonly seen in the wild are most likely to cause a degradation of system performance or to expose patients’ private data. However, malware written to target a specific medical device could result in even more dire consequences. Recognizing the safety risk posed by malware, the Food and Drug Administration (FDA) in 2013 published a warning addressed to medical device manufacturers, hospitals, medical device users, healthcare IT and procurement staff, and biomedical engineers regarding cybersecurity risks affecting medical devices and hospital networks.23

Since 2009, entities covered under HIPAA have been required to notify individuals whose unsecured protected health information (PHI) may have been exposed due to a security breach, and to report to the Department of Health and Human Services (HHS) breaches affecting 500 or more individuals. HHS maintains a public web site, frequently referred to as the “wall of shame,” listing the breaches reported.24 Between October 21, 2009, and March 2, 2017, a total of 1,850 breaches, affecting over 172 million individuals, were reported! One breach, affecting nearly 79 million individuals, accounted for almost half of the individuals affected. From the first reported breach until March 26, 2010, no hacking incidents were reported. Since that time, 283 hacking incidents have been reported, including three that together affected nearly 100 million individuals.2

TIP Breaches affecting 500 or more individuals must be immediately reported to the secretary of the Department of Health and Human Services, who must post a list of such breaches to a public web site.
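The notification duties described above can be read as a simple decision rule. The following Python sketch is illustrative only: the `Breach` class, the `notification_duties` function, and the duty labels are hypothetical names, and the sketch models only the two duties and the 500-individual threshold named in the text, not the full regulation.

```python
from dataclasses import dataclass

# Threshold taken from the text: breaches affecting 500 or more
# individuals must be reported to HHS and are listed publicly.
HHS_REPORT_THRESHOLD = 500


@dataclass(frozen=True)
class Breach:
    """Hypothetical breach record; field names are illustrative."""
    description: str
    individuals_affected: int
    involves_unsecured_phi: bool


def notification_duties(breach: Breach) -> list[str]:
    """Return the duties named in the text that apply to this breach."""
    duties: list[str] = []
    # The text ties notification to *unsecured* PHI; this sketch assumes
    # that a breach of secured PHI triggers neither duty.
    if not breach.involves_unsecured_phi:
        return duties
    duties.append("notify affected individuals")
    if breach.individuals_affected >= HHS_REPORT_THRESHOLD:
        duties.append("report to HHS for public listing")
    return duties


small = Breach("Misdirected fax", 12, True)
large = Breach("Stolen backup tapes", 4200, True)
print(notification_duties(small))
print(notification_duties(large))
```

A real compliance workflow would also need to track deadlines and any state-level requirements, which are outside the scope of this sketch.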

The bottom line is that systems, networks, and software applications, as well as the contexts within which they are used, are highly complex, and the only safe assumption is that “things will go wrong.” Trustworthiness is an essential attribute for the systems, software, services, processes, and people used to manage individuals’ health information and to help provide safe, high-quality healthcare.

HIT Trust Framework

Trustworthiness can never be achieved by implementing a few policies and procedures, licensing some security technology, and checking all the boxes in a HIPAA checklist. Protecting sensitive and safety-critical health information, and assuring that the systems, services, and information that healthcare professionals rely upon to deliver quality care are available when they are needed, require a complete HIT trust framework that starts with an objective assessment of risk and that is conscientiously applied throughout the development and implementation of policies, operational procedures, and security safeguards built on a solid system architecture. This trust framework is depicted in Figure 25-1 and comprises seven layers of protection, each of which is dependent upon the layers below it (indicated by the arrows in the figure), and all of which must work together to provide a trustworthy HIT environment for healthcare delivery. This trust framework does not dictate a physical architecture; it may be implemented within a single site or across multiple sites, and may comprise enterprise, mobile, home health, and cloud components.

Figure 25-1 A framework for achieving and maintaining trustworthiness in healthcare IT comprises multiple layers of trust, beginning with objective risk assessment that serves as the foundation for information assurance policy and operational, architectural, and technological safeguards.

Layer 1: Risk Management

Risk management is the foundation of the HIT trust framework. Objective risk assessment informs decision making and positions an organization to address those physical, operational, and technical deficiencies that pose the highest risk to the organization’s information assets. Objective risk assessment also puts in place protections that will enable the organization to manage the residual risk and liability.

TIP Patient safety, individual privacy, and information security all relate to risk, which is simply the probability that some “bad thing” will happen to adversely affect a valued asset. Risk is always considered with respect to a given context comprising relevant threats, vulnerabilities, and valued assets. Threats can be natural occurrences (e.g., earthquake, hurricane), accidents, or malicious people and software programs. Vulnerabilities are present in facilities, hardware, software, communication systems, business processes, workforces, and electronic data. Valued assets can be anything from reputation to business infrastructure to information to human lives.

A security risk is the probability that a threat will exploit a vulnerability to expose confidential information, corrupt or destroy data, or interrupt or deny essential information services. If that risk could result in the unauthorized disclosure of an individual’s private health information or the compromise of an individual’s identity, it also represents a privacy risk. If the risk could result in the corruption of clinical data or an interruption in the availability of a safety-critical system, potentially causing human harm or the loss of life, it is a safety risk as well.
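The relationship among security, privacy, and safety risks can be made concrete with a small classification sketch. The Python below is a minimal illustration, assuming each risk is described by the set of consequences it could produce; the `Consequence` enumeration and the `classify` function are hypothetical names introduced here, not part of any standard.

```python
from enum import Enum, auto


class Consequence(Enum):
    """Consequences named in the definitions above (labels are illustrative)."""
    CONFIDENTIAL_INFO_EXPOSED = auto()
    DATA_CORRUPTED_OR_DESTROYED = auto()
    SERVICE_INTERRUPTED = auto()
    PRIVATE_HEALTH_INFO_DISCLOSED = auto()
    IDENTITY_COMPROMISED = auto()
    CLINICAL_DATA_CORRUPTED = auto()
    SAFETY_CRITICAL_SYSTEM_UNAVAILABLE = auto()


PRIVACY_CONSEQUENCES = {
    Consequence.PRIVATE_HEALTH_INFO_DISCLOSED,
    Consequence.IDENTITY_COMPROMISED,
}
SAFETY_CONSEQUENCES = {
    Consequence.CLINICAL_DATA_CORRUPTED,
    Consequence.SAFETY_CRITICAL_SYSTEM_UNAVAILABLE,
}


def classify(consequences: set[Consequence]) -> set[str]:
    """Label a security risk as also a privacy and/or safety risk.

    The input is assumed to describe a security risk already, so the
    result always contains "security".
    """
    labels = {"security"}
    if consequences & PRIVACY_CONSEQUENCES:
        labels.add("privacy")
    if consequences & SAFETY_CONSEQUENCES:
        labels.add("safety")
    return labels


# Lab results corrupted in transit: a security risk and a safety risk.
lab_corruption = {
    Consequence.DATA_CORRUPTED_OR_DESTROYED,
    Consequence.CLINICAL_DATA_CORRUPTED,
}
# Medical identity theft that intermingles two patients' records.
identity_theft = {
    Consequence.IDENTITY_COMPROMISED,
    Consequence.CLINICAL_DATA_CORRUPTED,
}
print(sorted(classify(lab_corruption)))
print(sorted(classify(identity_theft)))
```

The second example mirrors Use Case 25-1, where a stolen identity produced both a privacy harm and a potential safety harm.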

Information security is widely viewed as the protection of information confidentiality, data integrity, and service availability. Indeed, these are the three types of technical safeguards directly addressed by the HIPAA Security Rule.2 Generally, safety is most closely associated with protective measures for data integrity and the availability of life-critical information and services, while privacy is more often linked to confidentiality. However, the unauthorized exposure of private health information, or corruption of one’s personal EHR as a result of an identity theft, can also put an individual’s health, safety, lifestyle, and livelihood at risk.

Risk management is an ongoing, individualized discipline wherein each individual or each organization examines its own threats, vulnerabilities, and valued assets and decides for itself how to deal with identified risks—whether to reduce or eliminate them, counter them with protective measures, or tolerate them and prepare for the consequences. Risks to personal privacy, patient safety, care quality, financial stability, and public trust all must be considered in developing an overall strategy for managing risks both internal and external to an organization. Effective risk management helps security managers determine how to allocate their budgets to get the strongest protection possible against those threats that could cause the most harm to the organization.
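One common way to decide where limited budget should go first is to rank identified risks by a rough measure of exposure. The sketch below is an assumption-laden illustration rather than a prescribed method: the `RegisterEntry` class, the 1-to-5 impact scale, the likelihood estimates, and the product used as an exposure score are all hypothetical choices that an organization would replace with its own.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegisterEntry:
    """One row of a hypothetical risk register."""
    name: str
    likelihood: float  # assumed probability estimate in [0, 1]
    impact: int        # assumed scale: 1 (minor) to 5 (severe)

    @property
    def exposure(self) -> float:
        # Assumed scoring rule: likelihood weighted by impact.
        return self.likelihood * self.impact


def prioritize(entries: list[RegisterEntry]) -> list[RegisterEntry]:
    """Order risks from highest to lowest exposure."""
    return sorted(entries, key=lambda e: e.exposure, reverse=True)


# Illustrative entries; the numbers are made up for the example.
register = [
    RegisterEntry("Expired server certificate", 0.10, 4),
    RegisterEntry("Malware on networked medical devices", 0.30, 5),
    RegisterEntry("Lost unencrypted laptop", 0.20, 3),
]

for entry in prioritize(register):
    print(f"{entry.name}: exposure {entry.exposure:.2f}")
```

The ranking is only a starting point for discussion; the decision to reduce, counter, or tolerate each risk remains a judgment the organization makes for itself.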

In bygone times, risk assessment focused on resources within a well-defined physical and electronic boundary that comprised the “enterprise.” In today’s environment, where resources may include cloud components, bring-your-own-device (BYOD) equipment, smartphones, and personal health-monitoring devices, as well as large, complex enterprise systems, risk assessment is far more challenging and requires careful analysis of potential data flows and close examination of SLAs for enterprise and cloud services, data-sharing agreements with trading partners, and personnel agreements.

TIP Resource virtualizations—from Internet transmissions to cloud computing—present particular challenges because the computing and networking resources used may be outside the physical and operational control of the subscriber and are likely to be shared with other subscribers.

Layer 2: Information Assurance Policy

The risk management strategy will identify what security, privacy, and safety risks need to be addressed through an information assurance policy that governs operations, information technology, and individual behavior. The information assurance policy comprises rules that guide organizational decision-making, and that define behavioral expectations and sanctions for unacceptable actions. The policy defines rules for protecting individuals’ private information, protecting the security of that information, and providing choice and transparency with respect to how individuals’ health information is safely used and shared. It includes rules that protect human beings, including patients, employees, family members, and visitors, from physical harm that could result from data corruption or service interruption. Overall, the information assurance policy defines the rules enforced to protect the organization’s valued information assets from identified risks to personal privacy, information confidentiality, data integrity, and service availability.

Some policy rules are mandated by applicable state and federal laws and regulations. For example, the HIPAA Security Rule requires compliance with a set of administrative, physical, and technical standards, and the HIPAA Privacy Rule (2013) sets forth privacy policies to be implemented.2 However, although the HIPAA regulations establish uniform minimum privacy and security standards, state health privacy laws are quite diverse. Because the HIPAA regulations apply only to “covered entities” and their “business associates” and not to everyone who may hold health information, and because the HIPAA regulations pre-empt only those state laws that are less stringent, the privacy protections of individuals and security protections of health information vary depending on who is holding the information and the state in which they are located.25

The policy that codifies the nurse’s obligation to protect patients’ privacy and safety is embodied in the ANA Code of Ethics for Nurses:10

• The nurse holds in confidence personal information and uses judgment in sharing this information.

• The nurse takes appropriate action to safeguard individuals, families, and communities when their health is endangered by a co-worker or any other person.

The HIT information assurance policy provides the foundation for the development and implementation of physical, operational, architectural, and security technology safeguards. Healthcare professionals can provide valuable insights, recommendations, and advocacy in the formulation of information assurance policy within the organizations where they practice, as well as within their professional organizations and with state and federal governments.

Layer 3: Physical Safeguards

Physically safeguarding health information and the information technology used to collect, store, retrieve, analyze, and share health data is essential to assuring that information needed at the point and time of care is available, trustworthy, and usable in providing quality healthcare. Although the electronic signals that represent health information are not themselves “physical,” the facilities within which data is generated, stored, displayed, and used; the media on which data is recorded and shared; the information system hardware used to process, access, and display the data; and the communications equipment used to transmit and route the data are physical. So are the people who generate, access, and use the information the data represent. Physical safeguards are essential to protecting these assets in accordance with the information assurance policy.

TIP The HIPAA Security Rule prescribes four standards for physically safeguarding electronic health information protected under HIPAA: facility-access controls, workstation use, workstation security, and device and media controls. Physically safeguarding the lives and well-being of patients is central to the roles and responsibilities of healthcare professionals. Protecting patients requires the physical protection of the media on which their health data are recorded, as well as the devices, systems, networks, and facilities involved in data collection, use, storage, and disposal.

Healthcare organizations are increasingly choosing to purchase services from third parties, rather than hosting and maintaining these services within their own facilities. Third-party services include EHR software-as-a-service (SaaS) applications, outsourced hosting services, and cloud storage, platform, and infrastructure offerings. The HIPAA Security Rule requires that the providers of these services sign a business associate agreement in which they agree to meet all of the HIPAA security standards. However, if a breach occurs, the covered entity retains primary responsibility for reporting and responding to the breach. So it is essential that healthcare entities perform due diligence to assure that their business associates understand and are capable of providing the required levels of physical protection and data isolation.

Layer 4: Operational Safeguards

Operational safeguards are processes, procedures, and practices that govern the creation, handling, usage, and sharing of health information in accordance with the information assurance policy. The HIT trust framework shown in Figure 25-1 includes the following operational safeguards.

System Activity Review

One of the most effective means of detecting potential misuse and abuse of privileges is the regular review of records of information system activity, such as audit logs, facility access reports, security incident tracking reports, and accountings of disclosures. The HIPAA Privacy Rule requires that covered entities provide to patients, upon request, an accounting of all disclosures (i.e., outside the entity holding the information) of their PHI except for those disclosures for purposes of treatment, payment, and healthcare operations (TPO). The HITECH Act dropped the TPO exception, and a proposed rule was published in May 2011.26 However, in October 2014, the Office for Civil Rights announced a delay in the release of a final rule. As of this writing, no final regulation has been released.

Technology that automates system-activity review is increasingly being used by healthcare organizations to detect potential intrusions and internal misuse, particularly since healthcare has become a key target for intruders.

Continuity of Operations

Unexpected events, both natural and human-produced, do happen, and when they do, it is important that critical health services can continue to be provided. As healthcare organizations become increasingly dependent on electronic health information and information systems, the need to plan for unexpected events and for operational procedures that enable the organization to continue to function through emergencies becomes more urgent. The HIPAA Security Rule requires that organizations establish and implement policies and procedures for responding to an emergency. Contingency planning is part of an organization’s risk-management strategy, and the first step is performed as part of a risk assessment—identifying those software applications and data that are essential for enabling operations to continue under emergency conditions and for returning to full operations. These business-critical systems are those to which architectural safeguards such as fail-safe design, redundancy and failover, and availability engineering should be applied.

The rapidly escalating risk to healthcare organizations is motivating them to strengthen their continuity-of-operations posture. The 2015 Cybersecurity Survey conducted by the Healthcare Information and Management Systems Society (HIMSS) reported that 87 percent of respondents “indicated that information security had increased as a business priority” over the previous year, resulting in “improvements to network security capabilities, endpoint protection, data loss prevention, disaster recovery, and information technology (IT) continuity.”27

Incident Procedures

Awareness and training should include a clear explanation of what an individual should do if they suspect a security incident, such as a malicious code infiltration, a denial-of-service attack, or a breach of confidential information. Organizations need to plan their response to an incident report, including procedures for investigating and resolving the incident, notifying individuals whose health information may have been exposed as a result of the incident, and penalizing parties responsible for the incident. Individuals whose information may have been exposed as a result of a breach must be notified, and breaches affecting 500 or more individuals must be reported to the Department of Health and Human Services (HHS).

Not all security incidents are major or require enterprise-wide response. Some incidents may be as simple as a user accidentally including PHI in a request to the help desk. Incident procedures should not require a user or help desk operator to make a judgment call on the seriousness of a disclosure; procedures should be clear about what an individual should do when they notice a potential disclosure.

Sanctions

The HIPAA law, as amended by the HITECH Act, prescribes severe civil and criminal penalties for sanctioning entities that fail to comply with the privacy and security provisions.28,1 Organizations must implement appropriate sanctions to penalize workforce members who fail to comply with privacy and security policies and procedures. An explanation of workforce sanctions should be included in annual HIPAA security and privacy training.

Evaluation

Periodic, objective evaluation of the operational and technical safeguards in place helps measure the effectiveness, or “outcomes,” of the security management program. A formal evaluation should be conducted at least annually and should involve independent participants who are not responsible for the program. Security evaluation should include resources and services maintained within the enterprise, as well as resources and services provided by business associates—including SaaS and cloud-services providers. Independent evaluators can be from either within or outside an organization, as long as they can be objective. In addition to the annual programmed evaluation, security technology safeguards should be evaluated whenever changes in circumstances or events occur that affect the risk profile of the organization.

Security Operations Management

HIPAA regulations require that each healthcare organization designate a “security official” and a “privacy official” to be responsible for developing and implementing security and privacy policies and procedures. The management of services relating to the protection of health information and patient privacy touches every function within a healthcare organization. For large enterprises, responsibility for security- and safety-critical operations may require implementation of shared, two-person administration.

Awareness and Training

One of the most valuable actions a healthcare organization can take to maintain public trust is to inculcate a culture of safety, privacy, and security. If every person employed by, or associated with, a healthcare organization feels individually responsible for protecting the confidentiality, integrity, and availability of health information and the privacy and safety of patients, the risk for that organization will be vastly reduced! Recognition of the value of workforce training is reflected in the fact that the HIPAA Security and Privacy Rules require training in security and privacy, respectively, for all members of the workforce. Formal privacy and security training should be required to be completed at least annually, augmented by simple reminders.

Business Agreements

Business agreements help manage risk and bound liability, clarify responsibilities and expectations, and define processes for addressing disputes among parties. The HIPAA Privacy and Security Rules require that each person or organization that provides to a covered entity services involving individually identifiable health information must sign a “business associate” contract obligating the service provider to comply with HIPAA requirements, subject to the same enforcement and sanctions as covered entities. The HIPAA Privacy Rule also requires “data use agreements” defining how “limited data sets” will be used. Organizations wishing to exchange health information as part of the eHealth Exchange must sign a Data Use and Reciprocal Support Agreement (DURSA) in which they agree to exchange and use message content only in accordance with the agreed-upon provisions.29 Agreements are only as trustworthy as the entities that sign them. Organizations should exercise due diligence in deciding with whom they will enter into business agreements.

The HIPAA administrative definitions draw a distinction between “business associates” and members of the “workforce” whose work performance is under the direct control of a covered entity or business associate. Although not required by HIPAA, having workforce members attest to their understanding of their responsibilities to protect privacy and security is a good business practice as part of a comprehensive risk-management program.

Configuration Management

Configuration management refers to processes and procedures for maintaining an accurate and consistent accounting of the physical and functional attributes of a system throughout its life cycle. From an information assurance perspective, configuration management is the process of controlling and documenting modifications to the hardware, firmware, software, and documentation involved in the protection of information assets.

Identity Management and Authorization

Arguably, the operational processes most critical to the effectiveness of technical safeguards are the process used to positively establish the identity of the individuals and entities to whom rights and privileges are being assigned, and the process used to assign credentials and authorizations to (and withdraw them from) those identities. Many of the technical safeguards (e.g., authentication, access control, audit, digital signature, secure e-mail) rely upon and assume the accuracy of the identity that is established when an account is created.

Identity management begins with verification of the identity of each individual before creating a system account for them. This process, called “identity proofing,” may require the person to present one or more government-issued documents containing the individual’s photograph, such as a driver’s license or passport. Once identity has been positively established, a system account is created, giving the individual the access rights and privileges essential to performing their assigned duties, and a “credential” is issued to serve as a means of “authenticating” identity when the individual attempts to access resources. (See the “Layer 6: Technology Safeguards” section later in the chapter.) The life cycle of identity management includes the prompt termination of identities and authorizations when the individual leaves the organization, is suspected of misuse of privileges, or otherwise no longer needs the resources and privileges assigned to them. In addition, the identity-management life cycle includes the ongoing maintenance of the governance processes.

Consent Management

Just as medical ethics and state laws require providers to obtain a patient’s “informed consent” before delivering medical care, or administering diagnostic tests or treatment, a provider must obtain the individual’s consent (also called “permission” or “authorization”) prior to taking any actions that involve their personal information. Similarly, the Federal Policy for the Protection of Human Subjects (aka the Common Rule), designed to protect human research subjects, requires informed consent before using an individual’s identifiable private information or identifiable biospecimens in research.30 Obtaining an individual’s permission is fundamental to respecting their right to privacy, and a profusion of state and federal laws set forth requirements for protecting and enforcing this right.

The HIPAA Privacy Rule specifies conditions under which an individual’s personal health information may be used and exchanged, including uses and exchanges that require the individual’s express “authorization.” Certain types of information, such as psychotherapy notes and substance abuse records, have special restrictions and authorization requirements. Managing an individual’s consents and authorizations and assuring consistent adherence to the individual’s privacy preferences are complex processes, but essential to protecting personal privacy.

Historically, consent management has been primarily a manual process, and signed consent documents have been collected and managed within a single institution. However, as health information is digitized and electronically shared among multiple institutions and with the patient, paper-based consent management is no longer practical. At the same time, digitization and electronic exchange heighten the risk of disclosure and misuse. New standards and models for electronically capturing individual consents and for exchanging individual permissions are beginning to emerge. Although the HIPAA Security Rule as originally proposed in 1998 included a requirement for a digital signature standard, by the time the final rule was issued, this requirement had been omitted, placing the onus on covered entities to ensure that the digital signature methods they use are sufficiently secure and do not lead to compromise of PHI. The preamble to the Privacy Rule final rule, issued in 2002, stated “currently, no standards exist under HIPAA for electronic signatures. Thus, in the absence of specific standards, covered entities should ensure [that] any electronic signature used will result in a legally binding contract under applicable State or other law.”31 Standards for “segmenting” data for special protection also are beginning to emerge.32

Layer 5: Architectural Safeguards

A system’s architecture comprises its individual hardware and software components, the relationships among them, their relationship with the environment, and the principles that govern the system’s design and evolution over time. As shown in Figure 25-1, specific architectural design principles and the hardware and software components that support those principles work together to establish the technical foundation for security technology safeguards. In simpler times, the hardware and software components that comprised an enterprise’s architecture were under the physical and logical control of the enterprise itself, but in an era when an enterprise may depend upon external services (e.g., a health exchange service, external back-up service) and virtualized services (e.g., SaaS, cloud storage), this may not be the case. Still, the design principles discussed in the following sections apply whether an enterprise’s architecture is centralized or distributed, physical or virtual.

Scalability

As more health information is recorded, stored, used, and exchanged electronically, systems and networks must be able to deal with that growth. A catastrophic failure at CareGroup in 2002 resulted from the network’s inability to scale to the capacity required.33 The most recent stage in the evolution of the Internet specifically addresses the scalability issue by virtualizing computing resources into services, including SaaS, platforms as a service (PaaS), and infrastructure as a service (IaaS), collectively referred to as “cloud” services. Indeed, the Internet itself was created on the same principle as cloud computing: the creation of a virtual, ubiquitous, continuously expanding network through the sharing of resources (servers) owned by different entities. Whenever one sends information over the Internet, the information is broken into small packets that then “hop” from server to server on the way from source to destination, with all of the servers in between being “public” in the sense that they probably belong to someone other than the sender or the receiver. Cloud computing, a model for provisioning “on demand” computing services accessible over the Internet, pushes virtualization to a new level by sharing applications, storage, and computing power to offer flexible scalability beyond what would be economically possible otherwise.

Reliability

Reliability is the ability of a system or component to perform its specified functions consistently and over a specified period of time—an essential attribute of trustworthiness.

Safety

Safety-critical components, software, and systems should be designed so that if they fail, the failure will not cause people to be physically harmed. Note that fail-safe design may indicate that under certain circumstances, a component should be shut down or forced to cease to perform its usual functions in order to avoid harming someone. So the interrelationships among redundancy and failover, reliability, and fail-safe design are complex, yet critical to patient safety. The “break-the-glass” feature that enables a user who would not otherwise be authorized to gain access to patient information in an emergency situation is an example of fail-safe design. If, in an emergency, an EHR system “fails” to provide a healthcare provider access to the clinical information they need to deliver care, the “break-the-glass” feature will enable the system to “fail safely.” Fail-safe methods are particularly important in research, where new treatment protocols and devices are being tested for safety.
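The break-the-glass behavior described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the `BreakGlassGate` class, its field names, and the idea of pairing the override with an audit entry are hypothetical and are not drawn from any particular EHR product or from the HIPAA rules.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


@dataclass
class AccessDecision:
    granted: bool
    reason: str


@dataclass
class BreakGlassGate:
    """Hypothetical gate: normal authorization first, audited override second."""
    authorized: set                      # (user_id, patient_id) pairs
    audit_log: list = field(default_factory=list)

    def request(self, user_id, patient_id, emergency_reason=None):
        override = False
        if (user_id, patient_id) in self.authorized:
            decision = AccessDecision(True, "normal authorization")
        elif emergency_reason:
            # Fail safely: grant access so care can proceed, and record why.
            override = True
            decision = AccessDecision(True, "break-the-glass: " + emergency_reason)
        else:
            decision = AccessDecision(False, "not authorized")
        # Every request is logged so that overrides can be reviewed afterward.
        self.audit_log.append({
            "when": datetime.now(timezone.utc).isoformat(),
            "user": user_id,
            "patient": patient_id,
            "granted": decision.granted,
            "break_glass": override,
        })
        return decision
```

In this sketch, a clinician who is not on the patient's care team is refused by default but is admitted when an emergency reason is supplied, and the resulting log entry is flagged for later system activity review.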

Interoperability

Interoperability is the ability of systems and system components to work together. To exchange health information effectively, healthcare systems must interoperate at every level of the Open Systems Interconnection (OSI) reference model, and beyond that, at the syntactic and semantic levels as well.34 The Internet and its protocols, which have been adopted for use within enterprises as well, transmit data (packets of electronic bits) over a network in such a way that upon arrival at their destination, the data appear the same as when they were sent. If the data are encrypted, the receiving system must be able to decrypt the data, and if the data are wrapped in an electronic envelope (e.g., e-mail message, HL7 message), the system must open the envelope and extract the content. Finally, the system must translate the electronic data into health information that the system’s applications and users will understand. Open standards, including encryption and messaging standards, and standards for coding and exchanging security attributes and patient permissions (e.g., Security Assertion Markup Language [SAML], OAuth 2.0), are fundamental to implementing interoperable healthcare systems.

At a finer level of abstraction than the “application layer” are the syntactic packages and semantic coding that together help assure that applications that process health information will interpret the information consistently across systems within an organization, and between systems at different organizations. Historically, Health Level Seven (HL7) messaging has been used for this purpose. More recently, HL7 introduced a new model for packaging and exchanging health information. Called Fast Healthcare Interoperability Resources (FHIR), the new model is designed to capitalize on the simplicity of RESTful Internet exchange by defining “resources” that are exchanged in accordance with predefined FHIR “profiles.”35 Access authorization to FHIR resources is performed primarily using the OAuth 2.0 authorization framework.36

Further, within these packages, health information is coded using an extensive set of controlled vocabularies that are used for different purposes, such as SNOMED CT for clinical systems, ICD-10 for classifying diseases for reimbursement purposes, and RxNorm for drug prescribing. The complexity of health information exchange only serves to heighten the need for data integrity and system security.

Availability

Required services and information must be available and usable when they are needed. Availability is measured as the proportion of time a system is in a functioning condition. A reciprocal dependency exists between security technology safeguards and high-availability design—security safeguards depend on the availability of systems, networks, and information, which in turn enable those safeguards to protect enterprise assets against threats to availability, such as denial-of-service attacks. Resource virtualization and “cloud” computing are important technologies for helping assure availability.
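As a worked illustration of availability measured as a proportion of time, the following sketch computes availability from uptime and downtime and converts a target availability into an annual downtime budget. The function names and the 8,760-hour year are assumptions chosen for the example.

```python
def availability(uptime_hours: float, downtime_hours: float) -> float:
    """Proportion of total time the system was in a functioning condition."""
    total = uptime_hours + downtime_hours
    if total <= 0:
        raise ValueError("total observed time must be positive")
    return uptime_hours / total


def downtime_budget_hours(target: float, hours_per_year: float = 8760.0) -> float:
    """Hours per year a system may be down and still meet the target."""
    if not 0.0 <= target <= 1.0:
        raise ValueError("target must be between 0 and 1")
    return (1.0 - target) * hours_per_year
```

Under these assumptions, a target of 0.999 (“three nines”) leaves a budget of roughly 8.76 hours of downtime per year, which gives a sense of how demanding high-availability targets are for business-critical clinical systems.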

Simplicity

Safe, secure architectures are designed to minimize complexity. The simplest design and integration strategy will be the easiest to understand, maintain, and recover in the case of a failure or disaster. Achieving simplicity may be an elusive goal for implementers of healthcare systems, but it is an important principle for helping minimize security vulnerabilities.

Process Isolation

Isolation refers to the extent to which processes running on the same system at different trust levels, virtual machines (VMs) running on the same hardware, or applications running on the same computer or tablet are kept separate so that if one process, VM, or application misbehaves or is compromised, other processes, VMs, or applications can continue to operate safely and securely. Isolation is particularly important to preserve the integrity of the operating system itself. Within an operating system, functions critical to the security and reliability of the system execute within a protected hardware state, while untrusted applications execute within a separate state. However, this hardware architectural isolation is undermined if the system is configured so that untrusted applications are allowed to run with privilege, which puts the operating system itself at risk. For example, if a user logs into an account with administrative privileges and then runs an infected application (or opens an infected e-mail attachment), the entire operating system becomes infected.

Within a cloud environment, the hypervisor is assigned responsibility for assuring that VMs are kept separate so that processes running on one subscriber’s VM cannot interfere with those running on another VM. In general, the same security safeguards used to protect an enterprise system are equally effective in a cloud environment—but only if the hypervisor is able to maintain isolation among virtual environments. Another example of isolation is seen in the Apple iOS environment. Apps running on an iPad or iPhone are isolated such that not only are they unable to view or modify each other’s data, but one app does not even know whether another app is installed on the device. (Apple calls this architectural feature “sandboxing.”)

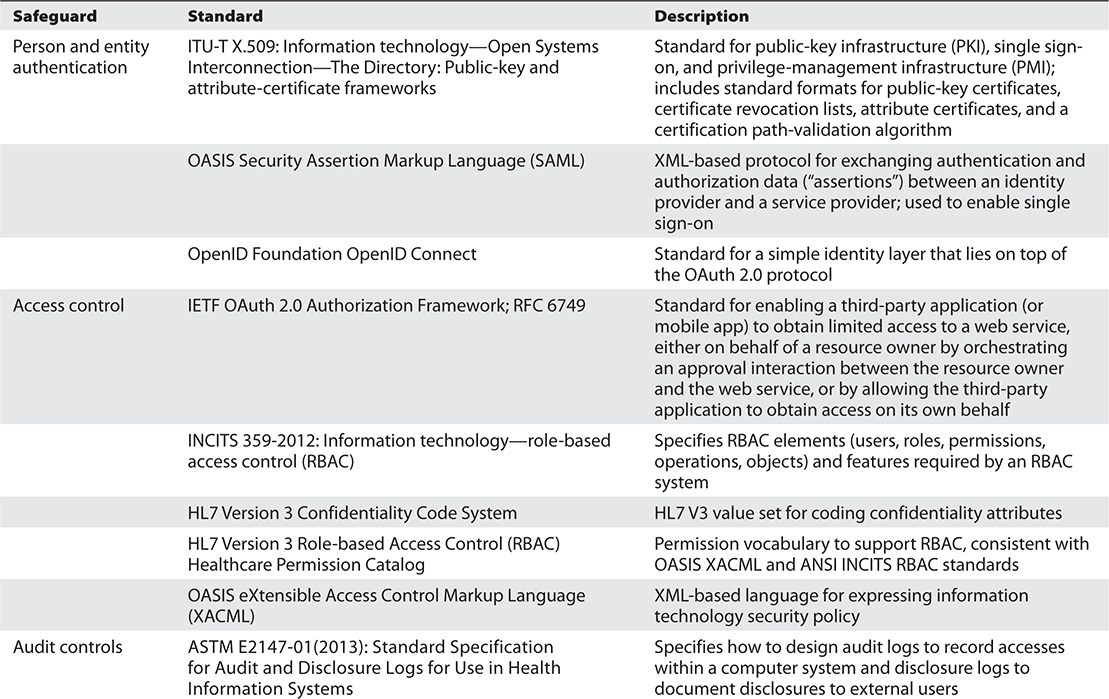

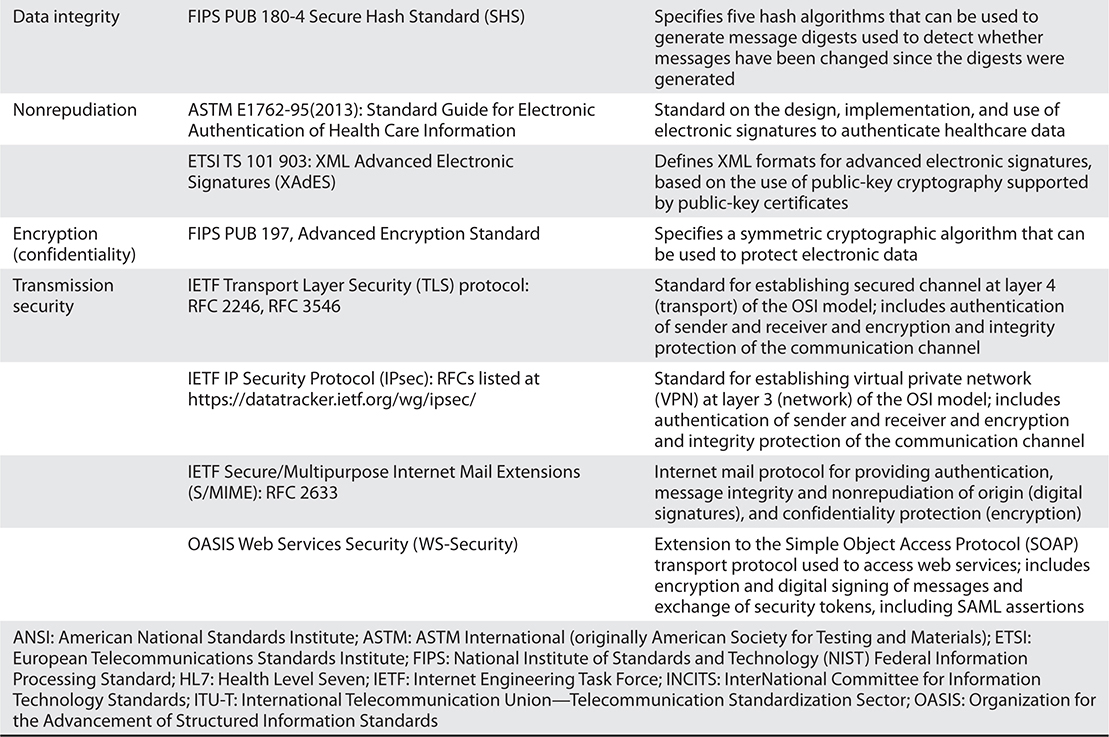

Layer 6: Technology Safeguards

Technology safeguards are software and hardware services specifically designed to perform security-related functions. All of the security services depicted in Figure 25-1 are technical safeguards required by the HIPAA Security Rule. Table 25-2 identifies a number of standards that are useful in implementing these safeguards.

Table 25-2 Many Open Standards Address Security Technology Safeguards

Person and Entity Authentication

The identity of each entity, whether it be a person or a software entity, must be clearly established before that entity is allowed to access protected systems, applications, and data. Identity management and authorization processes are used to validate identities and to assign them system rights and privileges. (See the “Identity Management and Authorization” section earlier in the chapter.) Then, whenever the person or application requires access, it asserts an identity (userID) and authenticates that identity by providing some “proof” in the form of something it has (e.g., smartcard), something it knows (e.g., password, private encryption key), or something it is (e.g., fingerprint). The system then checks to verify that the userID represents someone who has been authorized to access the system, and then verifies that the “proof” submitted provides the evidence required. While only people can authenticate themselves using biometrics, both people and software applications can authenticate themselves using public-private key exchanges.

Individuals who work for federal agencies, such as the Department of Veterans Affairs (VA), are issued Personal Identity Verification (PIV) cards containing a digital certificate that uniquely identifies the individual and the rights assigned to them. The National Strategy for Trusted Identities in Cyberspace (NSTIC) program is working toward an “identity ecosystem” in which each individual possesses trusted credentials for proving their identity.37

Access Control

Access-control services help assure that people, computer systems, and software applications are able to use all of (and only) the resources (e.g., computers, networks, applications, services, data files, information) they are authorized to use and only within the constraints of the authorization. Access controls protect against unauthorized use, disclosure, modification, and destruction of resources and unauthorized execution of system functions. Access-control rules are based on federal and state laws and regulations, the enterprise’s information assurance policy, as well as consumer-elected preferences. These rules may be based on the user’s identity, the user’s role, the context of the request (e.g., location, time of day), and/or a combination of the sensitivity attributes of the data and the user’s authorizations.
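The kinds of rules listed above (identity, role, context, and data sensitivity) can be combined in a single decision function. The sketch below is illustrative only: the roles, locations, working hours, and the patient-elected block list are all hypothetical values, and real systems typically express such rules in a policy engine rather than in application code.

```python
from dataclasses import dataclass
from datetime import time


@dataclass(frozen=True)
class Request:
    user_id: str
    role: str
    sensitivity: str   # "normal" or "restricted" (hypothetical labels)
    location: str
    at: time


# Hypothetical policy data, standing in for law, enterprise policy,
# and consumer-elected preferences.
ROLE_CLEARANCE = {
    "physician": {"normal", "restricted"},
    "billing_clerk": {"normal"},
}
ALLOWED_LOCATIONS = {"hospital_lan", "vpn"}
PATIENT_BLOCKED_USERS = {"nurse_17"}
CLERK_HOURS = (time(7, 0), time(19, 0))


def is_permitted(req: Request) -> bool:
    """Return True only if every applicable rule allows the request."""
    if req.user_id in PATIENT_BLOCKED_USERS:            # identity-based rule
        return False
    if req.sensitivity not in ROLE_CLEARANCE.get(req.role, set()):  # role vs. sensitivity
        return False
    if req.location not in ALLOWED_LOCATIONS:            # context: location
        return False
    if req.role == "billing_clerk":                      # context: time of day
        start, end = CLERK_HOURS
        if not (start <= req.at <= end):
            return False
    return True
```

The design choice worth noting is that the function denies by default: an unknown role has an empty clearance set, so a request is allowed only when each rule affirmatively permits it.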

OAuth 2.0 is starting to be used to authorize patients to access their own health information using a web or mobile app, and to enable a provider from one organization to access their patient’s information held by a different organization.

Audit Controls

Security auditing is the process of collecting and recording information about security-relevant events. Audit logs are generated by multiple software components within a system, including operating systems, servers, firewalls, applications, and database management systems. Many healthcare organizations rely heavily on audit log review to detect potential intrusions and misuse. Automated intrusion and misuse detection tools are increasingly being used to normalize and analyze data from network monitoring logs, system audit logs, application audit logs, and database audit logs to detect potential intrusions originating from outside the enterprise, and potential misuse by authorized users within an organization.
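A very simple form of automated misuse detection can be sketched as follows. The example assumes audit events from different sources have already been normalized into dictionaries with `user`, `patient`, and `source` keys; that event shape, the function name, and the threshold are hypothetical, and commercial tools apply far richer analytics.

```python
def flag_possible_misuse(events, max_distinct_patients=50):
    """Return users who opened more distinct patient records than the threshold.

    `events` is an iterable of normalized audit records (hypothetical shape):
    {"user": ..., "patient": ..., "source": ...}
    """
    patients_by_user = {}
    for event in events:
        patients_by_user.setdefault(event["user"], set()).add(event["patient"])
    return sorted(
        user
        for user, patients in patients_by_user.items()
        if len(patients) > max_distinct_patients
    )
```

Counting distinct patients rather than raw events means that a clinician who opens the same chart many times is not flagged, while an account that browses an unusually large number of charts is surfaced for human review.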

Data Integrity

Data integrity services provide assurance that electronic data have not been modified or destroyed except as authorized. Cryptographic hash functions are commonly used for this purpose. A cryptographic hash function is a mathematical algorithm that uses a block of data as input to generate a “hash value” such that any change to the data will change the hash value that represents it, thus detecting an integrity breach.
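The following sketch shows the idea using SHA-256 from Python's standard `hashlib` module. The choice of SHA-256 and the helper names are assumptions made for the example; the chapter does not prescribe a particular hash function.

```python
import hashlib
import hmac


def digest(data: bytes) -> str:
    """Compute a SHA-256 hash value for a block of data."""
    return hashlib.sha256(data).hexdigest()


def is_unmodified(data: bytes, expected_digest: str) -> bool:
    """Recompute the hash and compare it with the value recorded earlier."""
    return hmac.compare_digest(digest(data), expected_digest)
```

A hash value recorded when a record is stored can be compared later with a freshly computed one; a mismatch signals that the data changed. This check is only as trustworthy as the stored hash value itself, which is one reason hashes are often combined with digital signatures.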

Nonrepudiation

Sometimes the need arises to assure not only that data have not been modified inappropriately but also that the data are in fact from an authentic source. This proof of the authenticity of data is often referred to as nonrepudiation and can be met through the use of digital signatures. Digital signatures use public-key (asymmetric) encryption (discussed next) to encrypt a block of data using the signer’s private key. To authenticate that the data block was signed by the entity claimed, one only needs to try decrypting the data using the signer’s public key; if the data block decrypts successfully, its authenticity is assured.

Encryption

Encryption is simply the process of obfuscating information by running the data representing it through an algorithm (sometimes called a cipher) to make the information unreadable until it has been decrypted by someone possessing the proper encryption key. Symmetric encryption uses the same key to both encrypt and decrypt data, while asymmetric encryption (also known as public-key encryption) uses two keys that are mathematically related—one key is used for encryption and the other for decryption. One key is called a private key and is held secret; the other is called a public key and is openly published. Which key is used for encryption and which for decryption depends on the assurance objective. For example, secure e-mail encrypts the message contents using the recipient’s public key so that only a recipient holding the private key can decrypt and view it, and then digitally signs the message using the sender’s own private key so that if the recipient can use the sender’s public key to decrypt the signature, the recipient can be assured that the sender actually sent it. Encryption technology can be used to encrypt both data at rest (to protect sensitive data in storage) and data in motion (to protect electronic transmissions over networks).
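To make the two uses of a key pair concrete, here is a toy illustration using textbook RSA with a deliberately tiny key. It is a teaching sketch only: the key values are the familiar classroom example (primes 61 and 53), a single key pair stands in for both the sender's and the recipient's pairs, and there is no padding, so nothing here is suitable for protecting real data. Production systems should rely on a vetted cryptographic library.

```python
import hashlib

# Toy key pair (assumption for illustration): n = 61 * 53, e * d = 1 mod 3120.
N, E, D = 3233, 17, 2753   # (N, E) is the public key; D is the private key


def encrypt_with_public(m: int) -> int:
    """Confidentiality: anyone may encrypt, only the private key decrypts."""
    if not 0 <= m < N:
        raise ValueError("message must be smaller than the modulus")
    return pow(m, E, N)


def decrypt_with_private(c: int) -> int:
    return pow(c, D, N)


def _hash_to_int(message: bytes) -> int:
    # Reduce the hash so it fits the toy modulus; real schemes use padding.
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def sign(message: bytes) -> int:
    """Authenticity: only the private key holder can produce the signature."""
    return pow(_hash_to_int(message), D, N)


def verify(message: bytes, signature: int) -> bool:
    """Anyone holding the public key can check the signature."""
    return pow(signature, E, N) == _hash_to_int(message)
```

The same mathematical operation runs in both directions: encrypting with the public key protects confidentiality, while transforming a hash with the private key yields a signature that anyone can check with the public key.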

Malicious Software Protection

Malicious software, also called malware, is any software program designed to infiltrate a system without the user’s permission, with the intent to damage or disrupt operations or use unauthorized resources. Malicious software includes programs commonly called viruses, worms, Trojan horses, and spyware. Protecting against malicious software requires not only technical solutions to prevent, detect, and remove these intruders but also policies and procedures for reporting suspected attacks.

Transmission Security

Sensitive and safety-critical electronic data that are transmitted over open, vulnerable networks such as the Internet must be protected against unauthorized disclosure and modification. The Internet Protocol was designed with no protection against the disclosure or modification of any transmissions and no assurance of the identity of any transmitters or receivers (or eavesdroppers). Internet traffic is clearly visible from every server through which it passes on its journey from source to destination. Protecting network transmissions between two entities (people, organizations, or software programs) requires that the communicating entities authenticate themselves to each other, confirm the integrity of the data exchanged (for example, by using a cryptographic hash function), and assure that data exchanged between them are encrypted.

Both the Transport Layer Security (TLS) protocol and Internet Protocol Security (IPsec) suite support these functions, but at different layers in the OSI model. TLS establishes protected channels at the OSI transport layer (layer 4), allowing software applications to exchange information securely.38, 39, 40 For example, TLS might be used to establish a secure link between a user’s browser and a merchant’s check-out application on the Web. It is important to note that a TLS secure channel is between two servers or between a server and a browser. If data need to flow through an intermediary, such as a load balancer, the TLS link is broken. Thus TLS does not provide true end-to-end protection, such as that provided by encrypted e-mail.

IPsec addresses this problem by establishing protected channels at the OSI network layer (layer 3), allowing Internet gateways to exchange information securely. For example, IPsec might be used to establish a VPN that allows all hospitals within an integrated delivery system to openly yet securely exchange information. Because IPsec is implemented at the network layer, it is less vulnerable to malicious software applications than TLS and also less visible to users (for example, IPsec does not display an open/closed lock icon in a browser).
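For the TLS case, a client application typically does not implement authentication, integrity checking, and encryption itself but configures a library to do so. The sketch below uses Python's standard `ssl` module; requiring TLS 1.2 as the minimum version is an assumption made for the example rather than a requirement stated in this chapter, and the sketch covers only server authentication, not mutual authentication.

```python
import ssl


def make_client_context() -> ssl.SSLContext:
    """Build a client-side TLS context with certificate and hostname checks."""
    # create_default_context() enables server certificate verification
    # and hostname checking by default.
    context = ssl.create_default_context()
    # Assumption for this sketch: refuse protocol versions older than TLS 1.2.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


# Typical use (not executed here because it needs a network connection):
#   with socket.create_connection((host, 443)) as sock:
#       with make_client_context().wrap_socket(sock, server_hostname=host) as tls:
#           ...
```

The protected channel created this way ends at whichever server terminates the TLS connection, which is the limitation noted above when an intermediary sits between the two endpoints.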

Layer 7: Usability Features

The top layer of the trust framework includes services that make life easier for users. Single sign-on often is referred to as a security “service,” but in fact it is a usability service that makes authentication services more palatable. Both single sign-on and identity federation enable a user to authenticate once and then to access the multiple applications, databases, and even enterprises for which the user is authorized, without having to re-authenticate. Single sign-on enables a user to navigate among authorized applications and resources within a single organization. Identity federation enables a user to navigate between services managed by different organizations. Both single sign-on and identity federation require the exchange of security assertions. Once the user has logged into a system, that system can pass the user’s identity, along with other attributes, such as role, method of authentication, and time of login, to another entity using a security assertion. The receiving entity then enforces its own access-control rules, based on the identity passed to it.
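The exchange of a security assertion can be sketched in a few lines of Python. In this simplified illustration, the authenticating system packages the user's identity, role, method of authentication, and time of login, then signs the package; the receiving system verifies the signature and applies its own access-control rule. The field names, the shared-key HMAC signature, and both function names are assumptions made for brevity. Production systems typically rely on standards such as SAML or signed tokens with public-key signatures rather than a format like this one.

```python
import base64
import hashlib
import hmac
import json
import time

def issue_assertion(key: bytes, user: str, role: str, auth_method: str) -> str:
    """Authenticating system: package identity attributes and sign them."""
    claims = {"user": user, "role": role,
              "auth_method": auth_method, "login_time": int(time.time())}
    payload = base64.urlsafe_b64encode(json.dumps(claims).encode())
    signature = hmac.new(key, payload, hashlib.sha256).hexdigest()
    return payload.decode() + "." + signature

def accept_assertion(key: bytes, assertion: str, allowed_roles: set) -> bool:
    """Receiving system: verify the signature, then apply its own rule."""
    payload, _, signature = assertion.rpartition(".")
    expected = hmac.new(key, payload.encode(), hashlib.sha256).hexdigest()
    if not hmac.compare_digest(expected, signature):
        return False  # altered, or not issued by a holder of the key
    claims = json.loads(base64.urlsafe_b64decode(payload))
    return claims["role"] in allowed_roles

# Hypothetical example values.
trust_key = b"key-shared-by-both-systems"
assertion = issue_assertion(trust_key, "alice", "nurse", "password")
clinical_access = accept_assertion(trust_key, assertion, {"nurse", "physician"})
billing_access = accept_assertion(trust_key, assertion, {"billing"})
```

The same assertion yields different decisions at the two receivers because each one enforces its own rules; the assertion conveys identity and attributes, not permissions.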

Increasingly, identity is being federated across multiple organizations using OpenID Connect, an identity layer built on the OAuth 2.0 authorization protocol.41 For example, an individual might use her Google identity to log into a different application in a web browser or on her smartphone. Healthcare organizations are using OpenID Connect to enable an individual with an authenticated (and identity-proofed) credential from one organization to use that credential to log into a different organization. OAuth 2.0 handles the authorization of the request, and OpenID Connect adds a signed ID token that lets the receiving application validate who the user is.
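As a rough sketch of what a receiving application checks, the fragment below examines three of the standard claims carried in an OpenID Connect ID token: the issuer (`iss`), the audience (`aud`), and the expiry time (`exp`). It assumes the token's signature has already been verified by a separate step, which is omitted here. The function name, the issuer URL, and the client identifier are hypothetical.

```python
import time
from typing import Optional

def id_token_claims_ok(claims: dict, expected_issuer: str,
                       client_id: str, now: Optional[float] = None) -> bool:
    """Check issuer, audience, and expiry of already-verified ID token claims."""
    now = time.time() if now is None else now
    audience = claims.get("aud")
    # "aud" may be a single string or a list of strings.
    audiences = [audience] if isinstance(audience, str) else list(audience or [])
    return (
        claims.get("iss") == expected_issuer          # issued by the trusted provider
        and client_id in audiences                    # intended for this application
        and isinstance(claims.get("exp"), (int, float))
        and now < claims["exp"]                       # not yet expired
    )

# Hypothetical claims, with times given as seconds since the epoch.
claims = {"iss": "https://idp.example.org", "sub": "user-123",
          "aud": "clinic-portal", "iat": 1000, "exp": 2000}
accepted = id_token_claims_ok(claims, "https://idp.example.org",
                              "clinic-portal", now=1500)
```

A token issued by a different provider, addressed to a different application, or presented after its expiry time would be rejected by the same check.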

Neither single sign-on nor identity federation actually adds security protections (other than to reduce the need for users to post their passwords on their computer monitors). In fact, if the original identity-proofing process or authentication method is weak, the risk associated with that weakness will be propagated to any other entities to which the identity is passed. Therefore, whenever single sign-on or federated identity is implemented, a key consideration is the level of assurance provided by the methods used to identity-proof and authenticate the individual.

Chapter Review

Healthcare is in the midst of a dramatic and exciting transformation that will enable individual health information to be captured, used, and exchanged electronically using interoperable HIT. The potential impacts on individuals’ health and on the health of entire populations are dramatic. Outcomes-based decision support will help improve the safety and quality of healthcare. The availability of huge quantities of de-identified health information, combined with genomic sequencing data, will help scientists discover the underlying genetic profiles for diseases, leading to earlier and more accurate detection and diagnoses and more targeted and effective treatments, ultimately reaching the vision of “precision medicine.”

This chapter explained the critical role that trustworthiness plays in HIT adoption and in providing safe, private, high-quality care. It introduced and described a trust framework comprising seven layers of protection essential for establishing and maintaining trust in a healthcare enterprise. Many of the safeguards included in the trust framework have been codified in HIPAA standards and implementation specifications. Building trustworthiness in HIT always begins with objective risk assessment, a continuous process that serves as the basis for developing and implementing a sound information assurance policy and physical, operational, architectural, and technological safeguards to mitigate and manage risks to patient safety, individual privacy, care quality, financial stability, and public trust.

Questions

To test your comprehension of the chapter, answer the following questions and then check your answers against the list of correct answers that follows the questions.

1. What components are included in an information assurance policy?

A. Rules for protecting confidential information

B. Rules for assigning roles and making access-control decisions

C. Individual sanctions for violating rules for acceptable behavior

D. All of the above

2. The HIPAA Security Rule prescribes four standards for physically safeguarding electronic health information protected under HIPAA. Which four are they?

A. Redundancy, failover, reliability, and availability

B. Isolation, simplicity, redundancy, and fail-safe design

C. Interoperability, facility-access control, cloud control, and device control

D. Facility-access controls, workstation use, workstation security, and device and media controls

3. Which of the following is not an operational safeguard?

A. Reliability

B. Configuration management

C. Sanctions

D. Continuity of operations

4. The new push for virtualizing applications, storage, and computing power offers what dimension to your architectural safeguards?

A. Scalability

B. Data integrity

C. Network audit and monitoring

D. None of the above

5. What are two types of usability features?

A. Data encryption and authenticity

B. Access control and audit logs

C. Malicious network protection and isolation

D. Single sign-on and identity federation

6. Which of these statements best describes the relationship between security and privacy?

A. The terms are synonymous.

B. Security deals with cybercriminals, whereas privacy does not.

C. Security helps protect individual privacy.

D. Privacy is a security mechanism.

7. The purpose of risk management is to help an organization accomplish which of the following?

A. Identify its vulnerabilities, and assess the damage that might result if a threat were able to exploit those vulnerabilities

B. Decide what security policies, mechanisms, and operational procedures it needs

C. Figure out where to allocate a limited security budget in order to protect the organization’s most valuable assets

D. All of the above

8. Which operational process is most critical to the effectiveness of a broad range of security technical safeguards?

A. System activity review

B. Identity management

C. Incident procedures

D. Secure e-mail

Answers

1. D. The components in an information assurance policy include rules for protecting confidential information, rules for assigning roles and making access-control decisions, and individual sanctions for violating rules for acceptable behavior.

2. D. The HIPAA Security Rule prescribes four standards for physically safeguarding electronic health information protected under HIPAA: facility-access controls, workstation use, workstation security, and device and media controls.

3. A. Reliability is an architectural safeguard, not an operational safeguard.

4. A. Virtualizing applications, storage, and computing power provides the capability to scale as the need and demand for these resources increases.

5. D. Two types of usability features are single sign-on and identity federation.

6. C. Security helps protect individual privacy.

7. D. The purpose of risk management is to help an organization identify its vulnerabilities and assess the damage that might result if a threat were able to exploit those vulnerabilities; decide what security policies, mechanisms, and operational procedures it needs; and figure out where to allocate a limited security budget in order to get the most “bang for the buck.”

8. B. The operational process most critical to the effectiveness of a broad range of security technical safeguards is identity management.

References

1. American Recovery and Reinvestment Act (ARRA) of 2009, Pub. L. No. 111-5, 123 Stat. 115, 516 (Feb. 17, 2009). Accessed on March 7, 2017, from https://www.congress.gov/bill/111th-congress/house-bill/1/text.

2. Health Insurance Portability and Accountability Act (HIPAA) Privacy, Security, and Enforcement Rules, 45 C.F.R. pts. 160, 162, and 164, most recently amended January 25, 2013. Accessed on July 19, 2016, from www.ecfr.gov/.

3. HealthIT.gov. (2016). Adoption dashboard. Accessed on July 7, 2016, from https://dashboard.healthit.gov/quickstats/quickstats.php.

4. Patient Protection and Affordable Care Act (ACA), Pub. L. No. 111-148, 42 U.S.C. § 18001 et seq. (2010). Accessed on July 7, 2016, from https://www.gpo.gov/fdsys/pkg/PLAW-111publ148/pdf/PLAW-111publ148.pdf.

5. Federal Bureau of Investigation (FBI). (2014, Apr. 8). Health care systems and medical devices at risk for increased cyber-intrusions for financial gain. Accessed on July 7, 2016, from www.aha.org/content/14/140408--fbipin-healthsyscyberintrud.pdf.

6. HealthIT.gov. (2016). Safety and security dashboard. Accessed on July 7, 2016, from http://dashboard.healthit.gov/quickstats/pages/breaches-protected-health-information.php.

7. The White House. (2013, Feb. 12). Presidential Policy Directive 21: Critical infrastructure security and resilience. Accessed on July 7, 2016, from https://www.whitehouse.gov/the-press-office/2013/02/12/presidential-policy-directive-critical-infrastructure-security-and-resil.

8. Blumenthal, D. (2010, Feb. 4). Launching HITECH. New England Journal of Medicine, 362, 382–385. Accessed on July 19, 2016, from www.nejm.org/doi/full/10.1056/NEJMp0912825.

9. American Medical Association (AMA). (2001). AMA code of medical ethics: Principles of medical ethics. Accessed on March 7, 2017, from https://www.ama-assn.org/sites/default/files/media-browser/principles-of-medical-ethics.pdf.

10. American Nurses Association (ANA). (2015). Code of ethics for nurses with interpretive statements. Silver Spring, MD: Nursesbooks.org. Accessed on July 7, 2016, from http://nursingworld.org/DocumentVault/Ethics-1/Code-of-Ethics-for-Nurses.html.

11. Das, R. (2016). Top 10 healthcare predictions for 2016. Forbes. Accessed on July 7, 2016, from www.forbes.com/sites/reenitadas/2015/12/10/top-10-healthcare-predictions-for-2016/#49bef6392f63.

12. Markle Foundation Connecting for Health (Markle). (2006). The common framework: Overview and principles. Accessed on March 7, 2017, from https://www.markle.org/sites/default/files/CF-Professionals-Full.pdf.

13. Department of Health, Education, and Welfare (DHEW). (1973, July). Records, computers, and the rights of citizens: Report of the Secretary’s Advisory Committee on Automated Personal Data Systems. Accessed on July 19, 2016, from https://epic.org/privacy/hew1973report/.

14. Office of the National Coordinator for Health Information Technology, U.S. Department of Health and Human Services (ONC). (2008, Dec. 15). Nationwide privacy and security framework for electronic exchange of individually identifiable health information. Accessed on March 7, 2017, from https://www.healthit.gov/sites/default/files/nationwide-ps-framework-5.pdf.

15. McCann, E. (2013). Setback for Sutter: $1B EHR goes black. HealthcareITNews, November 10. Accessed on July 12, 2016, from www.healthcareitnews.com/news/setback-sutter-1b-ehr-goes-black.

16. Microsoft Corporation (Microsoft). (2013). Details of the February 22nd 2013 Windows Azure storage disruption. Windows Azure blog. Accessed on March 7, 2017, from https://azure.microsoft.com/en-us/blog/details-of-the-february-22nd-2013-windows-azure-storage-disruption/.

17. Rys, R. (2008). The imposter in the ER: Medical identity theft can leave you with hazardous errors in health records. NBCNews.com, March 13. Accessed on July 19, 2016, from www.msnbc.msn.com/id/23392229/ns/health-health_care.

18. Stein, R. (2005). Found on the web, with DNA: A boy’s father. Washington Post, November 13. Accessed on July 19, 2016, from www.washingtonpost.com/wp-dyn/content/article/2005/11/12/AR2005111200958.html.

19. Gymrek, M., McGuire, A. L., Golan, D. E., Halperin, E., & Erlich, Y. (2013). Identifying personal genomes by surname inference. Science, 339, 321–324. Accessed on July 19, 2016, from http://data2discovery.org/dev/wp-content/uploads/2013/05/Gymrek-et-al.-2013-Genome-Hacking-Science-2013-Gymrek-321-4.pdf.

20. Cohen, B. (2009). NHS hit by a different sort of virus. Channel 4 News, July 9. Accessed on July 19, 2016, from www.channel4.com/news/articles/science_technology/nhs+hit+by+a+different+sort+of+virus/3256957.

21. Goodin, D. (2010). NHS computers hit by voracious, data-stealing worm. Register, April 23. Accessed on July 19, 2016, from www.theregister.co.uk/2010/04/23/nhs_worm_infection/.

22. Weaver, C. (2013). Patients put at risk by computer viruses. Wall Street Journal. Accessed on March 7, 2017, from https://www.wsj.com/articles/SB10001424127887324188604578543162744943762.

23. Food and Drug Administration (FDA). (2013, June 13). Cybersecurity for medical devices and hospital networks: FDA safety communication. Accessed on July 12, 2016, from www.fda.gov/MedicalDevices/Safety/AlertsandNotices/ucm356423.htm.

24. Department of Health and Human Services (HHS). (2016). Health information privacy: Breaches affecting 500 or more individuals. Accessed on July 12, 2016, from https://ocrportal.hhs.gov/ocr/breach/breach_report.jsf.

25. Pritts, J., Choy, A., Emmart, L., & Hustead, J. (2002, June 1). The state of health privacy: A survey of state health privacy statutes, second edition. Accessed on July 19, 2016, from http://ihcrp.georgetown.edu/privacy/pdfs/statereport2.pdf.

26. Department of Health and Human Services (HHS). (2011). HIPAA privacy rule accounting of disclosures under the Health Information Technology for Economic and Clinical Health Act: Proposed Rule. 76 Fed. Reg. 31425 (May 31, 2011) (to be codified at 45 C.F.R. pt. 164). Accessed on July 12, 2016, from https://www.federalregister.gov/articles/2011/05/31/2011-13297/hipaa-privacy-rule-accounting-of-disclosures-under-the-health-information-technology-for-economic.

27. Healthcare Information and Management Systems Society (HIMSS). (2015, June 30). 2015 cybersecurity survey. Accessed on July 12, 2016, from www.himss.org/2015-cybersecurity-survey.