Here, we used TensorFlow's Contrib layers to build the neural network layers. This made our work slightly easier, since we did not have to declare the weights and biases for each layer separately. The work can be simplified further with a higher-level API such as Keras. Here is the same model in Keras, with TensorFlow as the backend:

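```python
import numpy as np
from keras.models import Sequential
from keras.layers import Dense
from sklearn.metrics import r2_score, mean_squared_error

# X_train, X_test, y_train, y_test are assumed to be prepared (split and scaled)
# as in the earlier TensorFlow version of this example.

# Network parameters
m = len(X_train)  # Number of training samples
n = 3             # Number of features
n_hidden = 20     # Number of hidden neurons

# Hyperparameters
batch = 20
eta = 0.01        # Learning rate (not used below: 'adam' runs with Keras's default rate)
max_epoch = 100

# Build the model
model = Sequential()
model.add(Dense(n_hidden, input_dim=n, activation='relu'))
model.add(Dense(1, activation='sigmoid'))  # Sigmoid output assumes targets scaled to [0, 1]

# Summarize the model
model.summary()

# Compile the model
model.compile(loss='mean_squared_error', optimizer='adam')

# Fit the model
model.fit(X_train, y_train, validation_data=(X_test, y_test),
          epochs=max_epoch, batch_size=batch, verbose=1)

# Predict the values and calculate the R2 score and RMSE
y_test_pred = model.predict(X_test)
y_train_pred = model.predict(X_train)
r2 = r2_score(y_test, y_test_pred)
# mean_squared_error returns the MSE, so take the square root to get the RMSE
rmse = np.sqrt(mean_squared_error(y_test, y_test_pred))
print("Performance Metrics R2 : {0:f}, RMSE : {1:f}".format(r2, rmse))
```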
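To make concrete what the two `Dense` layers save us from declaring, here is a NumPy sketch (not Keras code) of the same 3-20-1 forward pass with explicit weights and biases. The names `W1`, `b1`, `W2`, `b2` and `forward` are hypothetical, and the weights are random placeholders for illustration only; Keras creates, initializes, and trains its own variables. The parameter count it prints, 3 × 20 + 20 = 80 for the hidden layer plus 20 × 1 + 1 = 21 for the output layer, should match the total that `model.summary()` reports for this architecture.

```python
import numpy as np

# Layer sizes from the Keras model: n inputs -> n_hidden ReLU units -> 1 sigmoid output
n, n_hidden = 3, 20

rng = np.random.default_rng(0)
# Explicit weights and biases: the variables each Dense(...) layer creates for us.
# Random values here are placeholders, not Keras's actual initializers.
W1 = rng.normal(scale=0.1, size=(n, n_hidden))
b1 = np.zeros(n_hidden)
W2 = rng.normal(scale=0.1, size=(n_hidden, 1))
b2 = np.zeros(1)

def relu(z):
    return np.maximum(z, 0.0)

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def forward(X):
    """Same computation as Dense(n_hidden, 'relu') followed by Dense(1, 'sigmoid')."""
    hidden = relu(X @ W1 + b1)
    return sigmoid(hidden @ W2 + b2)

# Trainable parameters: 80 in the hidden layer + 21 in the output layer
n_params = W1.size + b1.size + W2.size + b2.size
print(n_params)  # 101

X_demo = rng.random((5, n))  # five hypothetical samples with 3 features each
out = forward(X_demo)
print(out.shape)  # (5, 1)
```

Each `Dense` layer is exactly this affine map followed by an activation; Keras simply owns the variables and wires them into training.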

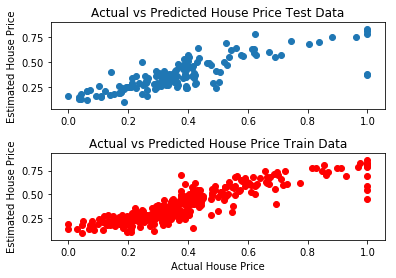

The preceding code gives the following result between predicted and actual values. We can see that the result can be improved by removing the outliers (some houses with max price irrespective of other parameters, the points at the right extreme):
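One way to try the outlier removal is sketched below, assuming the capped houses are exactly those whose target equals the largest value in `y`. The helper `drop_capped_targets` and the toy data are hypothetical. In practice you would apply such a filter to the full dataset before splitting it into training and test sets and before scaling, so that both sets are treated consistently.

```python
import numpy as np

def drop_capped_targets(X, y, cap=None):
    """Remove samples whose target sits at the cap (by default, the maximum of y).

    Hypothetical helper: assumes capped samples are exactly those with y == max(y).
    """
    X = np.asarray(X)
    y = np.asarray(y).ravel()
    if cap is None:
        cap = y.max()
    keep = ~np.isclose(y, cap)  # boolean mask of rows to keep
    return X[keep], y[keep]

# Toy data: the last two targets are at the cap of 50.0
X = np.array([[0.1, 0.2, 0.3],
              [0.4, 0.5, 0.6],
              [0.7, 0.8, 0.9],
              [0.2, 0.1, 0.0]])
y = np.array([21.5, 34.0, 50.0, 50.0])

X_clean, y_clean = drop_capped_targets(X, y)
print(X_clean.shape)  # (2, 3)
print(y_clean)
```

Matching on the maximum value is a simple heuristic; a different outlier criterion (for example, residual-based) may suit other datasets better.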