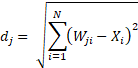

To implement a SOM, let's first understand how it works. As the first step, the weights of the network are initialized, either to random values or by taking random samples from the input. Each neuron occupies a specific location in the lattice. When an input is presented, the neuron whose weight vector is closest to the input is declared the winner, also called the winner-take-all unit (WTU). The winner is found by measuring the distance between the weight vector (W) of every neuron and the input vector (X); with the Euclidean distance, a common choice, this is:

$$d_j = \sqrt{\sum_{i=1}^{N} (W_{ji} - X_i)^2}$$

Here, $d_j$ is the distance of the weights of neuron $j$ from the input X, and $N$ is the dimension of the input. The neuron with the minimum $d_j$ is the winner.
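The initialization and winner search can be sketched in plain Python. This is a minimal illustration, not a reference implementation: the helper names `init_weights`, `distance`, and `find_winner` are hypothetical, the lattice is assumed to be two-dimensional, and the distance is assumed to be Euclidean.

```python
import math
import random

def init_weights(rows, cols, dim, seed=0):
    """Give every lattice position (r, c) a random weight vector of length dim."""
    rng = random.Random(seed)
    return {(r, c): [rng.random() for _ in range(dim)]
            for r in range(rows) for c in range(cols)}

def distance(w, x):
    """Euclidean distance between a weight vector w and an input vector x."""
    return math.sqrt(sum((wi - xi) ** 2 for wi, xi in zip(w, x)))

def find_winner(weights, x):
    """Return the lattice position whose weight vector is closest to x."""
    return min(weights, key=lambda pos: distance(weights[pos], x))

# Hypothetical 3x3 lattice with 2-dimensional inputs.
weights = init_weights(rows=3, cols=3, dim=2)
x = [0.2, 0.9]
winner = find_winner(weights, x)
print("winner:", winner, "distance:", round(distance(weights[winner], x), 3))
```

Storing the weights in a dictionary keyed by lattice position keeps each neuron's location and weight vector together, which is convenient for the neighborhood computation later; an array library would be the usual choice for anything larger than a toy example.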

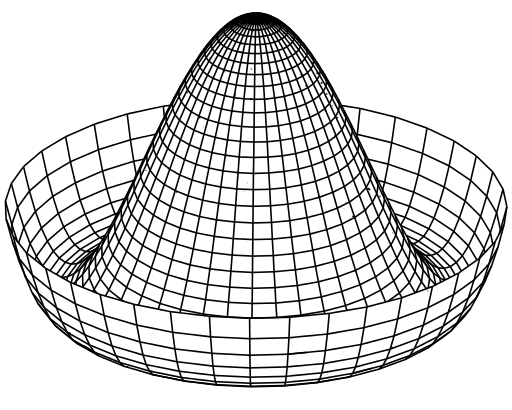

Next, the weights of the winning neuron and its neighboring neurons are adjusted so that the same neuron wins if the same input is presented again. To decide which neighboring neurons are modified, the network uses a neighborhood function Λ(r); normally, a Gaussian is chosen (the Mexican hat function is another option). The Gaussian neighborhood function is mathematically represented as follows:

$$\Lambda(r) = \exp\left(-\frac{d^2}{2\sigma^2}\right)$$

Here, σ is a time-dependent radius of influence of a neuron and d is its distance from the winning neuron.
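To see how σ controls the reach of the winner, the sketch below evaluates a Gaussian neighborhood along one row of a lattice. It assumes the usual form exp(-d²/(2σ²)) and takes d to be the distance between lattice positions (not between weight vectors); the function name `neighborhood` is hypothetical.

```python
import math

def neighborhood(pos, winner, sigma):
    """Gaussian neighborhood value of lattice position pos for a given winner."""
    # Squared distance between the two positions on the lattice (assumed 2-D).
    d_sq = (pos[0] - winner[0]) ** 2 + (pos[1] - winner[1]) ** 2
    return math.exp(-d_sq / (2.0 * sigma ** 2))

winner = (2, 2)
for sigma in (2.0, 0.5):
    # Influence on the five neurons in the winner's row.
    row = [round(neighborhood((2, c), winner, sigma), 3) for c in range(5)]
    print(f"sigma={sigma}: {row}")
```

With the larger σ, neurons two steps away still receive a substantial share of the update; with the smaller σ, the influence is concentrated almost entirely on the winner.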

Another important property of the neighborhood function is that its radius shrinks with time. As a result, many neighboring neurons' weights are modified in the beginning, but as the network learns, only a few neurons' weights (at times only one, or none) are modified. The change in weight is given by the following equation:

$$\Delta W = \eta \, \Lambda(r) \, (X - W)$$

Here, η is the learning rate.

We continue the process for all inputs and repeat it for a given number of iterations. As the iterations progress, we reduce the learning rate and the radius by a factor dependent on the iteration number.
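Putting the steps together, a complete training loop can be sketched as follows. The text only says that the learning rate and radius shrink by a factor that depends on the iteration number; the exponential schedule here, the default initial radius of half the lattice size, and all names (`train_som`, `eta0`, `sigma0`) are assumptions made for illustration.

```python
import math
import random

def train_som(data, rows, cols, n_iterations, eta0=0.5, sigma0=None, seed=0):
    """Train a small rows x cols SOM on data and return its weights."""
    rng = random.Random(seed)
    dim = len(data[0])
    if sigma0 is None:
        sigma0 = max(rows, cols) / 2.0  # assumed starting radius
    # Step 1: random initialization, one weight vector per lattice position.
    weights = {(r, c): [rng.random() for _ in range(dim)]
               for r in range(rows) for c in range(cols)}
    for t in range(n_iterations):
        # Assumed schedule: learning rate and radius decay with the iteration.
        decay = math.exp(-t / n_iterations)
        eta, sigma = eta0 * decay, sigma0 * decay
        for x in data:
            # Step 2: find the winner (squared distance gives the same argmin).
            winner = min(weights, key=lambda p: sum(
                (wi - xi) ** 2 for wi, xi in zip(weights[p], x)))
            # Step 3: update the winner and its neighbors.
            for pos, w in weights.items():
                d_sq = (pos[0] - winner[0]) ** 2 + (pos[1] - winner[1]) ** 2
                influence = math.exp(-d_sq / (2.0 * sigma ** 2))
                for i in range(dim):
                    w[i] += eta * influence * (x[i] - w[i])
    return weights

# Toy data: a few 2-dimensional points in the unit square.
data = [[0.1, 0.2], [0.15, 0.1], [0.9, 0.8], [0.85, 0.95]]
som = train_som(data, rows=3, cols=3, n_iterations=50)
for x in data:
    winner = min(som, key=lambda p: sum(
        (wi - xi) ** 2 for wi, xi in zip(som[p], x)))
    print(x, "->", winner)
```

After training, inputs that are close to each other tend to map to the same or neighboring lattice positions, which is the topology-preserving behavior a SOM is meant to produce.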