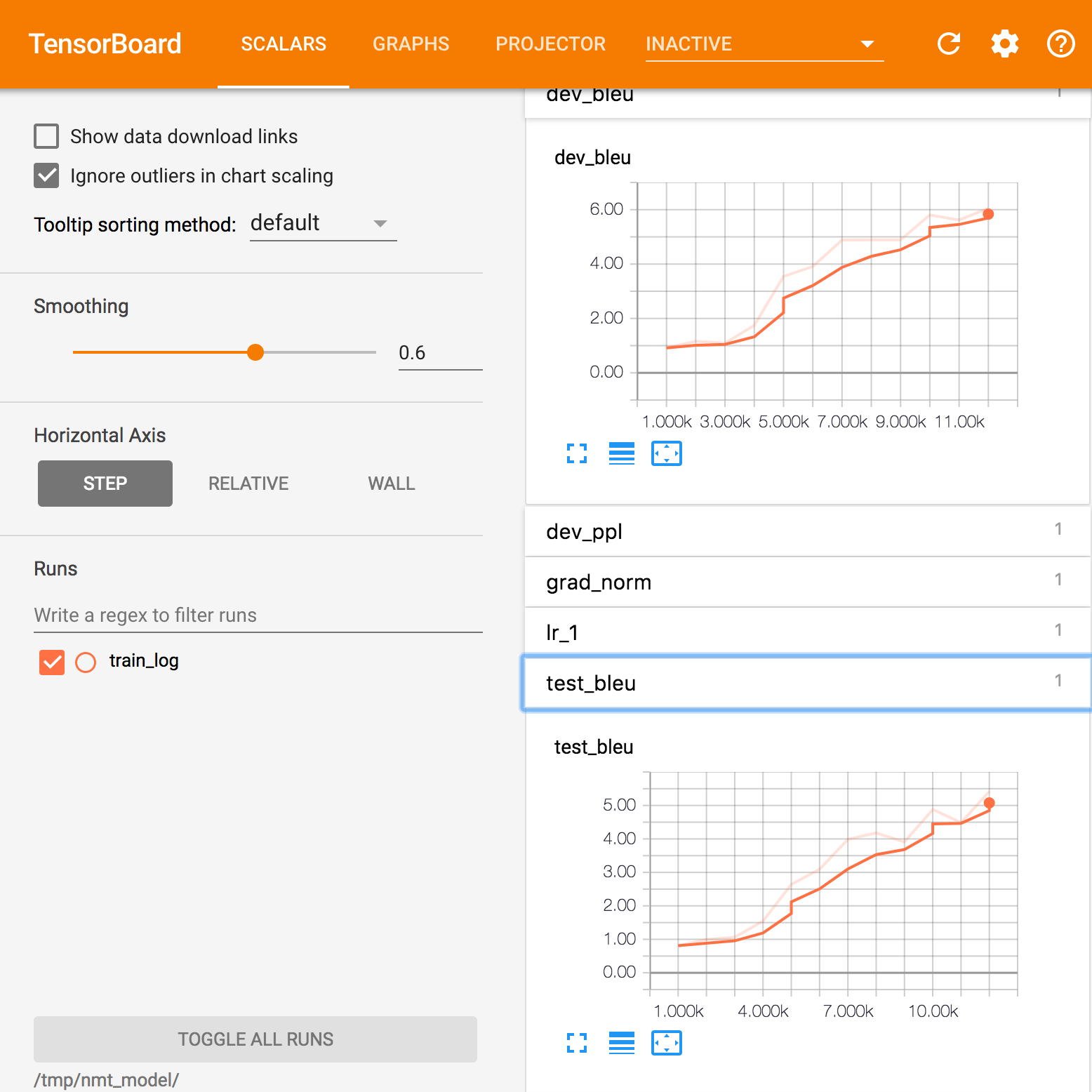

Attention is a mechanism that takes the information captured by the inner (hidden) states of the encoder RNN and combines it with the current state of the decoder. The key idea is that, at each decoding step, the decoder can pay more or less attention to specific tokens in the source sequence. The gain obtained with attention is shown in the following figure, which plots the BLEU score.
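To make the weighting idea concrete, here is a minimal sketch of one decoding step of dot-product (Luong-style) attention in plain Python. It is not the recipe's TensorFlow code: the scoring function (a plain dot product), the helper names `softmax` and `dot_product_attention`, and the toy vectors are all illustrative assumptions. Each encoder state is scored against the current decoder state, the scores are normalized into weights that sum to 1, and the weighted sum of encoder states gives the context vector.

```python
import math
from typing import List, Tuple

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max score before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot_product_attention(
    decoder_state: Vector, encoder_states: List[Vector]
) -> Tuple[List[float], Vector]:
    """Hypothetical helper: return (attention weights, context vector) for one step."""
    # Score each encoder state by its dot product with the current decoder state
    scores = [sum(d * e for d, e in zip(decoder_state, h)) for h in encoder_states]
    # Turn scores into weights: how much attention each source token receives
    weights = softmax(scores)
    # Context vector = attention-weighted sum of the encoder states
    dim = len(encoder_states[0])
    context = [sum(w * h[i] for w, h in zip(weights, encoder_states)) for i in range(dim)]
    return weights, context


# Toy example (made-up values): three source tokens with 2-dimensional encoder states
encoder_states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
decoder_state = [2.0, 0.0]
weights, context = dot_product_attention(decoder_state, encoder_states)
print([round(w, 3) for w in weights], [round(c, 3) for c in context])
```

Here the first and third source tokens align with the decoder state and receive most of the attention, while the second receives little. Real models typically learn the scoring function (for example, a trainable matrix or a small feed-forward network) instead of using a raw dot product.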

Compared with the corresponding plot in our first recipe, where no attention was used, we notice a significant gain:

An example of BLEU metrics with attention in TensorBoard