Let's see the expressions that we will need to make our RBM:

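Forward pass: The information at the visible units (V) is passed via the weights (W) and biases (c) to the hidden units (h0). Each hidden unit fires or not stochastically, with a firing probability given by the logistic sigmoid σ. The sigmoid is applied element-wise, and h0 is sampled from the resulting probabilities:

$$p(h_0 = 1 \mid V) = \sigma\left(W^{T} V + c\right), \qquad \sigma(x) = \frac{1}{1 + e^{-x}}$$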
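As a rough illustration, the forward pass can be sketched in plain Python as follows. This is a minimal sketch, not the implementation used to build the RBM. The helper names (`sigmoid`, `hidden_probs`, `sample_bernoulli`) and the toy weights are hypothetical, and the weights are assumed to be stored as a nested list `W[i][j]` that connects visible unit i to hidden unit j.

```python
import math
import random

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def hidden_probs(v, W, c):
    """p(h_j = 1 | v) = sigmoid(c_j + sum_i v_i * W[i][j]) for each hidden unit j."""
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]

def sample_bernoulli(probs, rng):
    """Turn each unit on (1) with its own probability, otherwise leave it off (0)."""
    return [1 if rng.random() < p else 0 for p in probs]

# Hypothetical toy RBM: 3 visible units, 2 hidden units.
W = [[0.5, -0.2],
     [0.1, 0.4],
     [-0.3, 0.2]]
c = [0.0, 0.1]   # hidden biases
v0 = [1, 0, 1]   # binary input vector

rng = random.Random(42)
p_h0 = hidden_probs(v0, W, c)     # firing probability of each hidden unit
h0 = sample_bernoulli(p_h0, rng)  # stochastic binary hidden state
print(p_h0, h0)
```

Because the hidden state is sampled, rerunning with a different seed can give a different `h0` for the same input, while `p_h0` stays fixed.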

Backward pass: The hidden representation (h0) is then passed back to the visible units through the same weights W, but with a different bias, b, to reconstruct the input. Again, the reconstruction is sampled:

$$p(V_1 = 1 \mid h_0) = \sigma\left(W h_0 + b\right)$$
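A matching sketch of the backward pass follows, under the same assumptions (nested-list `W[i][j]`, hypothetical helper names and toy values). The only changes from the forward pass are that the sum now runs over the hidden units and that the visible bias `b` is used instead of `c`.

```python
import math
import random

def sigmoid(x):
    """Logistic sigmoid: 1 / (1 + e^(-x))."""
    return 1.0 / (1.0 + math.exp(-x))

def visible_probs(h, W, b):
    """p(v_i = 1 | h) = sigmoid(b_i + sum_j W[i][j] * h_j): same W, visible bias b."""
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]

def sample_bernoulli(probs, rng):
    """Turn each unit on (1) with its own probability, otherwise leave it off (0)."""
    return [1 if rng.random() < p else 0 for p in probs]

# Hypothetical toy RBM: 3 visible units, 2 hidden units.
W = [[0.5, -0.2],
     [0.1, 0.4],
     [-0.3, 0.2]]
b = [0.0, 0.0, 0.0]  # visible biases (distinct from the hidden biases c)
h0 = [1, 0]          # a hidden state produced by the forward pass

rng = random.Random(7)
p_v1 = visible_probs(h0, W, b)     # reconstruction probabilities
v1 = sample_bernoulli(p_v1, rng)   # sampled reconstruction of the input
print(p_v1, v1)
```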

These two passes are repeated for k steps or until convergence is reached. In practice, k = 1 (often called CD-1) has been reported to give good results, so we will keep k = 1.
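The alternation of the two passes can be sketched as a short k-step chain. This is an illustrative sketch only: `gibbs_chain` and the other helpers are hypothetical names, binary samples are carried between steps, and the toy weights are arbitrary.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def sample_bernoulli(probs, rng):
    return [1 if rng.random() < p else 0 for p in probs]

def hidden_probs(v, W, c):
    return [sigmoid(c[j] + sum(v[i] * W[i][j] for i in range(len(v))))
            for j in range(len(c))]

def visible_probs(h, W, b):
    return [sigmoid(b[i] + sum(W[i][j] * h[j] for j in range(len(h))))
            for i in range(len(b))]

def gibbs_chain(v0, W, b, c, k, rng):
    """Alternate forward and backward passes k times, starting from the input v0.

    Returns (h0, vk, hk): the first hidden sample, then the visible and
    hidden samples after k backward/forward steps.
    """
    h0 = sample_bernoulli(hidden_probs(v0, W, c), rng)  # initial forward pass
    v, h = v0, h0
    for _ in range(k):
        v = sample_bernoulli(visible_probs(h, W, b), rng)  # backward pass
        h = sample_bernoulli(hidden_probs(v, W, c), rng)   # forward pass
    return h0, v, h

# Hypothetical toy RBM: 3 visible units, 2 hidden units.
W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
b = [0.0, 0.0, 0.0]
c = [0.0, 0.1]
v0 = [1, 0, 1]

h0, v1, h1 = gibbs_chain(v0, W, b, c, k=1, rng=random.Random(0))  # CD-1
print(h0, v1, h1)
```

With `k=1`, the loop body runs once, which is exactly the single forward/backward round trip used for CD-1.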

The joint configuration of the visible vector V and the hidden vector h has an energy given as follows:

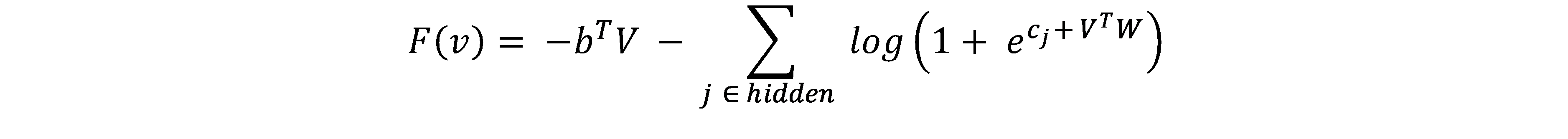
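For a binary RBM, the standard energy is $E(V, h) = -b^{T}V - c^{T}h - V^{T}W h$, which we assume is the form shown in the image. The sketch below evaluates it term by term on hypothetical toy values; `energy` is an illustrative name, not a library function.

```python
def energy(v, h, W, b, c):
    """E(v, h) = -sum_i b_i v_i - sum_j c_j h_j - sum_{i,j} v_i W[i][j] h_j."""
    visible_term = sum(b_i * v_i for b_i, v_i in zip(b, v))
    hidden_term = sum(c_j * h_j for c_j, h_j in zip(c, h))
    interaction = sum(v[i] * W[i][j] * h[j]
                      for i in range(len(v)) for j in range(len(h)))
    return -visible_term - hidden_term - interaction

# Hypothetical toy RBM: 3 visible units, 2 hidden units.
W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
b = [0.1, -0.1, 0.2]  # visible biases
c = [0.0, 0.1]        # hidden biases
v = [1, 0, 1]
h = [1, 0]

E = energy(v, h, W, b, c)
print(E)
```

Here only the weights between active units contribute to the interaction term (0.5 and -0.3), so the energy works out to -(0.1 + 0.2) - 0 - (0.5 - 0.3) = -0.5. Lower energy means a more probable configuration.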

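Also associated with each visible vector V is a free energy F(V): the energy that a single configuration would need in order to have the same probability as all of the configurations that contain V combined, that is, $e^{-F(V)} = \sum_{h} e^{-E(V, h)}$. For binary hidden units this sum has a closed form:

$$F(V) = -b^{T}V - \sum_{j} \log\left(1 + e^{\,c_j + (W^{T}V)_j}\right)$$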
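To make the definition concrete, the sketch below computes the free energy in closed form and checks it against the definition by brute-force summation over every binary hidden vector. The function names and toy values are hypothetical, and brute force is only feasible here because the toy model has two hidden units.

```python
import itertools
import math

def energy(v, h, W, b, c):
    """E(v, h) = -b.v - c.h - v^T W h for binary units."""
    return (-sum(bi * vi for bi, vi in zip(b, v))
            - sum(cj * hj for cj, hj in zip(c, h))
            - sum(v[i] * W[i][j] * h[j]
                  for i in range(len(v)) for j in range(len(h))))

def free_energy(v, W, b, c):
    """Closed form: F(v) = -b.v - sum_j log(1 + exp(c_j + sum_i v_i W[i][j]))."""
    visible_term = sum(bi * vi for bi, vi in zip(b, v))
    softplus_terms = sum(
        math.log1p(math.exp(c[j] + sum(v[i] * W[i][j] for i in range(len(v)))))
        for j in range(len(c)))
    return -visible_term - softplus_terms

def free_energy_brute_force(v, W, b, c):
    """Definition: exp(-F(v)) = sum over all binary hidden vectors h of exp(-E(v, h))."""
    total = sum(math.exp(-energy(v, h, W, b, c))
                for h in itertools.product((0, 1), repeat=len(c)))
    return -math.log(total)

# Hypothetical toy RBM: 3 visible units, 2 hidden units.
W = [[0.5, -0.2], [0.1, 0.4], [-0.3, 0.2]]
b = [0.1, -0.1, 0.2]
c = [0.0, 0.1]
v = [1, 0, 1]

print(free_energy(v, W, b, c), free_energy_brute_force(v, W, b, c))
```

The closed form avoids the sum over all $2^{n_h}$ hidden vectors, which is why it is the version used in the training objective.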

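Using the contrastive divergence objective function, that is, $\operatorname{Mean}(F(V_0)) - \operatorname{Mean}(F(V_k))$, where $V_0$ is the original input, $V_k$ is its reconstruction after k steps, and the means are taken over the training mini-batch, the change in weights is given by:

$$\Delta W = \eta\left[\operatorname{Mean}\left(V_0\, h_0^{T}\right) - \operatorname{Mean}\left(V_k\, h_k^{T}\right)\right]$$

Here $h_0$ and $h_k$ are the hidden activations computed from $V_0$ and $V_k$.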
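The weight update can be sketched as the difference between the mean "positive" statistics, computed from the data, and the mean "negative" statistics, computed from the reconstruction. In this sketch, `weight_update` is a hypothetical helper and the batches are toy values. Whether `h0`/`hk` hold binary samples or sigmoid probabilities is left to the caller. Using probabilities is a common choice because it reduces sampling noise in the update.

```python
def weight_update(v0_batch, h0_batch, vk_batch, hk_batch, eta):
    """Delta W[i][j] = eta * (Mean(v0_i * h0_j) - Mean(vk_i * hk_j)) over the mini-batch."""
    n = len(v0_batch)
    n_visible, n_hidden = len(v0_batch[0]), len(h0_batch[0])
    dW = [[0.0] * n_hidden for _ in range(n_visible)]
    for v0, h0, vk, hk in zip(v0_batch, h0_batch, vk_batch, hk_batch):
        for i in range(n_visible):
            for j in range(n_hidden):
                # positive (data) statistic minus negative (reconstruction) statistic
                dW[i][j] += v0[i] * h0[j] - vk[i] * hk[j]
    return [[eta * x / n for x in row] for row in dW]

# Hypothetical mini-batch of two examples: 2 visible units, 2 hidden units.
v0_batch = [[1, 0], [1, 1]]
h0_batch = [[1, 0], [1, 1]]
vk_batch = [[0, 0], [1, 0]]
hk_batch = [[0, 0], [1, 0]]

dW = weight_update(v0_batch, h0_batch, vk_batch, hk_batch, eta=0.1)
print(dW)
```

The update is added to `W`: it strengthens correlations present in the data and weakens those the model produces on its own, which lowers the free energy of the inputs relative to the reconstructions.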

Here, η is the learning rate. Similar expressions exist for the biases b and c.
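Differentiating the free energy gives $\partial F/\partial b = -V$ and $\partial F/\partial c_j = -p(h_j = 1 \mid V)$. This leads to the bias updates $\Delta b = \eta[\operatorname{Mean}(V_0) - \operatorname{Mean}(V_k)]$ and $\Delta c = \eta[\operatorname{Mean}(h_0) - \operatorname{Mean}(h_k)]$. The sketch below implements these two updates. `bias_updates` is a hypothetical helper, and the hidden values are assumed to be sigmoid probabilities.

```python
def bias_updates(v0_batch, h0_batch, vk_batch, hk_batch, eta):
    """Return (delta_b, delta_c) for the visible bias b and the hidden bias c.

    delta_b = eta * (Mean(v0) - Mean(vk)),  delta_c = eta * (Mean(h0) - Mean(hk)).
    """
    n = len(v0_batch)
    delta_b = [eta * sum(v0[i] - vk[i] for v0, vk in zip(v0_batch, vk_batch)) / n
               for i in range(len(v0_batch[0]))]
    delta_c = [eta * sum(h0[j] - hk[j] for h0, hk in zip(h0_batch, hk_batch)) / n
               for j in range(len(h0_batch[0]))]
    return delta_b, delta_c

# Hypothetical single-example batch: 3 visible units, 2 hidden units.
v0_batch = [[1, 0, 1]]    # original input
vk_batch = [[0, 0, 1]]    # reconstruction after k steps
h0_batch = [[0.8, 0.2]]   # hidden probabilities for the input
hk_batch = [[0.5, 0.5]]   # hidden probabilities for the reconstruction

delta_b, delta_c = bias_updates(v0_batch, h0_batch, vk_batch, hk_batch, eta=0.1)
print(delta_b, delta_c)
```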