In this chapter, we will discuss how Recurrent Neural Networks (RNNs) are used for deep learning in domains where sequential order matters. We will focus mainly on text analysis and natural language processing (NLP), but we will also see examples of sequences used to predict the price of Bitcoin.

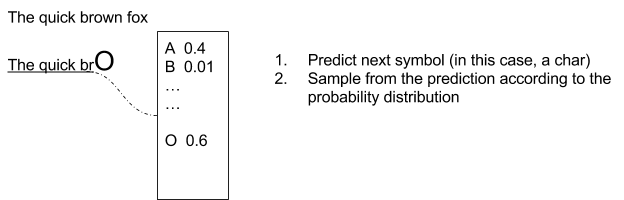

Many real-life situations can be described with a model based on temporal sequences. For instance, when you write a document, the order of words is important and the current word depends on the previous ones. Staying with text, the next character in a word also depends on the previous characters (for example, after "The quick brown f..." there is a very high probability that the next letter will be "o"), as illustrated in the following figure. The key idea is to produce a distribution over the next characters given the current context, and then to sample from that distribution to produce the next candidate character:
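To make the "produce a distribution, then sample" idea concrete, here is a minimal sketch. It does not use an RNN: as a stand-in, it estimates the next-character distribution by counting which character follows each three-character context in a tiny corpus. The corpus and the helper names (`build_model`, `next_char_distribution`, `sample_next_char`) are assumptions made for illustration only.

```python
import random
from collections import Counter, defaultdict

def build_model(text, order=3):
    """Count which character follows each `order`-character context."""
    counts = defaultdict(Counter)
    for i in range(len(text) - order):
        context = text[i:i + order]
        counts[context][text[i + order]] += 1
    return counts

def next_char_distribution(counts, context):
    """Turn the raw follower counts for a context into probabilities."""
    followers = counts.get(context, Counter())
    total = sum(followers.values())
    return {ch: n / total for ch, n in followers.items()}

def sample_next_char(distribution, rng):
    """Draw one character according to its probability."""
    chars = list(distribution)
    weights = [distribution[ch] for ch in chars]
    return rng.choices(chars, weights=weights, k=1)[0]

# Toy corpus (an assumption for this example, not real training data).
corpus = "the quick brown fox jumps over the lazy dog. the quick brown fox naps."
model = build_model(corpus, order=3)

certain = next_char_distribution(model, "n f")    # only "o" ever follows "n f"
ambiguous = next_char_distribution(model, "ox ")  # "j" and "n" each follow once

rng = random.Random(0)
print(certain, ambiguous, sample_next_char(ambiguous, rng))
```

In an RNN-based model, the counting step would be replaced by a network that outputs the probabilities, while the sampling step would stay essentially the same.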

A simple variant is to store more than one prediction and therefore create a tree of possible expansions as illustrated in the following figure:
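The tree of expansions can be sketched in the same spirit. The example below keeps the `width` most frequent next characters at each step and expands each of them recursively. Again, a simple count-based model stands in for the network, and the corpus, the function names, and the `width` and `depth` parameters are hypothetical choices for this illustration.

```python
from collections import Counter, defaultdict

def build_model(text, order=2):
    """Count which character follows each `order`-character context."""
    counts = defaultdict(Counter)
    for i in range(len(text) - order):
        counts[text[i:i + order]][text[i + order]] += 1
    return counts

def expand(counts, prefix, order=2, width=2, depth=3):
    """Return a nested dict: each kept character maps to its own sub-tree."""
    if depth == 0:
        return {}
    followers = counts.get(prefix[-order:], Counter())
    tree = {}
    # Keep only the `width` most frequent candidates at this level.
    for ch, _ in followers.most_common(width):
        tree[ch] = expand(counts, prefix + ch, order, width, depth - 1)
    return tree

# Toy corpus (an assumption for this example).
corpus = "the quick brown fox jumps over the lazy dog. the quick brown fox naps."
model = build_model(corpus)
tree = expand(model, "fox", depth=3)
print(tree)
```

Starting from "fox", the only observed continuation is a space, after which the tree branches into "j" (toward "jumps") and "n" (toward "naps").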

However, a model based on sequences can be used in many other domains. In music, the next note in a composition depends on the previous notes, and in a video, the next frame is related to the previous frames. Moreover, in certain situations, the current video frame, word, character, or musical note depends not only on the previous elements but also on the following ones.

A model based on a temporal sequence can be described with an RNN where, for a given input X_i at time i producing the output Y_i, a memory of the previous states at times 0 through i-1 is fed back to the network. This idea of feeding back previous states is depicted by a recurrent loop, as shown in the following figures:

The recurrent relation can be conveniently expressed by unfolding the network, as illustrated in the following figure:
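Written as equations, the unfolding amounts to substituting the recurrence into itself. The notation here is an assumption introduced for illustration and does not come from the original text: h_i denotes the state at time i, h_0 an initial state, f the state update function, and g the output function.

```latex
\begin{aligned}
h_i &= f(h_{i-1}, X_i), \qquad Y_i = g(h_i) \\
h_1 &= f(h_0, X_1) \\
h_2 &= f(h_1, X_2) = f\bigl(f(h_0, X_1), X_2\bigr) \\
h_3 &= f(h_2, X_3) = f\bigl(f\bigl(f(h_0, X_1), X_2\bigr), X_3\bigr)
\end{aligned}
```

Each unfolded step applies the same function f with the same parameters, which is why the state at time i carries information about all inputs from time 1 to time i.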

The simplest RNN cell applies a single tanh (hyperbolic tangent) function to a combination of the current input and the previous state, as represented in the following figures:

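As a rough sketch of such a cell, the NumPy code below computes a new state as the tanh of a weighted sum of the current input and the previous state, and then unfolds the cell over a short sequence. The weight names (`W_x`, `W_h`, `b`), the sizes, and the random initialization are assumptions chosen for illustration. No training is performed and no output layer is included.

```python
import numpy as np

def rnn_step(x, h_prev, W_x, W_h, b):
    """One step of a tanh cell: new state from current input and previous state."""
    return np.tanh(W_x @ x + W_h @ h_prev + b)

def run_rnn(inputs, h0, W_x, W_h, b):
    """Unfold the cell over a sequence, feeding each state into the next step."""
    h = h0
    states = []
    for x in inputs:
        h = rnn_step(x, h, W_x, W_h, b)
        states.append(h)
    return np.stack(states)

# Hypothetical sizes and randomly initialized weights, for illustration only.
rng = np.random.default_rng(0)
input_size, hidden_size, steps = 4, 3, 5
W_x = rng.normal(scale=0.5, size=(hidden_size, input_size))
W_h = rng.normal(scale=0.5, size=(hidden_size, hidden_size))
b = np.zeros(hidden_size)

inputs = rng.normal(size=(steps, input_size))
states = run_rnn(inputs, np.zeros(hidden_size), W_x, W_h, b)
print(states.shape)  # (5, 3): one state vector per time step
```

Because the same `W_x`, `W_h`, and `b` are reused at every step, the loop in `run_rnn` is the code counterpart of the unfolded network.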