All the preceding code is defined in https://github.com/tensorflow/nmt/blob/master/nmt/model.py. The key idea is to chain two RNNs together. The first one, the encoder, reads the source sentence after its words have been mapped into an embedding space where similar words lie close to each other. From this sequence of embeddings, the encoder produces a tensor that summarizes the meaning of the source sentence. This tensor is handed to the decoder simply by using the final hidden state of the encoder as the initial state of the decoder. Note that learning happens through the loss function, a cross-entropy computed with labels=decoder_outputs, that is, the target words the decoder is expected to emit at each step.

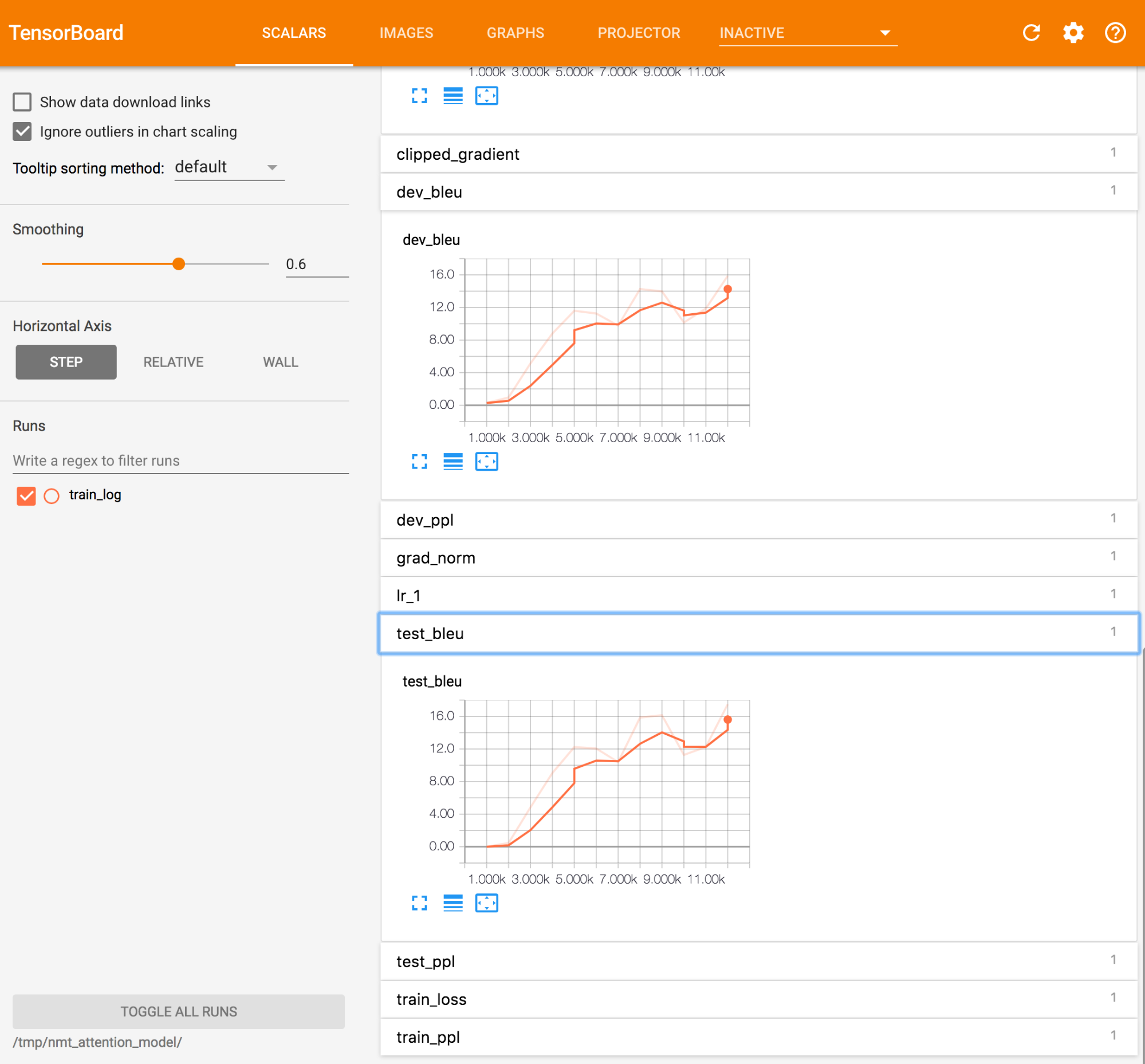
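To make the data flow concrete, here is a minimal NumPy sketch of the same encoder-decoder idea. It is not the code in `model.py`, which uses TensorFlow RNN cells and `tf.nn.sparse_softmax_cross_entropy_with_logits`. The vanilla RNN cells, the toy sizes, the random untrained weights, and the token ids below are all assumptions made for illustration. The only link between the two RNNs is `encoder_state`, and the loss compares the decoder's logits against `decoder_output_ids`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical toy sizes, chosen only for illustration.
SRC_VOCAB, TGT_VOCAB, EMB, HIDDEN = 10, 12, 8, 16

# Separate embedding tables for source and target words.
src_embedding = rng.normal(scale=0.1, size=(SRC_VOCAB, EMB))
tgt_embedding = rng.normal(scale=0.1, size=(TGT_VOCAB, EMB))

def init_rnn():
    """Weights of a vanilla RNN cell: h_t = tanh(x_t W + h_{t-1} U + b)."""
    return (rng.normal(scale=0.1, size=(EMB, HIDDEN)),
            rng.normal(scale=0.1, size=(HIDDEN, HIDDEN)),
            np.zeros(HIDDEN))

encoder_cell = init_rnn()
decoder_cell = init_rnn()
W_out = rng.normal(scale=0.1, size=(HIDDEN, TGT_VOCAB))  # projects states to target-vocab logits

def run_rnn(cell, inputs, h0):
    """Run the cell over a sequence of embeddings and return every hidden state."""
    W, U, b = cell
    h, states = h0, []
    for x in inputs:
        h = np.tanh(x @ W + h @ U + b)
        states.append(h)
    return np.stack(states)

def seq2seq_loss(src_ids, decoder_input_ids, decoder_output_ids):
    # Encoder: embed the source words and keep only the final hidden state.
    enc_states = run_rnn(encoder_cell, src_embedding[src_ids], np.zeros(HIDDEN))
    encoder_state = enc_states[-1]
    # Decoder: starts from the encoder's final state (the only link between the RNNs).
    dec_states = run_rnn(decoder_cell, tgt_embedding[decoder_input_ids], encoder_state)
    logits = dec_states @ W_out  # shape (target_length, TGT_VOCAB)
    # Numerically stable log-softmax, then cross-entropy with labels = decoder outputs.
    logits = logits - logits.max(axis=1, keepdims=True)
    log_probs = logits - np.log(np.exp(logits).sum(axis=1, keepdims=True))
    steps = np.arange(len(decoder_output_ids))
    return -log_probs[steps, decoder_output_ids].mean()

# Hypothetical token ids; assume 1 = <s> and 2 = </s> in the target vocabulary.
src = np.array([3, 5, 7])
tgt_in = np.array([1, 4, 6, 8])   # decoder inputs:  <s> w1 w2 w3
tgt_out = np.array([4, 6, 8, 2])  # decoder outputs: w1 w2 w3 </s>
print(seq2seq_loss(src, tgt_in, tgt_out))
```

The decoder outputs are the decoder inputs shifted left by one position, so at every step the model is trained to predict the next target word. With small random weights the loss sits near log(12), the value for a uniform guess over the toy target vocabulary; training would push it down.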

The model learns to translate, and its progress over training iterations is tracked with the BLEU metric, as shown in the following figure:

An example of the BLEU metric in TensorBoard
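BLEU scores a candidate translation by its clipped n-gram precision against a reference, multiplied by a brevity penalty for candidates that are too short. The sketch below is a simplified, unsmoothed, sentence-level version with a single reference, written only to illustrate the formula. The nmt code computes corpus-level BLEU with its own evaluation script, so its numbers will not match this sketch exactly. The helper names `ngrams` and `sentence_bleu` are hypothetical.

```python
import math
from collections import Counter

def ngrams(tokens, n):
    """Count every n-gram (as a tuple) in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def sentence_bleu(reference, candidate, max_n=4):
    """Unsmoothed BLEU of one candidate against one reference (both token lists)."""
    log_precision_sum = 0.0
    for n in range(1, max_n + 1):
        cand_counts = ngrams(candidate, n)
        ref_counts = ngrams(reference, n)
        # Modified precision: clip each candidate n-gram count by its count in the reference.
        overlap = sum(min(c, ref_counts[g]) for g, c in cand_counts.items())
        total = sum(cand_counts.values())
        if overlap == 0 or total == 0:
            return 0.0  # without smoothing, any zero precision makes BLEU zero
        log_precision_sum += math.log(overlap / total)
    # Brevity penalty: 1 if the candidate is longer than the reference, else exp(1 - r/c).
    c, r = len(candidate), len(reference)
    bp = 1.0 if c > r else math.exp(1 - r / c)
    # Geometric mean of the n-gram precisions, scaled by the brevity penalty.
    return bp * math.exp(log_precision_sum / max_n)

ref = "the cat is on the mat".split()
print(sentence_bleu(ref, ref))                                   # → 1.0
print(round(sentence_bleu(ref, "the cat is on mat".split()), 3))  # → 0.579
```

In the second call the dropped "the" costs both n-gram precision (precisions 1, 3/4, 2/3, 1/2) and a brevity penalty of exp(-0.2), which is why the score falls well below 1 even though every word of the candidate appears in the reference.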