Meta-learning systems are trained on a large number of tasks and are then tested on their ability to learn new tasks. A well-known example of this kind of meta-learning is transfer learning, discussed in the chapter on advanced CNNs, where networks can successfully learn new image-based tasks from relatively small datasets. However, there is no analogous pre-training scheme for non-vision domains such as speech, language, and text.

Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks, Chelsea Finn, Pieter Abbeel, Sergey Levine, 2017, https://arxiv.org/abs/1703.03400, proposes a model-agnostic approach named MAML, which is compatible with any model trained with gradient descent and applicable to a variety of learning problems, including classification, regression, and reinforcement learning. The goal of meta-learning is to train a model on a variety of learning tasks so that it can solve new learning tasks using only a small number of training samples. The meta-learner aims to find an initialization that adapts to new problems quickly (in a small number of gradient steps) and efficiently (using only a few examples). Consider a model represented by a parametrized function $f_\theta$ with parameters $\theta$. When adapting to a new task $T_i$, the model's parameters $\theta$ become $\theta_i'$. In MAML, the updated parameter vector $\theta_i'$ is computed using one or more gradient descent updates on task $T_i$.

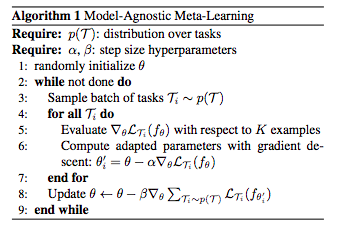

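For example, when using one gradient update, $\theta_i' = \theta - \alpha \nabla_\theta \mathcal{L}_{T_i}(f_\theta)$, where $\mathcal{L}_{T_i}$ is the loss function for task $T_i$ and $\alpha$ is the step size, which can be fixed as a hyperparameter or meta-learned. The MAML algorithm is reported in this figure: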

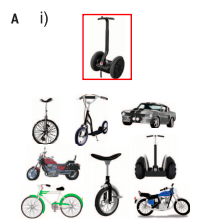
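To make the two nested updates concrete, here is a minimal sketch in plain Python. It is not the authors' implementation: it assumes a toy scalar model f(x) = theta * x with a mean squared error loss, so that the gradient through the inner step can be written analytically instead of relying on an automatic differentiation library. The helper names (`adapt`, `meta_step`, `make_task`), the three hypothetical regression tasks, and the step sizes `alpha` and `beta` are all illustrative assumptions.

```python
def loss(theta, xs, ys):
    """Mean squared error of the scalar model f_theta(x) = theta * x."""
    return sum((theta * x - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def grad(theta, xs, ys):
    """Analytic derivative of the loss with respect to theta."""
    return sum(2 * x * (theta * x - y) for x, y in zip(xs, ys)) / len(xs)


def curvature(xs):
    """Second derivative of the loss with respect to theta (constant here)."""
    return sum(2 * x * x for x in xs) / len(xs)


def adapt(theta, xs, ys, alpha):
    """Inner step on one task: theta_i' = theta - alpha * dL_Ti/dtheta."""
    return theta - alpha * grad(theta, xs, ys)


def meta_step(theta, tasks, alpha, beta):
    """Outer step: descend the sum of post-adaptation query losses."""
    meta_grad = 0.0
    for support_x, support_y, query_x, query_y in tasks:
        theta_i = adapt(theta, support_x, support_y, alpha)
        # Chain rule through the inner step:
        # d(theta_i)/d(theta) = 1 - alpha * (second derivative of support loss).
        inner_jacobian = 1 - alpha * curvature(support_x)
        meta_grad += grad(theta_i, query_x, query_y) * inner_jacobian
    return theta - beta * meta_grad


def make_task(slope):
    """Hypothetical task: regress y = slope * x from two support points."""
    support_x, query_x = [1.0, 2.0], [0.5, 1.5]
    return (support_x, [slope * x for x in support_x],
            query_x, [slope * x for x in query_x])


tasks = [make_task(s) for s in (1.0, 2.0, 3.0)]
alpha, beta = 0.1, 0.05  # inner and outer step sizes (assumed values)

theta = 0.0
for _ in range(200):
    theta = meta_step(theta, tasks, alpha, beta)

print(f"meta-learned initialization: {theta:.4f}")
```

In this toy setting the initialization settles near the mean slope of the three tasks, which is the point from which a single inner step reaches each task's slope with the smallest total query loss. With a real network the derivative through the inner step involves second-order terms, which is why practical implementations rely on automatic differentiation or on a first-order approximation.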

MAML was reported to substantially outperform a number of existing approaches on popular few-shot image classification benchmarks. Few-shot image classification is a challenging problem that aims at learning new concepts from one or a few instances of that concept. As an example, Human-level concept learning through probabilistic program induction, Brenden M. Lake, Ruslan Salakhutdinov, Joshua B. Tenenbaum, 2015, https://www.cs.cmu.edu/~rsalakhu/papers/LakeEtAl2015Science.pdf, suggested that humans can learn to identify novel two-wheel vehicles from a single picture such as the one contained in a red box as follows:

At the end of 2017, AutoML (or meta-learning) is an active research topic that aims at automatically selecting the most efficient neural network for a given learning task. The goal is to learn how to design networks efficiently and automatically so that they, in turn, can learn specific tasks or adapt to new tasks. The main problem is that designing a network cannot simply be described with a differentiable loss function, and therefore traditional optimization techniques cannot simply be adopted for meta-learning. A few solutions have therefore been proposed, including a controller recurrent neural network (RNN) trained with a reward policy based on reinforcement learning, and model-agnostic meta-learning. Both approaches are very promising, but there is certainly still a lot of room for research.
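The reinforcement learning idea can be illustrated with a deliberately small sketch. It is not Google's controller: instead of an RNN that emits a full architecture, it assumes a single softmax policy over one hypothetical design decision (the number of layers), and instead of training a child network it reads the reward from a made-up table standing in for validation accuracy. The names `CHOICES`, `REWARD`, and `train_controller` are illustrative. What it does show is how a REINFORCE-style policy gradient can improve the controller even though the reward is not differentiable with respect to the architecture.

```python
import math
import random


def softmax(logits):
    """Convert controller logits into sampling probabilities."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


# Hypothetical search space: the number of layers in the child network.
CHOICES = [1, 2, 4, 8]
# Made-up rewards standing in for the validation accuracy of each child.
REWARD = {1: 0.2, 2: 0.5, 4: 0.9, 8: 0.6}


def train_controller(steps=5000, lr=0.1, seed=0):
    """Train the softmax policy with REINFORCE and a moving-average baseline."""
    rng = random.Random(seed)
    logits = [0.0] * len(CHOICES)
    baseline = 0.0
    for _ in range(steps):
        probs = softmax(logits)
        # Sample an architecture decision from the current policy.
        idx = rng.choices(range(len(CHOICES)), weights=probs)[0]
        reward = REWARD[CHOICES[idx]]
        advantage = reward - baseline
        # The gradient of log pi(idx) w.r.t. logit k is 1[k == idx] - probs[k].
        for k in range(len(logits)):
            indicator = 1.0 if k == idx else 0.0
            logits[k] += lr * advantage * (indicator - probs[k])
        # Update the baseline after using it, to reduce gradient variance.
        baseline = 0.9 * baseline + 0.1 * reward
    return softmax(logits)


probs = train_controller()
best = CHOICES[max(range(len(CHOICES)), key=lambda k: probs[k])]
print("controller probabilities:", [round(p, 3) for p in probs])
print("preferred number of layers:", best)
```

Over training, the policy should concentrate its probability on the choice with the highest reward. In a real architecture search each reward requires training and evaluating a child network, which is what makes the approach computationally expensive.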

If you are interested in a hot topic, learning to learn for deep learning is certainly a space to consider for your next job.

- Google proposed the adoption of RNNs for the controller in Using Machine Learning to Explore Neural Network Architecture; Quoc Le & Barret Zoph, 2017, https://research.googleblog.com/2017/05/using-machine-learning-to-explore.html.

- Neural Architecture Search with Reinforcement Learning, Barret Zoph, Quoc V. Le, 2016, https://arxiv.org/abs/1611.01578, is a seminal paper providing more details about Google's approach. However, RNNs are not the only option.

- Large-Scale Evolution of Image Classifiers, Esteban Real, Sherry Moore, Andrew Selle, Saurabh Saxena, Yutaka Leon Suematsu, Jie Tan, Quoc Le, Alex Kurakin, 2017, https://arxiv.org/abs/1703.01041, proposed using evolutionary computing, where intuitive mutation operations are explored to generate new candidate networks.

- Learning Transferable Architectures for Scalable Image Recognition, Barret Zoph, Vijay Vasudevan, Jonathon Shlens, Quoc V. Le, 2017, https://arxiv.org/abs/1707.07012, proposed the idea of cells learned on CIFAR-10 and then used to improve ImageNet classification.

- Building A.I. That Can Build A.I.: Google and others, fighting for a small pool of researchers, are looking for automated ways to deal with a shortage of artificial intelligence experts, The New York Times, 2017, https://www.nytimes.com/2017/11/05/technology/machine-learning-artificial-intelligence-ai.html.

- Model-Agnostic Meta-Learning for Fast Adaptation of Deep Networks, Chelsea Finn, Pieter Abbeel, Sergey Levine, 2017, https://arxiv.org/abs/1703.03400.

- Learning to Learn by Gradient Descent by Gradient Descent, Marcin Andrychowicz, Misha Denil, Sergio Gomez, Matthew W. Hoffman, David Pfau, Tom Schaul, Brendan Shillingford, Nando de Freitas, 2016, https://arxiv.org/abs/1606.04474, shows how the design of an optimization algorithm can be cast as a learning problem, allowing the algorithm to learn to exploit structure in the problems of interest in an automatic way. The learned algorithms, implemented by LSTMs, outperform hand-designed competitors on the tasks for which they are trained, and also generalize well to new tasks with similar structure. The code for this algorithm is available on GitHub at https://github.com/deepmind/learning-to-learn.