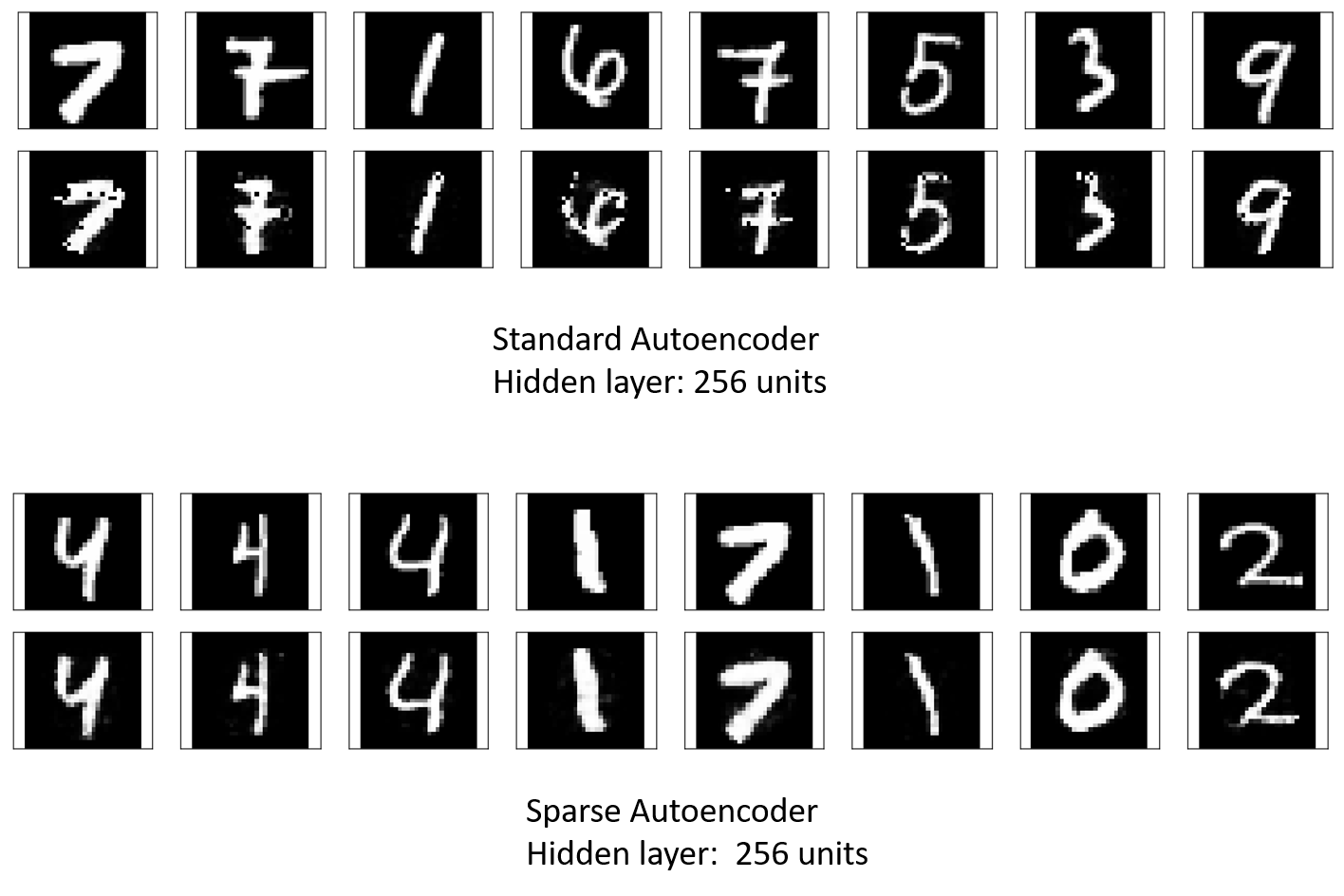

The main code of the sparse autoencoder, as you must have noticed, is exactly the same as that of the vanilla autoencoder, which is so because the sparse autoencoder has just one major change--the addition of the KL divergence loss to ensure sparsity of the hidden (bottleneck) layer. However, if you compare the two reconstructions, you can see that the sparse autoencoder is much better than the standard encoder, even with the same number of units in the hidden layer:

The reconstruction loss after training the vanilla autoencoder for the MNIST dataset is 0.022, while for the sparse autoencoder, it is 0.006. Thus, adding constraint forces the network to learn hidden representations of the data.