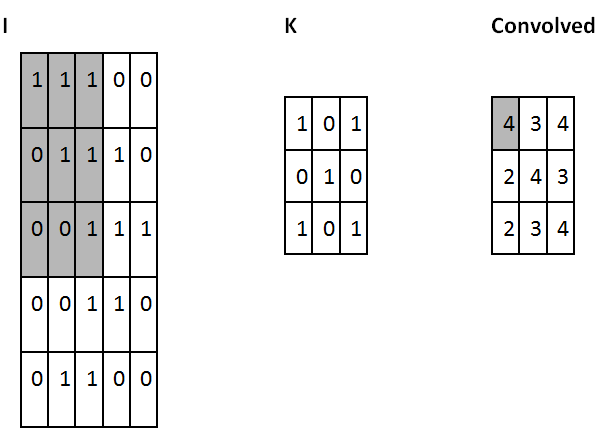

One simple way to understand convolution is to think of it as a sliding window function applied to a matrix. In the example shown in the figure, given the input matrix I and the kernel K, we get the convolved output. The 3 x 3 kernel K (sometimes called a filter or feature detector) is multiplied element-wise with a 3 x 3 window of the input matrix, and the products are summed to get one cell of the convolved output matrix. All the other cells are obtained by sliding the window over I.
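A minimal pure-Python sketch of this sliding-window computation, assuming stride 1, no padding, and matrices stored as lists of lists. The function name `conv2d_valid` and the values in `I` and `K` are hypothetical illustrations and may not match the figure. Like most deep learning libraries, it does not flip the kernel, so strictly speaking it computes a cross-correlation.

```python
def conv2d_valid(image, kernel):
    """Slide `kernel` over `image` (stride 1, no padding) and return the output matrix."""
    n_rows, n_cols = len(image), len(image[0])
    k_rows, k_cols = len(kernel), len(kernel[0])
    out = []
    for i in range(n_rows - k_rows + 1):          # window stops at the bottom border
        row = []
        for j in range(n_cols - k_cols + 1):      # window stops at the right border
            # Element-wise product of the kernel with the current window, then sum.
            total = 0
            for a in range(k_rows):
                for b in range(k_cols):
                    total += image[i + a][j + b] * kernel[a][b]
            row.append(total)
        out.append(row)
    return out

# Hypothetical 5 x 5 input and 3 x 3 kernel, for illustration only.
I = [[1, 1, 1, 0, 0],
     [0, 1, 1, 1, 0],
     [0, 0, 1, 1, 1],
     [0, 0, 1, 1, 0],
     [0, 1, 1, 0, 0]]
K = [[1, 0, 1],
     [0, 1, 0],
     [1, 0, 1]]

print(conv2d_valid(I, K))  # → [[4, 3, 4], [2, 4, 3], [2, 3, 4]]
```

Each output cell corresponds to one position of the 3 x 3 window, so a 5 x 5 input yields 3 x 3 = 9 kernel applications.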

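In this example, we decided to stop the sliding window as soon as it touches the borders of the 5 x 5 input I, so the output is 3 x 3. Alternatively, we could have padded the input with a border of zeros so that the output would have been 5 x 5, the same size as the input. This decision is the padding choice; the two options are commonly called "valid" (no padding) and "same" (zero padding that preserves the input size).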
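To see the effect of zero padding, the sketch below surrounds a 5 x 5 input with one ring of zeros before sliding the kernel, which yields a 5 x 5 output instead of 3 x 3. The helpers `zero_pad` and `conv2d` and the values of `I` and `K` are hypothetical; square matrices, stride 1, and cross-correlation (no kernel flip) are assumed.

```python
def zero_pad(image, p=1):
    """Return a copy of `image` surrounded by `p` rows/columns of zeros on every side."""
    width = len(image[0]) + 2 * p
    top = [[0] * width for _ in range(p)]
    middle = [[0] * p + list(row) + [0] * p for row in image]
    bottom = [[0] * width for _ in range(p)]
    return top + middle + bottom

def conv2d(image, kernel):
    """Stride-1 sliding window with no extra padding; assumes square image and kernel."""
    k = len(kernel)
    n_out = len(image) - k + 1
    return [[sum(image[i + a][j + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(n_out)]
            for i in range(n_out)]

# Hypothetical values, for illustration only.
I = [[1, 1, 1, 0, 0],
     [0, 1, 1, 1, 0],
     [0, 0, 1, 1, 1],
     [0, 0, 1, 1, 0],
     [0, 1, 1, 0, 0]]
K = [[1, 0, 1],
     [0, 1, 0],
     [1, 0, 1]]

valid = conv2d(I, K)              # 3 x 3: the window stops at the borders
same = conv2d(zero_pad(I, 1), K)  # 5 x 5: the zero border lets the kernel be centered on every input cell
print(len(valid), len(same))      # → 3 5
```

The inner 3 x 3 block of the padded result equals the unpadded result; only the border cells are new, and they are computed partly from the added zeros.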

Another choice concerns the stride, which is the number of cells the sliding window shifts at each step. The stride can be one or larger. A larger stride applies the kernel fewer times and produces a smaller output, while a smaller stride produces a larger output and retains more information.
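A sketch of how the stride changes the number of kernel applications, assuming square matrices, no padding, and cross-correlation (no kernel flip). The name `conv2d_strided` and the values of `I` and `K` are hypothetical. With stride `s`, the window jumps `s` cells at a time, so the output side length is `(n - k) // s + 1`.

```python
def conv2d_strided(image, kernel, stride=1):
    """No-padding sliding window that moves `stride` cells per step; assumes square image and kernel."""
    n, k = len(image), len(kernel)
    n_out = (n - k) // stride + 1  # number of window positions along each axis
    return [[sum(image[i * stride + a][j * stride + b] * kernel[a][b]
                 for a in range(k) for b in range(k))
             for j in range(n_out)]
            for i in range(n_out)]

# Hypothetical values, for illustration only.
I = [[1, 1, 1, 0, 0],
     [0, 1, 1, 1, 0],
     [0, 0, 1, 1, 1],
     [0, 0, 1, 1, 0],
     [0, 1, 1, 0, 0]]
K = [[1, 0, 1],
     [0, 1, 0],
     [1, 0, 1]]

print(conv2d_strided(I, K, stride=1))  # → [[4, 3, 4], [2, 4, 3], [2, 3, 4]]  (9 kernel applications)
print(conv2d_strided(I, K, stride=2))  # → [[4, 4], [2, 4]]  (4 kernel applications)
```

With stride 2 the output keeps only every other window position of the stride-1 result, which is why it is smaller and discards some information.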

The size of the filter, the stride, and the type of padding are hyperparameters: unlike the values inside the kernel, which are learned during training, they are chosen when the network is designed and can be tuned across training runs.
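Together, these three hyperparameters determine the output size. Assuming a square N x N input, an F x F filter, P zeros of padding on each side, and stride S (symbols introduced here for illustration), the standard relation for the output side length O is:

$$
O = \left\lfloor \frac{N + 2P - F}{S} \right\rfloor + 1
$$

For a 5 x 5 input and a 3 x 3 filter: no padding with S = 1 gives (5 - 3)/1 + 1 = 3; one ring of zeros (P = 1) with S = 1 gives (5 + 2 - 3)/1 + 1 = 5; no padding with S = 2 gives ⌊2/2⌋ + 1 = 2.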