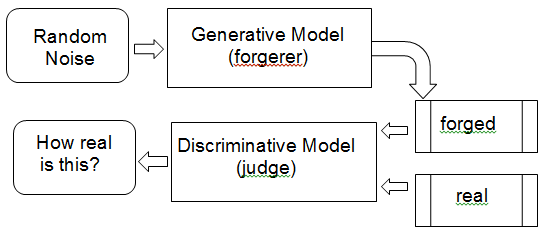

The key idea behind GANs is easy to grasp through an analogy with art forgery: the practice of creating works of art that are falsely credited to other, usually more famous, artists. A GAN trains two neural networks simultaneously.

The generator G(Z) is the one that makes the forgery happen, and the discriminator D(Y) is the one that judges how realistic the reproduction is, based on its observations of authentic pieces of art and copies. D(Y) takes an input Y (for instance, an image) and expresses a vote on how real the input is. In general, a value close to one denotes real, while a value close to zero denotes forgery. G(Z) takes random noise Z as input and trains itself to fool D into thinking that whatever G(Z) produces is real. So the goal of training the discriminator D(Y) is to maximize D(Y) for every image from the true data distribution and to minimize D(Y) for every image not from the true data distribution. G and D therefore play opposing sides of the same game: hence the name adversarial training. Note that we train G and D in an alternating manner, where each of their objectives is expressed as a loss function optimized via gradient descent. The generative model learns to forge better and better, and the discriminative model learns to recognize forgery better and better.
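The two objectives above are commonly written as binary cross-entropy losses. The following is a minimal NumPy sketch, assuming D outputs the probability that its input is real; the function names are illustrative, not from the text. For the generator it uses the widely used "non-saturating" form, minimizing -log D(G(Z)) instead of minimizing log(1 - D(G(Z))) directly. Both push D(G(Z)) toward 1, but the non-saturating form tends to give stronger gradients early in training, when D easily rejects the forgeries.

```python
import numpy as np

EPS = 1e-12  # keeps log() away from log(0)

def discriminator_loss(d_real: np.ndarray, d_fake: np.ndarray) -> float:
    """Cross-entropy for D: push D(real) toward 1 and D(fake) toward 0.

    Minimizing this is the same as maximizing mean(log D(y)) + mean(log(1 - D(G(z)))).
    """
    d_real = np.clip(d_real, EPS, 1.0 - EPS)
    d_fake = np.clip(d_fake, EPS, 1.0 - EPS)
    return float(-np.mean(np.log(d_real)) - np.mean(np.log(1.0 - d_fake)))

def generator_loss(d_fake: np.ndarray) -> float:
    """Non-saturating loss for G: small when D(G(z)) is close to 1 (D is fooled)."""
    d_fake = np.clip(d_fake, EPS, 1.0 - EPS)
    return float(-np.mean(np.log(d_fake)))

# A confident, correct discriminator has a low loss...
print(discriminator_loss(np.array([0.99, 0.98]), np.array([0.01, 0.02])))
# ...while a maximally confused one (0.5 everywhere) scores 2 * ln 2.
print(discriminator_loss(np.array([0.5]), np.array([0.5])))
```

When D outputs 0.5 for every input, each of the two terms equals ln 2, so the discriminator loss is 2 ln 2 (about 1.386): D cannot tell real from forged at all.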

The discriminator network (usually a standard convolutional neural network) tries to classify whether an input image is real or generated. The important new idea is to backpropagate through both the discriminator and the generator to adjust the generator's parameters, so that the generator learns to fool the discriminator in an increasing number of situations. Ideally, the generator eventually learns to produce images that are indistinguishable from the real ones:

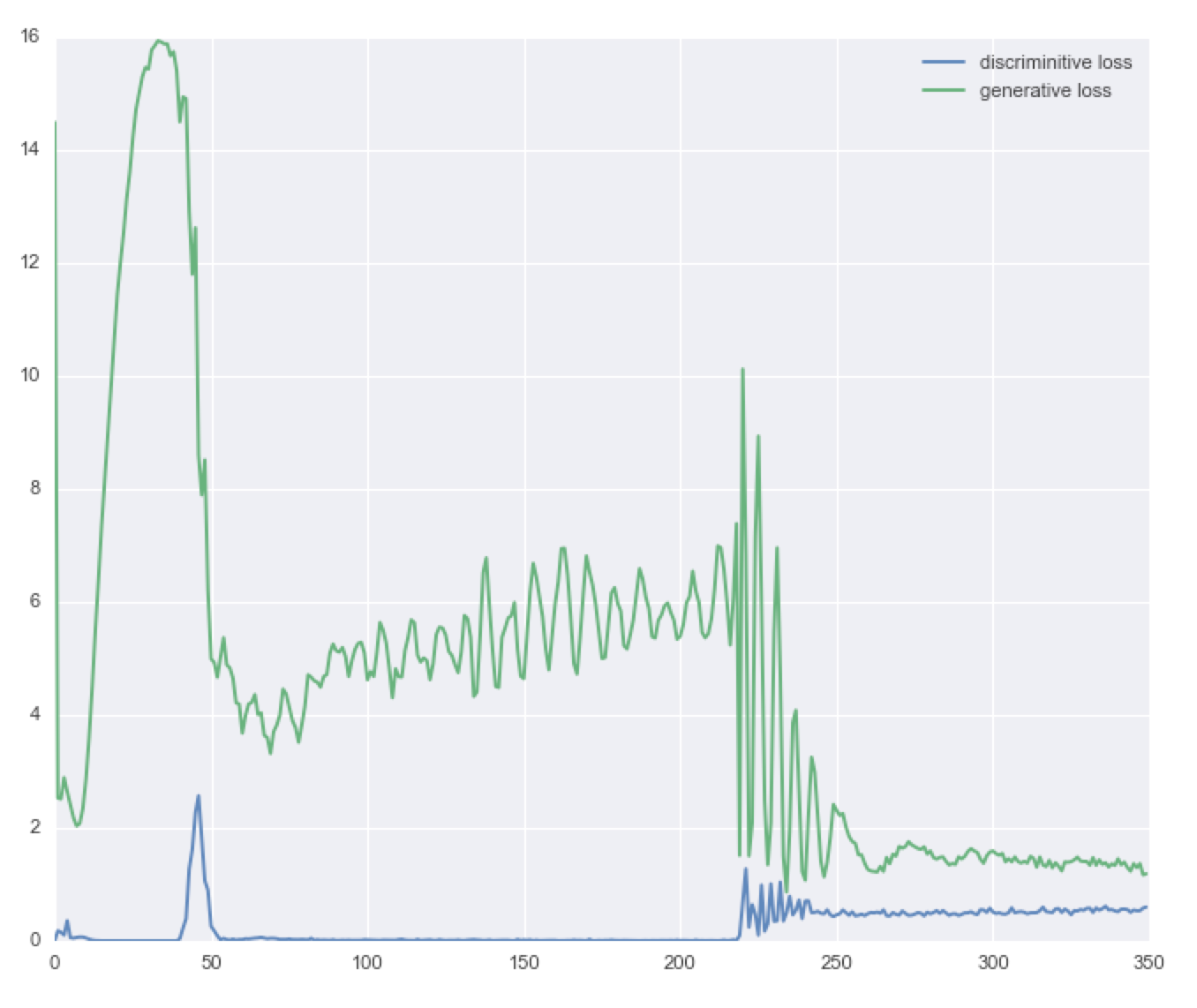
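To see how the generator's gradient flows through the discriminator, here is a deliberately tiny, hypothetical 1-D setup that is not from the text: G(z) = a·z + b and D(y) = sigmoid(w·y + c), with gradients derived by hand via the chain rule in place of the automatic backpropagation a deep learning framework would perform. Each round first takes one discriminator step with G frozen, then one generator step with D frozen. The generator's gradient contains D's weight w, because it is backpropagated through D's input. The "real" data distribution N(3, 1), the learning rate, and the batch size are arbitrary illustrative choices.

```python
import numpy as np

# Hypothetical 1-D toy model (not from the text):
#   generator      G(z) = a * z + b          parameters g = (a, b)
#   discriminator  D(y) = sigmoid(w * y + c) parameters d = (w, c)

def sigmoid(s):
    return 1.0 / (1.0 + np.exp(-s))

def generator(g, z):
    a, b = g
    return a * z + b

def discriminator(d, y):
    w, c = d
    return sigmoid(w * y + c)

def toy_generator_loss(g, d, z):
    """-mean(log D(G(z))), computed stably as mean(log(1 + exp(-s)))."""
    w, c = d
    s = w * generator(g, z) + c
    return float(np.mean(np.logaddexp(0.0, -s)))

def discriminator_grads(d, real, fake):
    """Gradient of -mean(log D(real)) - mean(log(1 - D(fake))) w.r.t. (w, c)."""
    ds_real = (discriminator(d, real) - 1.0) / real.size  # dLoss/ds on real samples
    ds_fake = discriminator(d, fake) / fake.size          # dLoss/ds on fake samples
    grad_w = np.sum(ds_real * real) + np.sum(ds_fake * fake)
    grad_c = np.sum(ds_real) + np.sum(ds_fake)
    return np.array([grad_w, grad_c])

def generator_grads(g, d, z):
    """Gradient of toy_generator_loss w.r.t. (a, b), chained through D."""
    w, _ = d
    d_fake = discriminator(d, generator(g, z))
    dy = -(1.0 - d_fake) * w / z.size              # dLoss/dy: backpropagated through D's input
    return np.array([np.sum(dy * z), np.sum(dy)])  # dy/da = z, dy/db = 1

def train(steps=500, lr=0.05, batch=64, seed=0):
    rng = np.random.default_rng(seed)
    g = np.array([1.0, 0.0])  # G initially maps z ~ N(0, 1) to N(0, 1)
    d = np.array([0.1, 0.0])
    for _ in range(steps):
        real = rng.normal(3.0, 1.0, size=batch)  # stand-in "authentic" data: N(3, 1)
        z = rng.normal(size=batch)
        # 1) Discriminator step, generator frozen.
        d = d - lr * discriminator_grads(d, real, generator(g, z))
        # 2) Generator step, discriminator frozen: only g changes.
        g = g - lr * generator_grads(g, d, z)
    return g, d

g, d = train()
print("generator (a, b):", g, "discriminator (w, c):", d)
```

A useful sanity check for hand-derived gradients like these is to compare `generator_grads` against a finite-difference estimate of `toy_generator_loss`. No convergence is claimed: even this toy game may oscillate around the data distribution rather than settle.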

In other words, GANs seek an equilibrium in a game with two players. For effective learning, it is necessary that if a player successfully moves downhill in a round of updates, the same update also moves the other player downhill. Think about it! If the forger learns how to fool the judge on every occasion, then the forger has nothing more to learn. Sometimes the two players eventually reach an equilibrium, but this is not guaranteed, and the two players can keep playing for a long time without settling. An example of this from both sides is provided in the following figure: