Comparing Two Means: T-Tests

Introduction to T-Tests

If you are comparing the mean of a continuous dependent variable across two categories, you can do so with a t-test. As we saw in the previous section, there are several scenarios for comparing two means with t-tests, including:

- Comparing means of two independent groups. In the main textbook example, the variable License divides the sample into two unrelated groups: any given customer is either a Premium or a Freeware customer, never both. Our aim is to compare average Sales between these two groups.
- Comparing means of two related groups. It is also possible to compare means between two related samples, for example where you measure the same variable at two different times, or between two groups that are related in some way. We will examine this below.
- Comparing a group against a population mean. You might have only one group, with a single mean, and wish to compare this average with a benchmark that you assume comes from a population you have not measured directly. For instance, you may have data on your employee turnover and wish to compare its average to a specific industry benchmark. You assume that the benchmark accurately represents the broader population’s mean, and compare your mean with it.

The following sections discuss each of these options.

Comparing Means between Two Independent Samples

Introduction

The most common comparison is between two independent groups (samples), that is, where (in terms of our taxonomy of choices):

- There is one categorical factor with two levels (Groups 1 and 2). In the main textbook example, License is a categorical factor with two levels or groups (Premium and Freeware).
- There is one dependent variable. In the book example this is Sales.
- No other variables are taken into account.
- The categories are independent: the Premium customers are independent of the Freeware customers.

In the case example, we might focus on whether average sales of services differ significantly between types of license (Freeware vs. Premium).

As stated in the first section, you can see the raw difference in averages in simple descriptive statistics, so what does the t-test add? The answer is that the t-test goes a step further and answers the question “is the difference in means statistically significant?” Any two datasets of this type will show some difference in their averages, even if it is only a small difference arising from random variation. The question is whether the difference is too big to be due to chance alone, which would make it a statistically significant difference.

Because the focus of the t-test is on statistical significance, the test culminates in the analysis of confidence intervals for the difference, as explained in the next section.

The Ultimate End-Point of the T-Test

The aim of the t-test is to statistically analyze the difference in averages (means) between two groups. Figure 14.3 (The t-test confidence interval) shows a mean of $80,483 for freeware customers and $103,825 for premium customers, i.e. a difference in averages (freeware less premium) of -$23,342.

Figure 14.3 The t-test confidence interval

You probably don’t need a complex statistical program to calculate these averages and the size of the difference for yourself. However, t-tests and the other comparison-of-means tests described below are concerned with whether a difference in means is large enough to be reliably different from zero.

The problem faced by t-tests and other comparison-of-means tests is that sheer randomness in data almost guarantees some difference between any two groups. However, if the only reason two groups differ is randomness, the difference should be close to zero. If there is a true and substantial difference between the groups, the difference should be larger than what might plausibly occur by chance. Comparison-of-means tests assess this distinction.

To test whether the difference in the means of two groups is statistically different from zero, t-tests provide a significance test (through confidence intervals and p-values). Figure 14.3 (The t-test confidence interval) illustrates the analysis of t-test confidence intervals. Your aim is typically to assess whether the difference in means is effectively zero, in which case the two groups do not differ significantly on the dependent variable. The confidence interval for the difference tells us whether to reject this proposition: an interval lying entirely above or entirely below zero (i.e. one that excludes zero) indicates that the means are significantly different from each other, while an interval that spans zero does not.
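For the classic (pooled, equal-variance) t-test, the interval has the standard textbook form below, built from the two group means, standard deviations and sizes, with the critical value taken from Student's t distribution on n1 + n2 - 2 degrees of freedom (alpha = 0.05 for a 95% interval). The symbols are this sketch's own notation, not SAS output labels.

```latex
s_p^2 = \frac{(n_1 - 1)\,s_1^2 + (n_2 - 1)\,s_2^2}{n_1 + n_2 - 2}
\qquad
(\bar{x}_1 - \bar{x}_2) \;\pm\; t_{1-\alpha/2,\;n_1 + n_2 - 2}\; s_p \sqrt{\frac{1}{n_1} + \frac{1}{n_2}}
```

Because the interval excludes zero exactly when |t| = |x̄1 - x̄2| / (s_p √(1/n1 + 1/n2)) exceeds that critical value, the confidence interval and the p-value (at the same alpha) always agree on whether the difference is significant.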

In addition to confidence intervals, t-tests also provide p-values, as in Figure 14.4 (The t-test p-values). However, I suggest ignoring the p-values and sticking with the more informative confidence intervals.

Figure 14.4 The t-test p-values

Before getting to the t-test confidence intervals and p-values, you should first assess and deal with data assumptions. The next section discusses the steps to go through in a t-test.

Step 1: Run the Initial T-Test

Comparison of two means in this context is done in SAS with PROC TTEST. To see the licenses versus sales example, open and run the file “Code14a Independent Samples TTest.” Having run the analysis, your next job is, as usual, to assess the results, starting with the data assumptions.
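If you want to see what the two rows of the PROC TTEST interval contain, the Python sketch below (standard library only; it is not SAS code and not the contents of “Code14a”) computes the pooled and Satterthwaite t-tests for mean(x) - mean(y). The helpers `t_cdf` and `t_quantile` are simple numerical approximations of Student's t distribution written for this sketch (Simpson's rule plus bisection), not a library API, and the sales figures are hypothetical.

```python
import math
from statistics import mean, variance


def t_cdf(x, df, steps=2000):
    """Student's t CDF, via Simpson's rule on the density between 0 and |x|."""
    const = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(df * math.pi)

    def pdf(u):
        return const * (1 + u * u / df) ** (-(df + 1) / 2)

    a = abs(x)
    h = a / steps  # steps must be even for Simpson's rule
    total = pdf(0.0) + pdf(a)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * pdf(i * h)
    area = total * h / 3  # probability mass between 0 and |x|
    return 0.5 + area if x >= 0 else 0.5 - area


def t_quantile(p, df):
    """Inverse of t_cdf for 0.5 <= p < 1, by bisection on [0, 100]."""
    lo, hi = 0.0, 100.0
    for _ in range(50):
        mid = (lo + hi) / 2
        if t_cdf(mid, df) < p:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2


def two_sample_t(x, y, alpha=0.05):
    """Pooled and Satterthwaite (Welch) t-tests for mean(x) - mean(y)."""
    n1, n2 = len(x), len(y)
    diff = mean(x) - mean(y)
    v1, v2 = variance(x), variance(y)  # sample variances (n - 1 divisor)

    # Pooled: assumes the two groups share one common variance.
    sp2 = ((n1 - 1) * v1 + (n2 - 1) * v2) / (n1 + n2 - 2)
    pooled = (math.sqrt(sp2 * (1 / n1 + 1 / n2)), n1 + n2 - 2)

    # Satterthwaite: each group keeps its own variance; df is approximated.
    a, b = v1 / n1, v2 / n2
    satt = (math.sqrt(a + b), (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1)))

    results = {}
    for name, (se, df) in (("Pooled", pooled), ("Satterthwaite", satt)):
        t = diff / se
        crit = t_quantile(1 - alpha / 2, df)
        results[name] = {
            "diff": diff,
            "t": t,
            "df": df,
            "p": 2 * (1 - t_cdf(abs(t), df)),  # two-sided p-value
            "ci": (diff - crit * se, diff + crit * se),
        }
    return results


if __name__ == "__main__":
    # Hypothetical sales figures (in $1,000s) for two small groups.
    freeware = [12, 15, 11, 14, 13]
    premium = [16, 18, 17, 19, 15]
    for method, r in two_sample_t(freeware, premium).items():
        lo, hi = r["ci"]
        print(f"{method}: diff={r['diff']:.2f}, t={r['t']:.3f}, df={r['df']:.2f}, "
              f"p={r['p']:.4f}, 95% CI=({lo:.3f}, {hi:.3f})")
```

With these hypothetical data both groups happen to have the same variance, so the two rows coincide (t = -4 on 8 degrees of freedom, and the interval excludes zero); with unequal variances the Satterthwaite row uses fewer, usually fractional, degrees of freedom.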

Step 2: Assess Data Assumptions

As in regression, certain data assumptions are built into the classic t-test:
- The variances of each group on the dependent variable are roughly similar.
- The dependent variable is roughly normally distributed within each group.
- There are sufficient numbers of observations in each group.
- The dependent variable is continuous (not discrete).
- The samples are representative of the population, which should be ensured in the methodological design.

You need either to satisfy yourself that the data meets these assumptions, in which case you can use the standard t-test confidence intervals, or to find alternatives that bypass the data problems.

I suggest the following initial checks after you have run the t-test program:
- Assess the actual variance (standard deviation) of each group’s data, and judge manually whether the standard deviations of the two groups are roughly equal in magnitude. For instance, in Figure 14.3 (The t-test confidence interval) the standard deviation of sales in the freeware group is $27,471 and that for premium is $25,236. Decide for yourself whether these deviations are different enough to be a potential violation. This is often difficult to judge by eye, which is why there are formal tests for differences in variances, as described in the next point.
- Assess the formal test of variance equality: Figure 14.5 (The SAS T-Test equality of variance checks) shows the SAS assumption check for equality of variance, the “Equality of Variances” test.
  - Note that the null hypothesis being tested is that the two groups have equal variances, so a significant p-value (< .05) rejects this hypothesis. You therefore want the p-value to be high, not low; a high value means we do not reject equal variances.
  - If the p-value is high, you have no evidence against the assumption of equal variances between the groups and can reasonably treat it as met.
  - Note that with very small or very large samples, these tests can be unreliable: small samples give them little power to detect unequal variances, while very large samples make them flag trivial differences[1].
  Figure 14.5 The SAS T-Test equality of variance checks
- Assess normality of the data. The graphs at the end of the SAS output can be read in the same way as those in regression.

If either equality of variances or normality is seriously violated, you may wish to use alternatives. In the example, the actual standard deviations are relatively similar, the formal equality of variances test does not indicate a violation of the equal-variance assumption, and the regression-style normality plots suggest rough normality: there appear to be no major violations.
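By default, the “Equality of Variances” check in PROC TTEST is the folded F test: the larger group variance divided by the smaller, compared against an F distribution with a two-sided p-value. The Python sketch below computes only the statistic and its degrees of freedom; the p-value would come from an F distribution routine, which is not shown. The function name and data are hypothetical. Using the standard deviations quoted above for the textbook example, the ratio would be (27,471 / 25,236)² ≈ 1.18, close to the value of 1 expected under equal variances.

```python
from statistics import variance


def folded_f(x, y):
    """Folded F statistic (larger sample variance over smaller) with its df."""
    vx, vy = variance(x), variance(y)
    # The group with the larger variance supplies the numerator and its df.
    if vx >= vy:
        return vx / vy, len(x) - 1, len(y) - 1
    return vy / vx, len(y) - 1, len(x) - 1


if __name__ == "__main__":
    # Hypothetical groups with clearly different spreads.
    f, df_num, df_den = folded_f([1, 2, 3], [2, 4, 6, 8, 10])
    print(f"F' = {f:.2f} on ({df_num}, {df_den}) degrees of freedom")
```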

Step 3: Compare Versions of the Traditional Parametric T-Test

As discussed in “Data Assumptions and Alternatives when Comparing Categories” above, the standard, traditional, parametric t-test is your first port of call for deciding whether there is a statistical basis to claim that the means of your groups differ. Strictly, you should only rely on the traditional parametric test if there are no assumption violations at all. However, no data is ever perfect, so I suggest that you use the remedial versions, or at least compare the traditional t-test with them.

In addition to the traditional test, there are four alternatives for serious assumption violations. I start with the remedial measures involving traditional (parametric) t-tests; the fourth option is nonparametric tests, which I cover in Step 4. As mentioned in “Data Assumptions and Alternatives when Comparing Categories”, you have the following parametric options:

- Use the traditional parametric t-test without changes: this is the default (pooled) confidence interval or p-value in the output. I would use this only if a) the two samples have equal variances, and b) the sample is small (say < 25). With either unequal variances or larger samples, bootstrapping is likely to give better statistics.
- Transform the dependent variable: especially when the data are skewed, transforming the dependent variable (for instance taking its logarithm or square root) can sometimes achieve equal variances. Try a few transformations and see whether they help; see Chapter 6 for how to create transformed versions of variables.
- Bootstrapping: you can bootstrap the t-test to get accuracy statistics (generally, confidence intervals) that are robust to assumption violations. I advocate bootstrapping as standard except when your samples are very small. I do not cover bootstrapping further in this chapter, however.
- Unequal variances t-test: an unequal variances parametric t-test (the Satterthwaite version, also known as Welch's t-test) appears in the second row of Figure 14.3 (The t-test confidence interval). Use this version when the group variances differ.
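The chapter leaves bootstrapping aside, but the core idea is simple enough to sketch. The Python code below is a minimal percentile bootstrap for the difference in means, assuming that resampling each group independently, with replacement, is appropriate for your design. It is one of several bootstrap interval methods and not necessarily what any SAS procedure implements; the function name, seed and data are hypothetical.

```python
import random
from statistics import mean


def bootstrap_diff_ci(x, y, reps=5000, alpha=0.05, seed=1):
    """Percentile bootstrap confidence interval for mean(x) - mean(y)."""
    rng = random.Random(seed)  # fixed seed so results are reproducible
    diffs = []
    for _ in range(reps):
        # Resample each group separately, with replacement, at its own size.
        bx = rng.choices(x, k=len(x))
        by = rng.choices(y, k=len(y))
        diffs.append(mean(bx) - mean(by))
    diffs.sort()
    # Read the interval ends off the sorted bootstrap distribution.
    lower = diffs[int(reps * alpha / 2)]
    upper = diffs[int(reps * (1 - alpha / 2)) - 1]
    return lower, upper


if __name__ == "__main__":
    freeware = [12, 15, 11, 14, 13]  # hypothetical, in $1,000s
    premium = [16, 18, 17, 19, 15]
    print(bootstrap_diff_ci(freeware, premium))
```

As with the parametric interval, an interval that lies entirely below (or above) zero suggests a significant difference; more replications make the interval ends more stable.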

Step 4: Implement the Nonparametric T-Test and Compare with the Parametric

As introduced in “Nonparametric Statistics”, nonparametric tests usually sidestep the distributional assumptions, often (but not always) through the following simple method:
- The dependent variable data is reduced to ranks (the highest score is ranked first, the next-highest second, and so forth). This means that any dataset can be standardized to a format that does not require assumptions about distributional shape. See Figure 14.2 (Ranking of dependent variable for nonparametric comparison) for an example of this procedure.
- The key question then becomes whether the ranks of each group’s dependent variable differ significantly (either average or median rank, depending on the test).
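The ranking step can be sketched in Python as below. This sketch ranks from lowest (1) to highest, the usual convention for the Wilcoxon rank-sum test; ranking from the highest, as described above, simply reverses the order and leads to the same conclusion. Tied values share the average of the ranks they occupy. The function names and data are illustrative only.

```python
def average_ranks(values):
    """Rank values from 1 (lowest) upward; ties get the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        # Extend the block while the next sorted value ties with this one.
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2 + 1  # positions start..end hold ranks start+1..end+1
        for k in range(start, end + 1):
            ranks[order[k]] = shared
        start = end + 1
    return ranks


def mean_ranks(x, y):
    """Pool both groups, rank them together, and return each group's mean rank."""
    ranks = average_ranks(list(x) + list(y))
    rx, ry = ranks[: len(x)], ranks[len(x):]
    return sum(rx) / len(rx), sum(ry) / len(ry)


if __name__ == "__main__":
    freeware = [12, 15, 11, 14, 13]  # hypothetical values
    premium = [16, 18, 17, 19, 15]
    print(average_ranks(freeware + premium))
    print(mean_ranks(freeware, premium))
```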

To implement the nonparametric version of the two-sample t-test, run or adapt the code “Code14b Nonparametric TTest.”

The nonparametric analogue of the independent samples t-test is called the “Wilcoxon-Mann-Whitney” test, or just the Wilcoxon test. There is also a nonparametric confidence interval for the difference, the Hodges-Lehmann interval, as seen in Figure 14.6 (Nonparametric T-Tests).

Figure 14.6 Nonparametric T-Tests

You can therefore compare the results of the nonparametric and parametric t-tests. As seen in Figure 14.6 (Nonparametric T-Tests), the nonparametric results confirm the parametric diagnosis: the confidence interval excludes zero and the p-values are small, suggesting significant differences in sales of services between freeware and premium customers.
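To show what the Wilcoxon statistic and the Hodges-Lehmann estimate measure, the Python sketch below computes them from pairwise comparisons: the Mann-Whitney U counts how often a value from the first group exceeds one from the second (ties count one half), the first group's rank sum (ranking from lowest) equals U + n1(n1 + 1)/2, and the Hodges-Lehmann estimate of the shift between groups is the median of all pairwise differences. It returns point values only; the p-values and the Hodges-Lehmann confidence interval that SAS reports are not reproduced. The function names and data are hypothetical.

```python
from statistics import median


def mann_whitney_u(x, y):
    """Count pairs where the x value beats the y value; ties count one half."""
    return sum(1.0 if a > b else 0.5 if a == b else 0.0 for a in x for b in y)


def wilcoxon_rank_sum(x, y):
    """Rank sum of x in the pooled sample, via W = U + n1(n1 + 1) / 2."""
    n1 = len(x)
    return mann_whitney_u(x, y) + n1 * (n1 + 1) / 2


def hodges_lehmann(x, y):
    """Hodges-Lehmann shift estimate: median of all pairwise differences x_i - y_j."""
    return median(a - b for a in x for b in y)


if __name__ == "__main__":
    freeware = [12, 15, 11, 14, 13]  # hypothetical values
    premium = [16, 18, 17, 19, 15]
    print(mann_whitney_u(freeware, premium), wilcoxon_rank_sum(freeware, premium),
          hodges_lehmann(freeware, premium))
```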

Comparing Means with Related Samples or Categories

Introduction to the Related Cases

An alternative set of tests is designed for cases where the categories or samples are related rather than independent. This usually occurs in two major cases:
- One sample contributing to multiple categories
- Paired samples

These two cases are discussed in the following sections.

One Sample Contributing to Multiple Categories

In the first case, one sample contributes data to more than one category, and the aim is to compare scores between the categories. There are two major sub-types here.

Related data case # 1: Repeated measures

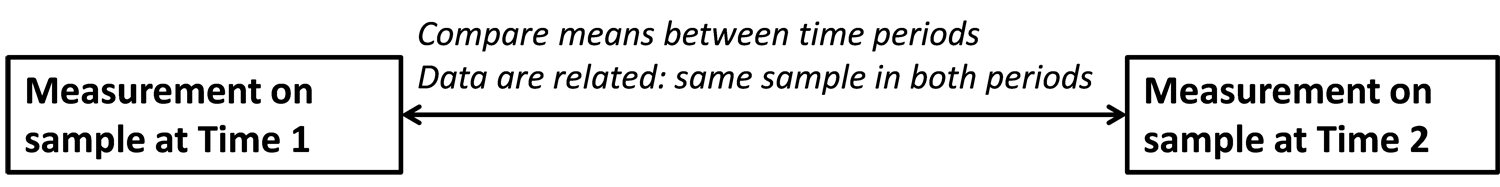

The first sub-type is where the same measurement is made on the same observations at different time periods. Earlier, I mentioned the example of a brand awareness measure taken before and after an advertisement. The same set of consumers is asked to provide data twice, once in each time period, and the time period is the category. Figure 14.7 (Related data case #1, same sample, repeated measures) shows this “repeated measures” design.

Figure 14.7 Related data case #1 (same sample, repeated measures)

Related data case # 2: Same sample rating different categories

Another case is where each observation provides data on different categories. For instance, you could ask consumers to rate both the price and the practicality of your new car model. Your aim might be to see whether consumers rate price differently from practicality, or some similar analysis. Figure 14.8 (Related data case #2, same sample rating different categories) shows this design.

Figure 14.8 Related data case #2 (same sample rating different categories)

Related Data Case # 3: Paired Samples

The third type of related data is where the same measurement is made on two samples, but each observation in one sample is matched to an observation in the other. For instance, we may have a pharmaceutical trial in which one group of participants gets the true drug and, for comparison, another group gets a fake drug (a placebo). Without further methodological design these samples would be independent; however, researchers sometimes pair each observation in one sample with an observation in the other, based on demographics. (For instance, you may pair a 25-year-old Caucasian woman in one sample with a Caucasian woman of similar age in the other sample, and so on.)

Figure 14.9 (Related data case # 3: Paired samples) shows this comparison.

Figure 14.9 Related data case # 3: Paired samples

Implementing Related Data T-Tests in SAS

When you are dealing with only two samples or categories, the essential steps for related data analysis are similar to those for independent samples shown earlier, with just a few elaborations. Simply set up the data in two columns. In the repeated measures example, each column represents the measure at one time period. In the one-sample, two-category example, each column is a rating on one category. In the paired samples case, column 1 is the first sample and column 2 the second sample, where each row represents a pair of matched observations. Then run a “paired sample” t-test on the data, which analyzes the difference between the two columns within each row.
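A paired t-test is equivalent to a one-sample t-test on the within-row differences, tested against zero, which the Python sketch below makes explicit. In SAS, PROC TTEST's PAIRED statement carries out this test; the function name and data here are hypothetical and are not taken from “Code14c Paired T Test”. The sketch returns the mean difference, the t statistic and its degrees of freedom; the p-value would come from Student's t distribution on n - 1 degrees of freedom.

```python
import math
from statistics import mean, stdev


def paired_t(first, second):
    """Paired t statistic for second - first, computed row by row."""
    if len(first) != len(second):
        raise ValueError("each row must hold one matched pair")
    diffs = [b - a for a, b in zip(first, second)]
    n = len(diffs)
    se = stdev(diffs) / math.sqrt(n)  # standard error of the mean difference
    return mean(diffs), mean(diffs) / se, n - 1


if __name__ == "__main__":
    # Hypothetical brand-awareness scores for five consumers, before and after an ad.
    before = [50, 60, 55, 70, 65]
    after = [55, 62, 60, 74, 64]
    print(paired_t(before, after))
```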

Take, for example, the data in “Dataset09_Related_TTests” in the “Textbook Materials” folder. Here, we see a comparison between two time periods on a single sample and a single measure. If you open and run “Code14c Paired T Test,” you will see how to set up the code, and you will be able to analyze the results using the same process as for independent samples.

Comparing a Mean to a Population Benchmark

In the final, and simplest, example of t-tests, there is only a single sample, and you wish to compare its mean on a dependent variable to a defined benchmark value, such as zero. For instance, you may assess whether the average level of sales in our sample is significantly different from (or specifically higher or lower than) $1,000,000. We assume that the sample is drawn from a normally distributed population, whose mean is compared with the benchmark. This simple t-test allows a judgement of whether your sample mean differs statistically significantly from the benchmark. See the SAS help files (SAS/STAT 13.2 User’s Guide) for more on this easy test.
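The one-sample test divides the gap between the sample mean and the benchmark by the standard error of the mean. A minimal Python sketch follows, with hypothetical sales figures; in SAS, to our understanding, the same test is requested through the H0= option of PROC TTEST, whose exact usage should be checked in the User's Guide. The function returns the t statistic and its degrees of freedom, not a p-value.

```python
import math
from statistics import mean, stdev


def one_sample_t(values, benchmark):
    """t statistic for H0: the population mean equals the benchmark."""
    n = len(values)
    se = stdev(values) / math.sqrt(n)  # standard error of the sample mean
    return (mean(values) - benchmark) / se, n - 1


if __name__ == "__main__":
    # Hypothetical sales figures tested against a $1,000,000 benchmark.
    sales = [1_050_000, 980_000, 1_120_000, 1_010_000, 1_090_000]
    t, df = one_sample_t(sales, 1_000_000)
    print(f"t = {t:.3f} on {df} df")
```

Applying the same function to a list of within-pair differences with a benchmark of zero reproduces the paired t-test.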

Last updated: April 18, 2017
