Chapter 5. Implementing an External DSL

With internal DSLs, you can do a great deal to define a language that has that elusive flow, but in the end you are always limited by conforming to the syntactic structure of the host language. External DSLs provide a greater syntactic freedom—the ability to use any syntax you like.

Implementing an external DSL differs from internal DSLs in that the parsing process operates on pure text input which is not constrained by any particular language. The techniques we can use to parse text are essentially those that have been in use for decades in parsing programming languages. There is also a long-running language community developing these tools and techniques.

But there is a catch. The tools and writings of the programming language community almost always assume you are working with a general-purpose language. DSLs are lucky to get a mention in passing. While many of the principles apply equally to general-purpose and domain-specific languages, there are differences. In addition, you don’t need to understand as much to work with DSLs, which essentially means you don’t need to go all the way up the learning curve that you’d need to go for a general-purpose language.

5.1 Syntactic Analysis Strategy

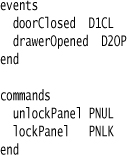

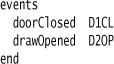

When we’re parsing an external DSL, we need to take the stream of text and break it up into some kind of structure that we can use to figure out what that text says. This initial structuring is referred to as syntactic analysis. Let’s consider the following code which might be a variation on programming my introductory state machine (“Gothic Security,” p. 3).

event doorClosed D1CL

event drawerOpened D2OP

command unlockPanel PNUL

command lockPanel PNLK

Syntactic analysis is about recognizing that the line event doorClosed D1CL is an event definition and telling it apart from a command definition.

The simplest way to do it is one that, I’m sure, you’ve done yourself, even if you’ve never dabbled with serious parsing. Divide the input text into lines, then process each line. If it starts with event, you know it’s an event; if it starts with command you know it’s a command. You can then break up the line accordingly to find the key bits of information. This style I refer to as Delimiter-Directed Translation. The general idea is to pick some delimiter characters that break the input into statements (usually line endings), chop the input into separate statements using that delimiter, and then feed each chunk into a separate processing step to figure out what’s on the line. Usually, there’s some clear marker in the line that tells you what kind of statement you are dealing with.

Delimiter-Directed Translation is very simple to use and uses tools that most programmers are familiar with—string splitting and regular expressions. Its limitation is that it doesn’t give you any inherent way to handle the hierarchic context of your input.

Let’s assume that I formulated my definitions thus:

Now splitting it up into lines isn’t quite enough. There isn’t enough information on the line doorClosed D1CL to tell if this is an event or a command I’m defining. There are ways to do this (and I explore one in an example for Delimiter-Directed Translation) but you’ll have to do it yourself. The more hierarchic context you get, the more effort you have to spend on managing it yourself.

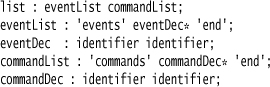

In order to handle DSLs with this kind of structure, the next step is to use Syntax-Directed Translation. In this technique, we first define a formal grammar for the input language, for example:

If you’ve read any book on programming languages, you’ve encountered the notion of a grammar. A grammar is a way of defining the legal syntax of a programming language. Grammars are almost always written in some form of BNF. Each line is a production rule; it states a name followed by the legal elements of that rule. So, in the above example, the line list : eventList commandList; says that the element list consists of an eventList followed by a commandList. Items in quotes are literals and a “*” indicates that the preceding element may appear many times. So eventList : 'events' eventDec* 'end'; says that an event list consists of the word events followed by some number of eventDecs, followed by the word end.

A grammar is a good way of thinking about the syntax of a language, whether you are using Syntax-Directed Translation or not. Indeed it’s helpful for thinking about internal DSLs as well, as illustrated by my table for internal DSL elements (“Using Grammars to Choose Internal Elements,” p. 79). It works particularly well for Syntax-Directed Translation because you can translate it fairly mechanically into a parser.

The kind of parser that’s generated from Syntax-Directed Translation is very capable of handling hierarchic structures like these; after all, this kind of thing is essential for general-purpose languages. As a result, you can handle many things that are awkward with Delimiter-Directed Translation much more easily.

How do you go from a grammar to a parser? As mentioned earlier, it’s a pretty mechanical process, and there are various ways to turn a BNF into some kind of parsing algorithm. There are many years of research into doing this, and that research spawned lots of techniques. In this book, I’ve selected three broad approaches.

Recursive Descent Parser is a classic way to perform this conversion. The recursive descent algorithm is an easy to understand approach to parsing that takes each grammar rule and turns it into a control flow representation inside a function. Each rule in the grammar turns into a function in the parser, and there are clear patterns you follow to turn each BNF operator into control flow.

A more hip and modern way is the Parser Combinator. Here, each rule is turned into an object, and we compose the objects into a structure that mirrors the grammar. You still need the elements of the Recursive Descent Parser, but these are packaged up into combinator objects that you can just compose together. This allows you to implement a grammar without knowing the details of the Recursive Descent Parser algorithms.

The third option does much of what this book is all about. A Parser Generator takes a flavor of BNF and uses it as a DSL. You write your grammar in this DSL, and Parser Generator then generates a parser for you.

The Parser Generator is the most sophisticated approach; such tools are very mature and can handle complex languages very efficiently. Using BNF as a DSL makes it easy to understand and maintain the language, since its syntax is clearly defined and automatically tied to the parser. On the downside, they do take a bit of time to learn and, since they mostly use code generation, they complicate the build process. You may also not have a good Parser Generator available for your language platform, and it’s not trivial to write one yourself.

A Recursive Descent Parser may be less powerful and efficient, but it is powerful and efficient enough for a DSL. It thus makes a reasonable option if the Parser Generator isn’t available or feels too heavyweight to introduce. The biggest problem with Recursive Descent Parser is that the grammar gets lost in the control flow, which makes the code far less explicit than I’d like.

As a result, I prefer the Parser Combinator for cases where you can’t or don’t want to use a Parser Generator. A Parser Combinator follows basically the same algorithm as a Recursive Descent Parser but allows you to represent the grammar explicitly in the code that composes the combinators together. While this code may not be quite as clear as a true BNF, it can be pretty close — particularly if you introduce internal DSL techniques.

With either of these three techniques, Syntax-Directed Translation makes it much easier than Delimiter-Directed Translation to handle languages that have any kind of structure to them. The biggest downside of Syntax-Directed Translation is that it’s a technique that isn’t as widely known as it should be. Many people are under the impression that using it is quite hard. I think that this fear often comes from the fact that Syntax-Directed Translation is usually described in the context of parsing a general-purpose language—which introduces a lot of complexities that you don’t face with a DSL. I hope this book will encourage you to try and work with Syntax-Directed Translation for yourself, and you’ll discover that it’s really not that tough to do.

For most of this book, I’m going to use Parser Generator. I find that the maturity of the tooling and the explicitness of the grammar make it easier to talk about the various concepts that I want to explain. In particular, I use the ANTLR Parser Generator—a mature, widely available, open source tool. One of its advantages is that it is a sophisticated form of a Recursive Descent Parser, which means it fits in well with the understanding you may get by using Recursive Descent Parser or Parser Combinator. Particularly if you are new to Syntax-Directed Translation, I think ANTLR is a good place to start.

5.2 Output Production Strategy

When we want to parse some input, we have to know what we want to do with the result—what is our output going to be? I’ve already argued that most of the time, the output we should build is a Semantic Model, which we can then either interpret directly or use as an input for code generation. I won’t rehash that argument again now, other than to point out that this is immediately a significant difference to the underlying assumptions you may find in the established language community.

Within that community, there is a strong emphasis on code generation, and parsers are usually constructed to directly produce the output code with no Semantic Model in sight. This is a reasonable approach for general-purpose languages, but it isn’t the approach I suggest for DSLs. This difference is important to bear in mind when you read material produced by the language community—which includes most documentation for tools such as Parser Generators.

Given that our output is a Semantic Model, our options boil down to using one or two steps. The single-step way is Embedded Translation, where you place calls directly in the parser to create the Semantic Model during the parsing process. In this approach, you gradually build up the Semantic Model while you are going through the parse. As soon as you understand enough of the input to recognize a part of the Semantic Model, you go ahead and create it. Often you’ll need some intermediate parsing data before you can actually create the objects in the Semantic Model—this usually involves storing some information in Symbol Tables

The alternative route is the two-step route—Tree Construction. In this approach, you parse the input text and produce a syntax tree that captures the essential structure of that text. You also populate a Symbol Table to handle cross-references between different parts of the tree. You then run a second phase that walks the syntax tree and populates the Semantic Model.

The great advantage of using Tree Construction is that this splits up the task of parsing into two simpler tasks. While recognizing the input text, you can focus only on how to build up the syntax tree. Indeed, many Parser Generators provide a DSL for tree construction that further simplifies this part of the process. Walking the tree to populate the Semantic Model is then a more regular programming exercise, and you have the whole tree to examine in order to determine what to do. If you’ve written code to process XML, you can liken Embedded Translation to using SAX and Tree Construction to using a DOM.

There is also a third option—Embedded Interpretation. Embedded Interpretation runs an interpretation process during the parse itself, and its output is the final result. A classic example of Embedded Interpretation is a calculator that takes in arithmetic expressions and produces the answer as a result. Therefore, Embedded Interpretation doesn’t produce a Semantic Model. Although Embedded Interpretation does come up from time to time, it’s a rare case.

You can also use Embedded Translation and Tree Construction without a Semantic Model; indeed, this is quite common when using code generation. Most examples you see of Parser Generators will do one of these. Although it may make sense, particularly for simpler cases, it’s an approach I recommend only rarely. Usually I find the Semantic Model overwhelmingly helpful.

So, most of the time the choice is between Embedded Translation and Tree Construction. This decision depends on the costs and benefits of that intermediate syntax tree. The great benefit of Tree Construction is that it splits the parsing problem into two. Usually it’s easier to combine two simple tasks than to write one more complicated task. This becomes increasingly true as the complexity of the overall translation increases. The more involved the DSL and the greater the distance between the DSL and the Semantic Model, the more useful it is to have an intermediate syntax tree, particularly if you have tooling support to create an abstract syntax tree.

I seem to be making a convincing argument here for Tree Construction. Certainly, a common argument against it—the memory that the syntax tree takes up—withers away when processing small DSLs on modern hardware. But despite the many reasons that favor Tree Construction, I’m not entirely convinced—sometimes it feels that building up and walking the tree is more trouble than it’s worth. I have to write the code to create the tree and code to walk it—often, it’s easier to just build the Semantic Model right there and then.

So, I’m conflicted on the choice. Other than the vague notion that increasing complexity of translation favors Tree Construction, I have mixed feelings. My best advice is to try a little of both and see which you prefer.

5.3 Parsing Concepts

If you start reading about parsing and using Parser Generators, you’ll soon run into a whole bunch of fundamental concepts of that world. In order to make sense of Syntax-Directed Translation, you’ll need to understand many of these, albeit not to the extent that traditional compiler books assume, since we’re dealing with DSLs rather than general-purpose languages here.

5.3.1 Separated Lexing

Usually Syntax-Directed Translation is divided into two stages, lexing (also scanning or tokenizing) and syntactic analysis (also referred to, confusingly, as parsing). The lexing stage takes the input text and transforms it into a stream of tokens. Tokens are a data type with two primary attributes: type and content. In our state machine language, the text state idle would turn into two tokens.

[content: "state", type: state-keyword]

[content: "idle", type: identifier]

It’s pretty easy to write a lexer using a Regex Table Lexer. This is simply a list of rules that match regular expressions to token types. You read the input stream, find the first regexp that matches, create a token of the corresponding type, and repeat all that with the next part of the stream.

The syntactic analyzer then takes this stream of tokens and arranges it into a syntax tree, based on the grammar rules. However, the fact that the lexer does its work first has some significant consequences. Firstly, it means that I have to be careful about how I use my text. I might have a state declared like this: state initial state, intending to name my state initial state. This is tricky because, by default, the lexer will classify the second “state” as a state keyword, not an identifier. To avoid this, I have to use some scheme of Alternative Tokenization. There are various ways to do Alternative Tokenization, depending a great deal on my parser tool.

The second consequence of this is that whitespace is generally discarded before the parser gets to see anything. This makes syntactic whitespace more difficult to deal with. Syntactic whitespace is whitespace that is part of the syntax of the language—such as using newlines as statement separators (Newline Separators) or using indentation to indicate structure in the manner of Python.

Syntactic whitespace is inherently a knotty area because it intermixes the syntactic structure of the language with formatting. In many ways, it makes sense for these to match—our eye uses formatting to infer structure, so it’s advantageous for the language to use it the same way. However, there’s just enough edge cases where the two needs don’t quite line up, which introduces a lot of complications. This is why many language people really hate syntactic whitespace. I’ve included some information on Newline Separators here, as they are a common form of syntactic whitespace, but I ran out of time to dig into syntactic indentation in any depth, leaving only enough time for a few notes in “Syntactic Indentation,” p. 337.

The reason for the lexer to be separated like this is that it makes it much easier to write each of the two elements. It’s another case of decomposing a complicated task into two simpler tasks. It also improves performance, particularly on the more limited hardware that many of these tools were originally designed for.

5.3.2 Grammars and Languages

If you were exceptionally sharp-eyed earlier on, you might have noticed that I talked about writing a grammar for a language. Many people have the misconception that one can have the grammar for a language. While it’s true that a grammar formally defines the syntax of a language, it’s quite easy for more than one grammar to recognize the same language.

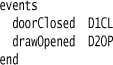

Let’s take the following input, from the gothic security system:

I can write a grammar for this input that looks like this:

![]()

But I can also write a grammar that looks like this:

Both of these are valid grammars for this language. They will both recognize the input—meaning they will turn the input text into a parse tree. The resulting parse trees will be different, and thus the way I write my output generation code will differ.

There are many reasons for why you can get different grammars. The primary reason is that different Parser Generators use different grammars, in terms of both syntax and semantics. Even with a single Parser Generator, you can have different grammars depending on how you factor your rules, which is the variation I’ve shown above. Just like with any code, you refactor your grammars to make them easier to understand. Another aspect that alters how you factor your grammar is the output production code; I often end up altering my grammar to make it easier to organize the code that translates source into the semantic model.

5.3.3 Regular, Context-Free, and Context-Sensitive Grammars

This is a good moment to dip our toes in some language theory, in particular the way in which the programming language community classifies grammars. This scheme, called the Chomsky hierarchy, was developed by the linguist Noam Chomsky in the 1950’s. It was based on looking at natural languages, rather than computer languages, but it derives its classification from the mathematical properties of a grammar used to define their syntactic structure.

Regular, context-free, and context-sensitive are the three categories that concern us. They form a hierarchy, in that all grammars that are regular are context-free, and all grammars that are context-free are context-sensitive. The Chomsky hierarchy strictly applies to grammars, but people use it for languages too. To say that a language is regular means that you can write a regular grammar for it.

The difference between the classes depends on certain mathematical characteristics of the grammar. I’ll leave that to proper language books to explain; for our purposes, the key distinction is what kind of fundamental algorithm you need for the parser.

A regular grammar is important to us because it can be processed using a finite-state machine. This is important because regular expressions are finite-state machines, hence a regular language can be parsed using regular expressions.

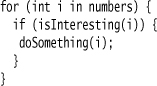

In terms of computer languages, regular grammars have one big problem: They can’t deal with nested elements. A regular language can parse an expression like 1 + 2 * 3 + 4 but can’t parse 1 + (2 * (3 + 4)). You may hear people saying that regular grammars “can’t count.” In parsing terms this means that you can’t use a finite-state machine to parse a language that has nested blocks. Obviously, this is bit of a bummer when it comes to computer languages, as any general-purpose language has be able to do arithmetic. It also affects block structure—programs like this:

need nested blocks, so are not regular.

To handle nested blocks, you need to step up to a context-free grammar. I find this name rather confusing, because the way I look at things, a context-free grammar does add hierarchic context to your grammar, allowing it to “count.” A context-free grammar can be implemented using a push-down machine, which is a finite-state machine with a stack. Most language parsers use context-free grammars, most Parser Generators use them, and both Recursive Descent Parser and Parser Combinator produce a push-down machine. As a result, most modern programming languages are parsed using context-free grammars.

Although context-free grammars are so widely used, they can’t handle all the syntactic rules that we might like. The common exception case to context-free grammars is the rule that you must declare a variable before you use it. The problem here is that the declaration of a variable often occurs outside the particular branch of the hierarchy you are in when you use the variable. While a context-free grammar can hold hierarchic context, that’s not enough context to handle this case—hence the need for Symbol Tables.

The next step up in the Chomsky hierarchy is context-sensitive grammars. A context-sensitive grammar could handle this case, but we don’t know how to write general context-sensitive parsers. In particular, we don’t know how to generate a parser from a context-sensitive grammar.

I made this dip into language classification theory primarily because it gives you some insight into which tool to use for processing a DSL. In particular, it tells you that if you use nested blocks, you’ll need something that can handle a context-free language. It also argues that if you need nested blocks, you’re likely to be better off with Syntax-Directed Translation rather than Delimiter-Directed Translation.

It also suggests that if you only have a regular language, you don’t need a pushdown machine to process it. As it turns out, you may find that it’s easier to use a push-down machine in any case. Once you’ve got used to using them, they are sufficiently straightforward, so it usually isn’t overkill to use one even for a regular language.

This division is also part of why we see separated lexing. Lexing is usually done with a finite-state machine, while syntactic analysis uses a push-down machine. This limits what you can do in the lexer, but allows the lexer to be faster. There are exceptions to this; in particular, I use ANTLR for most examples in this book, and ANTLR uses a push-down machine for both lexing and syntactic analysis.

There are some parser tools out there that only handle regular grammars. Ragel is one better-known example. Also, you can use lexers on their own to recognize a regular grammar. However, if you’re getting into Syntax-Directed Translation, I’d suggest starting with a context-free tool.

While the notions of regular and context-free grammars are the most common ones you’re likely to run into, there is a relative newcomer that is also interesting. This is a form of grammar called a Parsing Expression Grammar (PEG). PEGs use a different form of grammar which can handle most context-free situations and some context-sensitive ones. PEG parsers don’t tend to separate lexing, and it seems that a PEG is more usable than a context-free grammar in many situations. However, as I write this, PEGs are still relatively new, and tools are both rare and immature. This may change, of course, by the time you read this, but that’s the reason I don’t talk much about PEGs in this book. The best known PEG parsers are Packrat parsers.

(The line between the PEGs and more traditional parsers is not solid, however. ANTLR, for example, has incorporated many ideas from PEGs.)

5.3.4 Top-Down and Bottom-Up Parsing

There are many ways you can write a parser, and as a result, there are many kinds of Parser Generators out there, many with interesting differences. One of the biggest distinguishing features, however, is whether a parser is top-down or bottom-up. This affects not just the way it works, but also the kinds of grammars it can work with.

A top-down parser begins with the highest level rule in the grammar, and uses it to decide what to try and match. So, in an event list grammar like this:

![]()

with input like this:

the parser would work by first trying to match an eventBlock and therefore looking for an event keyword. Once it sees the event keyword, it then knows it wants to match an eventDec and looks inside that rule to know if it needs to match an identifier. In short, a top-down parser uses the rules as goals to direct it what to look for.

It won’t shock you if I say that a bottom-up parser does the opposite. It starts by reading the event keyword, then checks if the input so far is enough to match a rule. As it isn’t (yet), it puts it aside (this is called shifting) and takes the next token (an identifier). This is still not enough to match anything, so it shifts again. But with the second identifier, it can now match the eventDec rule, so it can now reduce the two identifiers to an eventDec. It similarly recognizes the second line of input; then, when it reaches the end keyword, it can reduce the whole expression to an event block.

You will often hear top-down parsers referred to as LL parsers and bottom-up parsers as LR parsers. The first letter refers to the direction in which the input is scanned, and the second to how the rules are recognized (L is left-to-right, i.e. top-down, R is right-to-left, hence bottom-up). You will also hear bottom-up parsing referred to as shift-reduce parsing, as the shift-reduce approach is the most likely approach to bottom-up parsing that you’ll run into. There are a number of variants of LR parsers, such as LALR, GLR, and SLR. I’m not going to go into the details of these variations here.

Bottom-up parsers are usually considered harder to write and understand than top-down ones. This is because most people find it harder to visualize the order in which the rules are processed with a bottom-up approach. While you don’t have to worry about writing a parser if you use a Parser Generator, you often have to understand, roughly, how it works in order to debug problems. Probably the best-known family of Parser Generators is the Yacc family, which is a bottom-up (LALR) parser.

The recursive descent algorithm is a top-down parsing algorithm. As a result, Recursive Descent Parser is a top-down parser, as is Parser Combinator. If that’s not enough, the ANTLR Parser Generator is also based on recursive descent, and is thus top-down.

The big disadvantage for top-down parsers is that they cannot deal with left recursion, which is a rule of the form:

expr: expr '+' expr;

Rules like this push the parser into an endless recursion trying to match expr. People disagree about how big a problem this limitation is in practice. There is a simple, mechanical technique called left-factoring that you can use to get rid of left recursion, but the result is a grammar that isn’t as easy to follow. The good news for top-down parsers, however, is that you only really run into this problem when dealing with Nested Operator Expressions, and once you understand the idioms for Nested Operator Expressions, you can churn them out relatively mechanically. The resulting grammar will still be not as clear as for a bottom-up parser, but knowing the idiom will get you there much quicker.

In general, different Parser Generators have various restrictions on the kind of grammars they can handle. These restrictions are driven by the parsing algorithms they use. There are also plenty of other differences, such as how you write actions, how you can move data up or down the parse tree, and what the grammar syntax is like (BNF vs. EBNF). All of these affect how you write your grammar. Perhaps the most important point is to realize that you shouldn’t treat the grammar as a fixed definition of the DSL. Often, you’ll need to alter the grammar to make the output production work better. Like any other code, the grammar will change depending on what you want to do with it.

If you are pretty comfortable with these concepts, they probably play an important role in deciding which parser tool to use. For more casual users, they probably don’t make a difference as to which tool to use, but are useful to bear in mind as they alter how you work with the chosen tool.

5.4 Mixing-in Another Language

One of the biggest dangers that you face with an external DSL is that it may accidentally evolve to become a general-purpose language. Even if things don’t deteriorate that far, a DSL can easily become overly complex, particularly if you have a lot of special cases that need particular treatment but are only used rarely.

Imagine we have a DSL that allocates sales leads to salesmen based on the kind of product that’s being asked for and the state in which the customer is based. We might have rules like:

scott handles floor_wax in WA;

helen handles floor_wax desert_topping in AZ NM;

brian handles desert_topping in WA OR ID MT;

otherwise scott

Now, what happens if Scott starts regularly playing golf with a big shot of Baker Industries, which gives him links into all sorts of companies called Baker This and Baker That? We decide to handle this problem by assigning to him all floor wax leads with companies whose names start with “baker” in the New England states.

There may be a dozen of special cases like this, all of which need extending the DSL in a particular direction. But including special tweaks for individual cases may add a lot of complication to the DSL. For those rare cases, it’s often worthwhile to handle them using a general-purpose language by using Foreign Code. Foreign Code embeds a small bit of a general-purpose language into the DSL. This code isn’t parsed by the DSL’s parser; rather, it is just slurped as a string and put into the Semantic Model for later processing. Here, this may lead to a rule like this (using Javascript as the foreigner):

scott handles floor_wax in MA RI CT when {/^Baker/.test(lead.name)};

This isn’t as clear as extending the DSL would be, but this mechanism can handle a wide range of cases. Should regex matching become a common condition, we can always extend the language later.

In this case, the general-purpose language I’ve used is Javascript. Using a dynamic language is useful for Foreign Code because it allows you to read and interpret the DSL script. You can also do Foreign Code with a static language, but then you have to use code generation and weave the host code into the generated code. This technique is familiar to people who use Parser Generators since this is how most Parser Generators work.

This example uses general-purpose code, but you can also use the same technique with another DSL. This approach would allow you to use different DSLs for different aspects of your problem—which very much fits the philosophy of using several small DSLs rather than one larger DSL.

Sadly, using multiple external DSLs together in this way isn’t very easy to do with current technology. Current parser technologies aren’t well suited to mixing different languages together with modular grammars (“Modular Grammars,” p. 339).

One of the problems with using Foreign Code is that you need to tokenize the Foreign Code differently to how you scan the code in your main language, so you need to use some approach of Alternative Tokenization.

The simplest approach for Alternative Tokenization is to quote the embedded code inside some clear delimiters that can be spotted by the tokenizer and thus slurped as a single string, as I did above by putting the Javascript between curly brackets. Such an approach allows you to grab the different text easily, but may add some noise to the language.

Alternative Tokenization isn’t just for handling Foreign Code. There are cases where, depending on the parsing context, you may want to interpret what should usually be a keyword as part of a name, such as state initial state. Quoting can do the trick (state "initial state"), but the other implementations of Alternative Tokenization which I discuss in that pattern may involve less syntactic noise.

5.5 XML DSLs

Right at the beginning of this book, I argued that many of the XML configuration files that we deal with are effectively DSLs. Other than an occasional snark, I haven’t talked any more about XML DSLs yet, holding my fire till I’ve had a chance to talk more about external DSLs.

I’m not saying that all configuration files are DSLs. In particular, I like to draw a distinction between property lists and DSLs. A property list is a simple list of key/value pairs, perhaps organized into categories. There’s not much syntactic structure here—none of that mysterious language nature that’s key to something being a DSL. (Although I will say that XML is too noisy for property lists—I much prefer the INI file approach for things like that.)

Many configuration files do have a language nature to them, and are thus DSLs. If done in XML, I do see them as external DSLs. XML isn’t a programming language; it’s a syntactic structure with no semantics. Therefore, we process it by reading the code into tokens rather than interpreting it for execution. DOM processing is essentially Tree Construction, SAX processing leads to Embedded Translation. I think of XML as a carrier syntax for the DSL, in much the same way that an internal DSL’s host language provides a carrier syntax. (An internal DSL also provides carrier semantics.)

My problem with XML as a carrier syntax is that it introduces far too much syntactic noise—lots of angle brackets, quotes, and slashes. Any nesting element needs both opening and closing tags. The result is too many characters expended on syntactic structure as opposed to real content. This makes it much harder to understand what the code is trying to say—which spoils the whole purpose of DSLs.

Despite this, there are a couple of arguments for XML. The first one is that humans shouldn’t have to write XML in the first place—special UIs should capture the information and just use XML as a human-readable serialization mechanism. This is a reasonable argument, although it takes us away from the DSL territory, with XML becoming a serialization mechanism rather than a language. A particular task may handled with a forms-and-fields UI as an alternative to using a DSL. What I have seen is much talk of having a UI over XML, but not so much action. If you spend a significant amount of time looking at XML (or diffs of XML), then the fact that you have a UI is incidental.

I often hear the point that XML parsers exist off-the-shelf, and as a result you don’t need to write your own. I think this argument is rather flawed, stemming from a confusion about what parsing is. In this book, I look at parsing as the whole route from input text to the Semantic Model. The XML parser only takes us part of the way—typically to a DOM. We still have to write code to traverse the DOM and do something useful. This is what Parser Generators can also do; ANTLR can easily take some input text and produce a syntax tree—which is the equivalent of the DOM. My experience is that, once you get moderately familiar with a Parser Generator, it takes no longer to use it than XML parser tools. Another point is that programmers are typically more familiar with XML parsing libraries than Parser Generators, but my view is that the time cost of learning to use a Parser Generator is a price worth paying.

One irritation with dealing with custom external DSLs is the inconsistency that they breed when dealing with things like quoting and escaping. Anyone who’s spent time working with Unix configuration files appreciates this annoyance, and XML does provide a single scheme that works very solidly.

I’ve generally skimmed over error handling and diagnostics in this book, but that shouldn’t be a reason to ignore the fact that XML processors usually do a good job here. You’ll have to work harder to get good diagnostics with a typical custom language; how hard depends on how good a parser toolkit you’re working with.

XML comes with technologies that allow you to easily check if the XML is reasonable without executing it, by comparing it to a schema. Various schema formats exist for XML: DTDs, XML Schema, Relax NG, all of which can check various things about the XML and also support more intelligent editing tools. (I write this book in XML and welcome the support that a Relax NG schema provides to my Emacs.)

Apart from parser tools that generate trees or events, you can also get binding interfaces that can easily translate XML data to fields in objects. These are less useful for DSLs, as the structure of the Semantic Model will rarely match that of the DSL to allow you to bind XML elements to the Semantic Model. You may be able to use binding with a translation layer, but it’s doubtful that this will buy you much over walking an XML tree.

If you use a Parser Generator, then the grammar DSL can define many of the checks that an XML schema provides. But few tools can take advantage of a grammar. We can write something ourselves, of course, but the plus of XML is that such tools already exist for it. Often, an inferior but prevalent approach ends up being more useful than superior technologies.

I concede the point, but still believe that the syntactic noise of XML is too much for a DSL. The key to a DSL is readability; tooling helps with writing, but it’s the reading that really counts. XML has its virtues—it’s really good at text markup like this book—but as a DSL carrier syntax it just imposes too much noise for my taste.

This raises a connected point of other carrier syntaxes. Some of these syntaxes have got traction recently as ways to textually encode structured data; good examples are JSON (www.json.org) and YAML (www.yaml.org). Many people, including myself, like these syntaxes because they carry much less syntactic noise than XML. However, these languages are very much oriented towards structuring data, and as a result lack the flexibility you need to have a truly fluent language. A DSL is different from a data serialization, just like a fluent API is different from a command-query API. Fluency is important for a DSL to be easily readable, and a data serialization format makes too many compromises to work well in that context.