Putting Statistical Size and Accuracy Together

Size is certainly the most important thing in a statistical parameter, for the reasons discussed above. However, practically, you need a combination of size and relative accuracy together before you can say much about your analysis. Consider the following possibilities:

- A relatively large statistic that is relatively accurate (compared to some range or benchmark like zero):
  - This is fine, because the statistic is accurate enough. You can proceed to interpret the size.
  - An example may be a moderate correlation of, say, .35 with p < .01. The accuracy frees you to interpret the size.
  - Remember that a big statistic is not necessarily good – a big decline in patient health in a drug trial is not good. However, it is practically important.

- A relatively large statistic that is inaccurate (either it cannot be distinguished from 0 or it has a very wide confidence interval, which is the low power problem):
  - This is not very good. The inaccuracy basically undermines the size.
  - Take for example the same correlation of .35 as above, but now p = .26. The wide confidence interval means that you cannot trust the statistic to be what the point estimate guesses it to be: put simply, the true value could be any of a wide range of other values.
  - You should mistrust this and look for more evidence or more accurate tests.
  - Note: You might conclude that a statistic with a relatively wide confidence interval is not trustworthy even if the interval excludes 0. Say you have a correlation of .52, but its confidence interval is .05 to .99. This range spans almost the entire positive correlation range! The interval says that the correlation is positive, yes, but its true size is very uncertain.

- A relatively small statistic that has high accuracy (the high power problem):
  - As discussed earlier this is due to high power. It is not a problem so long as the analyst understands that the statistic remains insubstantial in size.
  - A small correlation of .06 with p < .0001 is an example.
  - The analyst should accept that he or she has an accurately small statistic and interpret it in this light.

- A relatively small, inaccurate statistic: The issue here may or may not be low power. It depends on the width of the confidence interval. If the p-value is relatively high (telling you that the statistic is indistinguishable from zero) then look at the confidence interval itself:
  - If the confidence interval is actually quite narrow and the statistic is small, then the conclusion that the statistic is basically equivalent to zero is justified. Say you have a correlation of r = .03 (confidence interval -.01 to .07). This is not a very wide range and it includes zero. Conclude this is a negligible correlation.
  - However, if the confidence interval is very wide then conclude you have low power and cannot be sure whether the statistic is low or high. Take the same point estimate correlation as above (r = .03) but this time with a huge 95% interval of -.42 to .48. This is a very wide range: yes it includes 0, but frankly the test lacks the power to be sure of much. Don’t conclude anything except that more research may be needed.
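
The possibilities listed above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a procedure from the text: the interval uses the standard Fisher z approximation for a Pearson correlation, the function names `correlation_ci` and `classify` are made up for this example, the cut-offs `small=0.10` and `wide=0.40` are arbitrary, and the sample sizes are chosen only so that the intervals roughly echo the examples in the list.

```python
import math
from statistics import NormalDist


def correlation_ci(r, n, level=0.95):
    """Approximate confidence interval for a Pearson correlation.

    Uses the Fisher z-transformation, which assumes roughly bivariate
    normal data; r is the sample correlation and n the sample size.
    """
    if n <= 3:
        raise ValueError("n must be greater than 3")
    if not -1.0 < r < 1.0:
        raise ValueError("r must lie strictly between -1 and 1")
    z = math.atanh(r)                # Fisher z of the point estimate
    se = 1.0 / math.sqrt(n - 3)      # standard error on the z scale
    crit = NormalDist().inv_cdf(0.5 + level / 2.0)
    return math.tanh(z - crit * se), math.tanh(z + crit * se)


def classify(r, n, small=0.10, wide=0.40):
    """Map a correlation onto the cases in the list above.

    The cut-offs `small` and `wide` are hypothetical, chosen only for
    this illustration; they are not taken from the text.
    """
    lo, hi = correlation_ci(r, n)
    excludes_zero = lo > 0 or hi < 0
    narrow = (hi - lo) <= wide
    if abs(r) >= small:
        if excludes_zero and narrow:
            return "large and accurate: interpret the size"
        return "large but inaccurate: look for more evidence"
    if not narrow:
        return "small with a wide interval: low power, conclude nothing"
    if excludes_zero:
        return "small but accurate: interpret it as small"
    return "small with a narrow interval around zero: negligible"


if __name__ == "__main__":
    # Sample sizes are assumptions picked to roughly echo the examples above.
    for r, n in [(0.35, 100), (0.35, 12), (0.06, 10000), (0.03, 2400), (0.03, 20)]:
        lo, hi = correlation_ci(r, n)
        print(f"r = {r:.2f}, n = {n:>5}: CI [{lo:+.2f}, {hi:+.2f}] -> {classify(r, n)}")
```

The same point estimate lands in different cases purely because the sample size, and therefore the interval width, changes. In real work the cut-offs for "small" and "wide" should come from the subject matter rather than from fixed numbers like these.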

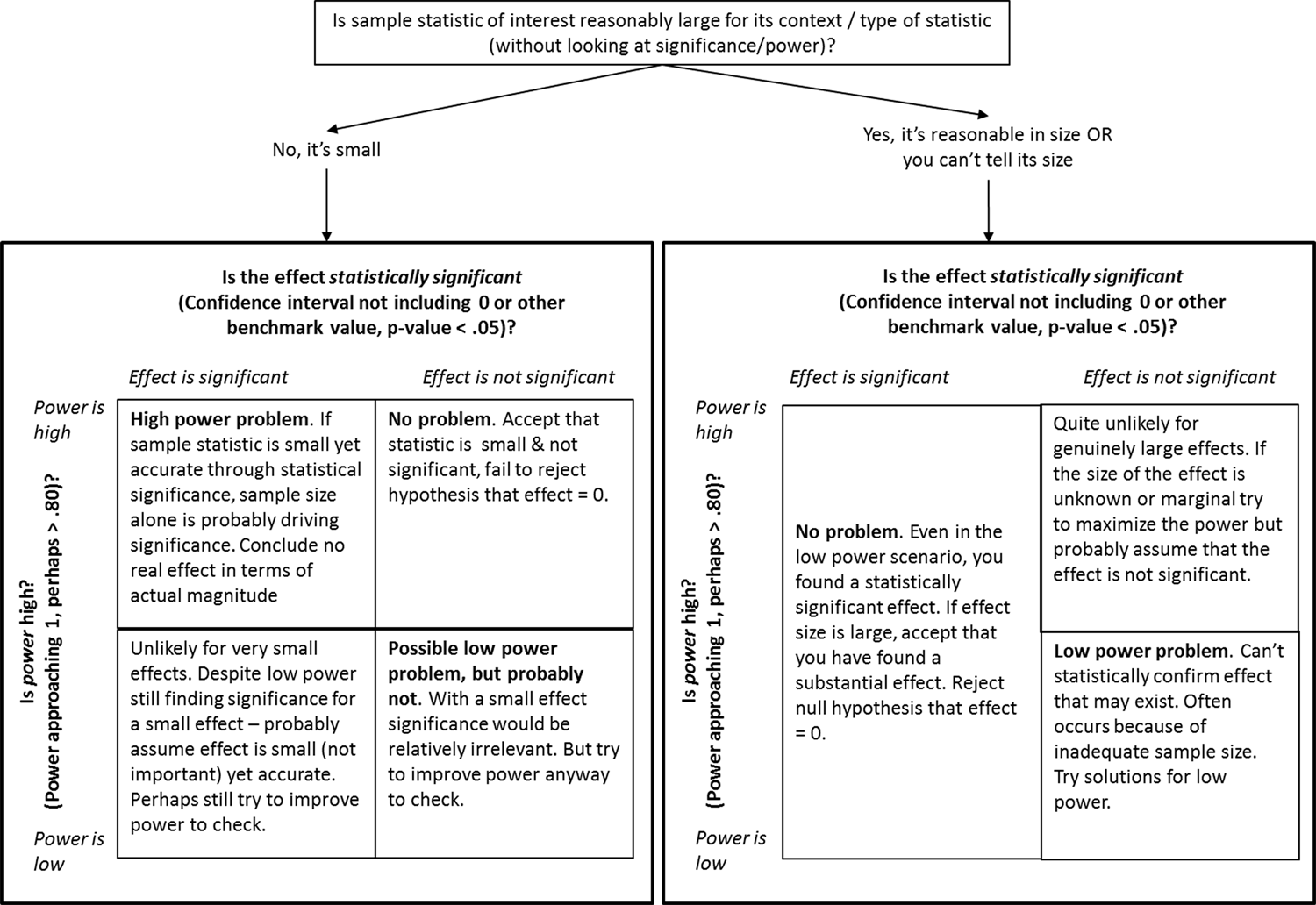

Figure 12.18 (Decision diagram for statistical size, significance and power) is a complex diagram summarizing the various options discussed in this chapter when thinking about size and significance in the context of post-hoc power. Because of these complex power issues, significance testing is very dangerous, a point that I re-emphasize in the next section.

Figure 12.18 Decision diagram for statistical size, significance and power

Last updated: April 18, 2017
