Chapter 15. Ray Casting and Rasterization

15.1. Introduction

Previous chapters considered modeling and interacting with 2D and 3D scenes using an underlying renderer provided by WPF. Now we focus on writing our own physically based 3D renderer.

Rendering is integration. To compute an image, we need to compute how much light arrives at each pixel of the image sensor inside a virtual camera. Photons transport the light energy, so we need to simulate the physics of the photons in a scene. However, we can’t possibly simulate all of the photons, so we need to sample a few of them and generalize from those to estimate the integrated arriving light. Thus, one might also say that rendering is sampling. We’ll tie this integration notion of sampling to the alternative probability notion of sampling presently.

In this chapter, we look at two strategies for sampling the amount of light transported along a ray that arrives at the image plane. These strategies are called ray casting and rasterization. We’ll build software renderers using each of them. We’ll also build a third renderer using a hardware rasterization API. All three renderers can be used to sample the light transported to a point from a specific direction. A point and direction define a ray, so in graphics jargon, such sampling is typically referred to as “sampling along a ray,” or simply “sampling a ray.”

There are many interesting rays along which to sample transport, and the methods in this chapter generalize to all of them. However, here we focus specifically on sampling rays within a cone whose apex is at a point light source or a pinhole camera aperture. The techniques within these strategies can also be modified and combined in interesting ways. Thus, the essential idea of this chapter is that rather than facing a choice between distinct strategies, you stand to gain a set of tools that you can modify and apply to any rendering problem. We emphasize two aspects in the presentation: the principle of sampling as a mathematical tool and the practical details that arise in implementing real renderers.

Of course, we’ll take many chapters to resolve the theoretical and practical issues raised here. Since graphics is an active field, some issues will not be thoroughly resolved even by the end of the book. In the spirit of servicing both principles and practice, we present some ideas first with pseudocode and mathematics and then second in actual compilable code. Although minimal, that code follows reasonable software engineering practices, such as data abstraction, to stay true to the feel of a real renderer. If you create your own programs from these pieces (which you should) and add the minor elements that are left as exercises, then you will have three working renderers at the end of the chapter. Those will serve as a scalable code base for your implementation of other algorithms presented in this book.

The three renderers we build will be simple enough to let you quickly understand and implement them in one or two programming sessions each. By the end of the chapter, we’ll clean them up and generalize the designs. This generality will allow us to incorporate changes for representing complex scenes and the data structures necessary for scaling performance to render those scenes.

We assume that throughout the subsequent rendering chapters you are implementing each technique as an extension to one of the renderers that began in this chapter. As you do, we recommend that you adopt two good software engineering practices.

1. Make a copy of the renderer before changing it (this copy becomes the reference renderer).

2. Compare the image result after a change to the preceding, reference result.

Techniques that enhance performance should generally not reduce image quality. Techniques that enhance simulation accuracy should produce noticeable and measurable improvements. By comparing the “before” and “after” rendering performance and image quality, you can verify that your changes were implemented correctly.

Comparison begins right in this chapter. We’ll consider three rendering strategies here, but all should generate identical results. We’ll also generalize each strategy’s implementation once we’ve sketched it out. When debugging your own implementations of these, consider how mismatched results between programs indicate potential underlying program errors. This is yet another instance of the Visual Debugging principle.

15.2. High-Level Design Overview

We start with a high-level design in this section. We’ll then pause to address the practical issues of our programming infrastructure before reducing that high-level design to the specific sampling strategies.

15.2.1. Scattering

Light that enters the camera and is measured arrives from points on surfaces in the scene that either scattered or emitted the light. Those points lie along the rays that we choose to sample for our measurement. Specifically, the points casting light into the camera are the intersections in the scene of rays, whose origins are points on the image plane, that passed through the camera’s aperture.

To keep things simple, we assume a pinhole camera with a virtual image plane in front of the center of projection, and an instantaneous exposure. This means that there will be no blur in the image due to defocus or motion. Of course, an image with a truly zero-area aperture and zero-time exposure would capture zero photons, so we take the common graphics approximation of estimating the result of a small aperture and exposure from the limiting case, which is conveniently possible in simulation, albeit not in reality.

We also assume that the virtual sensor pixels form a regular square grid and estimate the value that an individual pixel would measure using a single sample at the center of that pixel’s square footprint. Under these assumptions, our sampling rays are the ones with origins at the center of projection (i.e., the pinhole) and directions through each of the sensor-pixel centers.1

1. For the advanced reader, we acknowledge Alvy Ray Smith’s “a pixel is not a little square”—that is, no sample is useful without its reconstruction filter—but contend that Smith was so successful at clarifying this issue that today “sample” now is properly used to describe the point-sample data to which Smith referred, and “pixel” now is used to refer to a “little square area” of a display or sensor, whose value may be estimated from samples. We’ll generally use “sensor pixel” or “display pixel” to mean the physical entity and “pixel” for the rectangular area on the image plane.

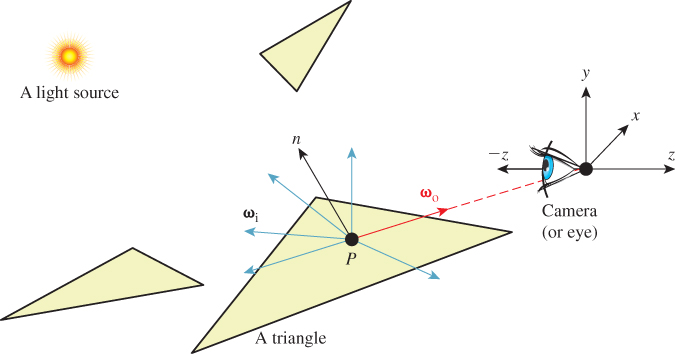

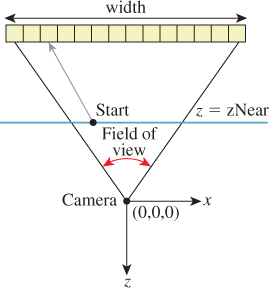

Finally, to keep things simple we chose a coordinate frame in which the center of projection is at the origin and the camera is looking along the negative z-axis. We’ll also refer to the center of projection as the eye. See Section 15.3.3 for a formal description and Figure 15.1 for a diagram of this configuration.

Figure 15.1: A specific surface location P that is visible to the camera, incident light at P from various directions {ωi}, and the exitant direction ωo toward the camera.
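Under the assumptions above (a pinhole at the origin, a camera looking along the negative z-axis, and one sample at each pixel center), a sampling ray can be sketched as follows. This is only an illustration: the names Vec3, EyeRay, and eyeRayThroughPixel are hypothetical, and we additionally assume an image plane at z = -1, a horizontal field of view fovX given in radians, and a row index y that increases downward.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

struct EyeRay {
    Vec3 origin;     // the pinhole (center of projection)
    Vec3 direction;  // unit vector through the pixel center
};

// Hypothetical helper: the ray from the eye through the center of pixel (x, y).
// Assumptions (not from the text): image plane at z = -1, fovX is the
// horizontal field of view in radians, and row y = 0 is the top of the image.
EyeRay eyeRayThroughPixel(int x, int y, int width, int height, float fovX) {
    assert(width > 0 && height > 0);

    // Half-extents of the image plane rectangle at z = -1.
    const float halfW = std::tan(fovX * 0.5f);
    const float halfH = halfW * float(height) / float(width);

    // Pixel center mapped to [-1, 1] on each axis. The +0.5 selects the center
    // of the pixel's square footprint; v is flipped so that +y points up.
    const float u = ((x + 0.5f) / width) * 2.0f - 1.0f;
    const float v = 1.0f - ((y + 0.5f) / height) * 2.0f;

    Vec3 d = { u * halfW, v * halfH, -1.0f };
    const float len = std::sqrt(d.x * d.x + d.y * d.y + d.z * d.z);
    d = { d.x / len, d.y / len, d.z / len };

    return { { 0.0f, 0.0f, 0.0f }, d };
}
```

With a 1 × 1 image the single pixel center lies on the view axis, so the ray direction is (0, 0, -1) regardless of the field of view.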

The light that arrived at a specific sensor pixel from a scene point P came from some direction. For example, the direction from the brightest light source in the scene provided a lot of light. But not all light arrived from the brightest source. There may have been other light sources in the scene that were dimmer. There was also probably a lot of light that previously scattered at other points and arrived at P indirectly. This tells us two things. First, we ultimately have to consider all possible directions from which light may have arrived at P to generate a correct image. Second, if we are willing to accept some sampling error, then we can select a finite number of discrete directions to sample. Furthermore, we can probably rank the importance of those directions, at least for lights, and choose a subset that is likely to minimize sampling error.

We don’t expect you to have perfect answers to these, but we want you to think about them now to help develop intuition for this problem: What kind of errors could arise from sampling a finite number of directions? What makes them errors? What might be good sampling strategies? How do the notions of expected value and variance from statistics apply here? What about statistical independence and bias?

Let’s start by considering all possible directions for incoming light in pseudocode and then return to the ranking of discrete directions when we later need to implement directional sampling concretely.

To consider the points and directions that affect the image, our program has to look something like Listing 15.1.

Listing 15.1: High-level rendering structure.

    for each visible point P with direction ωo from it to pixel center (x, y):
        sum = 0
        for each incident light direction ωi at P:
            sum += light scattered at P from ωi to ωo
        pixel[x, y] = sum

15.2.2. Visible Points

Now we devise a strategy for representing points in the scene, finding those that are visible and scattering the light incident on them to the camera.

For the scene representation, we’ll work within some of the common rendering approximations described in Chapter 14. None of these are so fundamental as to prevent us from later replacing them with more accurate models.

Assume that we only need to model surfaces that form the boundaries of objects. “Object” is a subjective term; a surface is technically the interface between volumes with homogeneous physical properties. Some of these objects are what everyday language recognizes as such, like a block of wood or the water in a pool. Others are not what we are accustomed to considering as objects, such as air or a vacuum.

We’ll model these surfaces as triangle meshes. We ignore the surrounding medium of air and assume that all the meshes are closed so that from the outside of an object one can never see the inside. This allows us to consider only single-sided triangles. We choose the convention that the vertices of a triangular face, seen from the outside of the object, are in counterclockwise order around the face. To approximate the shading of a smooth surface using this triangle mesh, we model the surface normal at a point on a triangle pointing in the direction of the barycentric interpolation of prespecified normal vectors at its vertices. These normals only affect shading, so silhouettes of objects will still appear polygonal.
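The interpolated shading normal can be sketched in a few lines. This is a minimal illustration rather than the renderer's actual code: the names Vec3 and interpolateNormal are hypothetical, and we assume the barycentric weights of the shaded point are already known, are nonnegative, and sum to 1.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { float x, y, z; };

// Shading normal at a point with barycentric weights (w0, w1, w2) relative to
// the triangle's three vertices; n0, n1, n2 are the prespecified vertex normals.
Vec3 interpolateNormal(const Vec3& n0, const Vec3& n1, const Vec3& n2,
                       float w0, float w1, float w2) {
    assert(std::fabs(w0 + w1 + w2 - 1.0f) < 1e-4f);

    const Vec3 n = { w0 * n0.x + w1 * n1.x + w2 * n2.x,
                     w0 * n0.y + w1 * n1.y + w2 * n2.y,
                     w0 * n0.z + w1 * n1.z + w2 * n2.z };

    // A weighted sum of unit vectors is generally shorter than unit length,
    // so only its direction is kept: renormalize before shading with it.
    const float len = std::sqrt(n.x * n.x + n.y * n.y + n.z * n.z);
    assert(len > 0.0f);
    return { n.x / len, n.y / len, n.z / len };
}
```

At a vertex (weights 1, 0, 0) the function returns that vertex's normal unchanged. Halfway along an edge it returns the normalized average of the two endpoint normals.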

Chapter 27 explores how surfaces scatter light in great detail. For simplicity, we begin by assuming all surfaces scatter incoming light equally in all directions, in a sense that we’ll make precise presently. This kind of scattering is called Lambertian, as you saw in Chapter 6, so we’re rendering a Lambertian surface. The color of a surface is determined by the relative amount of light scattered at each wavelength, which we represent with a familiar RGB triple.

This surface mesh representation describes all the potentially visible points at the set of locations {P}. To render a given pixel, we must determine which potentially visible points project to the center of that pixel. We then need to select the scene point closest to the camera. That point is the actually visible point for the pixel center. The radiance—a measure of light that’s defined precisely in Section 26.7.2, and usually denoted with the letter L—arriving from that point and passing through the pixel is proportional to the light incident on the point and the point’s reflectivity.

To find the nearest potentially visible point, we first convert the outer loop of Listing 15.1 (see the next section) into an iteration over both pixel centers (which correspond to rays) and triangles (which correspond to surfaces). A common way to accomplish this is to replace “for each visible point” with two nested loops, one over the pixel centers and one over the triangles. Either can be on the outside. Our choice of which is the new outermost loop has significant structural implications for the rest of the renderer.

15.2.3. Ray Casting: Pixels First

Listing 15.2: Ray-casting pseudocode.

    for each pixel position (x, y):
        let R be the ray through (x, y) from the eye
        for each triangle T:
            let P be the intersection of R and T (if any)
            sum = 0
            for each direction:
                sum += . . .
            if P is closer than previous intersections at this pixel:
                pixel[x, y] = sum

Consider the strategy where the outermost loop is over pixel centers, shown in Listing 15.2. This strategy is called ray casting because it creates one ray per pixel and casts it at every surface. It generalizes to an algorithm called ray tracing, in which the innermost loop recursively casts rays at each direction, but let’s set that aside for the moment.

Ray casting lets us process each pixel to completion independently. This suggests parallel processing of pixels to increase performance. It also encourages us to keep the entire scene in memory, since we don’t know which triangles we’ll need at each pixel. The structure suggests an elegant way of eventually processing the aforementioned indirect light: Cast more rays from the innermost loop.
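The visibility part of Listing 15.2 (cast one ray at every triangle and keep the nearest hit) can be sketched as below. The shading sum is omitted. The names Vec3, Tri, intersect, and castRay are hypothetical, and the intersection routine uses the Möller–Trumbore test, which is one common choice and an assumption on our part rather than the method this chapter develops. For brevity the sketch reports hits on both sides of a triangle.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

struct Vec3 { float x, y, z; };
struct Tri  { Vec3 v0, v1, v2; };

static Vec3 sub(const Vec3& a, const Vec3& b) {
    return { a.x - b.x, a.y - b.y, a.z - b.z };
}
static Vec3 cross(const Vec3& a, const Vec3& b) {
    return { a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x };
}
static float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Distance along the ray (origin o, unit direction d) to triangle t, or
// infinity if the ray misses it (Moller-Trumbore test, two-sided).
float intersect(const Vec3& o, const Vec3& d, const Tri& t) {
    const float inf = std::numeric_limits<float>::infinity();
    const Vec3 e1 = sub(t.v1, t.v0);
    const Vec3 e2 = sub(t.v2, t.v0);
    const Vec3 p = cross(d, e2);
    const float det = dot(e1, p);
    if (std::fabs(det) < 1e-8f) return inf;     // ray is parallel to the triangle

    const Vec3 s = sub(o, t.v0);
    const float u = dot(s, p) / det;            // first barycentric coordinate
    if (u < 0.0f || u > 1.0f) return inf;

    const Vec3 q = cross(s, e1);
    const float v = dot(d, q) / det;            // second barycentric coordinate
    if (v < 0.0f || u + v > 1.0f) return inf;

    const float dist = dot(e2, q) / det;
    return (dist > 0.0f) ? dist : inf;          // ignore hits behind the origin
}

// Index of the nearest triangle hit by the ray, or -1 if nothing is hit.
int castRay(const Vec3& o, const Vec3& d, const std::vector<Tri>& scene) {
    float closest = std::numeric_limits<float>::infinity();
    int nearest = -1;
    for (int i = 0; i < int(scene.size()); ++i) {
        const float dist = intersect(o, d, scene[i]);
        if (dist < closest) { closest = dist; nearest = i; }
    }
    return nearest;
}
```

Because the running minimum lives in a local variable, each ray is resolved without any per-pixel storage shared between triangles, which is the property that makes pixels independent of one another.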

15.2.4. Rasterization: Triangles First

Now consider the strategy where the outermost loop is over triangles shown in Listing 15.3. This strategy is called rasterization, because the inner loop is typically implemented by marching along the rows of the image, which are called rasters. We could choose to march along columns as well. The choice of rows is historical and has to do with how televisions were originally constructed. Cathode ray tube (CRT) displays scanned an image from left to right, top to bottom, the way that English text is read on a page. This is now a widespread convention: Unless there is an explicit reason to do otherwise, images are stored in row-major order, where the element corresponding to 2D position (x, y) is stored at index (x + y * width) in the array.

Listing 15.3: Rasterization pseudocode; O denotes the origin, or eyepoint.

     1  for each pixel position (x, y):
     2      closest[x, y] = ∞
     3
     4  for each triangle T:
     5      for each pixel position (x, y):
     6          let R be the ray through (x, y) from the eye
     7          let P be the intersection of R and T
     8          if P exists:
     9              sum = 0
    10              for each direction:
    11                  sum += . . .
    12              if the distance to P is less than closest[x, y]:
    13                  pixel[x, y] = sum
    14                  closest[x, y] = |P - O|

Rasterization allows us to process each triangle to completion independently.2 This has several implications. It means that we can render much larger scenes than we can hold in memory, because we only need space for one triangle at a time. It suggests triangles as the level of parallelism. The properties of a triangle can be maintained in registers or cache to avoid memory traffic, and only one triangle needs to be memory-resident at a time. Because we consider adjacent pixels consecutively for a given triangle, we can approximate derivatives of arbitrary expressions across the surface of a triangle by finite differences between pixels. This is particularly useful when we later become more sophisticated about sampling strategies because it allows us to adapt our sampling rate to the rate at which an underlying function is changing in screen space.

2. If you’re worried that to process one triangle we have to loop through all the pixels in the image, even though the triangle does not cover most of them, then your worries are well founded. See Section 15.6.2 for a better strategy. We’re starting this way to keep the code as nearly parallel to the ray-casting structure as possible.

Note that the conditional on line 12 in Listing 15.3 refers to the closest previous intersection at a pixel. Because that intersection was from a different triangle, that value must be stored in a 2D array that is parallel to the image. This array did not appear in our original pseudocode or the ray-casting design. Because we now touch each pixel many times, we must maintain a data structure for each pixel that helps us resolve visibility between visits. Only two distances are needed for comparison: the distance to the current point and to the previously closest point. We don’t care about points that have been previously considered but are farther away than the closest, because they are hidden behind the closest point and can’t affect the image. The closest array stores the distance to the previously closest point at each pixel. It is called a depth buffer or a z-buffer. Because computing the distance to a point is potentially expensive, depth buffers are often implemented to encode some other value that has the same comparison properties as distance along a ray. Common choices are –zP, the z-coordinate of the point P, and –1/zP. Recall that the camera is facing along the negative z-axis, so these are related to distance from the z = 0 plane in which the camera sits. For now we’ll use the more intuitive choice of distance from P to the origin, |P – O|.
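The closest array and its comparison might be wrapped up as in the following minimal sketch. The class name DepthBuffer and the method testAndSet are hypothetical, and the sketch stores the distance |P – O| directly, as the pseudocode does. It is written for a single thread; a parallel renderer would need the atomic access discussed next.

```cpp
#include <cassert>
#include <limits>
#include <vector>

// Hypothetical depth buffer: one distance per pixel, parallel to the image.
class DepthBuffer {
private:
    int m_width;
    int m_height;
    std::vector<float> m_closest;

public:
    // Every entry starts at infinity, so the first hit at a pixel always wins.
    DepthBuffer(int width, int height) :
        m_width(width), m_height(height),
        m_closest(width * height, std::numeric_limits<float>::infinity()) {}

    // If distance is nearer than the closest value recorded at (x, y), record
    // it and return true (the caller should then overwrite the pixel).
    // Otherwise leave the buffer unchanged and return false.
    bool testAndSet(int x, int y, float distance) {
        assert(x >= 0 && x < m_width && y >= 0 && y < m_height);
        float& closest = m_closest[x + y * m_width];
        if (distance < closest) {
            closest = distance;
            return true;
        }
        return false;
    }
};
```

A rasterizer loop would call testAndSet once per triangle per covered pixel and write the shaded value to the image only when it returns true.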

The depth buffer has the same dimensions as the image, so it consumes a potentially significant amount of memory. It must also be accessed atomically under a parallel implementation, so it is a potentially slow synchronization point. Chapter 36 describes alternative algorithms for resolving visibility under rasterization that avoid these drawbacks. However, depth buffers are by far the most widely used method today. They are extremely efficient in practice and have predictable performance characteristics. They also offer advantages beyond the sampling process. For example, the known depth at each pixel at the end of 3D rendering yields a “2.5D” result that enables compositing of multiple render passes and post-processing filters, such as artificial defocus blur.

This depth comparison turns out to be a fundamental idea, and it is now supported by special fixed-function units in graphics hardware. A huge leap in computer graphics performance occurred when this feature emerged in the early 1980s.

15.3. Implementation Platform

15.3.1. Selection Criteria

The kinds of choices discussed in this section are important. We want to introduce them now, and we want them all in one place so that you can refer to them later. Many of them will only seem natural to you after you’ve worked with graphics for a while. So read this section now, set it aside, and then read it again in a month.

In your studies of computer graphics you will likely learn many APIs and software design patterns. For example, Chapters 2, 4, 6, and 16 teach the 2D and 3D WPF APIs and some infrastructure built around them.

Teaching that kind of content is expressly not a goal of this chapter. This chapter is about creating algorithms for sampling light. The implementation serves to make the algorithms concrete and provide a test bed for later exploration. Although learning a specific platform is not a goal, learning the issues to consider when evaluating a platform is a goal; in this section we describe those issues.

We select one specific platform, a subset of the G3D Innovation Engine [http://g3d.sf.net] Version 9, for the code examples. You may use this one, or some variation chosen after considering the same issues weighed by your own goals and computing environment. In many ways it is better if your platform—language, compiler, support classes, hardware API—is not precisely the same as the one described here. The platform we select includes only a minimalist set of support classes. This keeps the presentation simple and generic, as suits a textbook. But you’re developing software on today’s technology, not writing a textbook that must serve independent of currently popular tools.

Since you’re about to invest a lot of work on this renderer, a richer set of support classes will make both implementation and debugging easier. You can compile our code directly against the support classes in G3D. However, if you have to rewrite it slightly for a different API or language, this will force you to actually read every line and consider why it was written in a particular manner. Maybe your chosen language has a different syntax than ours for passing a parameter by value instead of reference, for example. In the process of redeclaring a parameter to make this syntax change, you should think about why the parameter was passed by value in the first place, and whether the computational overhead or software abstraction of doing so is justified.

To avoid distracting details, for the low-level renderers we’ll write the image to an array in memory and then stop. Beyond a trivial PPM-file writing routine, we will not discuss the system-specific methods for saving that image to disk or displaying it on-screen in this chapter. Those are generally straightforward, but verbose to read and tedious to configure. The PPM routine is a proof of concept, but it is for an inefficient format and requires you to use an external viewer to check each result. G3D and many other platforms have image-display and image-writing procedures that can present the images that you’ve rendered more conveniently.

For the API-based hardware rasterizer, we will use a lightly abstracted subset of the OpenGL API that is representative of most other hardware APIs. We’ll intentionally skip the system-specific details of initializing a hardware context and exploiting features of a particular API or GPU. Those transient aspects can be found in your favorite API or GPU vendor’s manuals.

Although we can largely ignore the surrounding platform, we must still choose a programming language. It is wise to choose a language with reasonably high-level abstractions like classes and operator overloading. These help the algorithm shine through the source code notation.

It is also wise to choose a language that can be compiled to efficient native code. That is because even though performance should not be the ultimate consideration in graphics, it is a fairly important one. Even simple video game scenes contain millions of polygons and are rendered for displays with millions of pixels. We’ll start with one triangle and one pixel to make debugging easier and then quickly grow to hundreds of each in this chapter. The constant overhead of an interpreted language or a managed memory system cannot affect the asymptotic behavior of our program. However, it can be the difference between our renderer producing an image in two seconds or two hours . . . and debugging a program that takes two hours to run is very unpleasant.

Computer graphics code tends to combine high-level classes containing significant state, such as those representing scenes and objects, with low-level classes (a.k.a. “records”, “structs”) for storing points and colors that have little state and often expose that which they do contain directly to the programmer. A real-time renderer can easily process billions of those low-level classes per second. To support that, one typically requires a language with features for efficiently creating, destroying, and storing such classes. Heap memory management for small classes tends to be expensive and thwart cache efficiency, so stack allocation is typically the preferred solution. Language features for passing by value and by constant reference help the programmer to control cloning of both large and small class instances.

Finally, hardware APIs tend to be specified at the machine level, in terms of bytes and pointers (as abstracted by the C language). They also often require manual control over memory allocation, deallocation, types, and mapping to operate efficiently.

To satisfy the demands of high-level abstraction, reasonable performance for hundreds to millions of primitives and pixels, and direct manipulation of memory, we work within a subset of C++. Except for some minor syntactic variations, this subset should be largely familiar to Java and Objective C++ programmers. It is a superset of C and can be compiled directly as native (nonmanaged) C#. For all of these reasons, and because there is a significant tools and library ecosystem built for it, C++ happens to be the dominant language for implementing renderers today. Thus, our choice is consistent with showing you how renderers are really implemented.

Note that many hardware APIs also have wrappers for higher-level languages, provided by either the API vendor or third parties. Once you are familiar with the basic functionality, we suggest that it may be more productive to use such a wrapper for extensive software development on a hardware API.

15.3.2. Utility Classes

This chapter assumes the existence of obvious utility classes, such as those sketched in Listing 15.4. For these, you can use equivalents of the WPF classes, the Direct3D API versions, the built-in GLSL, Cg, and HLSL shading language versions, or the ones in G3D, or you can simply write your own. Following common practice, the Vector3 and Color3 classes denote the axes over which a quantity varies, but not its units. For example, Vector3 always denotes three spatial axes but may represent a unitless direction vector at one code location and a position in meters at another. We use a type alias to at least distinguish points from vectors (which are differences of points).

Listing 15.4: Utility classes.

    #include <limits>

    #define INFINITY (std::numeric_limits<float>::infinity())

    class Vector2 { public: float x, y; ... };
    class Vector3 { public: float x, y, z; ... };
    typedef Vector2 Point2;
    typedef Vector3 Point3;
    class Color3 { public: float r, g, b; ... };
    typedef Color3 Radiance3;   // alias: a Color3 holding radiance values
    typedef Color3 Power3;      // alias: a Color3 holding power values

    class Ray {
    private:
        Point3  m_origin;
        Vector3 m_direction;

    public:
        Ray(const Point3& org, const Vector3& dir) :
            m_origin(org), m_direction(dir) {}

        const Point3& origin() const { return m_origin; }
        const Vector3& direction() const { return m_direction; }
        ...
    };

Observe that some classes, such as Vector3, expose their representation through public member variables, while others, such as Ray, have a stronger abstraction that protects the internal representation behind methods. The exposed classes are the workhorses of computer graphics. Invoking methods to access their fields would add significant syntactic distraction to the implementation of any function. Since the byte layouts of these classes must be known and fixed to interact directly with hardware APIs, they cannot be strong abstractions and it makes sense to allow direct access to their representation. The classes that protect their representation are ones whose representation we may (and truthfully, will) later want to change. For example, the internal representation of Triangle in this listing is an array of vertices. If we found that we computed the edge vectors or face normal frequently, then it might be more efficient to extend the representation to explicitly store those values.
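To illustrate what a protected representation buys us, here is a minimal sketch of a Triangle class in that style. It is not the chapter's actual class: the accessor names vertex and edge are hypothetical, and Vector3 is redeclared as a bare struct so the sketch stands alone.

```cpp
#include <cassert>

struct Vector3 { float x, y, z; };
typedef Vector3 Point3;

// Hypothetical Triangle whose representation is hidden behind methods.
class Triangle {
private:
    Point3 m_vertex[3];   // current representation: just the three vertices

public:
    Triangle(const Point3& v0, const Point3& v1, const Point3& v2) {
        m_vertex[0] = v0;
        m_vertex[1] = v1;
        m_vertex[2] = v2;
    }

    const Point3& vertex(int i) const {
        assert(i >= 0 && i < 3);
        return m_vertex[i];
    }

    // Edge vector from vertex 0 to vertex i (i = 1 or 2), computed on demand.
    // If profiling showed this to be hot, the class could store the edges as
    // members and return them here without changing any calling code.
    Vector3 edge(int i) const {
        assert(i == 1 || i == 2);
        const Point3& a = m_vertex[0];
        const Point3& b = m_vertex[i];
        const Vector3 e = { b.x - a.x, b.y - a.y, b.z - a.z };
        return e;
    }
};
```

Callers see only vertex and edge, so switching between computing and caching the edge vectors is a local change to the class.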

For images, we choose the underlying representation to be an array of Radiance3, each array entry representing the radiance incident at the center of one pixel in the image. We then wrap this array in a class to present it as a 2D structure with appropriate utility methods in Listing 15.5.

Listing 15.5: Image class.

    class Image {
    private:
        int m_width;
        int m_height;
        std::vector<Radiance3> m_data;

        int PPMGammaEncode(float radiance, float displayConstant) const;

    public:
        Image(int width, int height) :
            m_width(width), m_height(height), m_data(width * height) {}

        int width() const { return m_width; }

        int height() const { return m_height; }

        void set(int x, int y, const Radiance3& value) {
            m_data[x + y * m_width] = value;
        }

        const Radiance3& get(int x, int y) const {
            return m_data[x + y * m_width];
        }

        void save(const std::string& filename, float displayConstant=15.0f) const;
    };

Under C++ conventions and syntax, the & following a type in a declaration indicates that the corresponding variable or return value will be passed by reference. The m_ prefix avoids confusion between member variables and methods or parameters with similar names. The std::vector class is the dynamic array from the standard library.

One could imagine a more feature-rich image class with bounds checking, documentation, and utility functions. Extending the implementation with these is a good exercise.

The set and get methods follow the historical row-major mapping from a 2D to a 1D array. Although we do not need it here, note that the reverse mapping from a 1D index i to the 2D indices (x, y) is

x = i % width; y = i / width

where % is the C++ integer modulo operation.
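Both directions of the mapping fit in a few lines. The function names indexOf and coordinatesOf are hypothetical and are not part of the Image class above.

```cpp
#include <cassert>

// Row-major mapping from 2D pixel coordinates to a 1D array index.
int indexOf(int x, int y, int width) {
    assert(width > 0 && x >= 0 && x < width && y >= 0);
    return x + y * width;
}

// Reverse mapping from a 1D index back to 2D pixel coordinates.
void coordinatesOf(int i, int width, int& x, int& y) {
    assert(width > 0 && i >= 0);
    x = i % width;   // column: what remains after removing whole rows
    y = i / width;   // row: integer division discards the remainder
}
```

For example, in an image 10 pixels wide, pixel (3, 2) maps to index 3 + 2 · 10 = 23, and index 23 maps back to x = 23 % 10 = 3 and y = 23 / 10 = 2.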

Our Image class stores physically meaningful values. The natural measurement of the light arriving along a ray is in terms of radiance, whose definition and precise units are described in Chapter 26. The image typically represents the light about to fall onto each pixel of a sensor or area of a piece of film. It doesn’t represent the sensor response process.

Displays and image files tend to work with arbitrarily scaled 8-bit display values that map nonlinearly to radiance. For example, if we set the display pixel value to 64, the display pixel does not emit twice the radiance that it does when we set the same pixel to 32. This means that we cannot display our image faithfully by simply rescaling radiance to display values. In fact, the relationship involves an exponent commonly called gamma, as described briefly below and at length in Section 28.12.

Assume some multiplicative factor d that rescales the radiance values in an image so that the largest value we wish to represent maps to 1.0 and the smallest maps to 0.0. This fills the role of the camera’s shutter and aperture. The user will select this value as part of the scene definition. Mapping it to a GUI slider is often a good idea.

Historically, most images stored 8-bit values whose meanings were ill-specified. Today it is more common to specify what they mean. An image that actually stores radiance values is informally said to store linear radiance, indicating that the pixel value varies linearly with the radiance (see Chapter 17). Since the radiance range of a typical outdoor scene with shadows might span six orders of magnitude, the data would suffer from perceptible quantization artifacts were it reduced to eight bits per channel. However, human perception of brightness is roughly logarithmic. This means that distributing precision nonlinearly can reduce the perceptual error of a small bit-depth approximation. Gamma encoding is a common practice for distributing values according to a fractional power law, where 1/γ is the power. This encoding curve roughly matches the logarithmic response curve of the human visual system. Most computer displays accept input already gamma-encoded along the sRGB standard curve, which is about γ = 2.2. Many image file formats, such as PPM, also default to this gamma encoding. A routine that maps a radiance value to an 8-bit display value with a gamma value of 2.2 is:

    int Image::PPMGammaEncode(float radiance, float d) const {
        return int(pow(std::min(1.0f, std::max(0.0f, radiance * d)),
                       1.0f / 2.2f) * 255.0f);
    }

Note that $x^{1/2.2} \approx \sqrt{x}$. Because they are faster than arbitrary exponentiation on most hardware, square root and square are often employed in real-time rendering as efficient γ = 2.0 encoding and decoding methods.
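A γ = 2.0 encode and decode pair might look like the following sketch. The function names gamma2Encode and gamma2Decode are hypothetical; the encoder clamps and scales exactly as PPMGammaEncode does, but replaces the 1/2.2 power with a square root.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Map a radiance value to an 8-bit display value using gamma = 2.0.
// d is the same multiplicative display constant used by PPMGammaEncode.
int gamma2Encode(float radiance, float d) {
    const float v = std::min(1.0f, std::max(0.0f, radiance * d));
    return int(std::sqrt(v) * 255.0f);
}

// Map an 8-bit display value back to a scaled linear value in [0, 1].
// Squaring undoes the square root applied by the encoder.
float gamma2Decode(int displayValue) {
    assert(displayValue >= 0 && displayValue <= 255);
    const float e = displayValue / 255.0f;
    return e * e;
}
```

The round trip is lossy because of the truncation to eight bits, and the results differ slightly from a true γ = 2.2 curve, so this is an approximation to use only where speed matters more than exact agreement with the display standard.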

The save routine is our bare-bones method for exporting data from the renderer for viewing. It saves the image in human-readable PPM format [P+ 10] and is implemented in Listing 15.6.

Listing 15.6: Saving an image to an ASCII RGB PPM file.

    void Image::save(const std::string& filename, float d) const {
        FILE* file = fopen(filename.c_str(), "wt");
        // Header: ASCII RGB magic number, image dimensions, maximum channel value
        fprintf(file, "P3 %d %d 255\n", m_width, m_height);
        for (int y = 0; y < m_height; ++y) {
            // PPM comment marking the start of each row
            fprintf(file, "\n# y = %d\n", y);
            for (int x = 0; x < m_width; ++x) {
                const Radiance3& c(get(x, y));
                fprintf(file, "%d %d %d\n",
                        PPMGammaEncode(c.r, d),
                        PPMGammaEncode(c.g, d),
                        PPMGammaEncode(c.b, d));
            }
        }
        fclose(file);
    }

This is a useful snippet of code beyond its immediate purpose of saving an image. The structure appears frequently in 2D graphics code. The outer loop iterates over rows. It contains any kind of per-row computation (in this case, printing the row number). The inner loop iterates over the columns of one row and performs the per-pixel operations. Note that if we wished to amortize the cost of computing y * m_width inside the get routine, we could compute that as a per-row operation and merely accumulate the 1-pixel offsets in the inner loop. We do not do so in this case because that would complicate the code without providing a measurable performance increase, since writing a formatted text file would remain slow compared to performing one multiplication per pixel.

The PPM format is slow for loading and saving, and consumes lots of space when storing images. For those reasons, it is rarely used outside academia. However, it is convenient for data interchange between programs. It is also convenient for debugging small images for three reasons. The first is that it is easy to read and write. The second is that many image programs and libraries support it, including Adobe Photoshop and xv. The third is that we can open it in a text editor to look directly at the (gamma-corrected) pixel values.

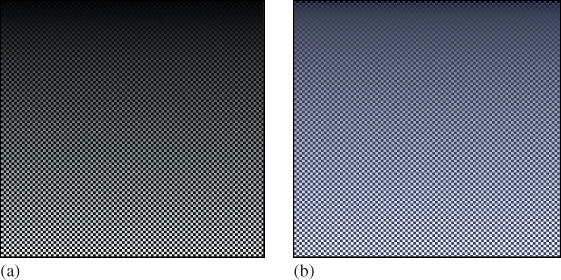

After writing the image-saving code, we displayed the simple pattern shown in Figure 15.2 as a debugging aid. If you implement your own image saving or display mechanism, consider doing something similar. The test pattern alternates dark blue pixels with ones that form a gradient. The reason for creating the single-pixel checkerboard pattern is to verify that the image was neither stretched nor cropped during display. If it was, then one or more thin horizontal or vertical lines would appear. (If you are looking at this image on an electronic display, you may see such patterns, indicating that your viewing software is indeed stretching it.) The motivation for the gradient is to determine whether gamma correction is being applied correctly. A linear radiance gradient should appear as a nonlinear brightness gradient when displayed correctly. Specifically, it should primarily look like the brighter shades. The pattern on the left is shown without gamma correction. The gradient appears to have linear brightness, indicating that it is not displayed correctly. The pattern on the right is shown with gamma correction. The gradient correctly appears to be heavily shifted toward the brighter shades.

Figure 15.2: A pattern for testing the Image class. The pattern is a checkerboard of 1-pixel squares that alternate between 1/10 W/(m² sr) in the blue channel and a vertical gradient from 0 to 10. (a) Viewed with deviceGamma = 1.0 and displayConstant = 1.0, which makes dim squares appear black and gives the appearance of a linear change in brightness. (b) Displayed more correctly with deviceGamma = 2.0, where the linear radiance gradient correctly appears as a nonlinear brightness ramp and the dim squares are correctly visible. (The conversion to a printed image or your online image viewer may further affect the image.)
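A pattern of this kind can be generated with the same row-and-column loop structure used by the save routine. The sketch below is one possible version, not the code used to produce the figure: Rgb stands in for Radiance3, a flat std::vector stands in for the Image class, and the choice of which squares are blue, the gray color of the ramp, and the ramp's orientation are all assumptions.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the chapter's Radiance3.
struct Rgb { float r, g, b; };

// Builds a row-major checkerboard of 1-pixel squares. Squares where (x + y)
// is even are dim blue; the others follow a vertical gray ramp from 0 in the
// first row to 10 in the last. The parity and ramp orientation are assumptions.
inline std::vector<Rgb> makeTestPattern(int width, int height) {
    assert(width > 0 && height > 1);
    std::vector<Rgb> pixels(static_cast<std::size_t>(width) * height);
    std::size_t index = 0;  // advanced by one per pixel instead of recomputing y * width + x
    for (int y = 0; y < height; ++y) {
        // Per-row work: the ramp value depends only on y.
        const float ramp = 10.0f * float(y) / float(height - 1);
        for (int x = 0; x < width; ++x, ++index) {
            if ((x + y) % 2 == 0) {
                pixels[index] = Rgb{0.0f, 0.0f, 0.1f};
            } else {
                pixels[index] = Rgb{ramp, ramp, ramp};
            }
        }
    }
    return pixels;
}
```

The running index shows the per-row amortization mentioned earlier: the offset is never recomputed from y * width inside the inner loop.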

Note that we made the darker squares blue, yet in the left pattern—without gamma correction—they appear black. That is because gamma correction helps make darker shades more visible, as in the right image. This hue shift is another argument for being careful to always implement gamma correction, beyond the tone shift. Of course, we don’t know the exact characteristics of the display (although one can typically determine its gamma exponent) or the exact viewing conditions of the room, so precise color correction and tone mapping is beyond our ability here. However, the simple act of applying gamma correction arguably captures some of the most important aspects of that process and is computationally inexpensive and robust.

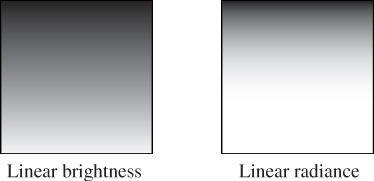

Two images are shown below. Both have been gamma-encoded with γ = 2.0 for printing and online display. The image on the left is a gradient that has been rendered to give the impression of linear brightness. It should appear as a linear color ramp. The image on the right was rendered with linear radiance (it is the checkerboard on the right of Figure 15.2 without the blue squares). It should appear as a nonlinear color ramp. The image was rendered at 200 × 200 pixels. What equation did we use to compute the value (in [0, 1]) of the pixel at (x, y) for the gradient image on the left?

15.3.3. Scene Representation

Listing 15.7 shows a Triangle class. It stores each triangle by explicitly storing each vertex. Each vertex has an associated normal that is used exclusively for shading; the normals do not describe the actual geometry. These are sometimes called shading normals. When the vertex normals are identical to the normal to the plane of the triangle, the triangle’s shading will appear consistent with its actual geometry. When the normals diverge from this, the shading will mimic that of a curved surface. Since the silhouette of the triangle will still be polygonal, this effect is most convincing in a scene containing many small triangles.

Listing 15.7: Interface for a Triangle class.

    class Triangle {
    private:
        Point3  m_vertex[3];
        Vector3 m_normal[3];
        BSDF    m_bsdf;

    public:

        const Point3& vertex(int i) const { return m_vertex[i]; }
        const Vector3& normal(int i) const { return m_normal[i]; }
        const BSDF& bsdf() const { return m_bsdf; }
        ...
    };

We also associate a BSDF class value with each triangle. This describes the material properties of the surface modeled by the triangle. It is described in Section 15.4.5. For now, think of this as the color of the triangle.

The representation of the triangle is concealed by making the member variables private. Although the implementation shown contains methods that simply return those member variables, you will later use this abstraction boundary to create a more efficient implementation of the triangle. For example, many triangles may share the same vertices and bidirectional scattering distribution functions (BSDFs), so this representation is not very space-efficient. There are also properties of the triangle, such as the edge lengths and geometric normal, that we will find ourselves frequently recomputing and could benefit from storing explicitly.
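To illustrate the space argument, here is a minimal sketch of an indexed representation in which triangles refer to shared vertices by index. IndexedMesh, Point, and the member names are hypothetical; the chapter's Triangle class is not changed by this sketch.

```cpp
#include <array>
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical stand-in for the chapter's Point3.
struct Point { float x, y, z; };

// A sketch of an indexed mesh: vertices are stored once and each triangle
// refers to three of them by index.
class IndexedMesh {
public:
    std::vector<Point> vertexArray;
    std::vector<std::array<int, 3>> indexArray;  // one entry per triangle

    // Returns vertex i (0, 1, or 2) of triangle t.
    const Point& vertex(std::size_t t, int i) const {
        assert(t < indexArray.size() && i >= 0 && i < 3);
        return vertexArray[static_cast<std::size_t>(indexArray[t][i])];
    }
};
```

A quadrilateral split into two triangles then stores four vertices rather than six, and both triangles see the same vertex object along the shared diagonal.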

Compute the size in bytes of one Triangle. How big is a 1M triangle mesh? Is that reasonable? How does this compare with the size of a stored mesh file, say, in the binary 3DS format or the ASCII OBJ format? What are other advantages, beyond space reduction, of sharing vertices between triangles in a mesh?

Listing 15.8 shows our implementation of an omnidirectional point light source. We represent the power it emits at three wavelengths (or in three wavelength bands), and the center of the emitter. Note that emitters are infinitely small in our representation, so they are not themselves visible. If we wish to see the source appear in the final rendering we need to either add geometry around it or explicitly render additional information into the image. We will do neither explicitly in this chapter, although you may find that these are necessary when debugging your illumination code.

Listing 15.8: Interface for a uniform point luminaire—a light source.

    class Light {
    public:
        Point3 position;

        /** Over the entire sphere. */
        Power3 power;
    };

Listing 15.9 describes the scene as sets of triangles and lights. Our choice of arrays for the implementation of these sets imposes an ordering on the scene. This is convenient for ensuring a reproducible environment for debugging. However, for now we are going to create that ordering in an arbitrary way, and that choice may affect performance and even our image in some slight ways, such as resolving ties between which surface is closest at an intersection. More sophisticated scene data structures may include additional structure in the scene and impose a specific ordering.

Listing 15.9: Interface for a scene represented as an unstructured list of triangles and light sources.

    class Scene {
    public:
        std::vector<Triangle> triangleArray;
        std::vector<Light>    lightArray;
    };

Listing 15.10 represents our camera. The camera has a pinhole aperture, an instantaneous shutter, and artificial near and far planes of constant (negative) z values. We assume that the camera is located at the origin and facing along the –z-axis.

Listing 15.10: Interface for a pinhole camera at the origin.

    class Camera {
    public:
        float zNear;
        float zFar;
        float fieldOfViewX;

        Camera() : zNear(-0.1f), zFar(-100.0f), fieldOfViewX(PI / 2.0f) {}
    };

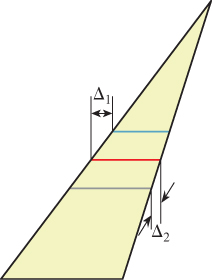

We constrain the horizontal field of view of the camera to be fieldOfViewX. This is the measure, in radians, of the angle from the left edge of the leftmost pixel to the right edge of the rightmost pixel along the horizon in the camera’s view (it is shown later in Figure 15.3); this edge-to-edge convention is the one that computeEyeRay in Listing 15.14 implements. During rendering, we will compute the aspect ratio of the target image and implicitly use that to determine the vertical field of view. We could alternatively specify the vertical field of view and compute the horizontal field of view from the aspect ratio.

Figure 15.3: The ray through a pixel center in terms of the image resolution and the camera’s horizontal field of view.
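The implied vertical field of view can be made explicit with a little trigonometry. The following is a minimal sketch, assuming square pixels; verticalFieldOfView is a hypothetical helper and not a member of the Camera class.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helper, not part of the chapter's Camera class.
// Given a horizontal field of view in radians and the image size in pixels,
// returns the vertical field of view in radians, assuming square pixels.
inline float verticalFieldOfView(float fieldOfViewX, int width, int height) {
    assert(width > 0 && height > 0);
    const float aspect = float(height) / float(width);
    // The half-extents of the image plane at unit distance are tan(fov / 2),
    // and the vertical one is the horizontal one scaled by the aspect ratio.
    return 2.0f * std::atan(std::tan(fieldOfViewX * 0.5f) * aspect);
}
```

For a 90-degree horizontal field of view on an 800 × 500 image, this gives 2 atan(0.625), which is roughly 64 degrees.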

15.3.4. A Test Scene

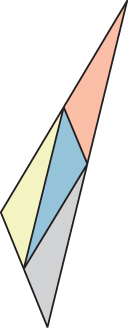

We’ll test our renderers on a scene that contains one triangle whose vertices are

Point3(0,1,-2), Point3(-1.9,-1,-2), and Point3(1.6,-0.5,-2),

and whose vertex normals are

Vector3( 0.0f, 0.6f, 1.0f).direction(),

Vector3(-0.4f,-0.4f, 1.0f).direction(), and

Vector3( 0.4f,-0.4f, 1.0f).direction().

We create one light source in the scene, located at Point3(1.0f,3.0f,1.0f) and emitting power Power3(10, 10, 10). The camera is at the origin and is facing along the –z-axis, with y increasing upward in screen space and x increasing to the right. The image has size 800 × 500 and is initialized to dark blue.

This choice of scene data was deliberate, because when debugging it is a good idea to choose configurations that use nonsquare aspect ratios, nonprimary colors, asymmetric objects, etc. to help find cases where you have accidentally swapped axes or color channels. Having distinct values for the properties of each vertex also makes it easier to track values through code. For example, on this triangle, you can determine which vertex you are examining merely by looking at its x-coordinate.

On the other hand, the camera is the standard one, which allows us to avoid transforming rays and geometry. That leads to some efficiency and simplicity in the implementation and helps with debugging because the input data maps exactly to the data rendered, and in practice, most rendering algorithms operate in the camera’s reference frame anyway.

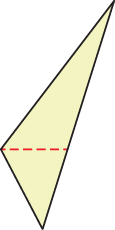

Mandatory; do not continue until you have done this: Draw a schematic diagram of this scene from three viewpoints.

1. The orthographic view from infinity facing along the x-axis. Make z increase to the right and y increase upward. Show the camera and its field of view.

2. The orthographic view from infinity facing along the –y-axis. Make x increase to the right and z increase downward. Show the camera and its field of view. Draw the vertex normals.

3. The perspective view from the camera, facing along the –z-axis; the camera should not appear in this image.

15.4. A Ray-Casting Renderer

We begin the ray-casting renderer by expanding and implementing our initial pseudocode from Listing 15.2. It is repeated in Listing 15.11 with more detail.

Listing 15.11: Detailed pseudocode for a ray-casting renderer.

    for each pixel row y:
        for each pixel column x:
            let R = ray through screen space position (x + 0.5, y + 0.5)
            closest = ∞
            for each triangle T:
                d = intersect(T, R)
                if (d < closest)
                    closest = d
                    sum = 0
                    let P be the intersection point
                    for each direction ωi:
                        sum += light scattered at P from ωi to ωo

                    image[x, y] = sum

The three loops iterate over every ray and triangle combination. The body of the for-each-triangle loop verifies that the new intersection is closer than previously observed ones, and then shades the intersection. We will abstract the operation of ray intersection and sampling into a helper function called sampleRayTriangle. Listing 15.12 gives the interface for this helper function.

Listing 15.12: Interface for a function that performs ray-triangle intersection and shading.

    bool sampleRayTriangle(const Scene& scene, int x, int y,
                           const Ray& R, const Triangle& T,
                           Radiance3& radiance, float& distance);

The specification for sampleRayTriangle is as follows. It tests a particular ray against a triangle. If the intersection exists and is closer than all previously observed intersections for this ray, it computes the radiance scattered toward the viewer and returns true. The innermost loop therefore sets the value of pixel (x, y) to the radiance L_o passing through its center from the closest triangle. Radiance from farther triangles is not interesting because it will (conceptually) be blocked by the back of the closest triangle and never reach the image. The implementation of sampleRayTriangle appears in Listing 15.15.

To render the entire image, we must invoke sampleRayTriangle once for each pixel center and for each triangle. Listing 15.13 defines rayTrace, which performs this iteration. It takes as arguments a box within which to cast rays (see Section 15.4.4). We use L_o to denote the radiance from the triangle; the subscript “o” is for “outgoing”.

Listing 15.13: Code to trace one ray for every pixel between (x0, y0) and (x1-1, y1-1), inclusive.

    /** Trace eye rays with origins in the box from [x0, y0] to (x1, y1).*/
    void rayTrace(Image& image, const Scene& scene,
                  const Camera& camera, int x0, int x1, int y0, int y1) {

        // For each pixel
        for (int y = y0; y < y1; ++y) {
            for (int x = x0; x < x1; ++x) {

                // Ray through the pixel
                const Ray& R = computeEyeRay(x + 0.5f, y + 0.5f, image.width(),
                                             image.height(), camera);

                // Distance to closest known intersection
                float distance = INFINITY;
                Radiance3 L_o;

                // For each triangle
                for (unsigned int t = 0; t < scene.triangleArray.size(); ++t){
                    const Triangle& T = scene.triangleArray[t];

                    if (sampleRayTriangle(scene, x, y, R, T, L_o, distance)) {
                        image.set(x, y, L_o);
                    }
                }
            }
        }
    }

To invoke rayTrace on the entire image, we will use the call:

rayTrace(image, scene, camera, 0, image.width(), 0, image.height());
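The box arguments also make it possible to render an image in pieces. As a sketch of one way to produce such boxes, the hypothetical helper splitRows below (it is not part of the chapter's code) divides the rows into contiguous bands that follow the same half-open convention as rayTrace.

```cpp
#include <cassert>
#include <utility>
#include <vector>

// Hypothetical helper: divides rows [0, height) into at most bandCount
// contiguous half-open bands [y0, y1), matching rayTrace's convention that
// y1 is one past the last row traced.
inline std::vector<std::pair<int, int>> splitRows(int height, int bandCount) {
    assert(height >= 0 && bandCount > 0);
    std::vector<std::pair<int, int>> bands;
    const int rowsPerBand = (height + bandCount - 1) / bandCount;  // round up
    for (int y0 = 0; y0 < height; y0 += rowsPerBand) {
        const int y1 = (y0 + rowsPerBand < height) ? y0 + rowsPerBand : height;
        bands.push_back(std::make_pair(y0, y1));
    }
    return bands;
}
```

Each band could then be rendered with a call of the form rayTrace(image, scene, camera, 0, image.width(), band.first, band.second).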

15.4.1. Generating an Eye Ray

Assume the camera’s center of projection is at the origin, (0, 0, 0), and that, in the camera’s frame of reference, the y-axis points upward, the x-axis points to the right, and the z-axis points out of the screen. Thus, the camera is facing along its own –z-axis, in a right-handed coordinate system. We can transform any scene to this coordinate system using the transformations from Chapter 11.

We require a utility function, computeEyeRay, to find the ray through the center of a pixel, which in screen space is given by (x + 0.5, y + 0.5) for integers x and y. Listing 15.14 gives an implementation. The key geometry is depicted in Figure 15.3. The figure is a top view of the scene in which x increases to the right and z increases downward. The near plane appears as a horizontal line, and the start point is on that plane, along the line from the camera at the origin to the center of a specific pixel.

To implement this function we needed to parameterize the camera by either the image plane depth or the desired field of view. Field of view is a more intuitive way to specify a camera, so we previously chose that parameterization when building the scene representation.

Listing 15.14: Computing the ray through the center of pixel (x, y) on a width × height image.

    Ray computeEyeRay(float x, float y, int width, int height, const Camera& camera) {
        const float aspect = float(height) / width;

        // Compute the side of a square at z = -1 based on our
        // horizontal left-edge-to-right-edge field of view
        const float s = -2.0f * tan(camera.fieldOfViewX * 0.5f);

        const Vector3& start =
            Vector3( (x / width - 0.5f) * s,
                    -(y / height - 0.5f) * s * aspect, 1.0f) * camera.zNear;

        return Ray(start, start.direction());
    }

We choose to place the ray origin on the near (sometimes called hither) clipping plane, at z = camera.zNear. We could start rays at the origin instead of the near plane, but starting at the near plane will make it easier for results to line up precisely with our rasterizer later.

The ray direction is the direction from the center of projection (which is at the origin, (0, 0, 0)) to the ray start point, so we simply normalize the start point.

By the rules of Chapter 7, we should compute the ray direction as (start - Vector3(0,0,0)).direction(). That makes the camera position explicit, so we are less likely to introduce a bug if we later change the camera. This arises simply from strongly typing the code to match the underlying mathematical types. On the other hand, our code is going to be full of lines like this, and consistently applying correct typing might lead to more harm from obscuring the algorithm than benefit from occasionally finding an error. It is a matter of personal taste and experience (we can somewhat reconcile our typing with the math by claiming that P.direction() on a point P returns the direction to the point, rather than “normalizing” the point).

Rewrite computeEyeRay using the distinct Point and Vector abstractions from Chapter 7 to get a feel for how this affects the presentation and correctness. If this inspires you, it’s quite reasonable to restructure all the code in this chapter that way, and doing so is a valuable exercise.

Note that the y-coordinate of the start is negated. This is because y is in 2D screen space, with a “y = down” convention, and the ray is in a 3D coordinate system with a “y = up” convention.

To specify the vertical field of view instead of the horizontal one, replace fieldOfViewX with fieldOfViewY and insert the line s /= aspect.

15.4.1.1. Camera Design Notes

The C++ language offers both functions and methods as procedural abstractions. We have presented computeEyeRay as a function that takes a Camera parameter to distinguish the “support code” Camera class from the ray-tracer-specific code that you are adding. As you move forward through the book, consider refactoring the support code to integrate auxiliary functions like this directly into the appropriate classes. (If you are using an existing 3D library as support code, it is likely that the provided camera class already contains such a method. In that case, it is worth implementing the method once as a function here so that you have the experience of walking through and debugging the routine. You can later discard your version in favor of a canonical one once you’ve reaped the educational value.)

A software engineering tip: Although we have chosen to forgo small optimizations, it is still important to be careful to use references (e.g., Image&) to avoid excess copying of arguments and intermediate results. There are two related reasons for this, and neither is about the performance of this program.

The first reason is that we want to be in the habit of avoiding excessive copying. A Vector3 occupies 12 bytes of memory, but a full-screen Image is a few megabytes. If we’re conscientious about never copying data unless we want copy semantics, then we won’t later accidentally copy an Image or other large structure. Memory allocation and copy operations can be surprisingly slow and will bloat the memory footprint of our program. The time cost of copying data isn’t just a constant overhead factor on performance. Copying the image once per pixel, in the inner loop, would change the ray caster’s asymptotic run time from O(n) in the number of pixels to O(n²).

The second reason is that experienced programmers rely on a set of idioms that are chosen to avoid bugs. Any deviation from those attracts attention, because it is a potential bug. One such convention in C++ is to pass each value as a const reference unless otherwise required, for the long-term performance reasons just described. So code that doesn’t do so takes longer for an experienced programmer to review because of the need to check that there isn’t an error or performance implication whenever an idiom is not followed. If you are an experienced C++ programmer, then such idioms help you to read the code. If you are not, then either ignore all the ampersands and treat this as pseudocode, or use it as an opportunity to become a better C++ programmer.

15.4.1.2. Testing the Eye-Ray Computation

We need to test computeEyeRay before continuing. One way to do this is to write a unit test that computes the eye rays for specific pixels and then compares them to manually computed results. That is always a good testing strategy. In addition to that, we can visualize the eye rays. Visualization is a good way to quickly see the result of many computations. It allows us to more intuitively check results, and to identify patterns of errors if they do not match what we expected.
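A unit test of this kind might look like the following sketch. To keep it self-contained, it repeats the arithmetic of Listing 15.14 against minimal stand-in types; Vec3, EyeRay, PinholeCamera, and nearlyEqual are hypothetical names introduced only for this example.

```cpp
#include <cassert>
#include <cmath>

// Minimal stand-ins for the chapter's support classes (assumptions for this sketch).
struct Vec3 {
    float x, y, z;
    Vec3 operator*(float k) const { return Vec3{x * k, y * k, z * k}; }
    Vec3 direction() const {
        const float len = std::sqrt(x * x + y * y + z * z);
        assert(len > 0.0f);
        return Vec3{x / len, y / len, z / len};
    }
};
struct EyeRay { Vec3 origin; Vec3 direction; };
struct PinholeCamera { float zNear; float fieldOfViewX; };

// Same arithmetic as Listing 15.14, rewritten against the stand-in types.
inline EyeRay computeEyeRay(float x, float y, int width, int height,
                            const PinholeCamera& camera) {
    const float aspect = float(height) / float(width);
    const float s = -2.0f * std::tan(camera.fieldOfViewX * 0.5f);
    const Vec3 start = Vec3{ (x / width - 0.5f) * s,
                            -(y / height - 0.5f) * s * aspect,
                             1.0f } * camera.zNear;
    return EyeRay{start, start.direction()};
}

// Returns true when a and b agree to within tol in every component.
inline bool nearlyEqual(const Vec3& a, const Vec3& b, float tol = 1e-5f) {
    return std::fabs(a.x - b.x) <= tol &&
           std::fabs(a.y - b.y) <= tol &&
           std::fabs(a.z - b.z) <= tol;
}
```

With zNear = -0.1 and a 90-degree horizontal field of view on an 800 × 500 image, the ray through screen position (400, 250) should start at (0, 0, -0.1) with direction (0, 0, -1), and the ray through (0, 250) should have direction (-√½, 0, -√½). A ray through the top row should have a positive y component.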

In this section, we’ll visualize the directions of the rays. The same process can be applied to the origins. The directions are the more common location for an error and conveniently have a bounded range, which makes them both more important and easier to visualize.

A natural scheme for visualizing a direction is to interpret the (x, y, z) fields as (r, g, b) color triplets. The conversion of ray direction to pixel color is of course a gross violation of units, but it is a really useful debugging technique and we aren’t expecting anything principled here anyway.

Because each ordinate is on the interval [–1, 1], we rescale them to the range [0, 1] by r = (x + 1)/2. Our image display routines also apply an exposure function, so we need to scale the resultant intensity down by a constant on the order of the inverse of the exposure value. Temporarily inserting the following line:

image.set(x, y, Color3(R.direction() + Vector3(1, 1, 1)) / 5);

into rayTrace in place of the sampleRayTriangle call should yield an image like that shown in Figure 15.4. (The factor of 1/5 scales the debugging values to a reasonable range for our output, which was originally calibrated for radiance; we found a usable constant for this particular example by trial and error.) We expect the x-coordinate of the ray, which here is visualized as the color red, to increase from a minimum on the left to a maximum on the right. Likewise, the (3D) y-coordinate, which is visualized as green, should increase from a minimum at the bottom of the image to a maximum at the top. If your result varies from this, examine the pattern you observe and consider what kind of error could produce it. We will revisit visualization as a debugging technique later in this chapter, when testing the more complex intersection routine.
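The mapping from direction to color can be separated into its two steps, as in the sketch below. Direction, DebugColor, and directionToColor are hypothetical names; the one-line version above folds the rescaling by 1/2 and the display scale into a single division by 5, which corresponds to scale = 2/5 here.

```cpp
#include <cassert>

struct Direction  { float x, y, z; };  // hypothetical stand-in for Vector3
struct DebugColor { float r, g, b; };  // hypothetical stand-in for Color3

// Maps each component of a unit direction from [-1, 1] to [0, 1] and then
// applies a display scale chosen to suit the exposure of the output routine.
inline DebugColor directionToColor(const Direction& d, float scale) {
    assert(scale > 0.0f);
    return DebugColor{ (d.x + 1.0f) * 0.5f * scale,
                       (d.y + 1.0f) * 0.5f * scale,
                       (d.z + 1.0f) * 0.5f * scale };
}
```

Before the display scale is applied, the straight-ahead direction (0, 0, -1) maps to (0.5, 0.5, 0), so the center of the image should show equal red and green and no blue.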

15.4.2. Sampling Framework: Intersect and Shade

Listing 15.15 shows the code for sampling a triangle with a ray. This code doesn’t perform any of the heavy lifting itself. It just computes the values needed for intersect and shade.

Listing 15.15: Sampling the intersection and shading of one triangle with one ray.

1 bool sampleRayTriangle(const Scene& scene, int x, int y, const Ray& R,

const Triangle& T, Radiance3& radiance, float& distance) {

2

3 float weight[3];

4 const float d = intersect(R, T, weight);

5

6 if (d >= distance) {

7 return false;

8 }

9

10 // This intersection is closer than the previous one

11 distance = d;

12

13 // Intersection point

14 const Point3& P = R.origin() + R.direction() * d;

15

16 // Find the interpolated vertex normal at the intersection

17 const Vector3& n = (T.normal(0) * weight[0] +

18 T.normal(1) * weight[1] +

19 T.normal(2) * weight[2]).direction();

20

21 const Vector3& w_o = -R.direction();

22

23 shade(scene, T, P, n, w_o, radiance);

24

25 // Debugging intersect: set to white on any intersection

26 //radiance = Radiance3(1, 1, 1);

27

28 // Debugging barycentric

29 //radiance = Radiance3(weight[0], weight[1], weight[2]) / 15;

30

31 return true;

32 }

The sampleRayTriangle routine returns false if there was no intersection closer than distance; otherwise, it updates distance and radiance and returns true.

When invoking this routine, the caller passes the distance to the closest currently known intersection, which is initially INFINITY (let INFINITY = std::numeric_limits<T>::infinity() in C++, or simply 1.0/0.0). We will design the intersect routine to return INFINITY when no intersection exists between R and T so that a missed intersection will never cause sampleRayTriangle to return true.

Placing the (d >= distance) test before the shading code is an optimization. We would still obtain correct results if we always computed the shading before testing whether the new intersection is in fact the closest. This is an important optimization because the shade routine may be arbitrarily expensive. In fact, in a full-featured ray tracer, almost all computation time is spent inside shade, which recursively samples additional rays. We won’t discuss further shading optimizations in this chapter, but you should be aware of the importance of an early termination when another surface is known to be closer.

Note that the order of the triangles in the calling routine (rayTrace) affects the performance of the routine. If the triangles are in back-to-front order, then we will shade each one, only to reject all but the closest. This is the worst case. If the triangles are in front-to-back order, then we will shade the first and reject the rest without further shading effort. We could ensure the best performance always by separating sampleRayTriangle into two auxiliary routines: one to find the closest intersection and one to shade that intersection. This is a common practice in ray tracers. Keep this in mind, but do not make the change yet. Once we have written the rasterizer renderer, we will consider the space and time implications of such optimizations under both ray casting and rasterization, which gives insights into many variations on each algorithm.
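The split into two routines can be sketched as follows. This is only an illustration of the idea, not a change to the chapter's code: ClosestHit and findClosest are hypothetical, and the intersection routine is passed in as a callable that returns infinity on a miss.

```cpp
#include <cassert>
#include <limits>

// Result of the search: index of the closest triangle, or -1 for a miss.
struct ClosestHit {
    int   triangleIndex;
    float distance;
};

// Phase 1 (hypothetical helper): asks intersectFn(t) for the hit distance of
// each triangle t, where infinity means "no intersection", and keeps only the
// nearest. No shading happens here.
template <class IntersectFn>
ClosestHit findClosest(int triangleCount, IntersectFn intersectFn) {
    assert(triangleCount >= 0);
    ClosestHit best = { -1, std::numeric_limits<float>::infinity() };
    for (int t = 0; t < triangleCount; ++t) {
        const float d = intersectFn(t);
        if (d < best.distance) {  // a miss (infinity) never passes this test
            best.triangleIndex = t;
            best.distance = d;
        }
    }
    return best;
}
```

In the second phase, the caller would shade exactly once, and only if triangleIndex is not -1, so the amount of shading work no longer depends on the order of the triangles.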

We’ll implement and test intersect first. To do so, comment out the call to shade on line 23 and uncomment either of the debugging lines below it.

15.4.3. Ray-Triangle Intersection

We’ll find the intersection of the eye ray and a triangle in two steps, following the method described in Section 7.9 and implemented in Listing 15.16. This method first intersects the line containing the ray with the plane containing the triangle. It then solves for the barycentric weights to determine if the intersection is within the triangle. We need to ignore intersections with the back of the single-sided triangle and intersections that occur along the part of the line that is not on the ray.

The same weights that we use to determine if the intersection is within the triangle are later useful for interpolating per-vertex properties, such as shading normals. We structure our implementation to return the weights to the caller. The caller could use either those or the distance traveled along the ray to find the intersection point. We return the distance traveled because we know that we will later need that anyway to identify the closest intersection to the viewer in a scene with many triangles. We return the barycentric weights for use in interpolation.

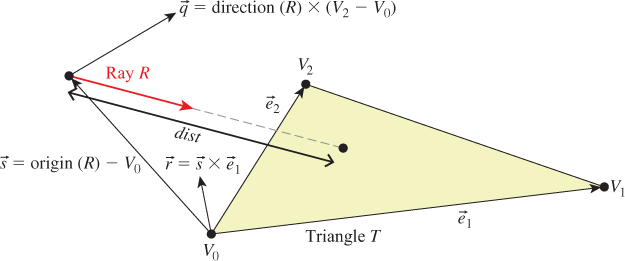

Figure 15.5 shows the geometry of the situation. Let R be the ray and T be the triangle. Let $\vec{e}_1$ be the edge vector from V0 to V1 and $\vec{e}_2$ be the edge vector from V0 to V2. Vector $\vec{q}$ is orthogonal to both the ray and $\vec{e}_2$. Note that if $\vec{q}$ is also orthogonal to $\vec{e}_1$, then the ray is parallel to the triangle and there is no intersection. If $\vec{q}$ is in the negative hemisphere of $\vec{e}_1$ (i.e., “points away”), then the ray travels away from the triangle.

Vector $\vec{s}$ is the displacement of the ray origin from V0, and vector $\vec{r}$ is the cross product of $\vec{s}$ and $\vec{e}_1$. These vectors are used to construct the barycentric weights, as shown in Listing 15.16.

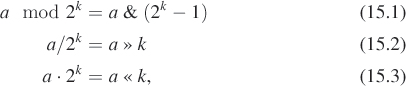

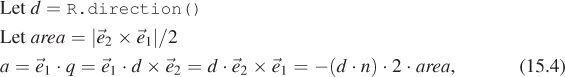

Variable a is the rate at which the ray is approaching the triangle, multiplied by twice the area of the triangle. This is not obvious from the way it is computed here, but it can be seen by applying a triple-product identity relation:

$$a = \vec{e}_1 \cdot \vec{q} = \vec{e}_1 \cdot (\vec{d} \times \vec{e}_2) = \vec{d} \cdot (\vec{e}_2 \times \vec{e}_1) = -(\vec{d} \cdot \hat{n}) \cdot 2\,\mathrm{area}(T),$$

where $\vec{d}$ is the ray direction, since the direction of $\vec{e}_2 \times \vec{e}_1$ is opposite the triangle’s geometric normal n. The particular form of this expression chosen in the implementation is convenient because the q vector is needed again later in the code for computing the barycentric weights.
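The identity can be checked numerically. The sketch below computes a both ways for an arbitrary triangle and ray direction; V3 and the helper functions are hypothetical stand-ins written only for this check.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical stand-in for Vector3 with only the operations needed here.
struct V3 { float x, y, z; };
inline V3 sub(const V3& a, const V3& b) { return V3{a.x - b.x, a.y - b.y, a.z - b.z}; }
inline float dot(const V3& a, const V3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
inline V3 cross(const V3& a, const V3& b) {
    return V3{a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// a in the form used by the intersection code: e1 . (d x e2).
inline float approachRate(const V3& d, const V3& v0, const V3& v1, const V3& v2) {
    const V3 e1 = sub(v1, v0);
    const V3 e2 = sub(v2, v0);
    return dot(e1, cross(d, e2));
}

// The same quantity written as -(d . n) * 2 * area, using the fact that
// e1 x e2 has the direction of n and a length of twice the triangle's area.
inline float approachRateFromNormal(const V3& d, const V3& v0, const V3& v1, const V3& v2) {
    const V3 e1 = sub(v1, v0);
    const V3 e2 = sub(v2, v0);
    return -dot(d, cross(e1, e2));
}
```

For the test scene's triangle and a ray traveling along the –z-axis, both forms give about 6.05. That is twice the triangle's area of 3.025, since the ray approaches the triangle head-on.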

There are several cases where we need to compare a value against zero. The two epsilon constants guard these comparisons against errors arising from limited numerical precision.

The comparison a <= epsilon detects two cases. If a is zero, then the ray is parallel to the triangle and never intersects it. In this case, the code divides by zero several times, so other variables may be infinity or not-a-number. That’s irrelevant, since the first test expression will still make the entire test expression true. If a is negative, then the ray is traveling away from the triangle and will never intersect it. Recall that a is the rate at which the ray approaches the triangle, multiplied by twice the area of the triangle. If epsilon is too large, then intersections with triangles will be missed at glancing angles, and this missed intersection behavior will be more likely to occur at triangles with small areas than at those with large areas, because a small area makes a small as well. Note that if we changed the test to fabs(a) <= epsilon, then triangles would have two sides. This is not necessary for correct models of real, opaque objects; however, for rendering mathematical models or models with errors in them it can be convenient. Later we will depend on optimizations that allow us to quickly cull the approximately half of the scene that represents back faces, so we choose to render single-sided triangles here for consistency.

Listing 15.16: Ray-triangle intersection (derived from [MT97])

    float intersect(const Ray& R, const Triangle& T, float weight[3]) {
        const Vector3& e1 = T.vertex(1) - T.vertex(0);
        const Vector3& e2 = T.vertex(2) - T.vertex(0);
        const Vector3& q = R.direction().cross(e2);

        const float a = e1.dot(q);

        const Vector3& s = R.origin() - T.vertex(0);
        const Vector3& r = s.cross(e1);

        // Barycentric vertex weights
        weight[1] = s.dot(q) / a;
        weight[2] = R.direction().dot(r) / a;
        weight[0] = 1.0f - (weight[1] + weight[2]);

        const float dist = e2.dot(r) / a;

        static const float epsilon = 1e-7f;
        static const float epsilon2 = 1e-10f;

        if ((a <= epsilon) || (weight[0] < -epsilon2) ||
            (weight[1] < -epsilon2) || (weight[2] < -epsilon2) ||
            (dist <= 0.0f)) {
            // The ray is nearly parallel to the triangle, or the
            // intersection lies outside the triangle or behind
            // the ray origin: "infinite" distance until intersection.
            return INFINITY;
        } else {
            return dist;
        }
    }

The epsilon2 constant allows a ray to intersect a triangle slightly outside the bounds of the triangle. This ensures that triangles that share an edge completely cover pixels along that edge despite numerical precision limits. If epsilon2 is too small, then single-pixel holes will very occasionally appear on that edge. If it is too large, then all triangles will visibly appear to be too large.

Depending on your processor architecture, it may be faster to perform an early test and potential return rather than allowing not-a-number and infinity propagation in the ill-conditioned case where a ≈ 0. Many values can also be precomputed, for example, the edge lengths of the triangle, or at least be reused within a single intersection, for example, 1.0f / a. There’s a cottage industry of optimizing this intersection code for various architectures, compilers, and scene types (e.g., [MT97] for scalar processors versus [WBB08] for vector processors). Let’s forgo those low-level optimizations and stick to high-level algorithmic decisions. In practice, most ray casters spend very little time in the ray intersection code anyway. The fastest way to determine if a ray intersects a triangle is to never ask that question in the first place. That is, in Chapter 37, we will introduce data structures that quickly and conservatively eliminate whole sets of triangles that the ray could not possibly intersect, without ever performing the ray-triangle intersection. So optimizing this routine now would only complicate it without affecting our long-term performance profile.
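
The early test and the reuse of 1.0f / a mentioned above can be sketched as follows. This is only an illustration under stated assumptions: Vec3 and intersectEarlyOut are hypothetical stand-ins that take raw vertices instead of the chapter's Ray and Triangle classes, and whether this variant is actually faster depends on the architecture and compiler.

```cpp
#include <cmath>
#include <limits>

// Minimal stand-in vector type (an assumption; the chapter's Vector3 is richer).
struct Vec3 { float x, y, z; };

inline Vec3 operator-(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}
inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}
inline Vec3 cross(const Vec3& a, const Vec3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Hypothetical variant of Listing 15.16. Returns the distance to the hit, or
// infinity on a miss. The weights are written only when a passes the first test.
float intersectEarlyOut(const Vec3& origin, const Vec3& direction,
                        const Vec3& v0, const Vec3& v1, const Vec3& v2,
                        float weight[3]) {
    const float inf = std::numeric_limits<float>::infinity();
    const float epsilon = 1e-7f;
    const float epsilon2 = 1e-10f;

    const Vec3 e1 = v1 - v0;
    const Vec3 e2 = v2 - v0;
    const Vec3 q = cross(direction, e2);
    const float a = dot(e1, q);

    // Early return: the ray is parallel to or leaving the triangle,
    // so we never divide by a value near zero.
    if (a <= epsilon) { return inf; }
    const float invA = 1.0f / a;  // computed once, reused three times

    const Vec3 s = origin - v0;
    const Vec3 r = cross(s, e1);

    weight[1] = dot(s, q) * invA;
    weight[2] = dot(direction, r) * invA;
    weight[0] = 1.0f - (weight[1] + weight[2]);
    const float dist = dot(e2, r) * invA;

    if ((weight[0] < -epsilon2) || (weight[1] < -epsilon2) ||
        (weight[2] < -epsilon2) || (dist <= 0.0f)) {
        return inf;
    }
    return dist;
}
```

The results should match Listing 15.16 for every ray that hits; only the handling of the ill-conditioned case differs, because no infinity or not-a-number values are ever produced.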

Our renderer only processes triangles. We could easily extend it to render scenes containing any kind of primitive for which we can provide a ray intersection solution. Surfaces defined by low-order equations, like the plane, rectangle, sphere, and cylinder, have explicit solutions. For others, such as bicubic patches, we can use root-finding methods.
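
As an illustration of an explicit solution for a low-order surface, here is a minimal sketch of a ray-sphere intersection. The names Vec3 and intersectSphere are hypothetical, and the code assumes that the ray direction has unit length. Like the triangle routine, it returns infinity on a miss.

```cpp
#include <cmath>
#include <limits>

// Minimal stand-in vector type (an assumption, not the chapter's Vector3).
struct Vec3 { float x, y, z; };

inline Vec3 operator-(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}
inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// Distance along the ray to the nearest intersection in front of the origin,
// or infinity if there is none. Assumes |direction| == 1.
float intersectSphere(const Vec3& origin, const Vec3& direction,
                      const Vec3& center, float radius) {
    const float inf = std::numeric_limits<float>::infinity();
    const Vec3 v = origin - center;

    // Points on the ray satisfy |v + t * direction|^2 = radius^2, which is
    // the quadratic t^2 + 2 b t + c = 0 with the coefficients below.
    const float b = dot(v, direction);
    const float c = dot(v, v) - radius * radius;
    const float discriminant = b * b - c;
    if (discriminant < 0.0f) { return inf; }  // the line misses the sphere

    const float root = std::sqrt(discriminant);
    const float tNear = -b - root;
    if (tNear > 0.0f) { return tNear; }

    // The origin is inside the sphere, or the sphere is behind the ray.
    const float tFar = -b + root;
    return (tFar > 0.0f) ? tFar : inf;
}
```

For a ray starting at (0, 0, 5) and pointing down the negative z-axis toward a unit sphere at the origin, the quadratic has roots 4 and 6, so the function returns 4.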

15.4.4. Debugging

We now verify that the intersection code is correct. (The code we’ve given you is correct, but if you invoked it with the wrong parameters, or introduced an error when porting to a different language or support code base, then you need to learn how to find that error.) This is a good opportunity for learning some additional graphics debugging tricks, all of which demonstrate the Visual Debugging principle.

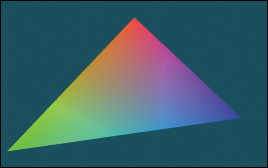

It would be impractical to manually examine every intersection result in a debugger or printout. That is because the rayTrace function invokes intersect thousands of times. So instead of examining individual results, we visualize the barycentric coordinates by setting the radiance at a pixel to be proportional to the barycentric coordinates following the Visual Debugging principle. Figure 15.6 shows the correct resultant image. If your program produces a comparable result, then your program is probably nearly correct.

Figure 15.6: The single triangle scene visualized with color equal to barycentric weight for debugging the intersection code.
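
One way to produce such a visualization is to map the three weights directly to the red, green, and blue channels. The sketch below is an assumption about how that mapping might be written, not the code used for Figure 15.6; Rgb8 and barycentricDebugColor are hypothetical names, and the clamping to [0, 1] is a choice made here so that out-of-range weights show up as saturated channels.

```cpp
#include <algorithm>
#include <cstdint>

// Hypothetical 8-bit pixel type for the debugging image.
struct Rgb8 { std::uint8_t r, g, b; };

// Maps barycentric weights to a color: weight[0] -> red,
// weight[1] -> green, weight[2] -> blue, each clamped to [0, 1].
Rgb8 barycentricDebugColor(const float weight[3]) {
    auto toByte = [](float w) {
        const float clamped = std::min(1.0f, std::max(0.0f, w));
        return static_cast<std::uint8_t>(clamped * 255.0f + 0.5f);
    };
    return {toByte(weight[0]), toByte(weight[1]), toByte(weight[2])};
}
```

With this mapping, each vertex of the triangle appears as a pure primary color and the interior blends smoothly between them, so swapped or negated weights are easy to spot.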

What should you do if your result looks different? You can’t examine every result, and if you place a breakpoint in intersect, then you will have to step through hundreds of ray casts that miss the triangle before coming to the interesting intersection tests.

This is why we structured rayTrace to trace within a caller-specified rectangle, rather than the whole image. We can invoke the ray tracer on a single pixel from main(), or better yet, create a debugging interface where clicking on a pixel with the mouse invokes the single-pixel trace on the selected pixel. By setting breakpoints or printing intermediate results under this setup, we can investigate why an artifact appears at a specific pixel. For one pixel, the math is simple enough that we can also compute the desired results by hand and compare them to those produced by the program.
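
The single-pixel invocation can be sketched as a thin wrapper around a rectangle-based tracer. Everything here is illustrative: traceRectangle stands in for rayTrace, the per-pixel work is passed as a callback so that the example is self-contained, and the half-open bounds [x0, x1) by [y0, y1) are an assumption about the convention.

```cpp
#include <functional>

// Hypothetical stand-in for rayTrace: visits every pixel with
// x in [x0, x1) and y in [y0, y1) and invokes tracePixel on it.
void traceRectangle(int x0, int x1, int y0, int y1,
                    const std::function<void(int, int)>& tracePixel) {
    for (int y = y0; y < y1; ++y) {
        for (int x = x0; x < x1; ++x) {
            tracePixel(x, y);
        }
    }
}

// Debugging entry point: trace exactly one pixel, for example the one
// under the mouse cursor, so that a breakpoint fires only for that pixel.
void traceSinglePixel(int x, int y,
                      const std::function<void(int, int)>& tracePixel) {
    traceRectangle(x, x + 1, y, y + 1, tracePixel);
}
```

A click handler in a debugging GUI would call traceSinglePixel with the clicked coordinates; a breakpoint inside the per-pixel callback is then hit once, on the pixel of interest.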

In general, even simple graphics programs tend to have large amounts of data. This may be many triangles, many pixels, or many frames of animation. The processing for these may also be running on many threads, or on a GPU. Traditional debugging methods can be hard to apply in the face of so much data and such massive parallelism. Furthermore, the graphics development environment may preclude traditional techniques such as printing output or setting breakpoints. For example, under a hardware rendering API, your program is executing on an embedded processor that frequently has no access to the console and is inaccessible to your debugger.

Fortunately, three strategies tend to work well for graphics debugging.

1. Use assertions liberally. These cost you nothing in the optimized version of the program, pass silently in the debug version when the program operates correctly, and break the program at the test location when an assertion is violated. Thus, they help to identify failure cases without requiring that you manually step through the correct cases.

2. Immediately reduce to the minimal test case. This is often a single-triangle scene with a single light and a single pixel. The trick here is to find the combination of light, triangle, and pixel that produces incorrect results. Assertions and the GUI click-to-debug scheme work well for that.

3. Visualize intermediate results. We have just rendered an image of the barycentric coordinates of eye-ray intersections with a triangle for a 400,000-pixel image. Were we to print out these values or step through them in the debugger, we would have little chance of recognizing an incorrect value in that mass of data. If we see, for example, a black pixel, or a white pixel, or notice that the red and green channels are swapped, then we may be able to deduce the nature of the error that caused this, or at least know which inputs cause the routine to fail.
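
As a sketch of the first strategy, the checks below could be applied to every hit reported by the intersection routine. The helper names and tolerances are hypothetical. The standard assert macro is compiled out when NDEBUG is defined, which is what makes these checks free in an optimized build.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical helpers; they are not part of the chapter's code base.

// True if the barycentric weights are finite and sum to one within a tolerance.
inline bool weightsSumToOne(const float weight[3], float tolerance = 1e-4f) {
    const float sum = weight[0] + weight[1] + weight[2];
    return std::isfinite(sum) && (std::fabs(sum - 1.0f) <= tolerance);
}

// True if no weight lies more than `slack` below zero, that is, the
// point is inside the triangle or very slightly outside an edge.
inline bool weightsInsideTriangle(const float weight[3], float slack = 1e-4f) {
    return (weight[0] >= -slack) && (weight[1] >= -slack) && (weight[2] >= -slack);
}

// Call on every reported hit. Passes silently when the values are sane and
// stops the program at this location in a debug build when they are not.
inline void checkHit(const float weight[3], float dist) {
    assert(std::isfinite(dist) && (dist > 0.0f));
    assert(weightsSumToOne(weight));
    assert(weightsInsideTriangle(weight));
}
```

Because a not-a-number weight fails both predicates, these assertions also catch the case where a division by a near-zero value leaks through to the caller.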

15.4.5. Shading

We are now ready to implement shade. This routine computes the incident radiance at the intersection point P and how much radiance scatters back along the eye ray to the viewer.

Let’s consider only light transport paths that go directly from the source to the surface to the camera. Under this restriction, light arrives at the surface only from the directions to the lights, so we need to consider only a finite number of ωi values. Let’s also assume for the moment that there is always a line of sight to the light. This means that there will (perhaps incorrectly) be no shadows in the rendered image.

Listing 15.17 iterates over the light sources in the scene (note that we have only one in our test scene). For each light, the loop body computes the distance and direction to that light from the point being shaded. Assume that lights emit uniformly in all directions and are at finite locations in the scene. Under these assumptions, the incident radiance L_i at point P is proportional to the total power of the source divided by the square of the distance between the source and P. This is because at a given distance, the light’s power is distributed equally over a sphere of that radius. Because we are ignoring shadowing, let the visible function always return true for now. In the future it will return false if there is no line of sight from the source to P, in which case the light should contribute no incident radiance.

The outgoing radiance to the camera, L_o, is the sum over all lights of the fraction of each light’s incident radiance that scatters in that direction. We abstract the scattering function into a BSDF. We implement this function as a class so that it can maintain state across multiple invocations and support an inheritance hierarchy. Later in this book, we will also find that it is desirable to perform other operations beyond invoking this function; for example, we might want to sample with respect to the probability distribution it defines. Using a class representation will allow us to later introduce additional methods for these operations.

The evaluateFiniteScatteringDensity method of that class evaluates the scattering function for the given incoming and outgoing directions. We then always multiply the result by the incoming radiance and by the cosine of the angle between w_i and n, which accounts for the projected area over which the incident radiance is distributed (by the Tilting principle).

Listing 15.17: The single-bounce shading code.

     1  void shade(const Scene& scene, const Triangle& T, const Point3& P,
                   const Vector3& n, const Vector3& w_o, Radiance3& L_o) {
     2
     3      L_o = Color3(0.0f, 0.0f, 0.0f);
     4
     5      // For each direction (to a light source)
     6      for (unsigned int i = 0; i < scene.lightArray.size(); ++i) {
     7          const Light& light = scene.lightArray[i];
     8
     9          const Vector3& offset = light.position - P;
    10          const float distanceToLight = offset.length();
    11          const Vector3& w_i = offset / distanceToLight;
    12
    13          if (visible(P, w_i, distanceToLight, scene)) {
    14              const Radiance3& L_i = light.power / (4 * PI * square(distanceToLight));
    15
    16              // Scatter the light
    17              L_o +=
    18                  L_i *
    19                  T.bsdf().evaluateFiniteScatteringDensity(w_i, w_o) *
    20                  max(0.0f, w_i.dot(n));
    21          }
    22      }
    23  }
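
To check the arithmetic of the loop body by hand, it can help to reduce it to a single color channel and a single unshadowed light. The sketch below does that under stated assumptions: Vec3 and directRadiance are hypothetical names, the scattering density f is passed in as a plain number, and visibility is assumed to be true.

```cpp
#include <algorithm>
#include <cmath>

// Minimal stand-in vector type (an assumption, not the chapter's Vector3).
struct Vec3 { float x, y, z; };

inline Vec3 operator-(const Vec3& a, const Vec3& b) {
    return {a.x - b.x, a.y - b.y, a.z - b.z};
}
inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}

// One channel of the outgoing radiance due to one unshadowed point light.
// f is the value of the scattering density for this pair of directions.
float directRadiance(const Vec3& P, const Vec3& n,
                     const Vec3& lightPosition, float lightPower, float f) {
    const float kPi = 3.14159265358979f;
    const Vec3 offset = lightPosition - P;
    const float distanceToLight = std::sqrt(dot(offset, offset));
    const Vec3 w_i = {offset.x / distanceToLight,
                      offset.y / distanceToLight,
                      offset.z / distanceToLight};

    // The power spreads over a sphere of area 4 pi r^2.
    const float L_i = lightPower / (4.0f * kPi * distanceToLight * distanceToLight);

    // Scatter, and account for the tilt of the surface away from the light.
    return L_i * f * std::max(0.0f, dot(w_i, n));
}
```

For example, a light of power 100 placed 2 units directly above a surface with f = 1/π gives 100 / (16π²) ≈ 0.633. Moving the light to 4 units divides the result by four, and moving it below the surface gives zero because of the clamped cosine.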

15.4.6. Lambertian Scattering

The simplest implementation of the BSDF assumes a surface appears to be the same brightness independent of the viewer’s orientation. That is, evaluateFiniteScatteringDensity returns a constant. This is called Lambertian reflectance, and it is a good model for matte surfaces such as paper and flat wall paint. It is also trivial to implement. Listing 15.18 gives the implementation (see Section 14.9.1 for a little more detail and Chapter 29 for a lot more). It has a single member, k_L, that is the “color” of the surface. For energy conservation, every channel of this value should lie in the range [0, 1].

Listing 15.18: Lambertian BSDF implementation, following Listing 14.6.

    class BSDF {
    public:
        Color3 k_L;

        /** Returns f = L_o / (L_i * w_i.dot(n)) assuming
            incident and outgoing directions are both in the
            positive hemisphere above the normal */
        Color3 evaluateFiniteScatteringDensity
        (const Vector3& w_i, const Vector3& w_o) const {
            return k_L / PI;
        }
    };
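
The division by PI and the [0, 1] bound on k_L can be checked numerically. The sketch below integrates the constant density k_L / π, weighted by the cosine term, over the hemisphere above the surface; the result is the fraction of incident light that is scattered. The function name, the single-channel simplification, and the midpoint-rule integration are all choices made for this illustration.

```cpp
#include <cmath>

// Fraction of incident light scattered by a Lambertian BSDF whose density is
// the constant k_L / pi, for one color channel. Integrates f * cos(theta)
// over the hemisphere with the midpoint rule in the polar angle theta.
double lambertianReflectedFraction(double k_L, int steps = 1000) {
    const double pi = 3.14159265358979323846;
    const double f = k_L / pi;
    const double dTheta = (pi / 2.0) / steps;

    double sum = 0.0;
    for (int i = 0; i < steps; ++i) {
        const double theta = (i + 0.5) * dTheta;
        // Solid angle of the ring of directions at polar angle theta.
        const double dOmega = 2.0 * pi * std::sin(theta) * dTheta;
        sum += f * std::cos(theta) * dOmega;
    }
    return sum;
}
```

The integral of the cosine over the hemisphere is π, so the function returns approximately k_L itself. A value of k_L above 1 would therefore mean that the surface scatters more light than it receives.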

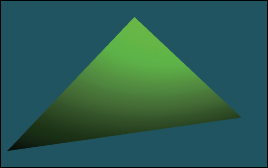

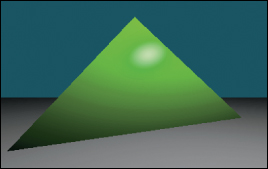

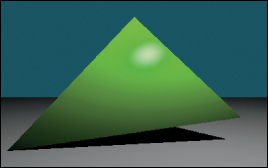

Figure 15.7 shows our triangle scene rendered with the Lambertian BSDF using k_L=Color3(0.0f, 0.0f, 0.8f). Because our triangle’s vertex normals are deflected away from the plane defined by the vertices, the triangle appears curved. Specifically, the bottom of the triangle is darker because the w_i.dot(n) term in line 20 of Listing 15.17 falls off toward the bottom of the triangle.

15.4.7. Glossy Scattering

The Lambertian surface appears dull because it has no highlight. A common approach for producing a more interesting shiny surface is to model it with something like the Blinn-Phong scattering function. An implementation of this function with the energy conservation factor from Sloan and Hoffmann [AMHH08, 257] is given in Listing 15.19. See Chapter 27 for a discussion of the origin of this function and alternatives to it. This is a variation on the shading function that we saw back in Chapter 6 in WPF, only now we are implementing it instead of just adjusting the parameters on a black box. The basic idea is simple: Extend the Lambertian BSDF with a large radial peak when the normal lies close to halfway between the incoming and outgoing directions. This peak is modeled by a cosine raised to a power since that is easy to compute with dot products. It is scaled so that the outgoing radiance never exceeds the incoming radiance and so that the sharpness and total intensity of the peak are largely independent parameters.

Listing 15.19: Blinn-Phong BSDF scattering density.

    class BSDF {
    public:
        Color3 k_L;
        Color3 k_G;
        float s;
        Vector3 n;
        . . .

        Color3 evaluateFiniteScatteringDensity(const Vector3& w_i,
                                               const Vector3& w_o) const {
            const Vector3& w_h = (w_i + w_o).direction();
            return
                (k_L + k_G * ((s + 8.0f) *
                    powf(std::max(0.0f, w_h.dot(n)), s) / 8.0f)) /
                PI;
        }
    };

For this BSDF, choose k_L + k_G < 1 at each color channel to ensure energy conservation, and s typically in the range [0, 2000]. The sharpness s acts on a logarithmic scale, so it must be changed in larger increments as it becomes larger to have the same perceptual impact.

Figure 15.8 shows the same triangle rendered with the normalized Blinn-Phong BSDF. Here, k_L=Color3(0.0f, 0.0f, 0.8f), k_G=Color3(0.2f, 0.2f, 0.2f), and s=100.0f.
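
To get a feel for the shape of the glossy peak, the density can be evaluated for a single channel at a few direction pairs. The sketch below mirrors the expression in Listing 15.19 with hypothetical names (Vec3, blinnPhongDensity) and scalar k_L and k_G; it assumes that w_i, w_o, and n all have unit length.

```cpp
#include <algorithm>
#include <cmath>

// Minimal stand-in vector type (an assumption, not the chapter's Vector3).
struct Vec3 { float x, y, z; };

inline Vec3 operator+(const Vec3& a, const Vec3& b) {
    return {a.x + b.x, a.y + b.y, a.z + b.z};
}
inline float dot(const Vec3& a, const Vec3& b) {
    return a.x * b.x + a.y * b.y + a.z * b.z;
}
inline Vec3 normalize(const Vec3& v) {
    const float len = std::sqrt(dot(v, v));
    return {v.x / len, v.y / len, v.z / len};
}

// One channel of the normalized Blinn-Phong scattering density.
float blinnPhongDensity(const Vec3& w_i, const Vec3& w_o, const Vec3& n,
                        float k_L, float k_G, float s) {
    const float kPi = 3.14159265358979f;
    const Vec3 w_h = normalize(w_i + w_o);  // half-vector between the directions
    const float lobe = std::pow(std::max(0.0f, dot(w_h, n)), s);
    return (k_L + k_G * ((s + 8.0f) * lobe / 8.0f)) / kPi;
}
```

With k_L = 0.8, k_G = 0.2, and s = 100, the density is (0.8 + 0.2 · 108 / 8) / π ≈ 1.11 when the half-vector coincides with the normal, and it falls back to the Lambertian value 0.8 / π ≈ 0.25 once the half-vector is 45 degrees away from the normal.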

15.4.8. Shadows

The shade function in Listing 15.17 only adds the illumination contribution from a light source if there is an unoccluded line of sight between that source and the point P being shaded. Areas that are occluded are therefore darker. This absence of light is the phenomenon that we recognize as a shadow.

In our implementation, the line-of-sight visibility test is performed by the visible function, which is supposed to return true if and only if there is an unoccluded line of sight. While working on the shading routine we temporarily implemented visible to always return true, which means that our images contain no shadows. We now revisit the visible function in order to implement shadows.