5.2. Important Concepts

Parallelism and threading can be confusing, so the following sections answer some of the questions many developers have.

5.2.1. Why Do I Need These Enhancements?

Can't you just create lots of separate threads? Well, you can, but there are a couple of issues with this approach. First, creating a thread is a resource-intensive process, so (depending on the type of work you do) it might not be the most efficient or quickest way to complete a task. Second, creating too many threads can slow task completion: each thread gets only a small slice of CPU time, and the operating system spends more and more effort switching between threads instead of letting them finish their work. And what happens if someone loads up two instances of your application? Each instance creates its own threads, and they all compete for the same CPUs.

To avoid some of these issues, .NET has a thread pool class (ThreadPool) that keeps a bunch of threads up and running, ready to do your bidding. The thread pool can also limit the number of threads it creates, which helps prevent thread starvation issues.
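As a rough sketch of what using the thread pool directly looks like (assuming .NET 4.0's System.Threading namespace; ThreadPoolSketch and SquareOnThreadPool are made-up names for illustration), the following code queues independent work items with ThreadPool.QueueUserWorkItem rather than creating a new Thread for each one. Notice that the pool itself gives you no "all done" notification, so the sketch has to track completion by hand with a CountdownEvent.

```csharp
using System;
using System.Threading;

// Sketch: queue independent work items to the .NET thread pool
// instead of creating a new Thread for each one.
public static class ThreadPoolSketch
{
    // Queues 'count' work items; each one squares its own index.
    // Returns the results once every work item has finished.
    public static int[] SquareOnThreadPool(int count)
    {
        int[] results = new int[count];

        // The pool offers no built-in completion signal, so we count
        // finished work items ourselves.
        using (var done = new CountdownEvent(count))
        {
            for (int i = 0; i < count; i++)
            {
                int index = i; // copy the loop variable for the closure
                ThreadPool.QueueUserWorkItem(_ =>
                {
                    results[index] = index * index; // each item writes only its own slot
                    done.Signal();                  // report that this item is finished
                });
            }

            done.Wait(); // block until every work item has called Signal()
        }

        return results;
    }
}
```

The threads doing the work are reused pool threads, so no thread is created or destroyed per work item; the bookkeeping for "has everything finished?" is entirely your responsibility.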

However, the thread pool isn't so great at letting you know when work has been completed, or at cancelling work once it is running. The thread pool also has no information about the context in which the work was created, which means it can't schedule work as efficiently as it could. Enter the new parallelization functionality, which adds better support for cancellation and scheduling and offers a more intuitive way of programming.

Note that the parallelization functionality works on top of .NET's thread pool instead of replacing it. See Chapter 4 for details about improvements made to the thread pool in this release.
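For comparison, here is a minimal sketch of similar work expressed as a task with the .NET 4.0 Task Parallel Library, using Task.Factory.StartNew and a CancellationTokenSource. TaskCancellationSketch, SumUpTo, and RunSum are hypothetical names; the point is that waiting for completion, getting a result back, and cancelling are built in rather than hand-rolled.

```csharp
using System;
using System.Threading;
using System.Threading.Tasks;

// Sketch: a task can be waited on, returns a result and supports cancellation.
public static class TaskCancellationSketch
{
    // Sums 0..limit-1, checking the token on every iteration so the work
    // stops promptly if cancellation is requested while it is running.
    public static long SumUpTo(long limit, CancellationToken token)
    {
        long total = 0;
        for (long i = 0; i < limit; i++)
        {
            token.ThrowIfCancellationRequested();
            total += i;
        }
        return total;
    }

    // Runs SumUpTo as a task. Returns the sum, or null if the task was cancelled.
    public static long? RunSum(long limit, bool cancelImmediately)
    {
        using (var cts = new CancellationTokenSource())
        {
            if (cancelImmediately)
            {
                cts.Cancel(); // request cancellation before the work starts
            }

            Task<long> task = Task.Factory.StartNew(
                () => SumUpTo(limit, cts.Token), cts.Token);

            try
            {
                task.Wait();        // completion notification is built in
                return task.Result; // and so is the result
            }
            catch (AggregateException ex)
            {
                // Cancellation surfaces as an OperationCanceledException
                // (TaskCanceledException) wrapped in an AggregateException;
                // any other exception is rethrown by Handle.
                ex.Handle(inner => inner is OperationCanceledException);
                return null;
            }
        }
    }
}
```

Calling RunSum(10, false) returns 45, while RunSum(10, true) cancels the task before it ever runs and returns null.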

5.2.2. Concurrent != Parallel

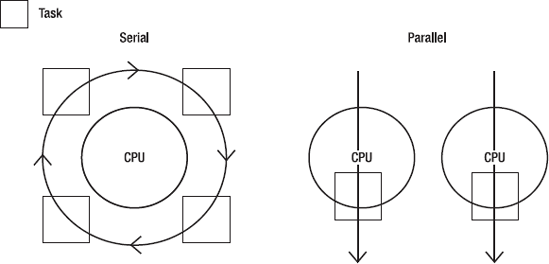

If your application is multithreaded, is it running in parallel? Probably not—applications running on a single CPU machine can appear to run in parallel because the operating system allocates time with the CPU to each thread and then rapidly switches between them (known as time slicing). Threads might not ever be actually running at the same time (although they could be), whereas in a parallelized application work is actually being conducted at the same time (Figure 5-1). Processing work at the same time can introduce some complications in your application regarding access to resources.

Daniel Moth (from the Parallel computing team at Microsoft) puts it succinctly when he says the following (www.danielmoth.com/Blog/2008/11/threadingconcurrency-vs-parallelism.html):

On a single core, you can use threads and you can have concurrency, but to achieve parallelism on a multi-core box, you have to identify in your code the exploitable concurrency: the portions of your code that can truly run at the same time.

Figure 5.1. Multithreaded != parallelization
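To make Moth's point concrete, here is a small sketch (hypothetical class and method names, using Parallel.For from System.Threading.Tasks) contrasting a loop whose iterations are independent, and therefore exploitable concurrency, with a running-total loop in which every iteration depends on the one before it.

```csharp
using System;
using System.Threading.Tasks;

// Sketch: spotting exploitable concurrency in two similar-looking loops.
public static class ExploitableConcurrencySketch
{
    // Each iteration reads input[i] and writes output[i] only, so the
    // iterations are independent and can safely run at the same time.
    public static double[] SquareRoots(double[] input)
    {
        var output = new double[input.Length];
        Parallel.For(0, input.Length, i =>
        {
            output[i] = Math.Sqrt(input[i]);
        });
        return output;
    }

    // A running total: iteration i needs the total from iteration i - 1,
    // so simply handing these iterations to Parallel.For would give wrong
    // answers. This loop is left sequential.
    public static long[] RunningTotals(long[] input)
    {
        var output = new long[input.Length];
        long total = 0;
        for (int i = 0; i < input.Length; i++)
        {
            total += input[i];
            output[i] = total;
        }
        return output;
    }
}
```

The two loops look almost the same; what makes the first one parallelizable is the absence of any dependency between iterations.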

5.2.3. Warning: Threading and Parallelism Will Increase Your Application's Complexity

Although the new parallelization enhancements greatly simplify writing parallelized applications, they do not eliminate the issues you might have encountered in any application that uses multiple threads:

Race conditions: "Race conditions arise in software when separate processes or threads of execution depend on some shared state. Operations upon shared states are critical sections that must be atomic to avoid harmful collision between processes or threads that share those states" (http://en.wikipedia.org/wiki/Race_condition).

Deadlocks: "A deadlock is a situation in which two or more competing actions are waiting for the other to finish, and thus neither ever does. It is often seen in a paradox like the chicken or the egg" (http://en.wikipedia.org/wiki/Deadlock). Also see http://en.wikipedia.org/wiki/Dining_philosophers_problem.

Thread starvation: Thread starvation can be caused by creating too many threads (no one thread gets enough CPU time to complete its work because of time slicing) or by a flawed locking mechanism that results in a deadlock.

Coding and debugging difficulties: Debugging and developing applications is tricky enough without the additional complexity of multiple threads executing.

Environmental: Code must be optimized for different machine environments (e.g., number of CPUs/cores, memory, storage media).
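The following sketch shows two of these issues in miniature: a race condition on a shared counter, fixed with Interlocked or a lock, and a common way to avoid a lock-ordering deadlock. SharedStateSketch, the Account type, and the Transfer method are hypothetical examples, not part of any .NET API; locking on the Account object itself is a shortcut for brevity, and production code would usually lock a private object instead.

```csharp
using System.Threading;
using System.Threading.Tasks;

// Sketch: a race condition on shared state, two fixes, and lock ordering.
public static class SharedStateSketch
{
    // UNSAFE: counter++ is a read-modify-write, so two threads can read the
    // same value and one increment is lost. The result is often less than
    // 'iterations' and can differ from run to run.
    public static int CountUnsafe(int iterations)
    {
        int counter = 0;
        Parallel.For(0, iterations, _ => { counter++; });
        return counter;
    }

    // Fix 1: make the increment itself atomic.
    public static int CountWithInterlocked(int iterations)
    {
        int counter = 0;
        Parallel.For(0, iterations, _ => Interlocked.Increment(ref counter));
        return counter;
    }

    // Fix 2: guard the critical section so only one thread runs it at a time.
    public static int CountWithLock(int iterations)
    {
        int counter = 0;
        object gate = new object();
        Parallel.For(0, iterations, _ =>
        {
            lock (gate)
            {
                counter++;
            }
        });
        return counter;
    }

    // Hypothetical account type used to illustrate lock ordering.
    public sealed class Account
    {
        public readonly int Id;
        public decimal Balance;

        public Account(int id, decimal balance)
        {
            Id = id;
            Balance = balance;
        }
    }

    // Always acquire the two locks in the same order (lower Id first), so a
    // transfer A->B and a simultaneous transfer B->A cannot each hold one
    // lock while waiting forever for the other.
    public static void Transfer(Account from, Account to, decimal amount)
    {
        Account first = from.Id < to.Id ? from : to;
        Account second = from.Id < to.Id ? to : from;

        lock (first)
        {
            lock (second)
            {
                from.Balance -= amount;
                to.Balance += amount;
            }
        }
    }
}
```

If Transfer instead locked from and then to, two opposite transfers running at the same moment could each grab their first lock and wait on each other indefinitely; a single global lock order rules that out.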

5.2.4. Crap Code Running in Parallel Is Just Parallelized Crap Code

Perhaps this is an obvious point, but before you try to speed up any code by parallelizing it, ensure that it is written in the most efficient manner. Crap code running in parallel is now just parallelized crap code; it still won't perform as well as it could!
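As a contrived illustration (FixTheAlgorithmFirst and its methods are hypothetical names), compare a brute-force duplicate check spread across cores with PLINQ's AsParallel() against a single-threaded HashSet version. The parallel version still performs O(n^2) comparisons in total, so for large inputs fixing the algorithm is likely to be the bigger win.

```csharp
using System.Collections.Generic;
using System.Linq;

// Sketch: parallelizing an inefficient algorithm vs. fixing the algorithm.
public static class FixTheAlgorithmFirst
{
    // O(n^2) pairwise comparison spread across cores with PLINQ:
    // the total amount of work is still O(n^2).
    public static bool HasDuplicatesParallelBruteForce(int[] values)
    {
        return Enumerable.Range(0, values.Length).AsParallel().Any(i =>
        {
            for (int j = i + 1; j < values.Length; j++)
            {
                if (values[i] == values[j]) return true;
            }
            return false;
        });
    }

    // O(n) expected time on a single thread: HashSet.Add returns false
    // when the value is already present.
    public static bool HasDuplicatesWithHashSet(int[] values)
    {
        var seen = new HashSet<int>();
        foreach (int v in values)
        {
            if (!seen.Add(v)) return true;
        }
        return false;
    }
}
```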

5.2.5. What Applications Benefit from Parallelism?

Many applications contain some segments of code that will benefit from parallelization and some that will not. Code that is likely to benefit from being run in parallel will probably have the following characteristics:

It can be broken down into self-contained units.

Those units have no dependencies on each other and no shared state.

A classic example of code that would benefit from being run in parallel is code that goes off to call an external service or perform a long-running calculation (e.g., iterating through stock quotes and performing a long-running calculation by querying historical data).

This type of problem is an ideal candidate for parallelization because each individual calculation is independent and so can safely be run in parallel. Some people like to refer to such problems as "embarrassingly parallel" (although Stephen Toub of Microsoft suggests "delightfully parallel") because they are so well suited to parallelization.
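A sketch of the stock quote scenario might look like the following. CalculateIndicator is a hypothetical stand-in for the long-running calculation (here just an average, as a placeholder), and the symbols and data are made up; the structure to notice is that each symbol is processed independently by Parallel.ForEach, and the only shared object is a ConcurrentDictionary, which is designed for concurrent writes.

```csharp
using System.Collections.Concurrent;
using System.Collections.Generic;
using System.Threading.Tasks;

// Sketch of a "delightfully parallel" workload: one independent
// calculation per stock symbol.
public static class StockSketch
{
    // Hypothetical placeholder for a long-running calculation over
    // historical prices. It depends only on its own input.
    public static double CalculateIndicator(IList<double> history)
    {
        double sum = 0;
        foreach (double price in history) sum += price;
        return history.Count == 0 ? 0 : sum / history.Count;
    }

    public static IDictionary<string, double> CalculateAll(
        IDictionary<string, IList<double>> historyBySymbol)
    {
        // ConcurrentDictionary is safe to write to from several threads at once.
        var results = new ConcurrentDictionary<string, double>();

        Parallel.ForEach(historyBySymbol, pair =>
        {
            results[pair.Key] = CalculateIndicator(pair.Value);
        });

        return results;
    }
}
```

In a real application the body would call the external service or run the expensive query; as long as each iteration stays independent, the loop itself does not need to change.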

5.2.6. I Have Only a Single-Core Machine; Can I Run These Examples?

Yes. The parallel runtime won't mind. This is a really important benefit of using the parallel libraries: they scale automatically to the number of cores available, so you don't have to alter your code to target different environments.
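One way to see this for yourself is the following sketch (hypothetical ScalingSketch and Cubes names). It runs the same Parallel.For loop with ParallelOptions.MaxDegreeOfParallelism set to 1, which only approximates a single-core machine, to Environment.ProcessorCount, and to -1 (no limit); the loop body is identical in every case and so are the results.

```csharp
using System;
using System.Threading.Tasks;

// Sketch: the same loop runs unchanged whether one core or many are used.
public static class ScalingSketch
{
    // maxDegreeOfParallelism: 1 approximates a single core, -1 means no limit.
    public static long[] Cubes(int count, int maxDegreeOfParallelism)
    {
        var results = new long[count];
        var options = new ParallelOptions { MaxDegreeOfParallelism = maxDegreeOfParallelism };

        Parallel.For(0, count, options, i =>
        {
            results[i] = (long)i * i * i; // independent per-index work
        });

        return results;
    }
}
```

For example, Cubes(1000, 1) and Cubes(1000, Environment.ProcessorCount) return the same array; only the number of threads working on it differs.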

5.2.7. Can the Parallelization Features Slow Me Down?

Maybe, although the difference is probably negligible. In some cases, using the new parallelization features (especially on a single-core machine) could slow your application down because of the additional overhead involved. However, if you have written your own custom scheduling mechanism, there is a good chance that Microsoft's implementation will perform more quickly and offer a number of other benefits, as you will see.
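Where does that overhead come from? Part of it is that Parallel.For invokes a delegate for every iteration. When each iteration does very little work, one commonly suggested mitigation is to hand each worker a contiguous range with Partitioner.Create(fromInclusive, toExclusive) from System.Collections.Concurrent, so the delegate runs once per range. The sketch below (hypothetical class and method names) shows both shapes; whether the chunked version is actually faster depends on the workload and machine, so measure before relying on it.

```csharp
using System.Collections.Concurrent;
using System.Threading.Tasks;

// Sketch: per-iteration delegates vs. one delegate per range.
public static class OverheadSketch
{
    // One delegate invocation per element: fine for heavy work,
    // potentially wasteful when the body is this small.
    public static int[] DoubleEachElement(int[] input)
    {
        var output = new int[input.Length];
        Parallel.For(0, input.Length, i => output[i] = input[i] * 2);
        return output;
    }

    // One delegate invocation per range; the inner loop is ordinary
    // sequential code over [range.Item1, range.Item2).
    public static int[] DoubleEachElementChunked(int[] input)
    {
        var output = new int[input.Length];
        if (input.Length == 0) return output; // Partitioner.Create rejects an empty range

        Parallel.ForEach(Partitioner.Create(0, input.Length), range =>
        {
            for (int i = range.Item1; i < range.Item2; i++)
            {
                output[i] = input[i] * 2;
            }
        });
        return output;
    }
}
```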

5.2.8. Performance

Of course, the main aim of parallelization is to increase an application's performance. But what sort of gains can you expect?

For the tests, I used some of the parallel code samples (http://code.msdn.microsoft.com/ParExtSamples). The code shown in Table 5-1 was run on a Dell XPS M1330 laptop running 64-bit Windows 7 Ultimate and Visual Studio 2010 Professional Beta 2. The laptop has an Intel Core 2 Duo CPU running at 2.5GHz, with 6MB of cache and 4GB of memory.
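If you want to try this kind of comparison on your own hardware, a simple Stopwatch-based harness is enough. The following is a sketch with a hypothetical SlowWork method standing in for CPU-bound work; it is not the code behind Table 5-1. Because timings vary from machine to machine, the only thing it checks is that the sequential and parallel versions produce the same results.

```csharp
using System;
using System.Diagnostics;
using System.Threading.Tasks;

// Sketch: time the same CPU-bound work sequentially and in parallel.
public static class TimingSketch
{
    // Hypothetical CPU-bound work item: deliberately slow, depends only on n.
    public static double SlowWork(int n)
    {
        double acc = 0;
        for (int k = 1; k <= 20000; k++)
        {
            acc += Math.Sqrt(n + k);
        }
        return acc;
    }

    public static double[] RunSequential(int count)
    {
        var results = new double[count];
        for (int i = 0; i < count; i++) results[i] = SlowWork(i);
        return results;
    }

    public static double[] RunParallel(int count)
    {
        var results = new double[count];
        Parallel.For(0, count, i => results[i] = SlowWork(i));
        return results;
    }

    // Returns the elapsed wall-clock time of 'action' in milliseconds.
    public static long TimeIt(Action action)
    {
        Stopwatch watch = Stopwatch.StartNew();
        action();
        watch.Stop();
        return watch.ElapsedMilliseconds;
    }
}
```

When measuring, use a Release build run outside the debugger and repeat each measurement a few times, since the first run also pays for JIT compilation.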

|