Classification and Regression Trees (CART) is one of the most popular decision tree algorithms. It is a binary recursive partitioning algorithm that can be used to process continuous and nominal attributes.

There are three main steps in the CART algorithm. The first is to construct the maximum tree (binary tree). The second step is to choose the right size of the tree. The last step is to classify new data using the result tree.

Compared to other algorithms, there are many important characteristics of CART:

- Binary decision tree (a binary recursive partitioning process)

- The source dataset can have continuous or nominal attributes

- No stopping rule (unless no possible splits are available)

- Tree pruning with cost-complexity pruning

- Nonparametric

- No variables to be selected in advance

- The missing value is dealt with an adaptive and better strategy

- The outlier can be easily handled

- No assumptions

- Computationally fast

- At each split point, only one variable is used

- Only one optimal tree is taken as the result tree, which is formed from a sequence of nested pruned candidate trees generated by CART

- Automatically handling the missing value in the source dataset

The weighted Gini index equation is defined as follows:

The CART measure is different; the goodness of the split point is proportional to the value of measure. The higher the better.

Splitting rules, with the omission of the following parts, is too lengthy to include in this section:

- Separate handling of continuous and categorical splitters

- Special handling for categorical splitters with multiple levels

- Missing value handling

- Tree pruning

- Tree selection

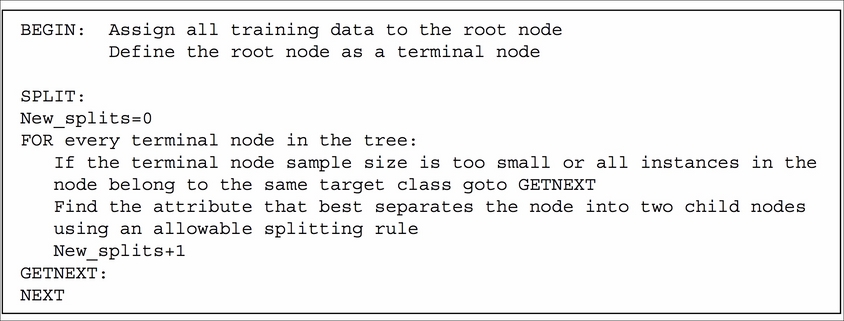

Here is the pseudocode for the CART algorithm, the simplified tree-growing algorithm:

The simplified pruning algorithm is as follows:

Please look up the R codes file ch_02_cart.R from the bundle of R codes for the previously mentioned algorithms. One example is chosen to apply the CART algorithm to, in the following section.

Web key resource judgment arises from the domain of web information retrieval and web search engines. The original concept is from the authority value, the hub value, and the HITS algorithm. During queries of information from IR systems or search engines, finding important and related information from an overwhelmingly increasing volume of information is a challenging task. A better judgment leads to less indexing storage and a more informative querying result.

A key resource page is a high quality web page with much more information per selected topic compared to an ordinary web page on the same topic. In order to measure the importance of a certain web page, feature selection is the first thing required in the design.

The link-based features used in current search technologies can't resolve such issues at an acceptable accuracy rate. To improve the accuracy rate, more global information across many data instances can be adopted in addition to the single-data-instance-related attributes or features, which means local attributes.

Experimental results show that the key web page should contain in-site out-links with anchor text to other pages. Non-content attributes, such as web page links related attributes and content structures of pages, can be applied to judge key resource pages. The possible attributes are listed as follows:

- In-degree or in-links: This denotes the number of links pointing to the page. Observation shows that the higher the number of in-links related to the key page, the more the links from other sites to that page, which means more recommendations to a certain extent.

- URL length or the depth of a page's URL: There are four types of URLs defined in the following box: root, subroot, path, and filename. The four types of URLs map to four levels of length, that is, 1 to 4 respectively. A lower prior probability with a lower level and a higher prior probability mean a bigger possibility to be a key resource page.

- In-site out-link anchor text rate: This refers to the rate of the length of the anchor text to the document or page content length.

- In-site out-link number: This refers to the number of links embedded in a page.

- Document length (in words): This filters out specified predefined non-usable characters from the document. This attribute can predict the relevance of a page because of the non-uniform distribution.

With the attributes just mentioned, the uniform sampling problem can be bypassed to a certain extent. The dataset can be easily built and used by decision tree induction algorithms such as CART.