6.6 Interprocess Communication Mechanisms

Processes often need to communicate with each other. Interprocess communication mechanisms are provided by the operating system as part of the process abstraction.

In general, a process can send a communication in one of two ways: blocking or nonblocking. After sending a blocking communication, the process goes into the waiting state until it receives a response. Nonblocking communication allows the process to continue execution after sending the communication. Both types of communication are useful.
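The same blocking/nonblocking distinction appears on the receiving side, where it is easy to demonstrate. The following is a minimal sketch, assuming a POSIX system and a pipe or similar file descriptor as the channel; the function name try_receive() is hypothetical and not part of any standard API. An ordinary read() on an empty pipe would put the caller into the waiting state, whereas this version returns immediately when no data are available.

```c
#include <errno.h>
#include <fcntl.h>
#include <sys/types.h>
#include <unistd.h>

/* Hypothetical helper: try to receive one byte without blocking.
   Returns 1 if a byte was read, 0 if no data were available,
   and -1 on error or end of file. */
int try_receive(int fd, char *out) {
    int flags = fcntl(fd, F_GETFL);
    if (flags == -1) return -1;

    /* Temporarily switch the descriptor to nonblocking mode. */
    if (fcntl(fd, F_SETFL, flags | O_NONBLOCK) == -1) return -1;

    ssize_t n = read(fd, out, 1);
    int saved_errno = errno;

    /* Restore the original (possibly blocking) mode. */
    fcntl(fd, F_SETFL, flags);

    if (n == 1) return 1;
    if (n == -1 && (saved_errno == EAGAIN || saved_errno == EWOULDBLOCK))
        return 0;   /* nothing to read yet; the caller keeps running */
    return -1;
}
```

A caller that gets 0 back can do other useful work and poll again later; a caller that prefers to wait simply uses read() directly.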

There are two major styles of interprocess communication: shared memory and message passing. The two are logically equivalent—given one, you can build an interface that implements the other. However, some programs may be easier to write using one rather than the other. In addition, the hardware platform may make one easier to implement or more efficient than the other.

6.6.1 Shared Memory Communication

Figure 6.14 illustrates how shared memory communication works in a bus-based system. Two components, such as a CPU and an I/O device, communicate through a shared memory location. The software on the CPU has been designed to know the address of the shared location; the address of the shared location has also been loaded into the proper register of the I/O device. If, as in the figure, the CPU wants to send data to the device, it writes to the shared location. The I/O device then reads the data from that location. The read and write operations are standard and can be encapsulated in a procedural interface.
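The procedural interface mentioned above might look like the following sketch. It is illustrative only: the names shm_write() and shm_read() are hypothetical, and an ordinary variable stands in for the shared location, which on real hardware would sit at a fixed address agreed on by the CPU software and the device.

```c
#include <stdint.h>

/* Stand-in for the shared location (an assumption for this sketch).
   volatile tells the compiler that the value may be read or changed
   by something other than this code, so accesses must not be
   optimized away. */
static volatile uint8_t shared_location;

/* CPU side: send one byte by writing it to the shared location. */
void shm_write(uint8_t value) {
    shared_location = value;
}

/* Device side (simulated here in software): fetch the byte. */
uint8_t shm_read(void) {
    return shared_location;
}
```

Hiding the location behind two functions means that the rest of the program does not need to know the address, which can then change without affecting callers.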

Figure 6.14 Shared memory communication implemented on a bus.

Example 6.7 describes the use of shared memory as a practical communication mechanism.

Example 6.7 Elastic Buffers as Shared Memory

The text compressor of Application Example 3.4 provides a good example of a shared memory. As shown below, the text compressor uses the CPU to compress incoming text, which is then sent on a serial line by a UART.

The input data arrive at a constant rate and are easy to manage. But because the output data are consumed at a variable rate, these data require an elastic buffer. The CPU and output UART share a memory area: the CPU writes compressed characters into the buffer and the UART removes them as necessary to fill the serial line. Because the number of bits in the buffer changes constantly, the compression and transmission processes need additional size information. In this case, coordination is simple: the CPU writes at one end of the buffer and the UART reads at the other end. The only challenge is to make sure that the UART does not overrun the buffer, that is, read past the last character the CPU has written.
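One common way to build such an elastic buffer is a circular buffer with separate write and read positions. The sketch below is an assumption about how the buffer could be organized, not the text compressor's actual code; the type elastic_buf, the eb_ functions, and the capacity EB_SIZE are all hypothetical. The difference between the two positions is the size information that both sides need.

```c
#include <stddef.h>
#include <stdint.h>

#define EB_SIZE 8   /* hypothetical capacity; one slot is always left empty */

typedef struct {
    uint8_t data[EB_SIZE];
    size_t head;    /* next slot the CPU will write */
    size_t tail;    /* next slot the UART will read */
} elastic_buf;

void eb_init(elastic_buf *b) {
    b->head = 0;
    b->tail = 0;
}

/* Number of characters currently waiting in the buffer. */
size_t eb_count(const elastic_buf *b) {
    return (b->head + EB_SIZE - b->tail) % EB_SIZE;
}

/* CPU side: add a character. Returns 1 on success, 0 if the buffer is full. */
int eb_put(elastic_buf *b, uint8_t c) {
    size_t next = (b->head + 1) % EB_SIZE;
    if (next == b->tail) return 0;      /* full: writing would clobber unread data */
    b->data[b->head] = c;
    b->head = next;
    return 1;
}

/* UART side: remove a character. Returns 1 on success, 0 if the buffer is empty. */
int eb_get(elastic_buf *b, uint8_t *c) {
    if (b->head == b->tail) return 0;   /* empty: nothing valid to read */
    *c = b->data[b->tail];
    b->tail = (b->tail + 1) % EB_SIZE;
    return 1;
}
```

Because only the CPU changes head and only the UART changes tail, the two sides never write the same index. In a real system where the UART side runs in an interrupt handler, the indices would also need to be declared volatile or accessed atomically.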

6.6.2 Message Passing


Message passing communication complements the shared memory model. As shown in Figure 6.15, each communicating entity has its own message send/receive unit. The message is stored not on the communications link but in the send/receive units at the endpoints. In contrast, shared memory communication can be seen as a memory block used as a communication device, in which all the data are stored in the communication link/memory.

Figure 6.15 Message passing communication.

Applications in which units operate relatively autonomously are natural candidates for message passing communication. For example, a home control system has one microcontroller per household device—lamp, thermostat, faucet, appliance, and so on. The devices must communicate relatively infrequently; furthermore, their physical separation is large enough that we would not naturally think of them as sharing a central pool of memory. Passing communication packets among the devices is a natural way to describe coordination between these devices. Message passing is the natural implementation of communication in many 8-bit microcontrollers that do not normally operate with external memory.

Queues

A queue is a common form of message passing. The queue uses a FIFO discipline and holds records that represent messages. The FreeRTOS.org system provides a set of queue functions. It allows queues to be created and deleted so that the system may have as many queues as necessary. A queue is described by the data type xQueueHandle and created using xQueueCreate:

xQueueHandle q1;

q1 = xQueueCreate(MAX_SIZE,sizeof(msg_record)); /* maximum number of records in queue, size of each record */

if (q1 == 0) /* error */

…

The queue is deleted using the vQueueDelete() function.

A message is put into the queue using xQueueSend() and received using xQueueReceive():

xQueueSend(q1,(void *)msg,(portTickType)0); /* queue, message to send, final parameter controls timeout */

if (xQueueReceive(q2,&(in_msg),0) == pdTRUE) { /* queue, buffer for the received message, timeout */

/* a message was copied into in_msg */

}

The final parameter in these functions specifies how long, in ticks, the calling task will wait for the operation to complete; a value of 0 means that the call returns immediately. In the case of a send, the task may have to wait for something to leave the queue to make room. In the case of a receive, the task may have to wait for data to arrive.
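To make the roles of the two creation parameters concrete, the following portable sketch models a FIFO queue of fixed-size records that are copied into and out of storage owned by the queue. It is not FreeRTOS code: the rec_queue type and the rq_ functions are hypothetical, and the model covers only the zero-timeout case, in which a send to a full queue or a receive from an empty queue fails immediately instead of waiting.

```c
#include <stddef.h>
#include <stdlib.h>
#include <string.h>

/* Hypothetical model of a queue of fixed-size records. */
typedef struct {
    unsigned char *storage;  /* capacity * item_size bytes */
    size_t item_size;        /* size of each record */
    size_t capacity;         /* maximum number of records */
    size_t count;            /* records currently queued */
    size_t head;             /* index of the oldest record */
} rec_queue;

rec_queue *rq_create(size_t capacity, size_t item_size) {
    rec_queue *q = malloc(sizeof *q);
    if (q == NULL) return NULL;
    q->storage = malloc(capacity * item_size);
    if (q->storage == NULL) { free(q); return NULL; }
    q->item_size = item_size;
    q->capacity = capacity;
    q->count = 0;
    q->head = 0;
    return q;
}

void rq_delete(rec_queue *q) {
    if (q != NULL) { free(q->storage); free(q); }
}

/* Copy one record into the queue. Returns 1 on success, 0 if full. */
int rq_send(rec_queue *q, const void *item) {
    if (q->count == q->capacity) return 0;
    size_t tail = (q->head + q->count) % q->capacity;
    memcpy(q->storage + tail * q->item_size, item, q->item_size);
    q->count++;
    return 1;
}

/* Copy the oldest record out of the queue. Returns 1 on success, 0 if empty. */
int rq_receive(rec_queue *q, void *item) {
    if (q->count == 0) return 0;
    memcpy(item, q->storage + q->head * q->item_size, q->item_size);
    q->head = (q->head + 1) % q->capacity;
    q->count--;
    return 1;
}
```

Because records are copied, the sender may reuse or modify its own message buffer as soon as the send returns. A nonzero timeout would replace the immediate failure returns with a wait managed by the operating system.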

6.6.3 Signals

Another form of interprocess communication commonly used in Unix is the signal. A signal is simple because it does not pass data beyond the existence of the signal itself. A signal is analogous to an interrupt, but it is entirely a software creation. A signal is generated by a process and transmitted to another process by the operating system.
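The following minimal sketch shows the mechanism using the POSIX calls sigaction() and kill(). The helper names install_handler(), send_signal(), and signal_received() are hypothetical, and the choice of SIGUSR1 is an assumption made for illustration. Note that the handler receives nothing but the signal number, which matches the observation that a signal carries no data beyond its own existence.

```c
#define _POSIX_C_SOURCE 200809L
#include <signal.h>
#include <sys/types.h>
#include <unistd.h>

/* Set by the handler; sig_atomic_t can be written safely from a handler. */
static volatile sig_atomic_t got_signal = 0;

/* Handler: runs asynchronously when SIGUSR1 is delivered. */
static void on_sigusr1(int signo) {
    (void)signo;
    got_signal = 1;
}

/* Receiving process: register the handler. Returns 0 on success. */
int install_handler(void) {
    struct sigaction sa;
    sa.sa_handler = on_sigusr1;
    sigemptyset(&sa.sa_mask);
    sa.sa_flags = 0;
    return sigaction(SIGUSR1, &sa, NULL);
}

/* Sending process: ask the operating system to deliver SIGUSR1
   to the process identified by target. Returns 0 on success. */
int send_signal(pid_t target) {
    return kill(target, SIGUSR1);
}

/* Receiving process: check whether the signal has arrived. */
int signal_received(void) {
    return got_signal;
}
```

In practice target would be the process ID of a different process. For a quick experiment, a single-threaded process can pass its own ID, obtained from getpid(), in which case the handler runs before kill() returns.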

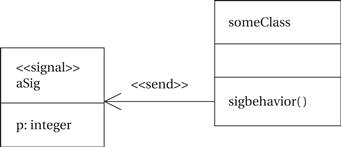

A UML signal is actually a generalization of the Unix signal. While a Unix signal carries no parameters other than a condition code, a UML signal is an object. As such, it can carry parameters as object attributes. Figure 6.16 shows the use of a signal in UML. The sigbehavior() behavior of the class is responsible for throwing the signal, as indicated by <<send>>. The signal object is indicated by the <<signal>> stereotype.

Figure 6.16 Use of a UML signal.

6.6.4 Mailboxes

The mailbox is a simple mechanism for asynchronous communication. Some architectures define mailbox registers. These mailboxes have a fixed number of bits and can be used for small messages. We can also implement a mailbox in software, using main memory for the mailbox storage and P() and V() to control access to it. A very simple version of a mailbox, one that holds only one message at a time, illustrates some important principles in interprocess communication.

In order for the mailbox to be most useful, we want it to contain two items: the message itself and a mail ready flag. The flag is true when a message has been put into the mailbox and cleared when the message is removed. (This assumes that each message is destined for exactly one recipient.) Here is a simple function to put a message into the mailbox, assuming that the system supports only one mailbox used for all messages:

void post(message *msg) {

P(mailbox.sem); /* wait for the mailbox */

copy(mailbox.data,msg); /* copy the data into the mailbox */

mailbox.flag = TRUE; /* set the flag to indicate a message is ready */

V(mailbox.sem); /* release the mailbox */

}

Here is a function to read from the mailbox:

boolean pickup(message *msg) {

boolean ready = FALSE; /* local copy of the ready flag */

P(mailbox.sem); /* wait for the mailbox */

ready = mailbox.flag; /* get the flag */

mailbox.flag = FALSE; /* remember that this message was received */

copy(msg, mailbox.data); /* copy the data into the caller’s buffer */

V(mailbox.sem); /* release the mailbox; nobody can post mail while we hold it */

return(ready); /* return the flag value */

}

Why do we need to use semaphores to protect the read operation? If we don’t, a pickup could receive the first part of one message and the second part of another. The semaphores in pickup() ensure that a post() cannot interleave between the memory reads of the pickup operation.
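The mailbox can be turned into a self-contained program along the following lines. This is a sketch under several assumptions: the message layout is hypothetical, P() and V() are modeled with a C11 atomic_flag used as a spinning binary semaphore (a real operating system would block the waiting process instead of spinning), and this variant copies the data out only when the flag shows that a message is ready.

```c
#include <stdatomic.h>
#include <stdbool.h>

/* Hypothetical message layout for this sketch. */
typedef struct { char text[16]; } message;

/* The single system-wide mailbox. */
static struct {
    atomic_flag sem;   /* stand-in for a binary semaphore */
    bool flag;         /* true when a message is waiting */
    message data;
} mailbox = { ATOMIC_FLAG_INIT, false, { "" } };

/* P(): acquire the semaphore, spinning until it is free. */
static void P(atomic_flag *s) {
    while (atomic_flag_test_and_set(s)) {
        /* busy-wait; an OS semaphore would suspend the caller here */
    }
}

/* V(): release the semaphore. */
static void V(atomic_flag *s) {
    atomic_flag_clear(s);
}

/* Put a message into the mailbox. */
void post(const message *msg) {
    P(&mailbox.sem);
    mailbox.data = *msg;      /* copy the whole message */
    mailbox.flag = true;      /* mark it as ready */
    V(&mailbox.sem);
}

/* Take a message out of the mailbox.
   Returns true if a message was ready and copied to *msg. */
bool pickup(message *msg) {
    P(&mailbox.sem);
    bool ready = mailbox.flag;
    if (ready) {
        *msg = mailbox.data;  /* copy only valid data */
    }
    mailbox.flag = false;
    V(&mailbox.sem);
    return ready;
}
```

Because the copy and the flag update both happen between P() and V(), a pickup() either sees a complete message or none at all. As in the version in the text, a second post() before a pickup() overwrites the earlier message.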