Chapter 14. Standard Approximations and Representations

14.1. Introduction

The real world contains too much detail for us to simulate it efficiently from first principles of physics and geometry. Mathematical models of the real world and the data structures and algorithms that implement them are always approximations. These approximations make graphics computationally tractable but introduce restrictions and error. The models and approximations are both geometric and algorithmic. For example, a ball is a simple geometric model of an orange. A simple computational model of light interaction might specify that the light passing through glass does not refract or lose energy.

In this chapter, we survey some pervasive approximations and their limitations. This chapter brings together a number of key assumptions about models and data structures for representing them that are implicit in the rest of the book and throughout graphics. It contains some of the engineering conventional wisdom and practical mathematical techniques accumulated over the past 50 years of computer graphics. It is what you need to know to apply your existing mathematics and computer science knowledge to computer graphics as it is practiced today. In order to quickly communicate a breadth of material, we’ll stay relatively shallow on details. Where there are deep implications of choosing a particular approximation, a later chapter on each particular topic will explain those implications with more nuance. To keep the text modular (and save you a lot of flipping), there is some duplication of ideas from both prior and succeeding chapters, and we’ve used some terms and units that have not yet been introduced, like steradians, but whose precise details don’t matter in a first reading at this stage.

The code samples in this chapter are based on the freely available OpenGL API (http://opengl.org) and G3D Innovation Engine library (http://g3d.sf.net). We recommend examining the details in the documentation for those or equivalent alternatives for further study of how these common approximations and representations manifest themselves in programming practice.

14.2. Evaluating Representations

In many cases, there are competing representations that have different properties. Which representation is best suited to a particular application depends on the goals of that application. Choosing the right representation for an application is a large part of the art of system design. Some factors to consider when evaluating a representation are

• Physical accuracy

• Perceived accuracy

• Design goals

• Space efficiency

• Time efficiency

• Implementation complexity

• Associated cost of content creation

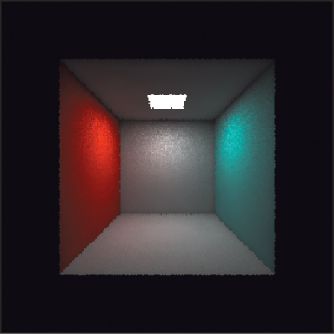

Physical accuracy is the easiest property to measure objectively. We can use a calibrated camera to measure the energy reflected from a known scene and compare that to a rendering of the scene, for example, as is often done with the Cornell Box (see Figure 14.1).

Figure 14.1: The Cornell box, a carefully measured five-sided, painted plywood box with a light source at the top, is used as a standard test model for rendering algorithms. Here it’s rendered by photon mapping with 1 million photons.

But physical accuracy is rarely the most important consideration in the creation of images. When the image is to be viewed by a human observer, errors that are imperceptible are less significant than those that are perceptible. So physical accuracy is the wrong metric for image quality. That’s also fortunate—regardless of how well we simulate a virtual scene, we are forced to accept huge errors from our displays. Today’s displays cannot reproduce the full intensity range of the real world and don’t create true 3D light fields into which you can focus your eyes.

Perceived accuracy is a better metric for quality, but it is hard to measure. There are many reasonable models that measure how a human observer will perceive a scene. These are used both for scientific analysis of new algorithms and directly as part of those algorithms—for example, video compression schemes frequently consider the perceptual error introduced by their compression. However, as discussed in Chapter 5, human perception is sensitive to the viewing environment, the task context, the image content, and of course, the particular human involved. So, while we can identify important perceptual trends, it is not possible to precisely quantify the reduction in perceived image quality at the level that we can quantify, say, a reduction in performance.

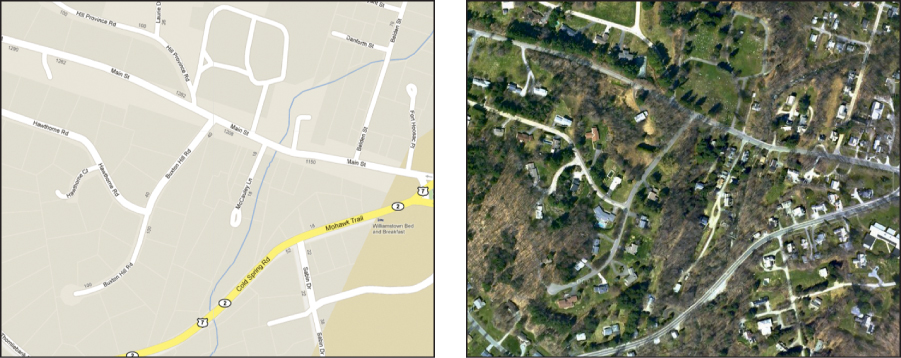

Even perceptual accuracy is not necessarily a good measure of image quality. A line drawing has little perceptual relationship to the hues and tones in a photograph, yet a good line drawing may be considered a higher-quality depiction of a scene than a poorly composed photograph, as shown in Figure 14.2. The model with best image quality is the one that best communicates the virtual scene to the viewer, in the style that the designer desires. This may be, for example, wireframe in a CAD program, painterly in an art piece, cartoony in a video game, or photorealistic for film. Often artists and designers intentionally simplify and deform geometric models, stylize lighting, and remove realism from rendering to better communicate their ideas. This kind of image quality is beyond objective measurement, which is one of the reasons that designing a graphics system is a subjective art as well as an engineering exercise.

Figure 14.2: A map contains less information and detail than a satellite photograph, but presents its information in a way that better communicates the salient elements to a human viewer. This is evidence that capturing many aspects of reality is not always the most effective way to model a scene. (Credit: © 2012 Google - Map data © 2012 Cnes/Spot Image, DigitalGlobe, GeoEye, MassGIS, Commonwealth of Massachusetts EOEA, New York GIS, USDA Farm Service Agency)

Space and time efficiency and implementation complexity go beyond mathematical modeling and into implementation. We seek to actually implement the algorithms that we design and apply them to real problems. For real-time interactive rendering, efficiency is paramount. A low-quality animation that is interactive almost always leads to a better experience in a virtual world than a high-quality one with limited or high-latency interaction. The accessibility and viability of a system in the market is driven by price. The computational and memory requirements, and developer-time costs to build a system, must be balanced against the quality of the images produced.

14.2.1. The Value of Measurement

We can draw some lessons by considering measurements of image quality. Advances in graphics have largely focused on space and time efficiency and physical image quality, even though we claim that perceptual quality, fidelity to the designer’s vision, and implementation complexity are also important factors. This is likely because efficiency and physical quality are more amenable to objective measurement. They aren’t necessarily easier to optimize for, but the objective measurements allow quantitative optimization. So the first lesson is that if you want something to improve, find an objective way to quantify it. Today’s physical image quality is very high, and within some limits we can also achieve very good perceptual image quality. Feature films regularly contain completely computer-generated images that are indistinguishable from photographs, and even low-power mobile devices feature interactive 3D graphics. The second lesson is to make sure that you optimized for what you really wanted. (This is an instance of the Know Your Problem principle from Chapter 1!) Despite the many advances in image quality, the process of modeling, animating, and rendering scenes using either tools or code has not advanced as far as one might hope. Implementation complexity has skyrocketed over the past 50 years despite (and sometimes because of) graphics middleware libraries and standardization of certain algorithms. Progress has been very slow outside of photorealism, perhaps because the quality of nonphotorealistic renderings is evaluated subjectively. Computer graphics does not today empower the typical user with the expressive and communicative ability of an artist using natural media.

14.2.2. Legacy Models

Beware that in this chapter we describe both the representations that are preferred for current practice and some that are less frequently recommended today. Some of the older techniques make tradeoffs that one might not select intentionally if designing a system from a blank slate today. That can be because they were developed early in the history of computer graphics, before certain aspects were well understood. It can also be because they were developed for systems that lacked the resources to support a more sophisticated model.

We include techniques that we don’t recommend using for two reasons. First, this chapter describes what you need to know, not what you should do. Classic graphics papers contain great key ideas surrounded by modeling artifacts of their publication date. You need to understand the modeling artifacts to separate them from the key ideas. Graphics systems contain models needed to support legacy applications, such as Gouraud interpolation of per-vertex lighting in OpenGL. You will encounter and likely have to help maintain such systems and can’t abandon the past in practice.

Second, out-of-fashion ideas have a habit of returning in systems. As we discussed in this section, the best model for an application is rarely the most accurate—there are many factors to be considered. The relative costs of addressing these are highly dynamic. One source of change in cost is due to algorithmic discoveries. For example, the introduction of the fast Fourier transform, the rise of randomized algorithms, and the invention of shading languages changed the efficiency and implementation complexity of major graphics algorithms. Another source of change is hardware. Progress in computer graphics is intimately tied to the “constant factors” prescribed by the computers of the day, such as the ratio of memory size to clock speed or the power draw of a transistor relative to battery capacity. When technological or economic factors change these constants, the preferred models for software change with them. When real-time 3D computer graphics entered the consumer realm, it adopted models that the film industry had abandoned a decade earlier as too primitive. A film industry server farm could bring thousands of times more processing and memory to bear on a single frame than a consumer desktop or game console, so that industry faced a very different quality-to-performance tradeoff. More recently the introduction of 3D graphics in mobile form factors again resurrected some of the lower-quality approximations.

14.3. Real Numbers

An implicit assumption in most computer science is that we can represent real numbers with sufficient accuracy for our application in digital form. In graphics we often find ourselves dangerously close to the limit of available precision, and many errors are attributable to violations of that assumption. So, it is worth explicitly considering how we approximate real numbers before we build more interesting data structures that use them.

Fixed point, normalized fixed point, and floating point are the most pervasive approximations of real numbers employed in computer graphics programs. Each has finite precision, and error tends to increase as more operations are performed. When the precision is too low for a task, surprising errors can arise. These are often hard to debug because the algorithm may be correct—for real numbers—so mathematical tests will yield seemingly inconsistent results. For example, consider a physical simulation in which a ball approaches the ground. The simulator might compute that the ball must fall d meters to exactly contact the ground. It advances the ball d – 0.0001 meters, on the assumption that this will represent the state of the system immediately before the contact. However, after that transformation, a subsequent test reveals that the ball is in fact partly underneath the ground. This occurs because mathematically true statements, such as d = d – a + a (and especially, a = (a/b) * b), may not always hold for a particular approximation of real numbers. This is compounded by optimizing compilers. For example, a = b + c; e = a + d may yield a different result than e = b + c + d due to differing intermediate precision, and even if you write the former, your optimizing compiler may rewrite it as the latter. Perhaps the most commonly observed precision artifact today is self-shadowing “acne” caused by insufficient precision when computing the position of a point in the scene independently relative to the camera and to the light. When these give different results with an error in one direction, the point casts a shadow on itself. This manifests as dark parallel bands and dots across surfaces.

More exotic, and potentially more accurate, representations of real numbers are available than fixed and floating point. For example, rational numbers can be accurately encoded as the ratio of two bignums (i.e., dynamic bit-length integers). These rational numbers can be arbitrarily close approximations of real numbers, provided that we’re willing to spend the space and time to operate on them. Of course, we are seldom willing to pay that cost.

14.3.1. Fixed Point

Fixed-point representations specify a fixed number of binary digits and the location of a decimal point among those digits. They guarantee equal precision independent of magnitude. Thus, we can always bound the maximum error in the representation of a real number that lies within the representable range. Fixed point leads to fairly simple (i.e., low-cost) hardware implementation because the implementation of fixed-point operations is nearly identical to that of integer operations. The most basic form is exact integer representation, which almost always uses the two’s complement scheme for efficiently encoding negative values.

Fixed-point representations have four parameters: signed or unsigned, normalized or not, number of integer bits, and number of fractional bits. The latter two are often denoted using a decimal point. For example, “24.8 fixed point format” denotes a fixed-point representation that has 32 bits total, 24 of which are devoted to the integer portion and eight to the fractional portion.

An unsigned normalized b-bit fixed-point value corresponding to the integer 0 ≤ x ≤ 2b – 1 is interpreted as the real number x/(2b – 1), that is, on the range [0, 1]. A signed normalized fixed-point value has a range of [–1, 1]. Since direct mapping of the range [0, 2b – 1] to [–1, 1] would preclude an exact representation of 0, it is common to map the two lowest bit patterns to –1, thus sliding the number line slightly and making –1, 0, and 1 all exactly representable.

Normalized values are particularly important in computer graphics because we frequently need to represent unit vectors, dot products of unit vectors, and fractional reflectivities using compact storage.

A terse naming convention is desirable for expressing numeric types in a graphics program because there are frequently so many variations. One common convention for fixed point decorates int, or fix with prefixes and suffixes. In this convention, the prefix u denotes unsigned, the prefix n denotes normalized, and the suffix denotes the bit allocations using an underscore instead of a period. For example, uint8 is an 8-bit unsigned fixed-point number with range [0, 255] and ufix5_3 is an unsigned fixed-point number with 5 integer bits and 3 fractional bits on the range [0, 25 – 2–3] = [0, 31.875]. An even more terse variation of this in OpenGL is the use of I to represent non-normalized fixed point and an assumption of unsigned normalized representation. For example, GL_R8 indicates an 8-bit normalized value (unint8) on the range [0, 1] and GL_RI8 indicates an integer on the range [0, 255].

Some common fixed-point formats currently in use in hardware graphics are unsigned normalized 8-bit for reflectivity, normalized 8-bit for unit vectors, and 24.8 fixed point for 2D positions during rasterization. Fixed-point is infrequently used in modern software rendering. CPUs are not very efficient for most operations on fixed-point formats and software rendering today tends to focus on quality more than performance, so one less frequently seeks minimal data formats if they are inconvenient. The exception is software rasterization—24.8 format is used in hardware, not for performance but because fixed-point arithmetic is exact: a + b – b = a (so long as the intermediate results do not overflow), which is not the case for most floating-point a and b.

14.3.2. Floating Point

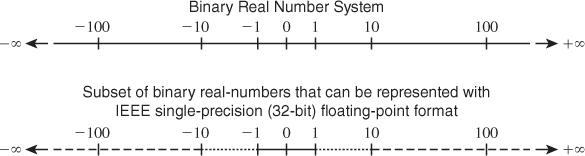

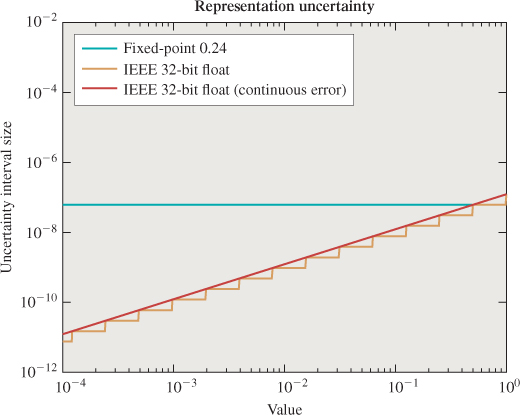

Floating-point representations allow the location of the decimal point to move—in some cases, far beyond the number of digits. Although the details of the commonly used IEEE 754 floating-point representations are slightly more complicated than scientific notation, the key ideas are similar. A number can be represented as a mantissa and an exponent; for example, a × 10b can be encoded by concatenating the bits of a and b, which are themselves integer or fixed-point numbers. In practice, the IEEE 754 representations allow explicit representation of concepts like “not a number” (e.g., 0/0) and positive and negative infinity. These could be, but rarely are, represented as specific bit patterns in fixed point. Floating point offers increased range or precision over fixed point at the same bit count; the catch is that it rarely offers both at the same time. The magnitude of the error in the approximation of a real number depends on the specific number; it tends to be larger for larger-magnitude numbers (see Figures 14.3, 14.4). This makes it complicated to bound the error in algorithms that use this representation. Floating point also tends to require more complicated circuits to implement.

Figure 14.3: Subset of binary real numbers that can be represented with IEEE single-precision (32-bit) floating-point format. (Credit: Courtesy of Intel Corporation)

Figure 14.4: Distance between adjacent representable real numbers in 8.24-bit fixed point versus 32-bit floating point [AS06] over the range [10–4, 1). Floating-point representation accuracy varies with magnitude. ©2006 ACM, Inc. Included here by permission.

Both 32-bit and 64-bit floating-point numbers (sometimes called single-and double-precision) are common across all application domains. The 32-bit float is often preferred in graphics for space and time efficiency. Graphics also employs other floating-point sizes that are less common in other areas, such as 16-bit “half” precision and some very special-purpose sizes like 10-bit floating point (a.k.a. 7e3). Ten bits may seem like a strange size given that most architectures prefer power-of-two sizes for data types. In the context of a 3-vector storing XYZ or RGB values, three 10-bit values fit within a 32-bit word (the remaining two bits are then unused). Shared-exponent formats efficiently combine separate mantissas for each vector element with a single exponent [War94]. These are particularly useful for images, in which values may span a large range.

14.3.3. Buffers

The term of art, “buffer,” usually refers to a 2D rectangular array of “pixel” values in computer graphics; for example, an image ready for display or a map of the distance from the camera to the object seen at each pixel. Beware that in general computer science, a “buffer” is often a queue (and that sometimes a “2D vector” refers to a 2D array, not a geometric vector!); to avoid confusion, we never use the general computer science terminology in this book.

The color buffer holds the image shown on-screen in a graphics system. A reasonable representation might be a 2D array of pixel values, each of which stores three fields: red, green, and blue. Set aside the interpretation of those fields for the moment and consider the implementation details of this representation.

The fields should be small, that is, they should contain few bits. If the color buffer is too large then it might not fit in memory, so it is desirable to make each field as compact as possible without affecting the perceived quality of the final image. Furthermore, ordered access to the color buffer will be substantially faster if the working set fits into the processor’s memory cache. The smaller the fields, the more pixels that can fit in cache.

The size of each pixel in bits should be an integer multiple or fraction of the word size of the machine. If each pixel fits into a single word, then the memory system can make aligned read and write operations. Those are usually twice as fast as unaligned memory accesses, which must make two adjacent aligned accesses and synthesize the unaligned result. Aligned memory accesses are also required for hardware vector operations in which a set of adjacent memory locations are read and then processed in parallel. This might give another factor of 32 in performance on a vector architecture that has 32 lanes. If a pixel is larger than a word by an integer multiple, then multiple memory accesses are required to read it; however, vectorization and alignment are still preserved. If a pixel is smaller than a word by an integer multiple, then multiple pixels may be read with each aligned access, giving a kind of super-vectorization.

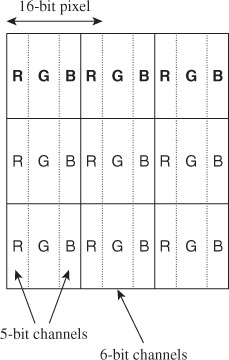

One common buffer format is shown in Figure 14.5. This figure shows a 3×3 buffer in the GL_R5G6B5 format. This is a normalized-fixed point format for 16-bit pixels. On a 64-bit computer, four of these pixels can be read or written with a single scalar instruction.

Figure 14.5: The GL_R5G6B5 buffer format packs three normalized fixed-point values representing red, green, blue, and coverage values, each on [0, 1], into every 16-bit pixel. The red and blue channels each receive five bits. Because 16 is not evenly divisible by three, the “extra” bit is (mostly arbitrarily) assigned to the green channel.

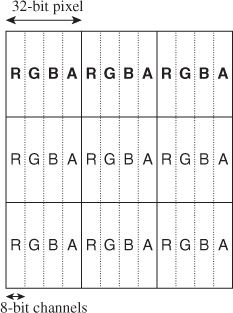

Five bits per channel is not much considering that the human eye can distinguish hundreds of shades of gray. Eight bits per channel enable 256 monochrome shades. But three 8-bit channels consume 24 bits, and most memory systems are built on power-of-two word sizes. One solution is to round up to a 32-bit pixel and simply leave the last eight bits of each pixel unused. This is a common practice beyond graphics—compilers often align the fields of data structures with such unused bits to enable efficient aligned memory access. However, it is also common in graphics to store some other value in the available space. For example, the common GL_RGBA8 format stores three 8-bit normalized fixed-point color channels and an additional 8-bit normalized fixed-point value called α (or “alpha,” represented by an “A”) in the remaining space (see Figure 14.6). This value might represent coverage, where α = 0 is a pixel that the viewer should be able to see through and α = 1 is a completely opaque pixel.

Figure 14.6: The GL_RGBA8 buffer format packs three 8-bit normalized fixed-point values representing red, green, blue, and coverage values, each on [0, 1], into every 32-bit pixel. This format allows efficient, word-aligned access to an entire pixel for a memory system with 32-bit words. A 64-bit system might fetch two pixels at once and mask off the unneeded bits—although if processing multiple pixels of an image in parallel, both pixels likely need to be read anyway.

Obviously, on most displays one cannot see through the display itself when a color buffer pixel has α = 0; however, the color buffer may not be intended for direct display. Perhaps we are rendering an image that will itself be composited into another image. When writing this book, we prepared horizontal and vertical grid lines of Figure 14.5 as an image in a drawing program and left the pixels that appear “white” on the page as “transparent.” The drawing program stored those values with α = 0. We then pasted the grid over the text labels “R,” “G,” etc. Because the color buffer from the grid image indicated that the interior of the grid cells had no coverage, the text labels showed through, rather than being covered with white squares. We return more extensively to coverage and transmission in Section 14.10.2.

The compositing example is one of many cases where a buffer is intended as input for an algorithm rather than for direct display to a human as an image, and α is only one of many common quantities found in buffers that has no direct visible representation. For example, it is common to store “depth” in a buffer that corresponds 1:1 to the color buffer. A depth buffer stores some value that maps monotonically to distance from the center of projection to the surface seen at a pixel (we motivate and show how to implement and use a depth buffer in Chapter 15, and evaluate variations on the method and alternatives extensively in Chapter 36 and Section 36.3 in particular).

Another example is a stencil buffer, which stores arbitrary bit codes that are frequently used to mask out parts of an image during processing in the way that a physical stencil (see Figure 14.7) does during painting.

Figure 14.7: A real “stencil” is a piece of paper with a shape cut out of it. The stencil is placed against a surface and then painted over. When the stencil is removed, the surface is only painted where the holes were. A computer graphics stencil is a buffer of data that provides similar functionality.

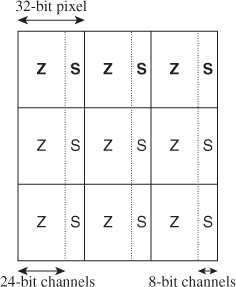

Stencil buffers typically use very few bits, so it is common to pack them into some other buffer. For example, Figure 14.8 shows a 3×3 combined depth-and-stencil buffer in the GL_DEPTH24STENCIL8 format.

Figure 14.8: The GL_DEPTH24 STENCIL8 buffer format encodes a 24-bit normalized fixed point “depth” value with eight stencil bits used for arbitrary masking operations.

A framebuffer1 is an array of buffers with the same dimensions. For example, a framebuffer might contain a GL_RGBA8 color buffer and a GL_DEPTH24STENCIL8 depth-and-stencil buffer. The individual buffers act as parallel arrays of fields at each pixel. A program might have multiple framebuffers with many-to-many relationships to the individual buffers.

1. The framebuffer is an abstraction of an older idea called the “frame buffer,” which was a buffer that held the pixels of the frame. The modern parallel-rendering term is “framebuffer” as a nod to history, but note that it is no longer an actual buffer. It stores the other buffers (depth, color, stencil, etc.). Old “frame buffers” stored multiple “planes” or kinds of values at each pixel, but they often stored these values in the pixel, using an array-of-structs model. Parallel processors don’t work as well with an array of structs, so a struct of arrays became preferred for the modern “framebuffer.”

Why create the framebuffer level of abstraction at all? In the previous example, instead of two buffers, one storing four channels and one with two, why not simply store a single six-channel buffer? One reason for framebuffers is the many-to-many relationship. Consider a 3D modeling program that shows two views of the same object with a common camera but different rendering styles. The left view is wireframe with hidden lines removed, which allows the artist to see the tessellation of the meshes involved. The right view has full, realistic shading. These images can be rendered with two framebuffers. The framebuffers share a single depth buffer but have different color buffers.

Another reason for framebuffers is that the semantic model of channels of specific-bit widths might not match the true implementation, even though it was motivated by implementation details. For example, depth buffers are highly amenable to lossless spatial compression because of how they are computed from continuous surfaces and the spatial-coherence characteristics of typically rendered scenes. Thus, a compressed representation of the depth buffer might take significantly less space (and correspondingly take less time to access because doing so consumes less memory bandwidth) than a naive representation. Yet the compressed representation in this case still maintains the full precision required by the semantic buffer format requested through an API. Unsurprisingly given these observations, it is common practice to store depth buffers in compressed form but present them with the semantics of uncompressed buffers [HAM06]. Taking advantage of this compressibility, especially using dedicated circuitry in a hardware renderer, requires storing the depth values separately from the other channels. Thus, the framebuffer/color buffer distinction steers the high-level system toward an efficient low-level implementation while abstracting the details of that implementation.

14.4. Building Blocks of Ray Optics

In the real world, light sources emit photons. These scatter through the world and interact with matter. Some scatter from matter, through an aperture, and then onto a sensor. The aperture may be the iris of a human observer and the sensor that person’s retina. Alternatively, the aperture may be at the lens of a camera and the sensor the film or CCD that captures the image. Photorealistic rendering models these systems, from emitter to sensor. It depends on five other categories of models:

1. Light

2. Light emitters

3. Light transport

4. Matter

5. Sensors and their imaging apertures and optics (e.g., cameras and eyes)

We now explore the concepts of each category and some high-level aspects that can be abstracted to conserve space, time, and implementation complexity. Later in the chapter we return to specific common models within each category. We must defer that until later because the models interact, so it is important to understand all before refining any.

Although the first few sections of this chapter have covered a great many details, there is a high-level message as well, one that we summarize in a principle we apply throughout the remainder of the chapter:

![]() The High-Level Design Principle

The High-Level Design Principle

Start from the broadest possible view. Elements of a graphics system don’t separate as cleanly as we might like; you can’t design the ideal representation for an emitter without considering its impact on light transport. Investing time at the high level lets us avoid the drawbacks of committing, even if it defers gratification.

14.4.1. Light

14.4.1.1. The Visible Spectrum

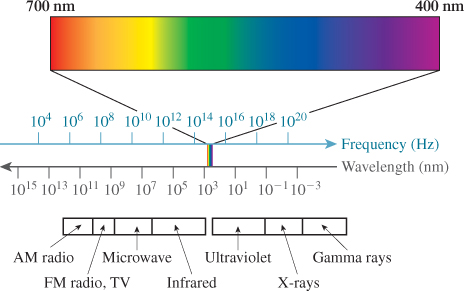

The energy of real light is transported by photons. Each photon is a quantized amount of energy, so a powerful beam of light contains more photons than a weak beam with the same spectrum, not more powerful photons. The exact amount of energy per photon determines the frequency of the corresponding electromagnetic wave; we perceive it as color. Low-frequency photons appear red to us and high-frequency ones appear blue, with the entire rainbow spectrum in between (see Figure 14.9). “Low” and “high” here are used relative to the visible spectrum. There are photons whose frequencies are outside the visible spectrum, but those can’t directly affect rendering, so they are almost always ignored.

Figure 14.9: The visible spectrum is part of the full electromagnetic spectrum. The color of light that we perceive from an electromagnetic wave is determined by its frequency. The relationship between frequency and wavelength is determined by the medium through which the wave is propagating. (Courtesy of Leonard McMillan)

The human visual system perceives light containing a mixture of photons of different frequencies as a color somewhere between those created by the individual photons. For example, a mixture of “red” and “green” photons appears yellow, and is mostly indistinguishable from pure “yellow” photons. This aliasing (i.e., the substitutability of one item for another) is fortunate. It allows displays to create the appearance of many colors using only three relatively narrow frequency bands. Digital cameras also rely on this principle—because the image will be displayed using three frequencies, they only need to measure three.2 Most significantly for our purposes, almost all 3D rendering treats photons as belonging to three distinct frequencies (or bands of frequencies), corresponding to red, green, and blue. This includes film and games; some niche predictive rendering does simulate more spectral samples. We’ll informally refer to rendering with three “frequencies,” when what we really mean is “rendering with three frequency bands,” Using only three frequencies in simulation minimizes both the space and time cost of rendering algorithms. It creates two limitations. The first is that certain phenomena are impossible to simulate with only three frequencies. For example, the colors of clothing often appear different under fluorescent light and sunlight, even though these light sources may themselves appear fairly similar. This is partly because fluorescent bulbs produce white light by mixing a set of narrow frequency bands, while photons from the sun span the entire visible spectrum. The second limitation of using only three frequencies is that renderers, cameras, and displays rarely use the same three frequencies. Each system is able to create the perception of a slightly different space of colors, called a gamut. Some colors may simply be outside the gamut of a particular device and lost during capture or display. This also means that the input and output image data for a renderer must be adjusted based on the color profile of the device. Today most devices automatically convert to and from a standard color profile, called sRGB, so color shifts are minimized on such devices but gamut remains a problem.

2. This is not strictly true; Chapter 28 explains why.

![]() The Noncommutativity Principle

The Noncommutativity Principle

The order of operations often matters in graphics. Swapping the order of operations can introduce both efficiencies in computations and errors in results. You should be sure that you know when you’re doing so.

14.4.1.2. Propagation

The speed of propagation of a photon is determined by a material. In a vacuum, it is about c = 3 × 108 m/s, which is therefore called the speed of light. The index of refraction of a material is the ratio of the speed of light in a vacuum to the rate s of propagation in that material:

For everyday materials, s < c, so η ≥ 1 (e.g., household glass has η ≈ 1.5). The exact propagation speed and index of refraction depend on the wavelength of the photon, but the variation is small within the visible spectrum, so it is common to use a single constant for all wavelengths. The primary limitation of this approximation is that the angle of refraction at the interface to a transmissive material is constant for all wavelengths, when it should in fact vary slightly. Effects like rainbows and the spectrum seen from a prism cannot be rendered under this approximation—but when simulating only three wavelengths, rainbows would have only three colors anyway.

Beware that it is common in graphics to refer to the wavelength λ of a photon, which is related to temporal frequency3 f by

3. Waves have a temporal frequency measured in 1/s (i.e., Hz) and a spatial frequency measured in 1/m. The spatial frequency of a photon is necessarily 1/λ and is rarely used in graphics because it varies with the speed of propagation.

Because the speed of propagation changes when a stream of photons enters a different medium, the wavelength also changes. Yet in graphics we assume that each of our spectral samples is fixed independent of the speed of propagation, so frequency is really what is meant in most cases.

Photons propagate along rays within a volume that has a uniform index of refraction, even if the material in that volume is chemically or structurally inhomogeneous. Photons are also selectively absorbed, which is why the world looks darker when seen through a thick pane of glass. At the boundary between volumes with different indices of refraction, light scatters by reflecting and refracting in complex ways determined by the microscopic geometry and chemistry of the material. Chapter 26 describes the physics and measurement of light in detail, and Chapter 27 discusses scattering.

14.4.1.3. Units

Photons transport energy, which is measured in joules. They move immensely fast compared to a human timescale, so renderers simulate the steady state observed under continuous streams of photons. The power of a stream of photons is the rate of energy delivery per unit time, measured in watts. You are familiar with appliance labels that measure the consumption in watts and kilowatts. Common household lighting solutions today convert 4% to 10% of the power they consume into visible light, so a typical “100 W” incandescent lightbulb emits at best 10 W of visible light, with 4 W being a more typical value.

In addition to measuring power in watts, there are two other measurements of light that appear frequently in rendering. The first is the power per unit area entering or leaving a surface, in units of W/m2. This is called irradiance or radiosity and is especially useful for measuring the light transported between matte surfaces like painted walls. The second is the power per unit area per unit solid angle, measured4 in W/(m2 sr), which is called radiance. It is conserved along a ray in a homogeneous medium. It is the quantity transported between two points on different surfaces, and from a point on a surface to a sample location on the image plane.

4. The unit “sr” is “steradians,” a measure of the size of a region on the unit sphere, described in more detail in Section 14.11.1.

14.4.1.4. Implementation

It is common practice to represent all of these quantities using a generic 3-vector class (e.g., as done in the GLSL and HLSL APIs), although in general-purpose languages it is frequently considered better practice to at least name the fields based on their frequency, as shown in Listing 14.1.

Listing 14.1: A general class for recording quantities sampled at three visible frequencies.

1 class Color3 {

2 public:

3 /** Magnitude near 650 THz ("red"), either at a single

4 frequency or representing a broad range centered at

5 650 THz, depending on the usage context. 650 THz

6 photons have a wavelength of about 450 nm in air. */

7 float r;

8

9 /** Near 550 THz ("green"); about 500 nm in air. */

10 float g;

11

12 /** Near 450 THz ("blue"); about 650 nm in air. */

13 float b;

14

15 Color3() : r(0), g(0), b(0) {}

16 Color3(float r, float g, float b) : r(r), g(g), b(b);

17 Color3 operator*(float s) const {

18 return Color3(s * r, s * g, s * b);

19 }

20 ...

21 };

One could use the type system to help track units by creating distinct classes for power, radiance, etc. However, it is often convenient to reduce the complexity of the types in a program by simply aliasing these to the common “color”5 class, as shown, for example, in Listing 14.2.

5. We discuss why color is not a quantifiable phenomenon in Chapter 28; here we use the term in a nontechnical fashion that is casual jargon in the field.

Listing 14.2: Aliases of Color3 with unit semantics.

1 typedef Color3 Power3;

2 typedef Color3 Radiosity3;

3 typedef Color3 Radiance3;

4 typedef Color3 Biradiance3;

Because bandwidth and total storage space are often limited resources, it is common to employ the fewest bits practical for your needs for each frequency-varying quantity. One implementation strategy is to parameterize the class, as shown in Listing 14.3.

Listing 14.3: A templated Color class and instantiations.

1 template<class T>

2 class Color3 {

3 public:

4 T r, g, b;

5

6 Color3() : r(0), g(0), b(0) {}

7 ...

8 };

9

10 /** Matches GL_RGB8 format */

11 typedef Color3<unint8> Color3un8;

12

13 /** Matches GL_RGB32F format */

14 typedef Color3<float> Color3f32;

15

16 /** Matches GL_RGB16I format */

17 typedef Color3<unsigned short> Color3ui16;

14.4.2. Emitters

Emitters are fairly straightforward to model accurately. They create and cast photons into the scene. The photons have locations, propagation directions, and frequencies (i.e., “colors”), and are emitted at some rate. Given probability distributions for those parameters, we can generate many representative photons and trace them through the scene. We say “representative” because real images are formed by trillions of photons, yet graphics applications can typically estimate the image very well from only a few million photons, so each graphics photon represents many real ones. Today’s computers and rendering algorithms can execute a simulation in this model for rendering images in a few minutes. The emission itself isn’t particularly expensive. Instead, the later steps of the tracing consume most of the processing time because each representative photon must be handled individually, and the interaction of millions of photons with millions or billions of polygons can be complicated.

To render even faster, we can simplify the emission model so that an aggregate of photons along a light ray can be considered by the later light transport steps. This is a common approximation for real-time rendering. The simplified models tend to fix the origin for all photons from an emitter at a single point. Doing so allows algorithms to amortize the cost of processing light rays from an emitter over the large number of light rays that share a single origin. As we said earlier, it is common practice to consider a small number of frequencies, to simplify the spectral representation, and to treat photons in the aggregate by measuring the average rate of energy emitted at each of those frequencies. Three frequencies loosely corresponding to “red,” “green,” and “blue” are almost always chosen to represent the visible spectrum, where each represents a weighted sum of the spectral values over an interval of the true spectrum, but is treated during simulation as a point sample, say, at the center of the interval. For an example of a more refined model, Pharr and Humphreys [PH10] describe a renderer with a nice abstraction of spectral curves.

14.4.3. Light Transport

In computer graphics, light transport is almost always modeled by (steady-state) ray optics on uncollimated, unpolarized light. This substantially simplifies the simulation by neglecting phase and polarization. In this model, photons propagate along straight lines through empty space. They do not interfere with one another, and their energy contribution simply sums. Under this simplification and with a discrete set of frequency samples, a geometric ray and a radiance vector (indicating radiance in the red, green, and blue portions of the spectrum) are sufficient to represent a stream of photons.

We’ll see in Chapter 27 that ignoring photon interference and polarization to simplify the representation of light energy is what forces us to complicate our representation of matter. For example, glossy and perfect reflection arises from the interference of nearly parallel streams of photons. This interference does not arise under ray optics, so we must introduce specific terms (such as Fresnel terms) to materials to model the same phenomena. One could use a richer model of light and a simpler model of a surface to produce the same image. However, a simple model of matter is not necessarily one that is easy to describe in terms of macroscopic phenomena, either for specification or for digital representation. Representing and modeling a brick as a rough, reddish slab of dried clay is both intuitive and compact. Representing it as a collection of 1026 or so molecules of varying composition is unwieldy at best.

14.4.4. Matter

There are many models of matter in graphics. The simplest is that matter is geometry that scatters light, and further, that this light scattering takes place only at the surfaces of opaque objects, ignoring the very small interactions of photons with air over short distances and any subsurface interaction effects. The surface scattering model builds on these assumptions by modeling only the surfaces of opaque objects. This reduces the complexity of a scene substantially. For example, a computer graphics car might have no engine, and a computer graphics house might be only a façade. Only the parts of objects that can interact with light need to be modeled. Of course, this approach poorly represents matter with deep interaction, such as skin and fog, and is only sufficient for rendering. To animate objects, for instance, we need to know properties such as joint locations and masses.

A consequence of computer graphics relying on complex models of matter is that different models are often employed for surface detail at different scales. Supporting different models and ways of combining them at intermediate scales complicates a graphics system. However, it also yields great efficiencies and matches our everyday perception. For example, from 100 meters, you might observe that a fir tree is similar to a green cone. From ten meters, individual branches are visible. From one meter, you can see separate needles. At one centimeter, small bumps and details on the needles and branches emerge. With a light microscope you can see individual cells, and with an electron microscope you can see molecule-scale detail. For this chapter, we consider details to be large-scale if their impact on the silhouette can be observed from about one meter, medium scale if they are smaller than that but observable by the naked eye at some scale, and small-scale if they are not observable by the naked eye.

14.4.5. Cameras

Lenses and sensors (the components of eyes and cameras) are complicated. This is true whether they have biological or mechanical origins. From a photographer’s perspective, the ideal lens would focus all light from a point that is “in focus” onto a single point on the imager (the sensing surface of the sensor) regardless of the frequency of light or the location on the imager. Real lenses have imperfect geometry that distorts the image slightly over the image plane and causes darkening near the edges, an effect known as vignetting (see Figure 14.10). They also necessarily focus different frequencies differently, creating an artifact called chromatic aberration (see Figure 14.11; see also Chapter 26). Camera manufacturers compensate for these limitations by combining multiple lenses. Unfortunately, these compound lenses absorb more light, create internal reflections, and can diffuse the focus. We perceive the reflections as lens flare—a series of iris shapes in line with the light source overlaid on the image, as seen in Figure 14.12. We perceive slightly diffused focus as bloom, where very bright objects appear defocused. Real film has a complex nonlinear response to light, and has grain that arises from the manufacturing process. Digital imagers are sensitive to thermal noise and have small divisions between pixels.

Figure 14.10: The darkening of this photograph near the edges is called vignetting. (Credit: Swanson Tennis Center at Gustavus Adolphus College by Joe Lencioni, shiftingpixel.com)

Figure 14.11: The rainbowlike edges on the objects in this photograph are caused by chromatic aberration in the camera’s lens. Different frequencies of light refract at different angles, so the resultant colors shift in the image plane. High-quality cameras use multiple lenses to compensate for this effect. (Credit: Corepics VOF/Shutterstock)

Figure 14.12: The streaks from the sun and apparently translucent-colored polygons and circles along a line through the sun in this photograph are a lens flare created by the intense light reflecting within the multiple lenses of the camera objective. Light from all parts of the scene makes these reflections, but most are so dim compared to the sun that their impact on the image is immeasurable. (Credit: Spiber/Shutterstock)

Since the simple model of a lens as an ideal focusing device and a sensor as an ideal photon measurement device yields higher image quality than a realistic camera model, there is little reason to use a more realistic model. Because lens flare, film grain, bloom, and vignetting are recognized as elements of realism from films, those are sometimes modeled using a post-processing pass. There is no need to model the true camera optics to produce these effects, since they are being added for aesthetics and not realism. Note that this arises purely from camera culture—except for bloom, none of these effects are observed by the naked eye.

14.5. Large-Scale Object Geometry

This section describes common models of object surfaces. Many rendering algorithms interact only with those surfaces. Some interact with the interior of objects, whose boundaries can still be represented by these methods. Section 14.7 briefly describes some representations for objects with substantial internal detail.

Some objects are modeled as thin, two-sided surfaces. A butterfly’s wing and a thin sheet of cloth might be modeled this way. These models have zero volume—there is no “inside” to the model. More commonly, objects have volume, but the details inside are irrelevant. For an opaque object with volume, the surface typically represents the side seen from the outside of the object. There is no need to model the inner surface or interior details, because they are never seen (see Chapter 36). To eliminate the inner side of the skin of an object, polygons have an orientation. The front face of a polygon is the side indicated to face outward and the back face is the side that faces inward. A process called backface culling eliminates the inward-facing side of each polygon early in the rendering process. Of course, this model is revealed as a single-sided, hollow skin should the viewer ever enter the model and attempt to observe the inside, as you saw in Chapter 6. This happens occasionally in games due to programming errors. Because there is no detail inside such an object and the back faces of the outer skin are not visible, in this case the entire model seems to disappear from view once the viewpoint passes through its surface.

Translucent objects naturally reveal their interior and back faces, so they require special consideration. They are often modeled either as a translucent, two-sided shell, or as two surfaces: an outside-to-inside interface and an inside-to-outside interface. The latter model is necessary for simulating refraction, which is sensitive to whether light rays are entering or leaving the object.

Surface and object geometry is useful for more than rendering. Intersections of geometry are used for modeling and simulation. For example, we can model an ice-cream cone with a bite taken out as a cone topped by a hemisphere ...with some smaller balls subtracted from the hemisphere. Simulation systems often use collision proxy geometry that is substantially simpler than the geometry that is rendered. A character modeled as a mesh of 1 million polygons might be simulated as a collection of 20 ellipsoids. Detecting the intersection of a small number of ellipsoids is more computationally efficient than detecting the intersection of a large number of polygons, yet the resultant perceived inaccuracy of simulation may be small.

14.5.1. Meshes

14.5.1.1. Indexed Triangle Meshes

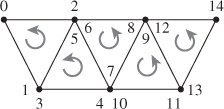

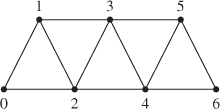

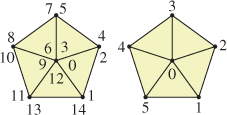

Indexed triangle meshes (see Chapter 8) are a pervasive surface representation in graphics. The minimal representation is an array of vertices and a list of indices expressing connectivity. There are three common base schemes for the index list. These are called triangle list (or sometimes soup), triangle strip, and triangle fan representations. Figures 14.13 through 14.15 describe each in the context of counterclockwise triangle winding and 0-based indexing for a list describing n > 0 triangles.

Figure 14.13: A triangle list, also known as a triangle soup, contains 3n indices. List elements 3t, 3t + 1, and 3t + 2 are the ordered indices of triangle t.

Figure 14.14: A triangle strip contains n + 2 indices. The ordered indices of triangle t are given as follows. For even t, use list elements t, t + 2, t + 1. For odd t, use list elements t, t + 1, t + 2.

Figure 14.15: A triangle soup pentagon on the left, and the more efficient triangle fan model on the right. A triangle fan contains n + 2 indices. List elements 0, t + 1, t + 2 are the ordered indices of triangle t (with indices taken mod n + 2).

14.5.1.2. Alternative Mesh Structures

For each representation there is a corresponding nonindexed representation as a list whose element j is vertex[index[j]] from the indexed representation. Such nonindexed representations are occasionally useful for streaming large meshes that would not fit in core memory through a system. Because they duplicate storage of vertices (which are frequently much larger than indices), these representations are out of favor for moderate-sized models.

One can also construct quadrilateral or higher-order polygon meshes following comparable schemes. However, triangles have several advantages because they are the 2D simplex: Triangles are always planar, define an unambiguous barycentric interpolation scheme, never self-intersect, and are irreducible to simpler polygons. These properties also make them slightly easier to rasterize, ray trace, and sample than higher-order polygons. Of course, for data such as city architecture that is naturally modeled by quadrilaterals, a triangle mesh representation increases storage space without increasing model resolution.

14.5.1.3. Adjacency Information

Some algorithms require efficient computation of adjacency information between faces, edges, and vertices on a mesh. For example, consider the problem of rendering the contour of a convex mesh for a line-art program. Each edge is drawn only if it is on the contour. An edge lies between two faces. It is on the contour if exactly one face is oriented toward the viewer. We can make this determination more quickly if we augment the minimal indexed mesh with additional information describing faces, edges, and adjacency. Under that representation, we might directly iterate over edges (instead of triangles) and expect constant-time access to the two faces adjacent to each edge.

Adjacency information depends only on topology, so it may be precomputed for an animated mesh so long as the mesh does not “tear apart” under animation. Listing 14.4 gives a possible representation for a mesh with full adjacency information. In the listing, all the integers in the Vertex, Edge, and Face classes are indices into the arrays at the bottom of the class definition. Because faces are oriented, the order of elements matters in their vertices index arrays. This is the modern array-based equivalent of a classic mesh data structure called the winged edge polyhedral representation [Bau72] (see Chapter 8).

There are several ways to encode the edge information within these data structures. One is to consider the directed half-edges that each exist in one face. A true edge that is not on the boundary of the edge would then be a pair of half-edges. The half-edge representation offers the advantage of retaining orientation information when it is reached by following an index from a face. It has the disadvantage of storing redundant information for all the edges with two adjacent faces. A common trick obfuscates the code a bit by eliminating this storage overhead. The trick is to store only one half-edge for each mesh edge, and to index from a face using two’s complement when the half-edge is oriented opposite the direction that it should appear in that face. The two’s complement of a non-negative index e (written ~e in C-like languages) is guaranteed to be a negative number, so indices of oppositely directed edges are easy to identify. The two’s complement operator is efficient on most architectures, so it incurs little overhead. Each edge then uses the same trick to encode the indices of the adjacent faces, indicating whether that half-edge or its oppositely directed mate actually appears in the face.

Listing 14.4: Sample mesh representation with full adjacency information.

1 struct Mesh {

2 enum NO_FACE = MAX_INT;

3

4 struct Vertex {

5 Point3 location;

6 std::vector<int> edges;

7 std::vector<int> faces;

8 };

9

10 struct Edge {

11 int vertices[2];

12 /* May be NO_FACE if this edge is on a boundary. */

13 int faces[2];

14 };

15

16 struct Face {

17 int vertices[3];

18 int edges[3];

19 };

20

21 std::vector<int> index;

22 std::vector<Vertex> vertex;

23 std::vector<Edge> edge;

24 std::vector<Face> face;

25 };

14.5.1.4. Per-Vertex Properties

It is common to label the vertices of a mesh with additional information. Common rendering properties include shading normals, texture coordinates, and tangent-space bases.

A polygonal approximation of a curved surface appears faceted. The perception of faceting can be greatly reduced by shading the surface as if it were curved, that is, by shading the points indicated by the surface geometry, but altering the orientation of their tangent plane during illumination computations, as you saw in Chapter 6. It is common to model the orientation by specifying the desired surface normal at each vertex and interpolating between those normals within the surface of each polygon.

Texture coordinates are the additional points or vectors specified at each vertex to create a mapping from the surface of the model to a texture space that defines material properties, such as reflectance spectrum (“color”). Mapping from the surface to a 2D square using 2D points is perhaps the most common, but mappings to 1D spaces, 3D volumetric spaces, and the 2D surface of a 3D sphere are also common; Chapter 20 discusses this in detail. The last is sometimes called cube mapping, sphere mapping, or environment mapping depending on the specific parameterization and application.

A tangent space is just a plane that is tangent to a surface at a point. A mesh’s tangent space is undefined at edges and vertices. However, when the mesh has vertex normals there is an implied tangent space (the plane perpendicular to the vertex normal) at each vertex. The interpolated normals across faces (and edges) similarly imply tangent spaces at every point on the mesh. Many rendering algorithms depend on the orientation of a surface within its tangent plane. For example, a hair-rendering algorithm that models the hair as a solid “helmet” needs to know the orientation of the hair (i.e., which way it was combed) at every point on the surface. A tangent-space basis is one way to specify the orientation; it is simply a pair of linearly independent (and usually orthogonal and unit-length) vectors in the tangent plane. These can be interpolated across the surface of the mesh in the same way that shading normals are; of course, they may cease to be orthogonal and change length as they are interpolated, so it may be necessary to renormalize or even change their direction after interpolation to achieve the goals of a particular algorithm. Finding such a pair of vectors at every point of a closed surface is not always possible, as described in Chapter 25.

14.5.1.5. Cached and Precomputed Information on the Mesh

The preceding section described properties that extend the mesh representation with additional per-vertex information. It is also common to precompute properties of the mesh and store them at vertices to speed later computation, such as curvature information (and the adjacency information that we have already seen). One can even evaluate arbitrary, expensive functions and then approximate their value at points within the mesh (or even within the volume contained by the mesh) by barycentric interpolation.

Gouraud shading is an example. We compute and store direct illumination at vertices during the rendering of a frame, and interpolate these stored values across the interior of each face. This was once common practice for all rasterization renderers. Today it is primarily used only on renderers for which the triangles are small compared to pixels so that there is no loss of shading resolution from the interpolation. The micropolygon renderers popular in the film industry use this method, but they ensure that vertices are sufficiently dense in screen space by subdividing large polygons during rendering until each is smaller than a pixel [CCC87]. Per-pixel direct illumination is now considered sufficiently inexpensive because processor performance has grown faster than screen resolutions. However, it has not grown faster than scene complexity, so some algorithms still compute global illumination terms such as ambient occlusion (an estimated reduction in brightness due to nearby geometry) or diffuse interreflection at vertices [Bun05].

The vertices of a mesh form a natural data structure for recording values that describe a piecewise linear approximation of an arbitrary function as described in Chapter 9. The drawback of this approach is that other constraints on the modeling process may lead to a tessellation that is not ideal for representing the arbitrary function. For example, many meshes are created by artists with the goal of using the fewest triangles possible to reasonably approximate the silhouette of an object. Large, flat areas of the mesh will therefore contain few triangles. If we were to compute global illumination only at the vertices, we would find that the illumination computation became extremely blurry in these areas simply because the model had too few vertices.

![]() There are two common solutions to this problem, other than simply increasing the tessellation everywhere. The first is to subdivide triangles of the mesh during computation of the function that is to be stored at vertices [Hec90], until the approximation error across each triangle is small enough. The second is to define an invertible and approximately conformal mapping from the surface of the mesh into texture space, and encode the function values in a texture map. The latter is more efficient for functions with high variance where it is hard to predict the locations where changes occur a priori. Today this approach is more popular than the per-vertex computation. For example, many games rely on light maps, which store precomputed global illumination for static scenes in textures. Combined with real-time direct illumination, these provide a reasonable approximation of true global illumination if few objects move within the scene. Traditional light maps encoded only the magnitude, but not direction, of incident light at a surface. This has since been extended to encode directionality in various bases [PRT, AtiHL2]. Texture-space diffusion, as seen in d’Eon et al.’s subsurface scattering work [dLE07], is an example of dynamic data encoded in texture space.

There are two common solutions to this problem, other than simply increasing the tessellation everywhere. The first is to subdivide triangles of the mesh during computation of the function that is to be stored at vertices [Hec90], until the approximation error across each triangle is small enough. The second is to define an invertible and approximately conformal mapping from the surface of the mesh into texture space, and encode the function values in a texture map. The latter is more efficient for functions with high variance where it is hard to predict the locations where changes occur a priori. Today this approach is more popular than the per-vertex computation. For example, many games rely on light maps, which store precomputed global illumination for static scenes in textures. Combined with real-time direct illumination, these provide a reasonable approximation of true global illumination if few objects move within the scene. Traditional light maps encoded only the magnitude, but not direction, of incident light at a surface. This has since been extended to encode directionality in various bases [PRT, AtiHL2]. Texture-space diffusion, as seen in d’Eon et al.’s subsurface scattering work [dLE07], is an example of dynamic data encoded in texture space.

14.5.2. Implicit Surfaces

Some geometric primitives are conveniently described by simple equations and correspond closely to shapes we encounter in the world around us. In 2D, these include lines, line segments, arcs of ellipses (including full circles), rectangles, trigonometric expressions such as sine waves, and low-order polynomial curves. In 3D, these include spheres, cylinders, boxes, planes, trigonometric expressions, quadrics, and other low-order polynomial surfaces.

Simple primitives can be represented via implicit equations or explicit parametric equations, as described in Chapter 7. We’ll recall some of those ideas briefly here.

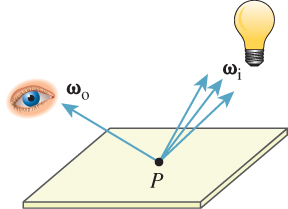

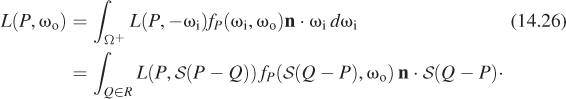

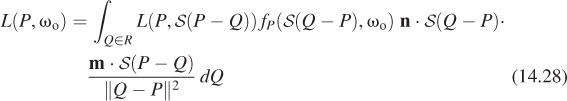

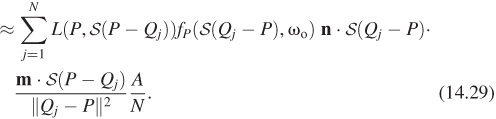

An implicit equation is a test function f : R3 ![]() R that can applied to a point. The function classifies points in space: For any point P, either f(P) > 0, f(P) < 0, or f(P) = 0. Those with f(P) = 0 are said to constitute the implicit surface defined by f; by convention, those with f(P) < 0 are said to be inside the surface, and the remainder are outside. Such a surface is an instance of a level set (for level 0) and an isocontour (for value 0) of the function.

R that can applied to a point. The function classifies points in space: For any point P, either f(P) > 0, f(P) < 0, or f(P) = 0. Those with f(P) = 0 are said to constitute the implicit surface defined by f; by convention, those with f(P) < 0 are said to be inside the surface, and the remainder are outside. Such a surface is an instance of a level set (for level 0) and an isocontour (for value 0) of the function.

As an example, consider a surface defined by the plane through point Q with normal n. A suitable test function is

For every point P in the plane, f(P) = 0. For points on the side containing Q + n, f(P) > 0; for points on the other side, f(P) < 0.

An explicit equation or parametric equation defines a generator function for points in the plane in terms of scalar parameters. We can use such a function to synthesize points on the surface. The explicit form for a plane is

where h and k are two vectors in the plane that are linearly independent. For any particular pair of numbers u and v, the point g(u, v) lies on the plane. Chapter 7 gives both implicit and parametric descriptions for spheres and ellipsoids, and parametric descriptions of several other common shapes like cylinders, cones, and toruses. These, and more general implicit surfaces, are discussed in Chapter 24.

14.5.2.1. Ray-Tracing Implicit Surfaces

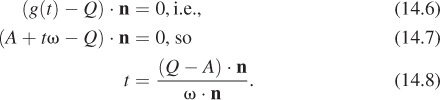

Implicit surface models are useful for ray casting and other intersection-based operations. For ray tracing, we take the parametric form of the ray with origin A and direction ω,

and solve for the point at which it intersects the plane by substituting into the plane’s implicit form and finding the roots of the resultant expression. We want to find a value t for which f(g(t)) = 0. That means

We can follow the same process for any surface whose equation admits an efficient closed-form solution after substituting the ray’s parametric form.

For a sphere of radius r about the point Q, we can use the implicit form f(P) = ||Q – P||2 – r2. Substituting the parametric form for the ray, and setting to zero, we get

This is a quadratic equation in t, at2 + bt + c = 0, where a = ||ω||2, b = (A – Q) · ω, and c = ||(A – Q||2 – r2. It can be solved with the quadratic formula to find all intersections of the ray with the sphere.

(a) Write out the solutions using the quadratic formula, and simplify.

(b) What does it mean if one of the roots of the quadratic equation is at a value t < 0? What about t = 0?

(c) In general, if b2 – 4ac = 0 in a quadratic equation, there’s only a single root. What does this correspond to geometrically in the ray-sphere intersection problem?

More general quadratics can be used to determine intersections with ellipsoids or hyperboloids, while higher-order polynomials arise in determining the intersection of a ray with a torus, for example, and for more general shapes, the equation we must solve can be very complicated. Multiple roots of the equation that results from substituting the parametric line form into the function defining the implicit surface indicate multiple potential intersections. See Chapter 15 for further discussion of ray casting and interpreting its results.

What about implicit surfaces that do not admit efficient closed-form solutions? If the implicit surface function is continuous and maps points inside the object to negative values and points outside the object to positive values, then any root-finding method such as Newton-Raphson [Pre95] will find zero points, that is, it will find the surface. The term “implicit surface” usually refers to this kind of model and intersection algorithm.

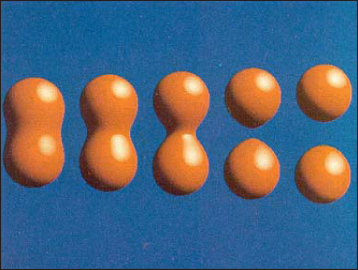

Implicit surfaces that are defined by the sum of some simple basis functions with different origins are favored for modeling organic, “blobby” shapes (see Figure 14.16). This is called blobby modeling and metaball modeling [Bli82a].

Figure 14.16: Blobby models, each defined by the isocontour of a sum of 3D Gaussian density functions [Bli82a]. (Credit: Courtesy of James Blinn © 1982 ACM, Inc. Reprinted by permission.)

14.5.3. Spline Patches and Subdivision Surfaces

We’ve seen that smooth shapes can be modeled by arbitrary expressions defining their surface curves through three dimensions and by the implicit surface defined by a parametric sum of fixed functions. Spline curves and patches and subdivision curves and surfaces are alternative representations that fall between these extremes. A spline is simply a piecewise-polynomial curve, typically represented on each interval as a linear combination of four predefined basis functions, where the coefficients are points. Thus, the curve can be represented by just storing the coefficients, which is very compact. A spline patch is a surface constructed analogously: a linear combination of several basis functions (each a function of two variables), where the coefficients are again points. This fixed mathematical form allows compact storage. The fact that the basis functions are carefully constructed, low-degree polynomials makes computations like ray-path intersection, sampling, and determining tangent and normal vectors efficient and fast. By gluing together multiple patches, we can model arbitrarily complex surfaces. (Indeed, spline patches are at the core of most CAD modeling packages.) There are many kinds of splines, each determined by a choice of the so-called basis polynomials. Graphics commonly uses third-order polynomial patches, which let us model surfaces with continuously varying normal vectors and no sharp corners. More general spline types, such as Nonuniform Rational B-Splines (NURBS), have historically been very popular modeling primitives. Splines may be rendered either by discretizing them to polygons by sampling, or by directly intersecting the spline surface, often using a root-finding method such as Newton-Raphson.

Subdivision surfaces are smooth shapes defined by recursive subdivision (using carefully designed rules) and smoothing of an initial mesh cage (see Figure 14.17). Because many modeling tools and many algorithms operate on meshes, subdivision surfaces are a practical method for adapting those tools to curved surfaces. They are especially convenient for polygon-based rendering because the mesh need only be subdivided down to the screen-space sampling density at each location. They have been favored for implementation in graphics hardware over other smooth surface representations because of this. For example, so-called tessellation, hull, and geometry shaders each map meshes to meshes inside the graphics hardware pipeline using subdivision schemes. As with all curve and surface representations, a major challenge is mixing sharp creases and other boundary conditions with smooth interiors. Representations that admit this efficiently and conveniently are an active area of research. At the time of this writing, that research has advanced sufficiently that the techniques are now being used in real-time rendering [CC98, HDD+94, VPBM01, BS05, LS08, KMDZ09].

Figure 14.17: Top: A video-game character from Team Fortress 2 rendered in real time using Approximate Catmull Clark subdivision surfaces. Bottom: The edges of the subdivision cage (projected onto the limit surface) in black, with special crease edges highlighted in bright green. (Credit: top: © Valve, all rights reserved, bottom: Courtesy of Denis Kovacs; © 2010 ACM, Inc. Reprinted by permission.)

14.5.4. Heightfields

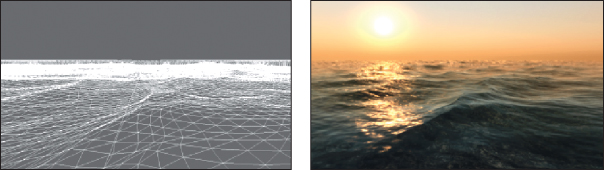

A heightfield is a surface defined by some function of the form z = f(x, y); it necessarily has the property that there is a single “height” z at each (x, y) position. This is a natural representation for large surfaces that are globally roughly planar, but have significant local detail, such as terrain and ocean waves (see Figure 14.18). The single-height property of course means that these models cannot represent overhangs, land bridges, caves, or breaking waves. By the Wise Modeling principle, you should only use heightfields when you’re certain that these things are not important to you. At a smaller scale, heightfields can be wrapped around meshes or other surface representations to represent displacements from the surface. For example, we can model a tile floor as a plane with a heightfield representing the grout lines. Heightfields used in this manner are often called displacement maps or bump maps [Bli78]. “Height” is of course relative to our orientation—it simply denotes distance from the base plane or surface along its normal, so we can use a heightmap to represent the wall of a log cabin simply by rotating our reference frame.

Figure 14.18: Left: The water surface heightfield in CryEngine2. Right: Real-time rendering of the dynamic heightfield [Mit07]. (Credit: Courtesy of Tiago Sousa, ©Crytek)

The height function can be implemented by a continuous representation, such as a sum of cosine waves, or by the interpolation of control points. The latter representation is particularly good for simulation, modeling, and measured data. The control points may be irregularly spaced so as to efficiently discretize the desired shape (e.g., a Triangulated Irregular Network (TIN), or the ROAM algorithm [DWS+97]), or they can be placed regularly to simplify the algorithms that operate on them. Because of their inability to model overhangs, heightfields are often used as a modeling primitive and then converted to generic meshes. Those meshes may be further edited without the heightfield constraint.

14.5.5. Point Sets

Heightfields, splines, implicit surfaces, and other representations based on control points all define ways to interpolate data from a fixed set of points to define a surface. As we increase the point density, the choice of interpolation scheme has less impact on the shape because the interpolation distances shrink. A natural approach to modeling complex arbitrary shapes is therefore to store dense point sets and use the most efficient interpolation scheme available. This is a particularly good approach for measured shapes, where the dense point sets naturally arise from the measurement process.

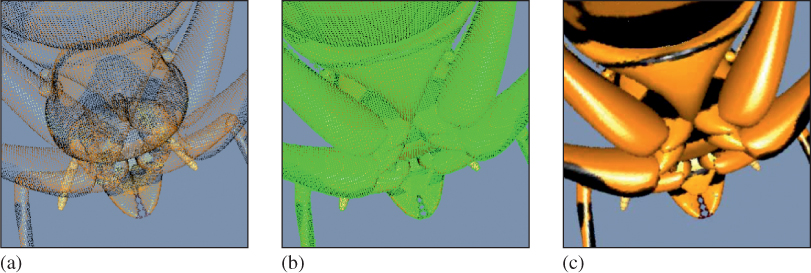

Point-based modeling often stores points at densities so high that the expected viewpoint and resolution will yield about one point per pixel, as shown in Figure 14.19. The interpolation thus need only cover gaps on the order of a pixel. Splatting is an efficient interpolation scheme under these conditions: Each point is rasterized as a small sphere (or disk facing the viewer) so that the space between points is covered but the overall shape conforms tightly to the point set. This is simply a form of convolution, and it is also equivalent to an implicit surface defined by a radial function that rapidly falls to zero (and is therefore trivial to evaluate). One can thus also directly ray-trace a point set, using the associated implicit surface.

Figure 14.19: (a) A point set, with attached surface properties. (b) The gaps between points when rendered at this resolution. (c) The surface defined by splatting interpolation of the original points [PZvBG00]. (Credit: courtesy of Hanspeter Pfister, An Wang Professor of Computer Science, © 2000 ACM, Inc. Reprinted by permission.)

Because they are a natural fit for measured data but present some efficiency challenges for animation, modeling, and storage, point representations are currently more popular in scientific and medical communities than entertainment and engineering ones.

14.6. Distant Objects

Objects that have a small screen-space footprint or that are sufficiently distant that parallax effects are negligible present an opportunity to improve rendering performance. Under perspective projection, most of the viewable frustum is “far” from the viewer, and small-scale detail is necessarily less visible there. By simplifying the representation of distant or small objects, we can improve rendering performance with minimal impact on image quality. In fact, a simplified representation may even improve image quality because excluding small details prevents them from aliasing, especially under animation (see Section 25.4 for a further discussion of this).

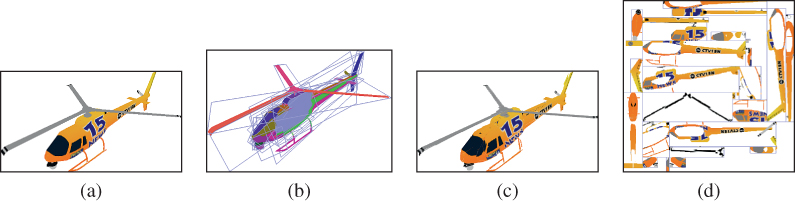

14.6.1. Level of Detail

It is common to create geometric representations of a single object with varying detail and select among them based on the screen-space footprint of the object. This is called a level of detail (LOD) scheme [HG97, Lue01]. Discrete LOD schemes contain distinct models. To conceal the transitions, they may blend rendered images of the lower- and higher-detail models when switching levels, or attempt to morph the geometry. Continuous LOD schemes parameterize the model in such a way that these morphing transitions are continuous and inherent in the structure of the model.

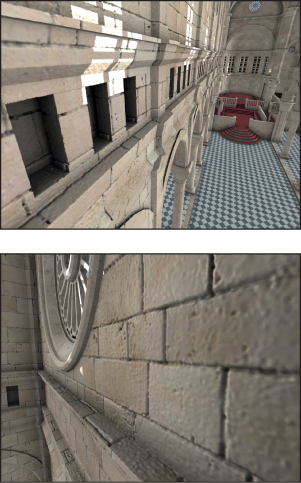

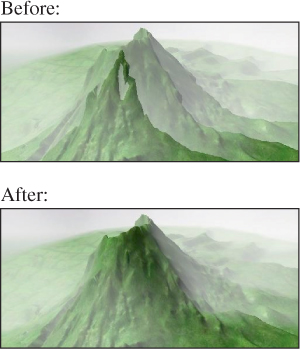

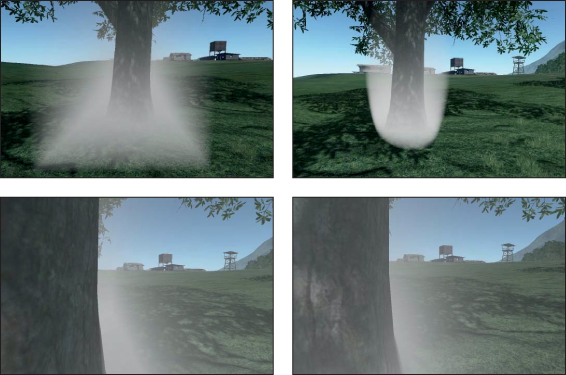

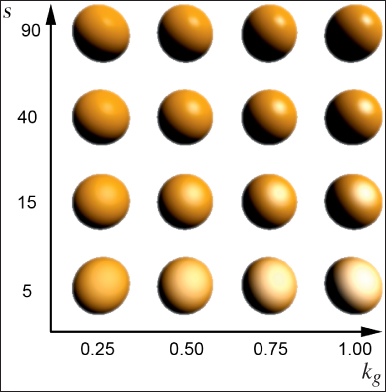

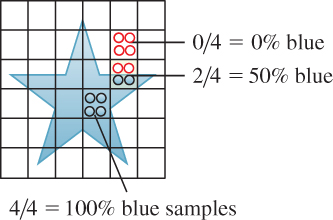

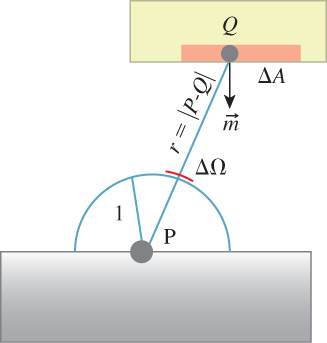

To minimize the loss of perceived detail as actual geometric detail is reduced, structure that is removed from geometry is often approximated in texture maps. For example, the highest-detail variation of a model may contain only geometry, whereas a mid-resolution variation approximates some of the geometry in a normal or displacement map, and the lowest-resolution version alters the shading algorithm to approximate the impact of the implicit subpixel geometry.