We’ve looked at three kinds of analysis that can show whether your design assumptions are valid or wrong in the aggregate. There’s another way to understand your visitors better: by watching them individually. Using either JavaScript or an inline device, you can record every page of a visit, then review what happened.

Replaying an individual visit can be informative, but it can also be a huge distraction. Before you look at a visit, you should have a particular question in mind, such as, “Why did the visitor act this way?” or “How did the visitor navigate the UI?”

Without a question in mind, watching visitors is just voyeurism. With the right question, and the ability to segment and search through visits, capturing entire visits and replaying pages as visitors interacted with them can be invaluable.

Every replay of a visit should start with a question. Some important ones that replay can answer include:

- Are my designs working for real visitors?

This is perhaps the most basic reason for replaying a visit—to verify or disprove design decisions you’ve made. It’s an extension of the usability testing done before a launch.

- Why aren’t conversions as good as they should be?

By watching visitors who abandon a conversion process, you can see what it was about a particular step in the process that created problems.

- Why is this visitor having issues?

If you’re trying to support a visitor through a helpdesk or call center, seeing the same screens that he is seeing makes it much easier to lend a hand, particularly when you can look at the pages that he saw before he picked up the phone and called you.

- Why is this problem happening?

Web applications are complex, and an error deep into a visit may have been caused by something the visitor did in a much earlier step. By replaying an entire visit, you can see the steps that caused the problem.

- What steps are needed to test this part of the application?

By capturing a real visit, you can provide test scripts to the testing or QA department. Once you’ve fixed a problem, the steps that caused it should become part of your regularly scheduled tests to make sure the problem doesn’t creep back in.

- How do I show visitors the problem is not my fault?

Capturing and replaying visits makes it clear what happened, and why, so it’s a great way to bring the blame game to a grinding halt. By replacing anecdotes with accountability, you prove what happened. Some replay solutions are even used as the basis for legal evidence.

We can answer each of these questions, but each requires a different approach.

It’s always important to watch actual visitor behavior, no matter how much in-house usability testing you do. It’s hard to find test users whose behavior actually matches that of your target market, and even if you did, your results wouldn’t be accurate simply because people behave differently when they know they’re being watched.

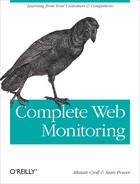

For this kind of replay, you should start with the design in question. This may be the new interface you created or the page you’ve just changed. You’ll probably want to query the WIA data for only visitors who visited the page you’re interested in. While query setup varies from product to product, you’ll likely be using filters like the ones shown in Figure 6-12.

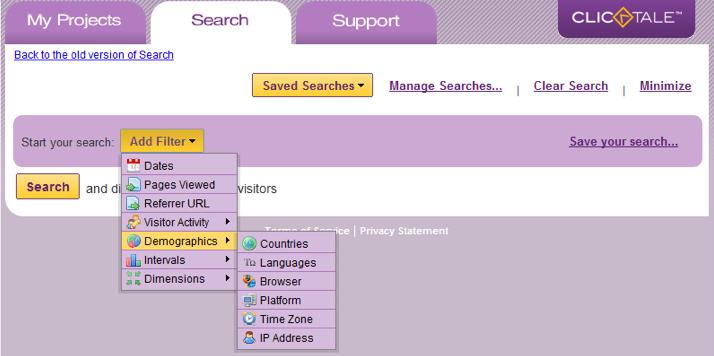

Your query will result in a list of several visits that matched the criteria you provided, such as those shown in Figure 6-13. From this list, you can examine individual visits page by page.

The WIA solution will let you replay individual pages in the order the visitor saw them, as shown in Figure 6-14.

Depending on the solution, you may see additional information as part of the replayed visit:

- The WIA tool may show mouse movements as a part of the replay process.

- The visualization may include highlighting of UI elements with which the visitor interacted, such as buttons.

- The tool may allow you to switch between the rendered view of the page and the underlying page data (HTML, stylesheets, and so on).

- You may be able to interact with embedded elements, such as videos and JavaScript-based applications, or see how the visitor interacted with them.

- You may have access to performance information and errors that occurred as the page was loading.

These capabilities vary widely across WIA products, so you should decide whether you need them and dig into vendor capabilities when choosing the right WIA product.

When you launch a website, you expect visitors to follow certain paths through the site and ultimately to achieve various goals. If they aren’t accomplishing those goals in sufficient numbers, you need to find out why.

Lots of things cause poor conversion. Bad offers or lousy messages are the big culprits, and you can address these kinds of issues with web analytics and split testing. Sometimes, however, application design is the problem.

Your conversion funnels will show you where in the conversion process visitors are dropping out. Then it’s a matter of finding visitors who started toward a goal and abandoned the process.

You should already be monitoring key steps in the conversion process to ensure visitors are seeing your content, clicking in the right places, and completing forms, as discussed earlier. To really understand what’s going on, though, you should view visits that got stuck.

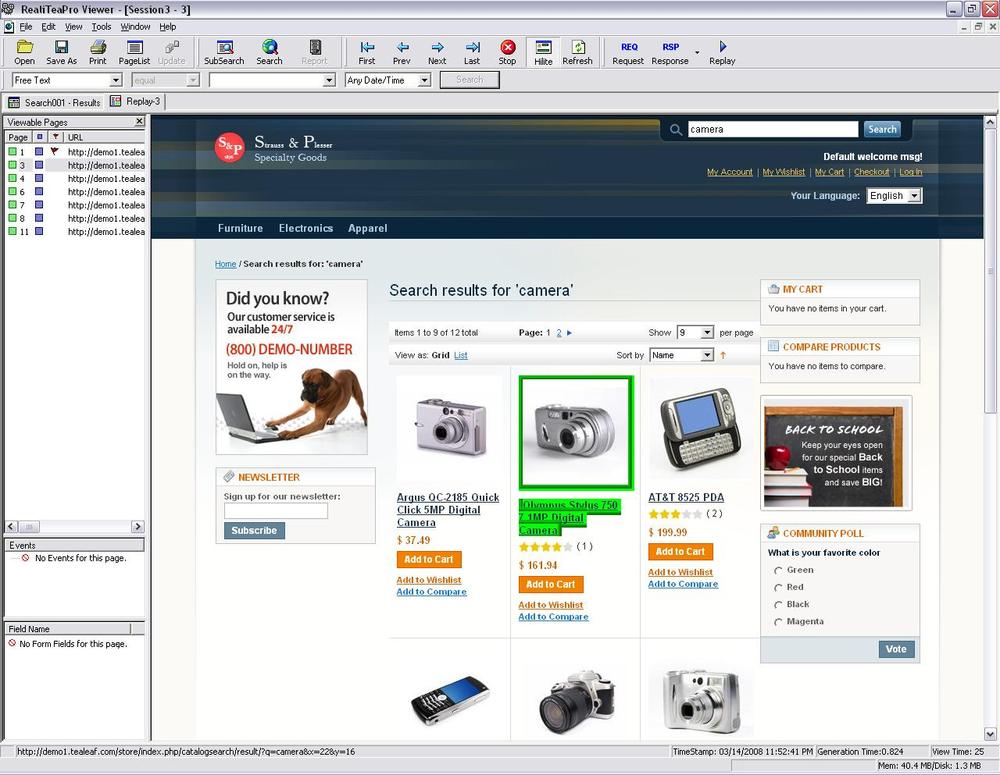

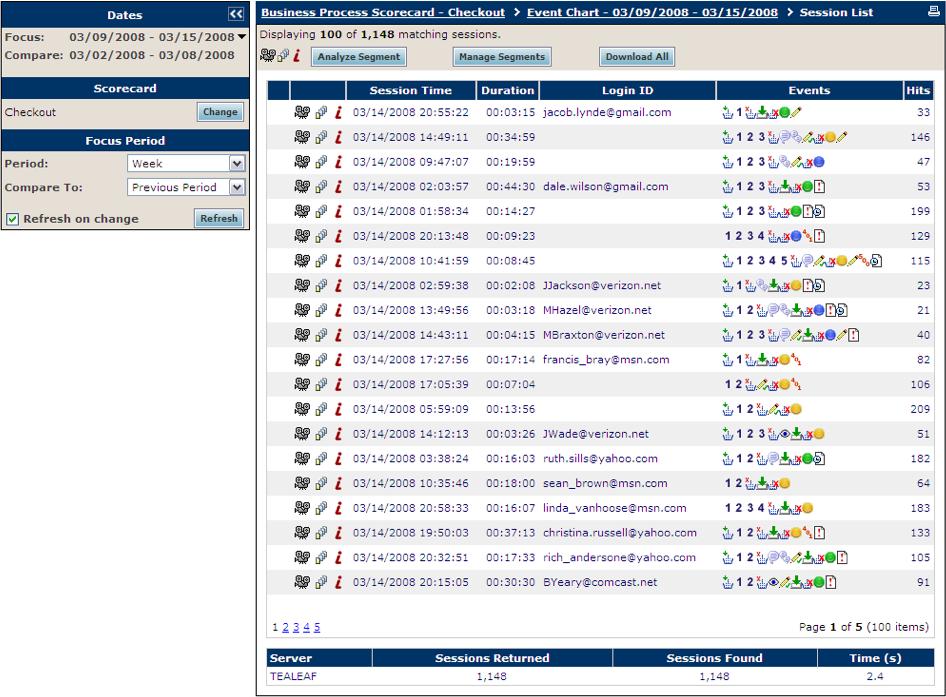

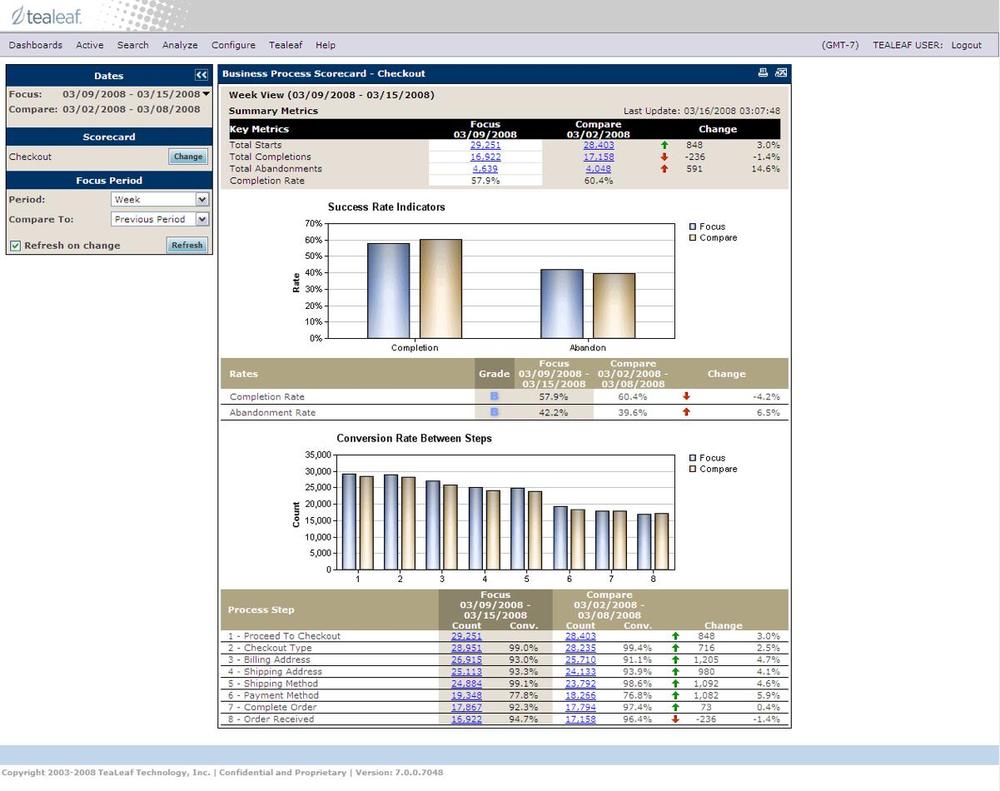

The best place to start is a conversion funnel. For example, in Figure 6-15 a segment of 29,251 visits reached the conversion stage of a sales funnel for an online store.

Let’s say you’re selling a World of Warcraft MMORPG upgrade, and you have a high rate of abandonment on the lichking.php page. You need to identify visits that made it to this stage in the process but didn’t continue to the following step. There are three basic approaches to this:

- The hard way

Find all visits that included lichking.php, then go through them one by one until you find a visit that ended there.

- The easier way

Use a WIA tool that tags visits with metadata such as “successful conversion” when they’re captured. Query for all visits that included lichking.php but did not convert.

- The easiest way

If your WIA solution is integrated with an analytics tool, you may be able to jump straight from the analytics funnel into the captured visits represented by that segment, as shown in Figure 6-16.

Figure 6-16. A list of captured visits in Tealeaf corresponding to the conversion segments in Figure 6-15; in this case, they’re for World of Warcraft purchases

From this segment, you can then select an individual visit to review from a list (such as the one shown in Figure 6-17) and understand what happened.
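The first two approaches can be sketched in a few lines of Python. This is only an illustration, assuming captured visits have been exported as simple records. The `Visit` class, its fields, the sample data, and the page paths other than lichking.php are hypothetical and do not reflect any particular WIA product's API.

```python
from dataclasses import dataclass, field


@dataclass
class Visit:
    """Hypothetical record for one captured visit."""
    visit_id: str
    pages: list                                # pages in the order the visitor saw them
    tags: set = field(default_factory=set)     # metadata added at capture time


def ended_at(visits, page):
    """The hard way: keep only visits whose last page was `page`."""
    return [v for v in visits if v.pages and v.pages[-1] == page]


def abandoned_at(visits, page):
    """The easier way: visits that included `page` but were never tagged as converted."""
    return [
        v for v in visits
        if page in v.pages and "successful conversion" not in v.tags
    ]


# Made-up sample data for illustration only.
visits = [
    Visit("a1", ["/store", "/lichking.php", "/checkout.php"], {"successful conversion"}),
    Visit("b2", ["/store", "/lichking.php"]),
    Visit("c3", ["/store", "/faq"]),
]

abandoned_ids = [v.visit_id for v in abandoned_at(visits, "/lichking.php")]
print(abandoned_ids)
```

The tag-based query is the more forgiving of the two: it also catches visitors who wandered to another page after lichking.php without ever converting, which a "last page" check would miss.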

One final note on conversion optimizations: when you’re trying to improve conversions, don’t just obsess over problem visits and abandonment. Look for patterns of success to learn what your “power users” do and to try to understand what works.

You can’t help someone if you don’t know what the problem is. When tied into call centers and helpdesks, WIA gives you a much better understanding of what transpired from the visitor’s perspective. Visitors aren’t necessarily experiencing errors. They may simply not understand the application, and may be typing information into the wrong fields. This is particularly true for consumer applications where the visitor has little training, may be new to the site, and may not be technically sophisticated.

If this is how you plan to use WIA, you’ll need to extract information from each visit that helps you to uniquely identify each visitor. For example, you might index visits by the visitor’s name, account number, the amount of money spent, the number of comments made, how many reports he generated, and so on.

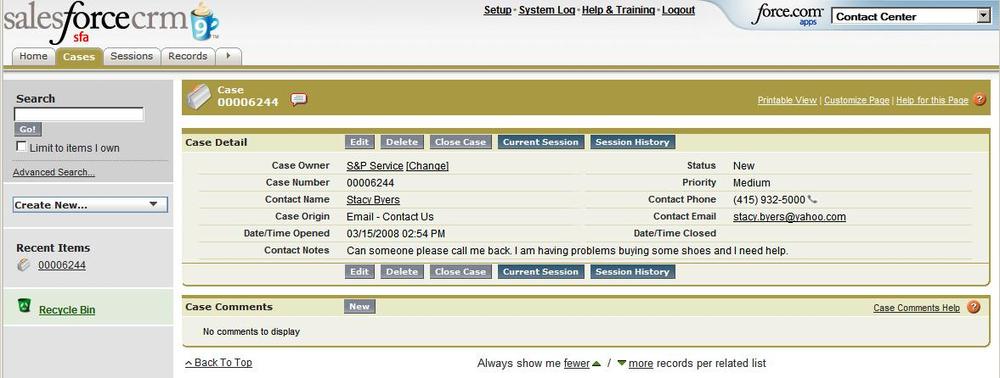

You’ll also want to tie the WIA system into whatever Customer Relationship Management (CRM) solution you’re using so that helpdesk personnel have both the visitor’s experience and account history at their fingertips. Figure 6-18 shows an example of Tealeaf’s replay integrated into a Salesforce.com CRM application.

The helpdesk staff member receives a trouble ticket that includes a link to the visitor’s current session. This means she’s able to contact the suffering customer with the full context of the visit.

While WIA for helpdesks can assist visitors with the application, sometimes it’s not the visitor’s fault at all, but rather an error with the application that you weren’t able to anticipate or detect through monitoring. When this happens, you need to see the problem firsthand so you can diagnose it.

While you’ll hear about many of these problems from the helpdesk, you can also find them yourself if you know where to look.

When a form is rejected as incomplete or incorrect, make sure your WIA solution marks the page and the visit as one in which there were form problems. You may find that visitors are consistently entering data in the wrong field due to tab order or page layout.

When a page is slow or has errors, check to see if visitors are unable to interact with the page properly. Your visitors may be trying to use a page that’s still missing some of its buttons and formatting, leading to errors and frustration.

If there’s an expected sequence of events in a typical visit, you may be able to flag visits that don’t follow that pattern of behavior, so that you can review them. For example, if you expect visitors to log in and then see a welcome page, any visit in which that sequence of events didn’t happen is worthy of your attention.
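The sequence check in the last example could look something like the following sketch. It assumes each visit is available as an ordered list of page paths. The paths `/login` and `/welcome`, the function names, and the sample visits are all hypothetical.

```python
def follows_sequence(pages, first, then):
    """True if every occurrence of `first` is immediately followed by `then`."""
    for i, page in enumerate(pages):
        if page == first:
            if i + 1 >= len(pages) or pages[i + 1] != then:
                return False
    return True


def flag_unexpected(visits, first="/login", then="/welcome"):
    """Return the IDs of visits that broke the expected page sequence."""
    return [
        visit_id for visit_id, pages in visits.items()
        if not follows_sequence(pages, first, then)
    ]


# Made-up sample data: visit ID -> pages in the order they were seen.
visits = {
    "v1": ["/", "/login", "/welcome", "/account"],  # expected behavior
    "v2": ["/", "/login", "/error"],                # login led somewhere else
    "v3": ["/", "/login"],                          # visit ended at login
    "v4": ["/", "/products"],                       # never logged in, so not flagged
}

flagged = flag_unexpected(visits)
print(flagged)
```

Visits that never reach the login page are deliberately left alone here; whether they deserve review is a separate question.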

Once you’ve filtered the visits down to those in which the event occurred, browsing through them becomes much more manageable, as shown in Figure 6-19.

In many WIA tools, the list of visits will be decorated with icons (representing visitors who spent money, enrolled, or departed suddenly, for example) so that operators can quickly identify them. Decorating visits in this way is something we’ll cover when we look at implementing WIA.

The teams that test web applications are constantly looking for use cases. Actual visits are a rich source of test case material, and you may be able to export the HTTP transactions that make up a visit so that testers can reuse those scripts for future testing.

This is particularly useful if you have a copy of a visit that broke something. It’s a good idea to add such visits to the suite of regression tests that test teams run every time they release a new version of the application, to ensure that old problems haven’t crept back in. Remember, however, that any scripts you generate need to be “neutered” so that they don’t trigger actual transactions or contain personal information about the visitor whose visit was captured.
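As a rough illustration of what "neutering" might involve, the sketch below rewrites an exported visit so that it targets a test host, drops session cookies, and redacts personal form fields. The request structure, the field names in `SENSITIVE_FIELDS`, and the host `test.example.com` are assumptions made for this example; a real export format will differ by product.

```python
import copy

# Hypothetical form fields considered personal; adjust for your own application.
SENSITIVE_FIELDS = {"name", "email", "card_number", "password"}

# Hypothetical test environment, so replayed scripts never hit production.
TEST_HOST = "test.example.com"


def neuter(requests):
    """Return a sanitized copy of a captured visit's HTTP requests."""
    cleaned = []
    for original in requests:
        req = copy.deepcopy(original)       # leave the captured data untouched
        req["host"] = TEST_HOST             # redirect to the test environment
        req["form"] = {
            key: ("REDACTED" if key in SENSITIVE_FIELDS else value)
            for key, value in req.get("form", {}).items()
        }
        req.get("headers", {}).pop("Cookie", None)  # drop the visitor's session
        cleaned.append(req)
    return cleaned


# Made-up captured request for illustration only.
captured = [
    {
        "method": "POST",
        "host": "www.example.com",
        "path": "/checkout",
        "headers": {"Cookie": "session=abc123", "Accept": "text/html"},
        "form": {"name": "Pat Smith", "card_number": "4111111111111111", "quantity": "1"},
    }
]

script = neuter(captured)
print(script[0]["host"], script[0]["form"])
```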

There’s no substitute for seeing what happened, particularly when you’re trying to convince a department that the problem is its responsibility. Replacing anecdotes with facts is the fastest way to resolve a dispute, and WIA gives you the proof you need to back up your claims.

Dispute resolution may be more than just proving you’re right. In 2006, the U.S. government expanded the rules for information discovery in civil litigation (http://www.uscourts.gov/rules/congress0406.html), making it essential for companies in some industries to maintain historical data, and also to delete it in a timely fashion when allowed to do so. (WIA data that is stored for dispute resolution may carry with it certain storage restrictions, and you may have to digitally sign the data or take other steps to prevent it from being modified by third parties once captured.)
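One simple way to make stored visits tamper-evident is to compute a keyed hash over each record at capture time and verify it later. The sketch below uses HMAC-SHA256 from the Python standard library. Note that this is a stand-in for illustration: an HMAC is not a public-key digital signature, and whether it satisfies a given legal requirement is something to confirm with counsel. The record layout and the key are hypothetical.

```python
import hashlib
import hmac
import json


def seal(record, key):
    """Compute an HMAC-SHA256 seal over a canonical JSON form of the record."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def is_unmodified(record, key, seal_hex):
    """True if the record still matches the seal computed at capture time."""
    return hmac.compare_digest(seal(record, key), seal_hex)


# Hypothetical key and captured record, for illustration only.
key = b"replace-with-a-secret-kept-outside-the-archive"
record = {"visit_id": "b2", "pages": ["/store", "/lichking.php"]}

stored_seal = seal(record, key)

# Simulate someone altering the archived visit after capture.
tampered = dict(record, pages=["/store"])

print(is_unmodified(record, key, stored_seal))
print(is_unmodified(tampered, key, stored_seal))
```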

As a web analyst, you’ll often want to ask “what if” questions. You’ll think of new segments along which you’d like to analyze conversion and engagement, for example, “Did people who searched the blog before they posted a comment eventually sign up for the newsletter?”

To do this with web analytics, you’d need to reconfigure your analytics tool to track the new segment, then wait for more visits to arrive. Reconfiguration would involve creating a page tag for the search page and for the comment page. Finally, once you had a decent sample of visits, you’d analyze conversions according to the newly generated segments. This would be time-consuming, and with many analytics products only providing daily reports, it would take several days to get it right. The problem, in a nutshell, is that web analytics tools can’t look backward in time at things you didn’t tell them to segment.

On the other hand, if you’ve already captured every visit in order to replay it, you may be able to mine the captured visits to do retroactive segmentation—to slice up visits based on their attributes and analyze them in ways you didn’t anticipate. Because you have all of the HTML, you can ask questions about page content. You can effectively create a segment from the recorded visits. You can mark all visits in which the text “Search Results” appears, and all the visits in which the text “Thanks for your comment!” appears—and now you have two new segments.
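Retroactive segmentation of this kind amounts to a text search over stored page content. Here is a minimal sketch, assuming each captured visit is available as a list of HTML strings. The data layout and sample markup are hypothetical.

```python
def segment_by_text(visits, needle):
    """Return the IDs of visits in which any captured page contains `needle`."""
    return {
        visit_id for visit_id, pages in visits.items()
        if any(needle in html for html in pages)
    }


# Made-up captured visits: visit ID -> HTML of each page, in order.
visits = {
    "v1": ["<h1>Search Results</h1>", "<p>Thanks for your comment!</p>"],
    "v2": ["<h1>Search Results</h1>"],
    "v3": ["<p>Thanks for your comment!</p>"],
    "v4": ["<h1>Home</h1>"],
}

searched = segment_by_text(visits, "Search Results")
commented = segment_by_text(visits, "Thanks for your comment!")

# Visits that fall into both segments. Page order is not checked here.
searched_and_commented = searched & commented
print(sorted(searched_and_commented))
```

Because the segments are plain sets of visit IDs, they can be combined with ordinary set operations, which is what makes "what if" questions cheap to ask after the fact.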

This is particularly useful when evaluating the impact of errors on conversions. If visitors get a page saying, “ODBC Error 1234,” for example, performing a search for that string across all visits will show you how long the issue has been happening, and perhaps provide clues about what visitors did to cause the problem before seeing that page. You will not only have a better chance of fixing things, you will also know how common the problem is.

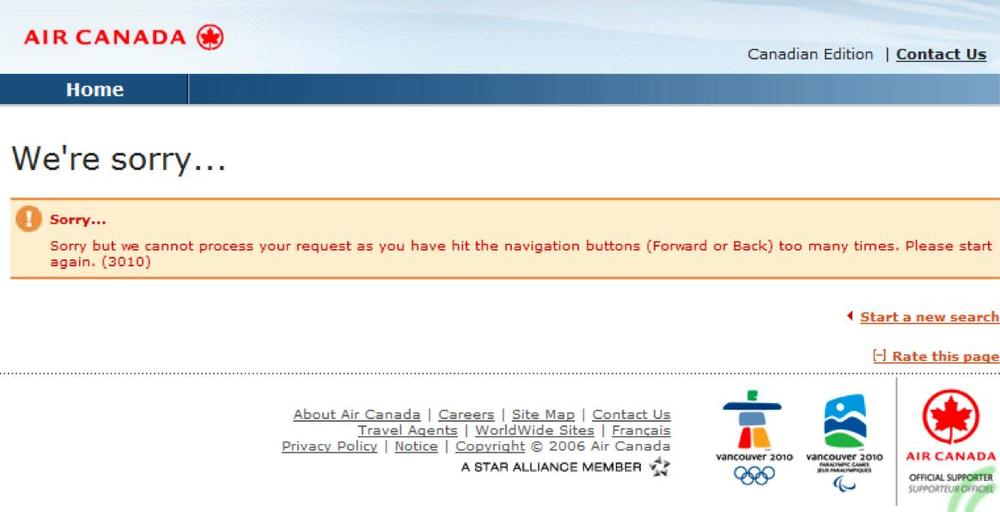

Consider the example in Figure 6-20. In this case, the visitor clicked the Back button once, entered new data, and clicked Submit.

The application broke. As an analyst, you want to know how often this happens to visitors, and how it affects conversion. With an analytics tool, you’d create a custom page tag for this message, wait a while to collect some new visits that had this tag in them, then segment conversions along that dimension.

By searching for the string “you have hit the navigation buttons,” you’re effectively constructing a new segment. You can then compare this segment to normal behavior and see how it differs. Because you’re creating that segment from stored visits, there’s no need to wait for new visits to come in. Figure 6-21 shows a comparison of two segments of captured visits.
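The comparison itself is straightforward once the segment exists. The following sketch splits stored visits by whether any page contains the error text, then computes a conversion rate for each group. The visit records, the `converted` flag, and the sample numbers are invented for illustration and are not the figures behind Figure 6-21.

```python
def split_by_text(visits, needle):
    """Partition visits into those containing `needle` (case-insensitive) and the rest."""
    needle = needle.lower()
    matching, others = [], []
    for visit in visits:
        hit = any(needle in page.lower() for page in visit["pages"])
        (matching if hit else others).append(visit)
    return matching, others


def conversion_rate(visits):
    """Fraction of visits flagged as converted; 0.0 for an empty segment."""
    if not visits:
        return 0.0
    return sum(1 for v in visits if v["converted"]) / len(visits)


# Made-up stored visits for illustration only.
visits = [
    {"pages": ["Cart", "Error: you have hit the navigation buttons"], "converted": False},
    {"pages": ["Cart", "Error: You have hit the navigation buttons", "Receipt"], "converted": True},
    {"pages": ["Cart", "Receipt"], "converted": True},
    {"pages": ["Cart", "Receipt"], "converted": True},
]

broken, normal = split_by_text(visits, "you have hit the navigation buttons")
print(f"error segment: {conversion_rate(broken):.0%} of {len(broken)} visits converted")
print(f"other visits:  {conversion_rate(normal):.0%} of {len(normal)} visits converted")
```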

Open text search like this consumes a great deal of storage, and carries with it privacy concerns, but it’s valuable because we seldom know beforehand that issues like this are going to happen.