Hypertext Markup Language (HTML) is the fundamental technology used to create web pages. HTML is composed of HTML elements that consist of so-called tags enveloped in slanted brackets (for example, <html>). Often, tags are paired with a starting and closing tag in a hierarchical tree-like structure. An HTML-related draft specification was first published by Berners-Lee in 1991. Initially, there were only 18 HTML elements. The formal HTML definition was published by the Internet Engineering Task Force (IETF) in 1993. The IETF completed the HTML 2.0 standard in 1995. Around 2013, the latest HTML version, HTML5, was specified. HTML is not a very strict standard if compared to XHTML and XML.

Modern browsers tolerate a lot of violations of the standard, making web pages a form of unstructured data. We can treat HTML as a big string and perform string operations on it with regular expressions, for example. This approach works only for simple projects.

I have worked on web scraping projects in a professional setting; so from personal experience, I can tell you that we need more sophisticated methods. In a real world scenario, it may be necessary to submit HTML forms programmatically, for instance, to log in, navigate through pages, and manage cookies robustly. The problem with scraping data from the Web is that if we don't have full control of the web pages that we are scraping, we may have to change our code quite often. Also, programmatic access may be actively blocked by the website owner, or may even be illegal. For these reasons, you should always try to use other alternatives first, such as a REST API.

In the event that you must retrieve the data by scraping, it is recommended to use the Python Beautiful Soup API. This API can extract data from both HTML and XML files. New projects should use Beautiful Soup 4, since Beautiful Soup 3 is no longer developed. We can install Beautiful Soup 4 with the following command (similar to easy_install):

$ pip install beautifulsoup4 $ pip freeze|grep beautifulsoup beautifulsoup4==4.3.2

Note

On Debian and Ubuntu, the package name is python-bs4. We can also download the source from http://www.crummy.com/software/BeautifulSoup/download/4.x/. After unpacking the source, we can install Beautiful Soup from the source directory with the following command:

$ python setup.py install

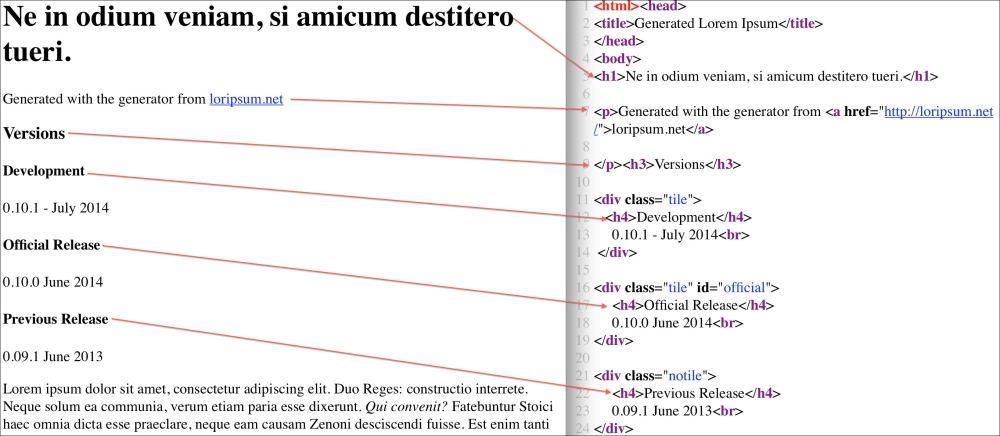

If this doesn't work, you are allowed to simply package Beautiful Soup along with your own code. To demonstrate parsing HTML, I have generated the loremIpsum.html file in this book's code bundle with the generator from http://loripsum.net/. Then, I edited the file a bit. The content of the file is a first century BC text in Latin by Cicero, which is a traditional way to create mock-ups of websites. Refer to the following screenshot for the top part of the web page:

In this example, we will be using Beautiful Soup 4 and the standard Python regular expression library:

Import these libraries with the following lines:

from bs4 import BeautifulSoup import re

Open the HTML file and create a BeautifulSoup object with the following line:

soup = BeautifulSoup(open('loremIpsum.html'))Using a dotted notation, we can access the first <div> element. The <div> HTML element is used to organize and style elements. Access the first div element as follows:

print "First div ", soup.div

The resulting output is an HTML snippet with the first <div> tag and all the tags it contains:

First div <div class="tile"> <h4>Development</h4> 0.10.1 - July 2014<br/> </div>

Note

This particular div element has a class attribute with the value tile. The class attribute pertains to the CSS style that is to be applied to this div element. Cascading Style Sheets (CSS) is a language used to style elements of a web page. CSS is a widespread specification that handles the look and feel of web pages through CSS classes. CSS aids in separating content and presentation by defining colors, fonts, and the layout of elements. The separation leads to a simpler and cleaner design.

Attributes of a tag can be accessed in a dict-like fashion. Print the class attribute value of the <div> tag as follows:

print "First div class", soup.div['class'] First div class ['tile']

The dotted notation allows us to access elements at an arbitrary depth. For instance, print the text of the first <dfn> tag as follows:

print "First dfn text", soup.dl.dt.dfn.text

A line with Latin text is printed (Solisten, I pray):

First dfn text Quareattende, quaeso.

Sometimes, we are only interested in the hyperlinks of an HTML document. For instance, we may only want to know which document links to which other documents. In HTML, links are specified with the <a> tag. The href attribute of this tag holds the URL the link points to. The BeautifulSoup class has a handy find_all() method, which we will use a lot. Locate all the hyperlinks with the find_all() method:

for link in soup.find_all('a'):

print "Link text", link.string, "URL", link.get('href')There are three links in the document with the same URL, but with three different texts:

Link text loripsum.net URL http://loripsum.net/ Link text Potera tautem inpune; URL http://loripsum.net/ Link text Is es profecto tu. URL http://loripsum.net/

We can omit the find_all() method as a shortcut. Access the contents of all the <div> tags as follows:

for i, div in enumerate(soup('div')):

print i, div.contentsThe contents attribute holds a list with HTML elements:

0 [u' ', <h4>Development</h4>, u' 0.10.1 - July 2014', <br/>, u' '] 1 [u' ', <h4>Official Release</h4>, u' 0.10.0 June 2014', <br/>, u' '] 2 [u' ', <h4>Previous Release</h4>, u' 0.09.1 June 2013', <br/>, u' ']

A tag with a unique ID is easy to find. Select the <div> element with the official ID and print the third element:

official_div = soup.find_all("div", id="official")

print "Official Version", official_div[0].contents[2].strip()Many web pages are created on the fly based on visitor input or external data. This is how most content from online shopping websites is served. If we are dealing with a dynamic website, we have to remember that any tag attribute value can change in a moment's notice. Typically, in a large website, IDs are automatically generated resulting in long alphanumeric strings. It's best to not look for exact matches but use regular expressions instead. We will see an example of a match based on a pattern later. The previous code snippet prints a version number and month as you might find on a website for a software product:

Official Version 0.10.0 June 2014

As you know, class is a Python keyword. To query the class attribute in a tag, we match it with class_. Get the number of <div> tags with a defined class attribute:

print "# elements with class", len(soup.find_all(class_=True))

We find three tags as expected:

# elements with class 3

Find the number of <div> tags with the class "tile":

tile_class = soup.find_all("div", class_="tile")

print "# Tile classes", len(tile_class)There are two <div> tags with class tile and one <div> tag with class notile:

# Tile classes 2

Define a regular expression that will match all the <div> tags:

print "# Divs with class containing tile", len(soup.find_all("div", class_=re.compile("tile")))Again, three occurrences are found:

# Divs with class containing tile 3

In CSS, we can define patterns in order to match elements. These patterns are called CSS selectors and are documented at http://www.w3.org/TR/selectors/. We can select elements with the CSS selector from the BeautifulSoup class too. Use the select() method to match the <div> element with class notile:

print "Using CSS selector

", soup.select('div.notile')The following is printed on the screen:

Using CSS selector [<div class="notile"> <h4>Previous Release</h4> 0.09.1 June 2013<br/> </div>]

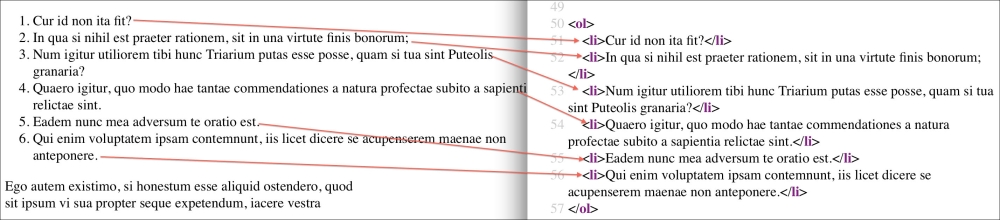

An HTML-ordered list looks like a numbered list of bullets. The ordered list consists of an <ol> tag and several <li> tags for each list item. The result from the select() method can be sliced as any Python list. Refer to the following screenshot of the ordered list:

Select the first two list items in the ordered list:

print "Selecting ordered list list items

", soup.select("ol> li")[:2]The following two list items are shown:

Selecting ordered list list items [<li>Cur id non ita fit?</li>, <li>In qua si nihil est praeter rationem, sit in una virtute finis bonorum;</li>]

In the CSS selector mini language, we start counting from 1. Select the second list item as follows:

print "Second list item in ordered list", soup.select("ol>li:nth-of-type(2)")The second list item can be translated in English as In which, if there is nothing contrary to reason, let him be the power of the end of the good things in one:

Second list item in ordered list [<li>In qua si nihil est praeter rationem, sit in una virtute finis bonorum;</li>]

If we are looking at a web page in a browser, we may decide to retrieve the text nodes that match a certain regular expression. Find all the text nodes containing the string 2014 with the text attribute:

print "Searching for text string", soup.find_all(text=re.compile("2014"))This prints the following text nodes:

Searching for text string [u'

0.10.1 - July 2014', u'

0.10.0 June 2014']

This was just a brief overview of what the BeautifulSoup class can do for us. Beautiful Soup can also be used to modify HTML or XML documents. It has utilities to troubleshoot, pretty print, and deal with different character sets. Please refer to soup_request.py for the code:

from bs4 import BeautifulSoup

import re

soup = BeautifulSoup(open('loremIpsum.html'))

print "First div

", soup.div

print "First div class", soup.div['class']

print "First dfn text", soup.dl.dt.dfn.text

for link in soup.find_all('a'):

print "Link text", link.string, "URL", link.get('href')

# Omitting find_all

for i, div in enumerate(soup('div')):

print i, div.contents

#Div with id=official

official_div = soup.find_all("div", id="official")

print "Official Version", official_div[0].contents[2].strip()

print "# elements with class", len(soup.find_all(class_=True))

tile_class = soup.find_all("div", class_="tile")

print "# Tile classes", len(tile_class)

print "# Divs with class containing tile", len(soup.find_all("div", class_=re.compile("tile")))

print "Using CSS selector

", soup.select('div.notile')

print "Selecting ordered list list items

", soup.select("ol> li")[:2]

print "Second list item in ordered list", soup.select("ol>li:nth-of-type(2)")

print "Searching for text string", soup.find_all(text=re.compile("2014"))