Once your analytics is in place and running correctly, you need to share the data with those who can make use of it.

No organization wants to be overwhelmed with dozens of reports at the outset. Giving your stakeholders open access to the entire system may backfire, as they won’t understand the various terms and reports without some explanation. It’s far better to pick a few reports that are tailored to each recipient and send regular mailouts. For example:

For web designers, provide information on conversion, abandonment, and click heatmaps.

For marketers and advertisers, show which campaigns are working best and which keywords are most successful.

For operators, show information on technical segments such as bandwidth and browser type, as well as countries and service providers from which visitors are arriving. They can then include those regions in their testing and monitoring.

For executives, provide comparative reports of month-over-month and quarterly growth of KPIs like revenue and visitors.

For content creators, show which content has the lowest bounce rates, which content makes people leave quickly, and what visitors are searching for.

For community managers, show which sites are referring the most visitors and which are leading to the most outcomes, as well as which articles have the most comments.

For support personnel, show which search terms are most popular on help pages and which URLs are most often exits from the site, as well as which pages immediately precede a click on the Support button.

Include a few KPIs and targets for improvement in your business plans. Revisit those KPIs and see how you’re doing against them. If you really want to impress executives, use a waterfall report of KPIs to show whether you’re making progress against targets. Waterfall reports consist of a forecast (such as monthly revenue) below which actual values are written. They’re a popular reporting format for startups, as they show both performance against a plan and changes to estimates at a glance. See http://redeye.firstround.com/2006/07/one_of_the_toug.html for some examples of waterfall reports.
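As a rough illustration of the format, the following sketch prints a small waterfall report as plain text. All figures, row labels, and the `waterfall_rows` helper are hypothetical and exist only to show the layout: forecast rows on top, actual values written below them, and a final row comparing actuals to the original plan.

```python
# Hypothetical monthly revenue figures (in thousands); not taken from any real plan.
months = ["Jan", "Feb", "Mar", "Apr"]

# Each forecast row records the plan as it stood when it was made.
# None means the month had already closed when the forecast was revised.
forecasts = {
    "Plan (Dec)": [100, 110, 120, 130],
    "Reforecast (Feb)": [None, 105, 112, 120],
}
actuals = [95, 104, None, None]  # None = month not yet closed


def fmt(value):
    """Right-align a number in a six-character column; show '-' for missing values."""
    return f"{value:>6}" if value is not None else f"{'-':>6}"


def waterfall_rows(months, forecasts, actuals):
    """Return the report as a list of text rows: header, forecasts, actuals, variance."""
    rows = [f"{'':<18}" + "".join(f"{m:>6}" for m in months)]
    for label, values in forecasts.items():
        rows.append(f"{label:<18}" + "".join(fmt(v) for v in values))
    rows.append(f"{'Actual':<18}" + "".join(fmt(v) for v in actuals))
    # Compare actuals with the first (original) forecast row.
    plan = next(iter(forecasts.values()))
    variance = [a - p if a is not None else None for a, p in zip(actuals, plan)]
    rows.append(f"{'Actual vs. plan':<18}" + "".join(fmt(v) for v in variance))
    return rows


print("\n".join(waterfall_rows(months, forecasts, actuals)))
```

Reading down a column shows how the estimate for that month changed over time; reading across the last two rows shows performance against the plan.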

Once analytics is gaining acceptance within your organization, find a couple of key assumptions about an upcoming release to the site and instrument them. If the home page is supposed to improve enrollment, set up a test to see if that’s the case. If a faster, more lightweight page design is expected to lower bounce rates, see if it’s actually having the desired effect. Gradually add KPIs and fine-tune the site. What you choose should be directly related to your business:

If you’re focused on retention, track metrics such as time spent on the site and time between return visits.

If you’re in acquisition mode, measure and segment your viral coefficient (the number of new users who sign up because of an existing user) to discover which groups will get you to critical mass most quickly.

If you’re trying to maximize advertising ROI, compare the cost of advertising keywords to the sales they generate.

If you’re trying to find the optimum price to charge, try different pricing levels for products and determine your price elasticity to set the optimum combination of price and sales volume.
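To make the pricing case concrete, here is a minimal sketch that assumes you have already tested a few price levels and recorded unit sales at each. The figures are hypothetical, and the midpoint (arc) formula is only one common way to estimate elasticity between two tested prices.

```python
def arc_elasticity(price_a, qty_a, price_b, qty_b):
    """Midpoint (arc) price elasticity of demand between two tested price points."""
    pct_qty = (qty_b - qty_a) / ((qty_a + qty_b) / 2)
    pct_price = (price_b - price_a) / ((price_a + price_b) / 2)
    return pct_qty / pct_price


def best_price(price_tests):
    """Return the tested price that produced the most revenue (price * units sold)."""
    return max(price_tests, key=lambda price: price * price_tests[price])


# Hypothetical test results: price -> units sold per 1,000 visitors.
price_tests = {10.0: 50, 12.0: 45, 15.0: 30}

prices = sorted(price_tests)
for low, high in zip(prices, prices[1:]):
    e = arc_elasticity(low, price_tests[low], high, price_tests[high])
    print(f"{low:.2f} -> {high:.2f}: elasticity {e:.2f}")

print("Highest-revenue tested price:", best_price(price_tests))
```

An elasticity between 0 and -1 suggests that raising the price increases revenue; a value below -1 suggests the increase costs more in lost sales than it gains per sale.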

There are many books on KPIs, such as Eric Peterson’s Big Book of Key Performance Indicators (www.webanalyticsdemystified.com/), to get you started.

For your organization to become analytics-driven, web activity needs to be communicated consistently. Companies that don’t use analytics as the basis for decision-making become lazy and resort to gut-level decisions. The Web has given us an unprecedented ability to track and improve our businesses, and it shouldn’t be squandered.

The best way to make your organization analytics-driven is to communicate analytics data regularly and to display it prominently. Annotate your analytics reports with key events, such as product launches, marketing campaigns, or online mentions. Build analytics into product requirements. The more your organization understands the direct impact its actions have on web KPIs, the more it will demand analytical feedback for what it does.

If you want to add a new metric to analytics reports you share with others, consider showcasing a particular metric for a month or so, rather than changing the reports constantly. You want people to learn the four or five KPIs on which the business is built.

Think of your website as a living organism rather than a finished product. That way, you’ll know that constant adjustment is the norm. By providing a few key reports and explaining what changes you’d like to see—lower bounce rates, for example, or fewer abandonments on the checkout page—you can solicit suggestions from your stakeholders, try some of those suggestions, and show them which one(s) worked best. You’ll have taught them an important lesson in experimentation.

One of the main ways your monitoring data will be used is for experimenting, whether it’s simply analytics, or other metrics from performance, usability, and customer feedback. Knowing your site’s desired outcomes and the metrics by which you can judge them, you can try new tactics—from design, to campaigns, to content, to pricing—and see which ones improve those metrics. Avoid the temptation to trust your instincts—they’re almost certainly wrong. Instead, let your visitors show you what works through the science of A/B testing.

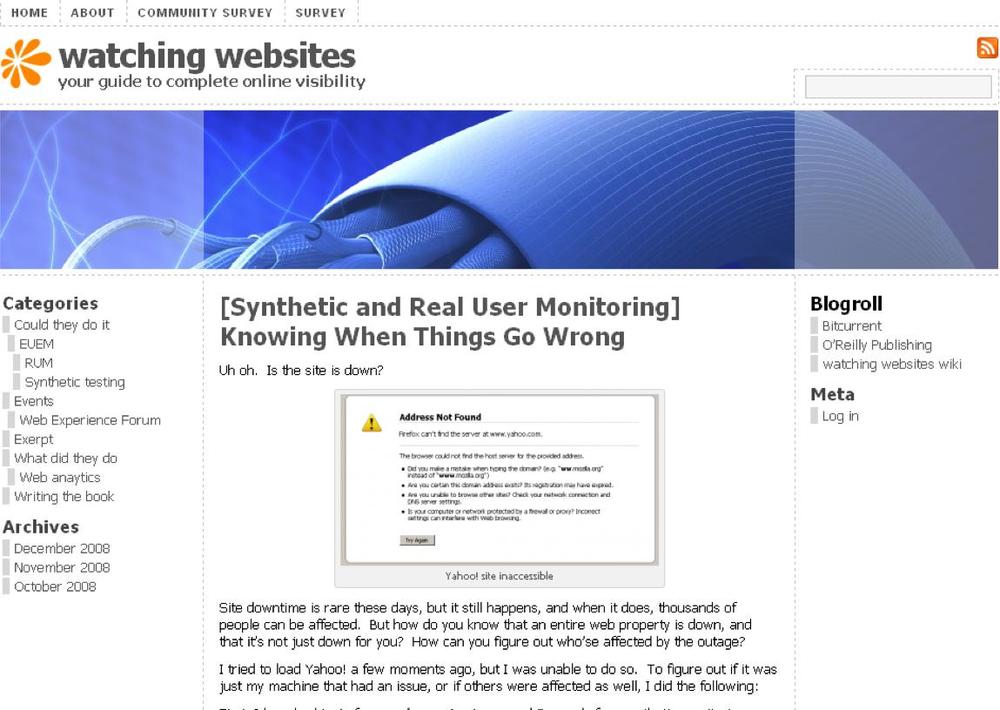

A/B testing involves trying two designs, two kinds of content, or two layouts to see which is more effective. Start with a hypothesis. Maybe you think that the current web layout is inferior to a proposed new one. Pit the two designs against one another to see which wins. Of course, “winning” here means improving your KPIs, which is why you’ve worked so hard to define them. Let’s assume that you want to maximize enrollment on the Watching Websites website. Your metric is the number of RSS feed subscribers. The current layout, shown in Figure 5-54, hides a relatively small icon for RSS subscription in the upper-right corner of the page.

You might design a new layout (the “treatment” page) with a hard-to-miss enrollment icon, like the one shown in Figure 5-55, and test it against the original, or “control,” design.

RSS subscriptions are the main metric for success in this test, but you should also monitor other KPIs such as bounce rate and time on site to be sure that you have improved enrollment without jeopardizing other important metrics.
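To judge whether a difference between control and treatment is more than noise, one common choice (our assumption here, not a method prescribed by any particular analytics package) is a two-proportion z-test. The sketch below uses only the Python standard library; the visitor and subscription counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_control, n_control, conv_treatment, n_treatment):
    """Compare two conversion rates.

    Returns (control_rate, treatment_rate, z, two_sided_p_value).
    """
    rate_c = conv_control / n_control
    rate_t = conv_treatment / n_treatment
    # Pooled rate under the null hypothesis that both pages convert equally well.
    pooled = (conv_control + conv_treatment) / (n_control + n_treatment)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_control + 1 / n_treatment))
    if se == 0:
        # No variation at all (for example, zero conversions on both pages).
        return rate_c, rate_t, 0.0, 1.0
    z = (rate_t - rate_c) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return rate_c, rate_t, z, p_value


# Hypothetical counts: RSS subscriptions out of visitors shown each page.
rate_c, rate_t, z, p = two_proportion_z_test(120, 10_000, 165, 10_000)
print(f"control {rate_c:.2%}, treatment {rate_t:.2%}, z = {z:.2f}, p = {p:.4f}")
```

A small p-value (0.05 is a conventional threshold) suggests the treatment's difference is unlikely to be chance alone. Running the same comparison on bounce rate or other KPIs helps confirm that the gain did not come at their expense.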

Testing just two designs at once can be inefficient—you’d probably like to try several things at once and improve more quickly—so advanced marketers do multivariate testing. This involves setting up several hypotheses, then testing them out in various combinations to see which work best together.

It’s hard to do multivariate testing by hand, so more advanced analytics packages can automatically try out content across visitors, taking multiple versions of offers and discovering which offers work best for which segments, essentially redesigning portions of your website without your intervention.
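The underlying idea can be sketched in a few lines, although this is not how any specific analytics package works. The sketch assumes three hypothetical page elements with two variants each, enumerates every combination, and assigns each visitor to one combination by hashing a visitor ID, so that a returning visitor always sees the same version.

```python
import hashlib
from itertools import product

# Hypothetical page elements and the variants to test for each.
factors = {
    "icon_size": ["small", "large"],
    "icon_position": ["upper-right", "sidebar"],
    "call_to_action": ["Subscribe", "Get updates"],
}


def all_combinations(factors):
    """Enumerate every combination of variants (a full factorial design)."""
    names = list(factors)
    return [dict(zip(names, values)) for values in product(*factors.values())]


def assign_variant(visitor_id, combinations):
    """Map a visitor ID to one combination, deterministically."""
    digest = hashlib.sha256(visitor_id.encode("utf-8")).hexdigest()
    return combinations[int(digest, 16) % len(combinations)]


combinations = all_combinations(factors)
print(len(combinations), "combinations to test")
print(assign_variant("visitor-12345", combinations))
```

The number of combinations grows multiplicatively with each added factor, so every new element you test divides your traffic further and lengthens the time needed to reach a conclusion.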

When embarking on testing, keep the following in mind:

- Determine your goals, challenge your assumptions

What you think works well may be horrible for your target audience. You simply don’t know. The only thing you know is which KPIs matter to your business and how you’d like to see them change. You’re not entitled to an opinion on how you achieve those KPIs. Decide what to track, then test many permutations while ignoring your intuition. Perhaps your designer has a design you hate. Why not try it? Maybe your competitors do something differently. Find out if they have a reason for doing so.

- Know what normal is

You need to establish a baseline before you can measure improvement. Other factors, such as a highly seasonal sales cycle, may muddy your test results. For example, imagine that your site gets more comments on the weekend. You may try an inferior design on a weekend and see the number of comments climb, and think that you’ve got a winner, but it may just be the weekend bump. Know what normal looks like, and be sure you’re testing fairly.

- Make a prediction

Before the test, try to guess what will happen to the control (baseline) and treatment (change) versions of the site. This will force you to list your assumptions, for example, “The new layout will reduce bounce rate, but also lower time on site.” By forcing yourself to make predictions, you’re ensuring that you don’t engage in what Eric Ries calls “after-the-fact rationalization.”

- Test against the original

The best way to avoid other factors that can cloud your results is to continuously test the control site against its own past performance. If you see a sudden improvement in the original site over past performance, you know there’s another factor that changed things. We’ve seen one case where a bandwidth upgrade improved page performance, raising conversion rates in the middle of multivariate testing. It did wonders for sales, but completely invalidated the testing. The analytics team knew something had messed up their tests only because both the control and treatment websites improved.

- Run the tests for long enough

Big sites have the luxury of large traffic volumes that let them run tests quickly and get results in hours or days. If you have only a few conversions a day, it may take weeks or months for you to have a statistically significant set of results. Even some of the world’s largest site operators told us they generally run tests for a week or more to let things “settle” before drawing any important conclusions.

- Make sure both A and B had a fair shake

For the results to be meaningful, all of your segments have to be properly represented. If all of the visitors who saw page A were from Europe, but all of those who saw page B were from North America, you wouldn’t know whether it was the page or the continent that made a difference. Compare segments across both the control and the treatment pages to be sure you have a fair comparison.
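To get a feel for how long "long enough" is, the following sketch estimates the visitors needed per variant using the standard normal-approximation formula for comparing two proportions. The significance level (0.05), power (0.8), conversion rates, and traffic figure are all assumptions chosen for illustration, and the function names are hypothetical.

```python
import math
from statistics import NormalDist


def visitors_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.8):
    """Approximate visitors needed per variant to detect the given change in rate."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided significance threshold
    z_beta = NormalDist().inv_cdf(power)           # desired chance of detecting the change
    variance = (baseline_rate * (1 - baseline_rate)
                + expected_rate * (1 - expected_rate))
    effect = expected_rate - baseline_rate
    return math.ceil((z_alpha + z_beta) ** 2 * variance / effect ** 2)


def days_to_run(baseline_rate, expected_rate, daily_visitors, variants=2):
    """Days of traffic needed if visitors are split evenly across the variants."""
    needed = visitors_per_variant(baseline_rate, expected_rate) * variants
    return math.ceil(needed / daily_visitors)


# Hypothetical site: 1.2% of visitors subscribe today; we hope to reach 1.5%.
print(visitors_per_variant(0.012, 0.015), "visitors per variant")
print(days_to_run(0.012, 0.015, daily_visitors=500), "days at 500 visitors a day")
```

Small improvements on low-traffic sites can take months to confirm, which matches the advice above. Even when the arithmetic says a day is enough, running for at least a full week covers weekday and weekend behavior.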

Once you’ve completed a few simple A/B tests, you can try changing several factors at once and move on to more complex testing. As an organization, you need to be allergic to sameness and addicted to constant improvement.

For more information on testing, we suggest Always Be Testing: The Complete Guide to Google Website Optimizer by Bryan Eisenberg et al. (Sybex) and “Practical Guide to Controlled Experiments on the Web: Listen to Your Customers not to the HiPPO” (http://exp-platform.com/hippo.aspx).