3.1 Data and Computers

Without data, computers would be useless. Every task a computer undertakes deals with managing data in some way. Therefore, our need to represent and organize data in appropriate ways is paramount.

Let’s start by distinguishing the terms data and information. Although these terms are often used interchangeably, making the distinction is sometimes useful, especially in computing. Data is basic values or facts, whereas information is data that has been organized and/or processed in a way that is useful in solving some kind of problem. Data can be unstructured and lack context. Information helps us answer questions (it “informs”). This distinction, of course, is relative to the needs of the user, but it captures the essence of the role that computers play in helping us solve problems.

In this chapter, we focus on representing different types of data. In later chapters, we discuss the various ways to organize data so as to solve particular types of problems.

In the not-so-distant past, computers dealt almost exclusively with numeric and textual data. Today, however, computers are truly multimedia devices, dealing with a vast array of information categories. Computers store, present, and help us modify many different types of data:

Numbers

Text

Audio

Images and graphics

Video

Ultimately, all of this data is stored as binary digits. Each document, picture, and sound bite is somehow represented as strings of 1s and 0s. This chapter explores each of these types of data in turn and discusses the basic ideas behind the ways in which we represent these types of data on a computer.

We can’t discuss data representation without also talking about data compression—reducing the amount of space needed to store a piece of data. In the past, we needed to keep data small because of storage limitations. Today, computer storage is relatively cheap—but now we have an even more pressing reason to shrink our data: the need to share it with others. The Web and its underlying networks have inherent bandwidth restrictions that define the maximum number of bits or bytes that can be transmitted from one place to another in a fixed amount of time. In particular, today’s emphasis on streaming video (video played as it is downloaded from the Web) motivates the need for efficient representation of data.

The compression ratio gives an indication of how much compression occurs. The compression ratio is the size of the compressed data divided by the size of the original data. The values could be in bits or characters (or whatever is appropriate), as long as both values measure the same thing. The ratio should result in a number between 0 and 1. The closer the ratio is to zero, the tighter the compression.
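The definition above amounts to a single division. The following is a minimal sketch in Python; the function name `compression_ratio` and the sample sizes are illustrative assumptions, not values from the text.

```python
def compression_ratio(compressed_size: int, original_size: int) -> float:
    """Return the compressed size divided by the original size.

    Both sizes must use the same unit (bits, bytes, characters, ...).
    """
    if original_size <= 0:
        raise ValueError("original_size must be positive")
    return compressed_size / original_size


# Hypothetical example: a 1,000-character document compressed to 250 characters.
ratio = compression_ratio(250, 1000)
print(ratio)  # 0.25
```

A ratio of 0.25 means the compressed version takes one quarter of the original space. A ratio near 1 would mean almost no space was saved.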

A data compression technique can be lossless, which means the data can be retrieved without losing any of the original information, or it can be lossy, in which case some information is lost in the process of compaction. Although we never want to lose information, in some cases this loss is acceptable. When dealing with data representation and compression, we always face a tradeoff between accuracy and size.
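To make the difference concrete, the sketch below contrasts the two cases. It assumes Python's standard `zlib` module as one example of a lossless technique, and it uses rounding as a deliberately simple stand-in for a lossy one. The sample data is invented for illustration.

```python
import zlib

# Lossless: decompressing gives back exactly the bytes we started with.
original = b"AAAAAAAAAABBBBBBBBBBCCCCCCCCCC" * 10
compressed = zlib.compress(original)
restored = zlib.decompress(compressed)
print(restored == original)              # True
print(len(compressed) < len(original))   # True

# Lossy: rounding readings to whole degrees saves space,
# but the discarded fractions cannot be recovered afterward.
readings = [74.568, 75.102, 75.949]
lossy = [round(r) for r in readings]
print(lossy)  # [75, 75, 76]
```

In the lossy case, nothing in the stored values 75, 75, and 76 tells us what the original fractional parts were. That is the accuracy we traded for size.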

Analog and Digital Data

The natural world, for the most part, is continuous and infinite. A number line is continuous, with values growing infinitely large and small. That is, you can always come up with a number that is larger or smaller than any given number. Likewise, the numeric space between two integers is infinite. For instance, any number can be divided in half. But the world is not just infinite in a mathematical sense. The spectrum of colors is a continuous rainbow of infinite shades. Objects in the real world move through continuous and infinite space. Theoretically, you could always close the distance between you and a wall by half, and you would never actually reach the wall.

Computers, by contrast, are finite. Computer memory and other hardware devices have only so much room to store and manipulate a certain amount of data. We always fail in our attempt to represent an infinite world on a finite machine. The goal, then, is to represent enough of the world to satisfy our computational needs and our senses of sight and sound. We want to make our representations good enough to get the job done, whatever that job might be.

Data can be represented in one of two ways: analog or digital. Analog data is a continuous representation, analogous to the actual information it represents. Digital data is a discrete representation, breaking the information up into separate elements.

A mercury thermometer is an analog device. The mercury rises in a continuous flow in the tube in direct proportion to the temperature. We calibrate and mark the tube so that we can read the current temperature, usually as an integer such as 75 degrees Fahrenheit. However, the mercury in such a thermometer is actually rising in a continuous manner between degrees. At some point in time, the temperature is actually 74.568 degrees Fahrenheit, and the mercury is accurately indicating that, even if our markings are not detailed enough to note such small changes. See FIGURE 3.1.

FIGURE 3.1 The mercury in a thermometer rises continuously in direct proportion to the temperature

Analog data is directly proportional to the continuous, infinite world around us. Computers, therefore, cannot work well with analog data. Instead, we digitize data by breaking it into pieces and representing those pieces separately. Each of the representations discussed in this chapter takes a continuous entity and separates it into discrete elements. Those discrete elements are then individually represented using binary digits.
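One way to picture digitizing is as two steps: measure the continuous quantity at a limited number of moments, then map each measurement to one of a limited number of levels. The sketch below is only an illustration under stated assumptions. The function `digitize`, its parameters, and the choice of a sine wave with values between -1 and 1 are ours, not a method described in the text.

```python
import math


def digitize(signal, duration, samples, levels):
    """Sample signal(t) at evenly spaced times and quantize each sample.

    Assumes signal(t) returns values in the range -1.0 to 1.0.
    Each sample is mapped to an integer level from 0 to levels - 1.
    """
    codes = []
    for i in range(samples):
        t = duration * i / samples                     # discrete moment in time
        value = signal(t)                              # continuous measurement
        level = round((value + 1) / 2 * (levels - 1))  # nearest discrete level
        codes.append(level)
    return codes


def wave(t):
    """A continuous signal: one full sine cycle per unit of time."""
    return math.sin(2 * math.pi * t)


codes = digitize(wave, duration=1.0, samples=8, levels=8)
print(codes)                              # eight integers, each from 0 to 7
print([format(c, "03b") for c in codes])  # the same levels as 3-bit strings
```

With eight levels, each sample fits in three bits. Using more samples or more levels would track the original wave more closely at the cost of more storage.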

But why do we use the binary system? We know from Chapter 2 that binary is just one of many equivalent number systems. Couldn’t we use, say, the decimal number system, with which we are already more familiar? We could. In fact, it’s been done. Computers have been built that are based on other number systems. However, modern computers are designed to use and manage binary values because the devices that store and manage the data are far less expensive and far more reliable if they have to represent only one of two possible values.

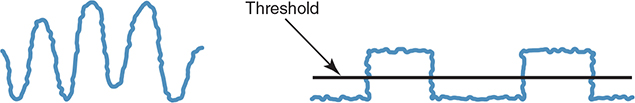

Also, electronic signals are far easier to maintain if they transfer only binary data. An analog signal continually fluctuates up and down in voltage, but a digital signal has only a high or low state, corresponding to the two binary digits. See FIGURE 3.2.

FIGURE 3.2 An analog signal and a digital signal

All electronic signals (both analog and digital) degrade as they move down a line. That is, the voltage of the signal fluctuates due to environmental effects. The trouble is that as soon as an analog signal degrades, information is lost. Because any voltage level within the range is valid, it’s impossible to know what the original signal state was or even that it changed at all.

Digital signals, by contrast, jump sharply between two extremes, as in pulse-code modulation (PCM), where sampled values are transmitted as binary pulses. A digital signal can become degraded by quite a bit before any information is lost, because any voltage value above a certain threshold is considered a high value, and any value below that threshold is considered a low value. Periodically, a digital signal is reclocked to regain its original shape. As long as it is reclocked before too much degradation occurs, no information is lost. FIGURE 3.3 shows the effects of degradation on analog and digital signals.

FIGURE 3.3 Degradation of analog and digital signals
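The threshold idea can be sketched in a few lines. The voltage levels below (5.0 for high, 0.0 for low, and a threshold of 2.5) are assumed values chosen for illustration, and the function names `to_bits` and `reclock` are hypothetical.

```python
HIGH = 5.0        # assumed voltage of a clean high state
LOW = 0.0         # assumed voltage of a clean low state
THRESHOLD = 2.5   # assumed dividing line between high and low


def to_bits(voltages, threshold=THRESHOLD):
    """Interpret each voltage as a 1 (above threshold) or a 0 (otherwise)."""
    return [1 if v > threshold else 0 for v in voltages]


def reclock(voltages, threshold=THRESHOLD):
    """Restore each degraded voltage to a clean high or low level."""
    return [HIGH if v > threshold else LOW for v in voltages]


# A signal that was sent as 1, 0, 1, 0, 0, 1 and degraded along the line.
degraded = [4.1, 0.9, 3.6, 0.4, 1.2, 4.7]
print(to_bits(degraded))  # [1, 0, 1, 0, 0, 1]
print(reclock(degraded))  # [5.0, 0.0, 5.0, 0.0, 0.0, 5.0]
```

Even though none of the degraded voltages matches a clean level, every bit is still read correctly. Information would be lost only if a value drifted across the threshold before the signal was reclocked.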

Binary Representations

As we investigate the details of representing particular types of data, it’s important to remember the inherent nature of using binary. One bit can be either 0 or 1. There are no other possibilities. Therefore, one bit can represent only two things. For example, if we wanted to classify a food as being either sweet or sour, we would need only one bit to do it. We could say that if the bit is 0, the food is sweet, and if the bit is 1, the food is sour. But if we want to have additional classifications (such as spicy), one bit is not sufficient.

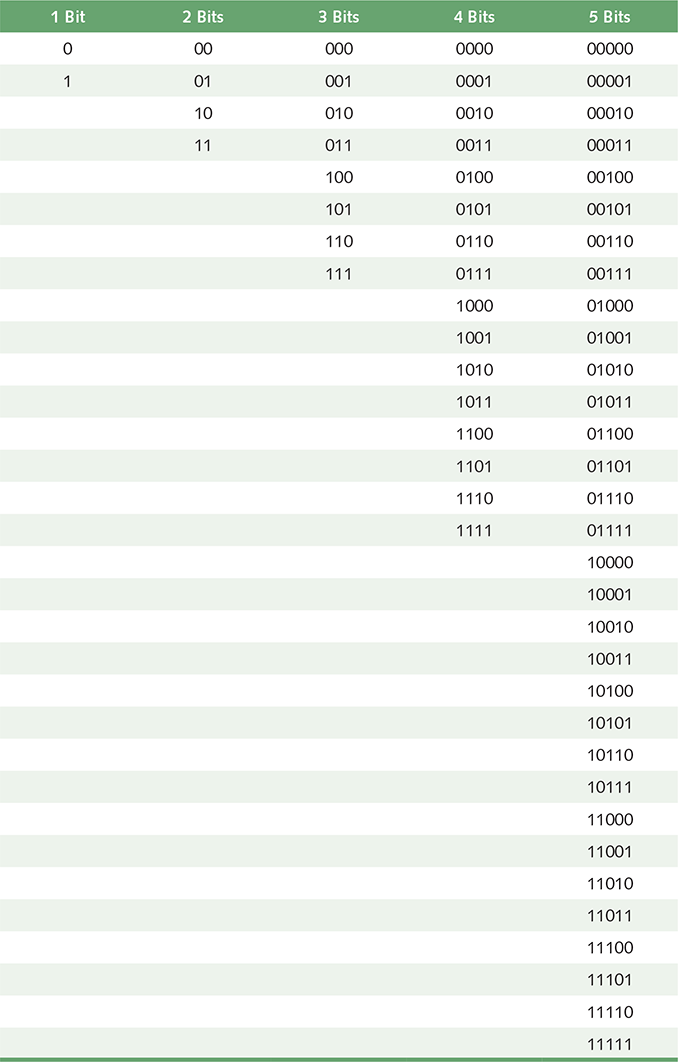

To represent more than two things, we need multiple bits. Two bits can represent four things because four combinations of 0 and 1 can be made from two bits: 00, 01, 10, and 11. For instance, if we want to represent which of four possible gears a car is in (park, drive, reverse, or neutral), we need only two bits: Park could be represented by 00, drive by 01, reverse by 10, and neutral by 11. The actual mapping between bit combinations and the thing each combination represents is sometimes irrelevant (00 could be used to represent reverse, if you prefer), although sometimes the mapping can be meaningful and important, as we discuss in later sections of this chapter.
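The gear example can be written as a small lookup table. This is a sketch using the mapping given above; the names `GEAR_CODES`, `encode`, and `decode` are our own.

```python
# The mapping from the text: two bits are enough for four gears.
GEAR_CODES = {"park": 0b00, "drive": 0b01, "reverse": 0b10, "neutral": 0b11}

# Reverse lookup, from bit pattern back to gear name.
CODE_TO_GEAR = {code: gear for gear, code in GEAR_CODES.items()}


def encode(gear: str) -> str:
    """Return the two-bit string that represents a gear."""
    return format(GEAR_CODES[gear], "02b")


def decode(bits: str) -> str:
    """Return the gear represented by a two-bit string."""
    return CODE_TO_GEAR[int(bits, 2)]


print(encode("reverse"))  # 10
print(decode("01"))       # drive
```

Swapping the values in `GEAR_CODES` would work just as well, provided the same table is used for both encoding and decoding.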

If we want to represent more than four things, we need more than two bits. For example, three bits can represent eight things because eight combinations of 0 and 1 can be made from three bits. Likewise, four bits can represent 16 things, five bits can represent 32 things, and so on. See FIGURE 3.4. In the figure, note that the bit combinations are simply counting in binary as you move down a column.

FIGURE 3.4 Bit combinations

In general, n bits can represent $2^n$ things because $2^n$ combinations of 0 and 1 can be made from n bits. Every time we increase the number of available bits by 1, we double the number of things we can represent.
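We can check this doubling by listing the combinations. The sketch below uses `itertools.product` from Python's standard library; the helper name `bit_combinations` is an assumption made for illustration.

```python
from itertools import product


def bit_combinations(n: int) -> list[str]:
    """Return every string of n bits, in binary counting order."""
    return ["".join(bits) for bits in product("01", repeat=n)]


print(bit_combinations(2))  # ['00', '01', '10', '11']

# The number of combinations doubles with each added bit.
for n in range(1, 6):
    print(n, len(bit_combinations(n)))  # 1 2, 2 4, 3 8, 4 16, 5 32
```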

Let’s turn the question around. How many bits do you need to represent, say, 25 unique things? Well, four bits wouldn’t be enough because four bits can represent only 16 things. We would have to use at least five bits, which would allow us to represent 32 things. Given that we need to represent only 25 things, some of the bit combinations would not have a valid interpretation.
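The same reasoning can be expressed as a short calculation. In this sketch, the function name `bits_needed` is hypothetical. It relies on Python's built-in `int.bit_length`, applied to the largest code value we would need (one less than the number of things, since the codes start at 0).

```python
def bits_needed(count: int) -> int:
    """Return the fewest bits that give at least `count` distinct combinations."""
    if count < 1:
        raise ValueError("count must be at least 1")
    # Codes run from 0 to count - 1, so we need enough bits for count - 1.
    return (count - 1).bit_length()


bits = bits_needed(25)
unused = 2 ** bits - 25

print(bits)    # 5
print(unused)  # 7 bit combinations have no valid interpretation
```

Note that 16 things still fit in four bits, while 17 things require five.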

Keep in mind that even though we may technically need only a certain minimum number of bits to represent a set of items, we may allocate more than that for the storage of data. There is a minimum number of bits that a computer architecture can address and move around at one time, and it is usually a power of 2, such as 8, 16, or 32 bits. Therefore, the storage given to any type of data is usually allocated in multiples of that value.
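Rounding up to a whole number of addressable units can be sketched as follows. The default unit of 8 bits is an assumption chosen for illustration, as is the function name `allocated_bits`. Real architectures and languages differ in the unit they use.

```python
def allocated_bits(bits_required: int, unit: int = 8) -> int:
    """Round bits_required up to the next multiple of the addressable unit."""
    units = -(-bits_required // unit)  # ceiling division without floats
    return units * unit


print(allocated_bits(5))       # 8: five bits still occupy one 8-bit unit
print(allocated_bits(20, 16))  # 32: twenty bits need two 16-bit units
```

So the 25 items from the earlier example, which need only five bits each, would likely occupy at least eight bits apiece on a machine with an 8-bit addressable unit.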