13.4 Neural Networks

As mentioned earlier, some artificial intelligence researchers focus on how the human brain actually works and try to construct computing devices that work in similar ways. An artificial neural network in a computer attempts to mimic the actions of the neural networks of the human body. Let’s first look at how a biological neural network works.

Biological Neural Networks

A neuron is a single cell that conducts a chemically based electrical signal. The human brain contains billions of neurons connected into a network. At any point in time a neuron is in either an excited state or an inhibited state. An excited neuron conducts a strong signal; an inhibited neuron conducts a weak signal. A series of connected neurons forms a pathway. The signal along a particular pathway is strengthened or weakened according to the state of the neurons it passes through. A series of excited neurons creates a strong pathway.

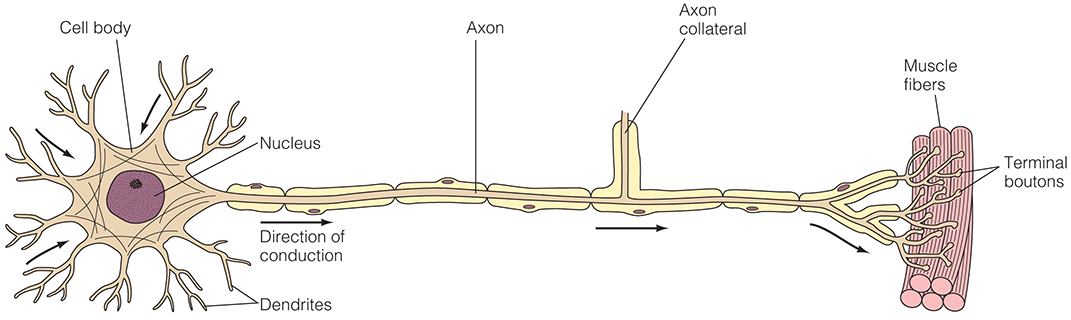

A biological neuron has multiple input tentacles called dendrites and one primary output tentacle called an axon. The dendrites of one neuron pick up the signals from the axons of other neurons to form the neural network. The gap between an axon and a dendrite is called a synapse. (See FIGURE 13.6.) The chemical composition of a synapse tempers the strength of its input signal. The output of a neuron on its axon is a function of all of its input signals.

FIGURE 13.6 A biological neuron

A neuron accepts multiple input signals and then controls the contribution of each signal based on the “importance” the corresponding synapse assigns to it. If enough of these weighted input signals are strong, the neuron enters an excited state and produces a strong output signal. If enough of the input signals are weak or are weakened by the weighting factor of that signal’s synapse, the neuron enters an inhibited state and produces a weak output signal.

Neurons fire, or pulsate, up to 1000 times per second, so the pathways along the neural nets are in a constant state of flux. The activity of our brain causes some pathways to strengthen and others to weaken. As we learn new things, new strong neural pathways form in our brain.

Artificial Neural Networks

Each processing element in an artificial neural network is analogous to a biological neuron. An element accepts a certain number of input values and produces a single output value of either 0 or 1. These input values come from the output of other elements in the network, so each input value is either 0 or 1. Associated with each input value is a numeric weight. The effective weight of the element is defined as the sum of the products of each input value and its associated weight.

Suppose an artificial neuron accepts three input values: v1, v2, and v3. Associated with each input value is a weight: w1, w2, and w3. The effective weight is therefore

v1 * w1 + v2 * w2 + v3 * w3

Each element has a numeric threshold value. The element compares the effective weight to this threshold value. If the effective weight exceeds the threshold, the unit produces an output value of 1. If it does not exceed the threshold, it produces an output value of 0.
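The computation and threshold comparison described above can be sketched in a few lines of Python. This is a minimal illustration rather than a definitive implementation; the function names `effective_weight` and `element_output`, and the sample weights and threshold, are hypothetical and chosen only for this example.

```python
def effective_weight(inputs, weights):
    """Sum of each input value multiplied by its associated weight."""
    if len(inputs) != len(weights):
        raise ValueError("each input value needs exactly one weight")
    return sum(v * w for v, w in zip(inputs, weights))


def element_output(inputs, weights, threshold):
    """Return 1 if the effective weight exceeds the threshold, otherwise 0."""
    return 1 if effective_weight(inputs, weights) > threshold else 0


# Three inputs v1, v2, v3 with made-up weights w1, w2, w3 and a made-up threshold.
# Effective weight: 1*2 + 0*5 + 1*3 = 5, which exceeds the threshold of 4.
print(element_output([1, 0, 1], [2, 5, 3], threshold=4))  # prints 1
```

Note that the comparison is strict: an effective weight exactly equal to the threshold does not exceed it, so the output is 0.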

This processing closely mirrors the activity of a biological neuron. The input values correspond to the signals passed in by the dendrites. The weight values correspond to the controlling effect of the synapse for each input signal. The computation and use of the threshold value correspond to the neuron producing a strong signal if “enough” of the weighted input signals are strong.

Let’s look at an actual example. In this case, we assume there are four inputs to the processing element. There are, therefore, four corresponding weight factors. Suppose the input values are 1, 1, 0, and 0; the corresponding weights are 4, -2, -5, and -2; and the threshold value for the element is 4. The effective weight is

1(4) + 1(-2) + 0(-5) + 0(-2)

or 2. Because the effective weight does not exceed the threshold value, the output of this element is 0.
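The arithmetic of this example can be checked with a short Python sketch. It assumes the weights are 4, -2, -5, and -2, which are the values consistent with the stated effective weight of 2; the variable names are illustrative only.

```python
inputs = [1, 1, 0, 0]
weights = [4, -2, -5, -2]  # assumed values; they reproduce the stated result of 2
threshold = 4

# Multiply each input by its weight and add up the products.
effective = sum(v * w for v, w in zip(inputs, weights))

# The element outputs 1 only if the effective weight exceeds the threshold.
output = 1 if effective > threshold else 0

print(effective, output)  # prints: 2 0
```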

Although the input values are either 0 or 1, the weights can be any value at all. They can even be negative. We’ve used integers for the weights and threshold values in our example, but they can be real numbers as well.

The output of each element is truly a function of all pieces of the puzzle. If the input signal is 0, its weight is irrelevant. If the input signal is 1, the magnitude of the weight, and whether it is positive or negative, greatly affects the effective weight. And no matter what effective weight is computed, it’s viewed relative to the threshold value of that element. That is, an effective weight of 15 may be enough for one element to produce an output of 1, but for another element it results in an output of 0.
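Both points can be seen in a small Python sketch. The helper name `fire` and all of the numbers here are assumptions made for illustration; they are not taken from the text beyond the effective weight of 15.

```python
def fire(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    effective = sum(v * w for v, w in zip(inputs, weights))
    return 1 if effective > threshold else 0


# An input of 0 makes its weight irrelevant: both elements see an
# effective weight of 15, whatever weight is attached to the second input.
a = fire([1, 0], [15, 100], threshold=10)
b = fire([1, 0], [15, -100], threshold=10)

# The same effective weight of 15 produces 0 in an element with a higher threshold.
c = fire([1, 0], [15, 100], threshold=20)

print(a, b, c)  # prints: 1 1 0
```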

The pathways established in an artificial neural net are a function of its individual processing elements. And the output of each processing element changes on the basis of the input signals, the weights, and/or the threshold values. But the input signals are really just output signals from other elements. Therefore, we affect the processing of a neural net by changing the weights and threshold value in individual processing elements.
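To make the idea of elements feeding other elements concrete, here is a hedged Python sketch of a tiny three-element network. The layout, the hand-picked weights and thresholds, and the names `element_output` and `tiny_network` are all assumptions for illustration. With these particular values the network outputs 1 exactly when its two inputs differ.

```python
def element_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    effective = sum(v * w for v, w in zip(inputs, weights))
    return 1 if effective > threshold else 0


def tiny_network(a, b):
    """Two first-layer elements whose outputs are the inputs of a third element."""
    # Outputs 1 when at least one input is 1.
    either = element_output([a, b], [1, 1], threshold=0)
    # Outputs 1 unless both inputs are 1 (negative weights, negative threshold).
    not_both = element_output([a, b], [-1, -1], threshold=-2)
    # The final element's inputs are simply the outputs of the other two.
    return element_output([either, not_both], [1, 1], threshold=1)


for a in (0, 1):
    for b in (0, 1):
        print(a, b, tiny_network(a, b))
```

Changing any single weight or threshold in this sketch changes which input combinations produce a 1, which is the sense in which the network's behavior is controlled through its individual elements.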

The process of adjusting the weights and threshold values in a neural net is called training. A neural net can be trained to produce whatever results are required. Initially, a neural net may be set up with random weights, threshold values, and initial inputs. The results are compared to the desired results and changes are made. This process continues until the desired results are achieved.
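The compare-and-adjust cycle can be sketched for a single element as follows. The text does not name a specific adjustment method; this example uses the classic perceptron learning rule as one possible choice. The function names, the learning rate, and the training data (a two-input element that should output 1 only when both inputs are 1) are assumptions for illustration. The sketch starts from zeros rather than random values so that the run is repeatable.

```python
def element_output(inputs, weights, threshold):
    """Return 1 if the weighted sum of the inputs exceeds the threshold, else 0."""
    effective = sum(v * w for v, w in zip(inputs, weights))
    return 1 if effective > threshold else 0


def train(samples, n_inputs, rate=1, max_epochs=100):
    """Adjust weights and threshold until every sample gives the desired output."""
    weights = [0] * n_inputs  # zeros instead of random values, for repeatability
    threshold = 0
    for _ in range(max_epochs):
        mistakes = 0
        for inputs, desired in samples:
            # Compare the actual result to the desired result.
            error = desired - element_output(inputs, weights, threshold)
            if error != 0:
                mistakes += 1
                # Perceptron rule: nudge the weights of active inputs toward the
                # desired output and move the threshold the opposite way.
                weights = [w + rate * error * v for w, v in zip(weights, inputs)]
                threshold -= rate * error
        if mistakes == 0:  # desired results achieved for every sample
            break
    return weights, threshold


# Desired behavior: output 1 only when both inputs are 1.
samples = [([0, 0], 0), ([0, 1], 0), ([1, 0], 0), ([1, 1], 1)]
weights, threshold = train(samples, n_inputs=2)
print([element_output(x, weights, threshold) for x, _ in samples])  # prints [0, 0, 0, 1]
```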

Consider the problem we posed at the beginning of this chapter: Find a cat in a photograph. Suppose a neural net is used to address this problem, using one output value per pixel. Our goal is to produce an output value of 1 for every pixel that contributes to the image of the cat, and to produce a 0 if it does not. The input values for the network could come from some representation of the color of the pixels. We then train the network using multiple pictures containing cats, reinforcing weights and thresholds that lead to the desired (correct) output.

Think about how complicated this problem is! Cats come in all shapes, sizes, and colors. They can be oriented in a picture in thousands of ways. They might blend into their background (in the picture) or they might not. A neural net for this problem would be incredibly large, taking all kinds of situations into account. The more training we give the network, however, the more likely it will produce accurate results in the future.

What else are neural nets good for? They have been used successfully in thousands of application areas, in both business and scientific endeavors. They can be used to determine whether an applicant should be given a mortgage. They can be used in optical character recognition, allowing a computer to “read” a printed document. They can even be used to detect plastic explosives in luggage at airports.

The versatility of neural nets lies in the fact that there is no inherent meaning in the weights and threshold values of the network. Their meaning comes from the interpretation we apply to them.