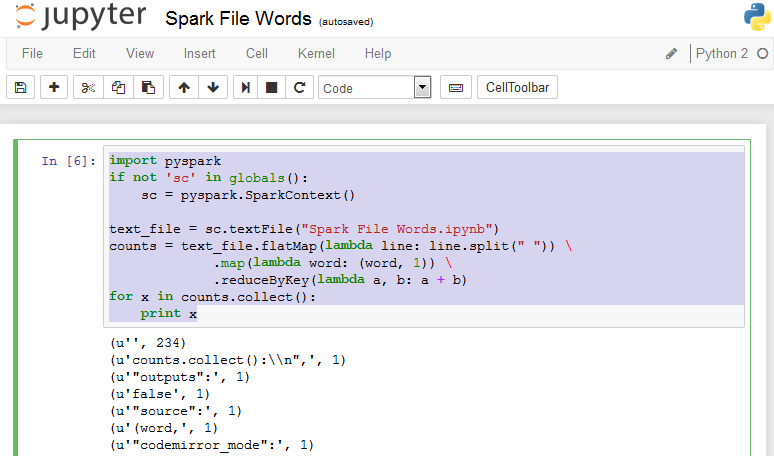

We can use MapReduce in another example where we get the word counts from a file. A standard problem, but we use MapReduce to do most of the heavy lifting. We can use the source code for this example. We can use a script similar to this to count the word occurrences in a file:

import pyspark

if not 'sc' in globals():

sc = pyspark.SparkContext()

text_file = sc.textFile("Spark File Words.ipynb")

counts = text_file.flatMap(lambda line: line.split(" "))

.map(lambda word: (word, 1))

.reduceByKey(lambda a, b: a + b)

for x in counts.collect():

print x

Then we load the text file into memory.

It is assumed to be massive and the contents distributed over many handlers.

Once the file is loaded we split each line into words, and then use a lambda function to tick off each occurrence of a word. The code is truly creating a new record for each word occurrence, such as at appears 1, at appears 1. For example, if the word at appears twice each occurrence would have a record added like at appears 1. The idea is to not aggregate results yet, just record the appearances that we see. The idea is that this process could be split over multiple processors where each processor generates these low-level information bits. We are not concerned with optimizing this process at all.

Once we have all of these records we reduce/summarize the record set according to the word occurrences mentioned.

The counts object is also RDD in Spark. The last for loop runs a collect() against the RDD. As mentioned, this RDD could be distributed among many nodes. The collect() function pulls in all copies of the RDD into one location. Then we loop through each record.

When we run this in Jupyter we see something akin to this display:

The listing is abbreviated as the list of words continues for some time.