Now that we know how to interact with the data, let's build a neural network-based optical character-recognition system.

- Create a new Python file, and import the following packages:

      import numpy as np
      import neurolab as nl

- Define the input filename:

      # Input file
      input_file = 'letter.data'

- Neural networks that deal with large amounts of data take a long time to train. To demonstrate how to build this system, we will load only 20 datapoints:

      # Number of datapoints to load from the input file
      num_datapoints = 20

- If you look at the data, you will see that there are seven distinct characters in the first 20 lines. Let's define them:

      # Distinct characters
      orig_labels = 'omandig'

      # Number of distinct characters
      num_output = len(orig_labels)

- We will use 90% of the data for training and the remaining 10% for testing. Define the training and testing parameters:

      # Training and testing parameters
      num_train = int(0.9 * num_datapoints)
      num_test = num_datapoints - num_train
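As a quick check of these parameters, the following minimal sketch works out the split sizes for 20 datapoints and shows which rows end up in each part. The `samples` array is a synthetic stand-in introduced only for illustration; it is not read from `letter.data`.

```python
import numpy as np

num_datapoints = 20
num_train = int(0.9 * num_datapoints)
num_test = num_datapoints - num_train

# Synthetic stand-in for the dataset: one row per datapoint (assumption)
samples = np.arange(num_datapoints).reshape(num_datapoints, 1)

# The first num_train rows are used for training, the rest for testing
train_part = samples[:num_train]
test_part = samples[num_train:]

print("Training rows:", num_train)
print("Testing rows:", num_test)
print("Row indices held out for testing:", test_part.ravel())
```

Because the split is positional, the test rows are always the last `num_test` rows that were loaded, so any lookup of their labels has to start at index `num_train`.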

- Define the starting and ending indices used to extract the character vector from each line of the dataset file:

      # Define dataset extraction parameters
      start_index = 6
      end_index = -1
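To see what these two indices select, here is a small sketch on a made-up line. The layout is an assumption inferred from the values used in this recipe: six tab-separated metadata fields (with the character label in the second one), then the pixel values, then a trailing tab before the newline. The sample values themselves are hypothetical and much shorter than a real line in `letter.data`.

```python
# Hypothetical line in the assumed layout: six metadata fields,
# then pixel values, then a trailing tab before the newline.
metadata = ['1', 'o', '2', '1', '1', '0']
pixels = ['0', '1', '1', '0', '1', '0', '0', '1']  # real lines carry more values
sample_line = '\t'.join(metadata + pixels) + '\t\n'

start_index = 6
end_index = -1

# Split on tabs; the last field is the leftover newline
fields = sample_line.split('\t')

# The label sits in the second field
label = fields[1]

# Skip the metadata at the front and the leftover newline at the end
pixel_values = [float(x) for x in fields[start_index:end_index]]

print("Label:", label)
print("Number of pixel values:", len(pixel_values))
```

Under this assumption, `end_index = -1` exists only to drop the final field that holds the line terminator.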

- Create the dataset:

      # Creating the dataset
      data = []
      labels = []
      with open(input_file, 'r') as f:
          for line in f.readlines():
              # Split the line tabwise
              list_vals = line.split('\t')

- Add an error check to see whether the characters are in our list of labels:

              # If the label is not in our ground truth labels, skip it
              if list_vals[1] not in orig_labels:
                  continue

- Extract the label, and append it to the main list:

              # Extract the label and append it to the main list
              label = np.zeros((num_output, 1))
              label[orig_labels.index(list_vals[1])] = 1
              labels.append(label)

- Extract the character, and append it to the main list:

              # Extract the character vector and append it to the main list
              cur_char = np.array([float(x) for x in list_vals[start_index:end_index]])
              data.append(cur_char)

- Exit the loop once we have enough data:

              # Exit the loop once the required dataset has been loaded
              if len(data) >= num_datapoints:
                  break

- Convert this data into NumPy arrays:

      # Convert data and labels to numpy arrays (data as floats)
      data = np.asarray(data, dtype=float)
      labels = np.array(labels).reshape(num_datapoints, num_output)
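The label vectors built in the loop are one-hot encodings: a vector of zeros with a single 1 at the position of the character in `orig_labels`. The sketch below isolates that idea. The helper names `encode` and `decode` are hypothetical and are not part of the recipe's code.

```python
import numpy as np

orig_labels = 'omandig'
num_output = len(orig_labels)

def encode(char):
    """Return a one-hot vector with a 1 at the character's position."""
    vec = np.zeros(num_output)
    vec[orig_labels.index(char)] = 1
    return vec

def decode(vec):
    """Return the character whose position holds the largest value."""
    return orig_labels[int(np.argmax(vec))]

encoded = encode('m')
print(encoded)
print(decode(encoded))

# A network output is rarely an exact one-hot vector;
# decoding simply picks the position with the highest score.
noisy_output = np.array([0.1, 0.2, 0.1, 0.9, 0.0, 0.3, 0.2])
print(decode(noisy_output))
```

Decoding with `np.argmax` is the same operation the recipe uses later to turn both the stored labels and the network's outputs back into characters.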

- Extract the number of dimensions in our data:

      # Extract number of dimensions
      num_dims = len(data[0])

- Train the neural network until

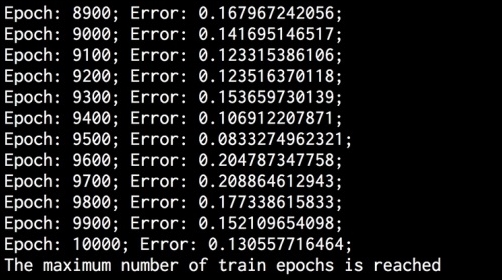

10,000epochs:# Create and train neural network net = nl.net.newff([[0, 1] for _ in range(len(data[0]))], [128, 16, num_output]) net.trainf = nl.train.train_gd error = net.train(data[:num_train,:], labels[:num_train,:], epochs=10000, show=100, goal=0.01) - Predict the output for test inputs:

      # Predict the output for test inputs
      predicted_output = net.sim(data[num_train:, :])
      print("\nTesting on unknown data:")
      for i in range(num_test):
          # Test labels start at row num_train, matching the rows passed to net.sim
          print("\nOriginal: " + orig_labels[np.argmax(labels[num_train + i])])
          print("Predicted: " + orig_labels[np.argmax(predicted_output[i])])

- The full code is in the ocr.py file that's already provided to you. If you run this code, you will see the following on your Terminal at the end of training:

The output of the neural network is shown in the following screenshot:

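Printing each prediction works for two test samples, but a single accuracy figure is easier to read once more data is loaded. The following is a minimal sketch of that calculation, assuming the same array layout as in the recipe. The label indices and the `predicted_output` scores are invented for illustration and are not results from the trained network.

```python
import numpy as np

orig_labels = 'omandig'
num_train = 18

# Hypothetical one-hot labels for 20 datapoints (not taken from letter.data)
label_indices = np.array([0, 1, 1, 2, 3, 4, 3, 5, 6, 1,
                          1, 2, 3, 4, 3, 5, 0, 1, 2, 3])
labels = np.eye(len(orig_labels))[label_indices]

# Hypothetical network outputs for the two test rows
predicted_output = np.array([
    [0.1, 0.0, 0.8, 0.1, 0.0, 0.0, 0.1],  # highest score at index 2
    [0.2, 0.1, 0.1, 0.3, 0.0, 0.6, 0.0],  # highest score at index 5
])

# The test rows are the ones from num_train onward
true_idx = np.argmax(labels[num_train:], axis=1)
pred_idx = np.argmax(predicted_output, axis=1)

for t, p in zip(true_idx, pred_idx):
    print("Original: " + orig_labels[t] + "  Predicted: " + orig_labels[p])

# Fraction of test rows where the predicted character matches the label
accuracy = np.mean(true_idx == pred_idx)
print("Accuracy:", accuracy)
```

With only 20 datapoints the figure is too coarse to say much about the model, so treat it as a way to check the pipeline rather than as a measure of quality.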