According to the dataset description, the glyphs are scanned with an OCR reader and automatically converted into pixels. Consequently, all 16 statistical attributes (in figure 2) are recorded as well. The concentration of black pixels across various regions of the box provides a way to differentiate the 26 letters, either with OCR or with a trained machine learning algorithm.

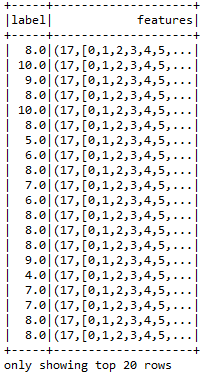
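To make the idea of black-pixel concentration concrete, here is a toy sketch in plain Scala. It overlays a grid on a binary glyph bitmap and reports the fraction of black pixels in each cell. The names `PixelConcentration` and `regionDensities` are hypothetical, and these are not the dataset's actual 16 attribute definitions, only an illustration of region-based density features.

```scala
object PixelConcentration {
  // Fraction of black (true) pixels in each cell of a rows x cols grid laid
  // over the glyph bitmap; a toy version of region-based density features.
  def regionDensities(bitmap: Array[Array[Boolean]], rows: Int, cols: Int): Array[Double] = {
    require(bitmap.nonEmpty && bitmap.head.nonEmpty && rows > 0 && cols > 0)
    val h = bitmap.length
    val w = bitmap.head.length
    (for {
      r <- 0 until rows
      c <- 0 until cols
    } yield {
      // Pixel ranges covered by grid cell (r, c)
      val ys = (r * h / rows) until ((r + 1) * h / rows)
      val xs = (c * w / cols) until ((c + 1) * w / cols)
      val cells = for (y <- ys; x <- xs) yield bitmap(y)(x)
      if (cells.isEmpty) 0.0 else cells.count(b => b).toDouble / cells.size
    }).toArray
  }
}
```

Different letters tend to produce different density patterns over such a grid, which is the kind of signal a classifier can learn from.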

In most practical cases, we might need to normalize the data across all feature dimensions. In addition, we need to convert the current tab-separated OCR data into LIBSVM format to make the training step easier. I'm therefore assuming you have downloaded the data and converted it into LIBSVM format using your own script. The resulting dataset in LIBSVM format, consisting of labels and features, is shown in the following figure:
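If you still need such a conversion script, here is a minimal sketch in plain Scala. It assumes each raw line starts with the letter followed by the 16 integer attributes, separated by commas, tabs, or spaces; the names `LetterToLibsvm` and `toLibsvm` are hypothetical. It maps the letter to a zero-based numeric label (A becomes 0, Z becomes 25), since Spark's classifiers expect labels in the range 0 to numClasses - 1, and writes the attributes as 1-based `index:value` pairs.

```scala
object LetterToLibsvm {
  // Converts one raw record such as "T,2,8,3,..." into a LIBSVM line such as
  // "19 1:2 2:8 3:3 ...". Assumes the first field is a letter A-Z (any case).
  def toLibsvm(line: String): String = {
    val fields = line.trim.split("[,\\s]+")
    // Zero-based class index: 'A' -> 0, ..., 'Z' -> 25
    val label = fields.head.head.toUpper - 'A'
    // LIBSVM feature indices are 1-based; zero values are kept explicitly here
    val features = fields.tail.zipWithIndex.map { case (value, i) => s"${i + 1}:$value" }
    (label.toString +: features).mkString(" ")
  }

  def main(args: Array[String]): Unit =
    println(toLibsvm("T,2,8,3,5,1,8,13,0,6,6,10,8,0,8,0,8"))
}
```

Applying `toLibsvm` to every line of the raw file and writing the results out produces a file that Spark's `libsvm` data source can read.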

Now let's dive into the example. The example that I will be demonstrating has 11 steps including data parsing, Spark session creation, model building, and model evaluation.

Step 1. Creating Spark session - Create a Spark session by specifying master URL, Spark SQL warehouse, and application name as follows:

```scala
import org.apache.spark.sql.SparkSession

val spark = SparkSession.builder
  .master("local[*]") // change accordingly
  .config("spark.sql.warehouse.dir", "/home/exp/")
  .appName("OneVsRestExample")
  .getOrCreate()
```

Step 2. Loading, parsing, and creating the data frame - Load the data file from HDFS or the local disk, create a data frame, and finally show the data frame structure as follows:

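```scala
// Spark's built-in "libsvm" data source yields a DataFrame with a "label"
// column (double) and a "features" column (vector)
val inputData = spark.read.format("libsvm")
  .load("data/Letterdata_libsvm.data")

inputData.show()
```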
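To see what the reader does with each line, here is a simplified sketch in plain Scala (the names `LibsvmParse`, `Record`, and `parse` are hypothetical, and this is not Spark's own parser). The first token becomes the label, and each 1-based `index:value` pair becomes a 0-based position in a sparse feature vector, which is how the `features` column is indexed.

```scala
object LibsvmParse {
  // Sparse representation of one LIBSVM record
  final case class Record(label: Double, indices: Array[Int], values: Array[Double])

  def parse(line: String): Record = {
    val tokens = line.trim.split("\\s+")
    val pairs = tokens.tail.map { token =>
      val parts = token.split(":")
      // Shift the 1-based LIBSVM index to a 0-based vector position
      (parts(0).toInt - 1, parts(1).toDouble)
    }
    Record(tokens.head.toDouble, pairs.map(_._1), pairs.map(_._2))
  }
}
```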

Step 3. Generating the training and test sets - Let's split the data, using 70% for training and 30% for testing:

```scala
val Array(train, test) = inputData.randomSplit(Array(0.7, 0.3))
```
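Conceptually, a weighted random split assigns each row independently, so the 70/30 proportions are only approximate. The plain-Scala sketch below illustrates this under a simplifying assumption (one uniform draw per row); the names `SplitSketch` and `randomSplit` are hypothetical. Spark's `randomSplit` also has an overload that takes a seed, for example `inputData.randomSplit(Array(0.7, 0.3), seed = 42L)`, which makes the split reproducible across runs.

```scala
import scala.util.Random

object SplitSketch {
  // Each row draws a uniform number in [0, 1) and goes to the training set if
  // the draw falls below trainWeight; otherwise it goes to the test set.
  def randomSplit[A](rows: Seq[A], trainWeight: Double, seed: Long): (Seq[A], Seq[A]) = {
    val rng = new Random(seed)
    // Draw once per row so every row lands in exactly one split
    val draws = rows.map(row => (row, rng.nextDouble()))
    val train = draws.collect { case (row, u) if u < trainWeight => row }
    val test = draws.collect { case (row, u) if u >= trainWeight => row }
    (train, test)
  }
}
```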

Step 4. Instantiate the base classifier - Here the base classifier is the binary classifier that the one-vs-the-rest strategy trains once per class. In this case, it is Logistic Regression, which can be instantiated by specifying parameters such as the maximum number of iterations, the convergence tolerance, the regularization parameter, and the Elastic Net mixing parameter.

Note that Logistic Regression is the appropriate regression analysis to conduct when the dependent variable is dichotomous (binary). Like all regression analyses, it is a predictive analysis: it is used to describe data and to explain the relationship between one dependent binary variable and one or more nominal, ordinal, interval, or ratio-level independent variables.
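The core of a logistic regression model is small: a linear score (margin) passed through the logistic (sigmoid) function, which turns it into a probability for the positive class. Here is a minimal plain-Scala sketch; the names `LogisticSketch`, `sigmoid`, and `probability` are hypothetical.

```scala
object LogisticSketch {
  // Logistic function: maps any real-valued margin to a probability in (0, 1)
  def sigmoid(z: Double): Double = 1.0 / (1.0 + math.exp(-z))

  // P(y = 1 | x) for coefficients w and intercept b: sigmoid(w . x + b)
  def probability(w: Array[Double], b: Double, x: Array[Double]): Double = {
    val margin = w.zip(x).map { case (wi, xi) => wi * xi }.sum + b
    sigmoid(margin)
  }
}
```

A margin of 0 corresponds to a probability of exactly 0.5, so with a 0.5 threshold the sign of the margin decides the predicted class.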

In brief, the following parameters are used to train a Logistic Regression classifier:

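- MaxIter: The maximum number of iterations the optimizer may run (default 100). Higher values give the optimizer more room to converge, at the cost of longer training.

- Tol: The convergence tolerance for the stopping criterion. Smaller values make the optimizer run longer before it considers the model converged. The default value is 1E-6.

- FitIntercept: Whether to fit an intercept (bias) term in the decision function (default true).

- Standardization: A Boolean value that controls whether the features are standardized before the model is fitted (default true). The learned coefficients are always reported on the original scale.

- AggregationDepth: The depth used for tree aggregation when combining partial results across partitions (default 2). Larger values can help when there are many features or many partitions.

- RegParam: The regularization parameter, which controls the overall strength of the penalty (default 0.0). Small values often work well, but the best value depends on the data.

- ElasticNetParam: The Elastic Net mixing parameter in [0, 1]: 0 gives a pure L2 penalty, 1 gives a pure L1 penalty, and values in between mix the two (default 0.0).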
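RegParam and ElasticNetParam work together. Spark's documentation writes the elastic-net penalty as regParam × (elasticNetParam × ‖w‖₁ + (1 − elasticNetParam) / 2 × ‖w‖₂²). The plain-Scala sketch below simply evaluates that formula for a coefficient vector; the names `ElasticNetPenalty` and `penalty` are hypothetical, and the intercept is not part of `w`.

```scala
object ElasticNetPenalty {
  // regParam * (elasticNetParam * ||w||_1 + (1 - elasticNetParam) / 2 * ||w||_2^2)
  // elasticNetParam = 0 gives pure L2 (ridge), 1 gives pure L1 (lasso).
  def penalty(w: Array[Double], regParam: Double, elasticNetParam: Double): Double = {
    val l1 = w.map(x => math.abs(x)).sum
    val l2Squared = w.map(x => x * x).sum
    regParam * (elasticNetParam * l1 + (1 - elasticNetParam) / 2 * l2Squared)
  }
}
```

With the values used in the next step (regParam 0.0001, elasticNetParam 0.01), the penalty is very weak and almost entirely L2.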

You can set fitIntercept to true or false depending on your problem type and dataset properties. The classifier in this example is configured as follows:

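```scala
import org.apache.spark.ml.classification.LogisticRegression

// Binary base classifier; OneVsRest trains one copy of it per class
val classifier = new LogisticRegression()
  .setMaxIter(500)
  .setTol(1E-4)
  .setFitIntercept(true)
  .setStandardization(true)
  .setAggregationDepth(50)
  .setRegParam(0.0001)
  .setElasticNetParam(0.01)
```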

Step 5. Instantiate the OVTR classifier - Now instantiate an OVTR (one-vs-the-rest) classifier to reduce the multiclass classification problem to multiple binary classification problems, one per class, as follows:

```scala
import org.apache.spark.ml.classification.OneVsRest

val ovr = new OneVsRest().setClassifier(classifier)
```

Here, `classifier` is the Logistic Regression estimator from step 4. Now it's time to train the model.
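Conceptually, the OVTR strategy does two things, sketched below in plain Scala (the names `OneVsRestSketch`, `binaryLabels`, and `predict` are hypothetical, and this is not Spark's internal code). During training, it builds one binary problem per class by relabeling that class as 1.0 and every other class as 0.0. At prediction time, it asks each binary model for a score and picks the class whose model is most confident.

```scala
object OneVsRestSketch {
  // Training side: for class k, label an example 1.0 if it belongs to k and
  // 0.0 otherwise, giving one binary problem per class.
  def binaryLabels(labels: Seq[Double], k: Int): Seq[Double] =
    labels.map(l => if (l == k.toDouble) 1.0 else 0.0)

  // Prediction side: scores(k) is the positive-class score from the k-th
  // binary model; the class with the highest score wins.
  def predict(scores: Seq[Double]): Int = {
    require(scores.nonEmpty)
    scores.zipWithIndex.maxBy(_._1)._2
  }
}
```

For the letter data this means 26 binary Logistic Regression models are trained, one per letter.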

Step 6. Train the multiclass model - Let's train the model using the training set as follows:

```scala
val ovrModel = ovr.fit(train)
```

Step 7. Score the model on the test set - We can score the model on test data using the transformer (that is, ovrModel) as follows:

```scala
val predictions = ovrModel.transform(test)
```

Step 8. Evaluate the model - In this step, we compare the predicted labels with the true labels of the characters (the first column of the LIBSVM data). Before that, we need to instantiate evaluators to compute classification performance metrics such as accuracy, precision, recall, and F1 measure as follows:

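```scala
import org.apache.spark.ml.evaluation.MulticlassClassificationEvaluator

// setMetricName mutates the evaluator and returns the same instance, so
// reusing one evaluator would leave all four pointing at the last metric.
// Create a separate evaluator per metric instead.
def evaluatorFor(metric: String): MulticlassClassificationEvaluator =
  new MulticlassClassificationEvaluator()
    .setLabelCol("label")
    .setPredictionCol("prediction")
    .setMetricName(metric)

val evaluator1 = evaluatorFor("accuracy")
val evaluator2 = evaluatorFor("weightedPrecision")
val evaluator3 = evaluatorFor("weightedRecall")
val evaluator4 = evaluatorFor("f1")
```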

Step 9. Compute performance metrics - Compute the classification accuracy, precision, recall, f1 measure, and error on test data as follows:

```scala
val accuracy = evaluator1.evaluate(predictions)
val precision = evaluator2.evaluate(predictions)
val recall = evaluator3.evaluate(predictions)
val f1 = evaluator4.evaluate(predictions)
```
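For reference, the plain-Scala sketch below computes the same four metrics from (label, prediction) pairs, assuming the weighting used by Spark's multiclass metrics: each per-class score is weighted by that class's share of the true labels, a class that is never predicted gets precision 0, and "f1" is the weighted F1. The names `MetricsSketch`, `Metrics`, and `compute` are hypothetical. Because weighted recall reduces to the total number of true positives divided by the number of rows, it always equals accuracy, which is why those two values coincide in the output shown in step 10.

```scala
object MetricsSketch {
  final case class Metrics(accuracy: Double, weightedPrecision: Double,
                           weightedRecall: Double, weightedF1: Double)

  def compute(labels: Seq[Double], predictions: Seq[Double]): Metrics = {
    require(labels.size == predictions.size && labels.nonEmpty)
    val pairs = labels.zip(predictions)
    val n = pairs.size.toDouble

    // (weight, precision, recall, f1) for every class present in the labels
    val perClass = labels.distinct.map { c =>
      val tp = pairs.count { case (l, p) => l == c && p == c }.toDouble
      val predictedC = pairs.count(_._2 == c).toDouble
      val actualC = pairs.count(_._1 == c).toDouble
      val precision = if (predictedC == 0) 0.0 else tp / predictedC
      val recall = tp / actualC
      val f1 = if (precision + recall == 0) 0.0 else 2 * precision * recall / (precision + recall)
      (actualC / n, precision, recall, f1)
    }

    // Average a per-class score, weighted by class frequency in the labels
    def weighted(f: ((Double, Double, Double, Double)) => Double): Double =
      perClass.map(t => t._1 * f(t)).sum

    Metrics(
      accuracy = pairs.count { case (l, p) => l == p } / n,
      weightedPrecision = weighted(_._2),
      weightedRecall = weighted(_._3),
      weightedF1 = weighted(_._4)
    )
  }
}
```

The test error printed in step 10 is simply 1 minus the accuracy.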

Step 10. Print the performance metrics:

```scala
println("Accuracy = " + accuracy)
println("Precision = " + precision)
println("Recall = " + recall)
println("F1 = " + f1)
println(s"Test Error = ${1 - accuracy}")
```

You should observe values similar to the following (the exact numbers vary between runs because the split in step 3 is random):

```text
Accuracy = 0.5217246545696688
Precision = 0.488360500637862
Recall = 0.5217246545696688
F1 = 0.4695649096879411
Test Error = 0.47827534543033123
```

Step 11. Stop the Spark session:

```scala
spark.stop() // Stop Spark session
```

This way, we can reduce a multinomial classification problem to multiple binary classification problems. However, the metrics printed in step 10 show that the classification accuracy is poor (about 52%). There may be several reasons, such as the nature of the dataset used to train the model. Even more importantly, we did not tune the hyperparameters while training the Logistic Regression model. Moreover, the OVTR transformation itself may cost some accuracy, since each binary model is trained independently and their scores are not necessarily comparable with one another.
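Hyperparameter tuning is usually done with a cross-validated grid search: for every candidate parameter setting, train on k - 1 folds, score on the held-out fold, and keep the setting with the best average score. Spark ML provides `ParamGridBuilder` and `CrossValidator` (in `org.apache.spark.ml.tuning`) for this. The plain-Scala sketch below only illustrates the procedure; the names `GridSearchSketch` and `search` are hypothetical, `fitAndScore` stands in for "train with parameters p on the training rows and return a metric on the validation rows", and folds are assigned round-robin without shuffling for simplicity.

```scala
object GridSearchSketch {
  // k-fold cross-validated grid search over a sequence of candidate settings.
  // Returns the best setting and its mean validation score (higher is better).
  def search[P](grid: Seq[P], numRows: Int, k: Int)
               (fitAndScore: (P, Seq[Int], Seq[Int]) => Double): (P, Double) = {
    require(grid.nonEmpty && k >= 2 && numRows >= k)
    // Assign row i to fold i % k (a real implementation would shuffle first)
    val folds = (0 until numRows).groupBy(_ % k).values.toSeq
    val scored = grid.map { p =>
      val scores = folds.map { validIdx =>
        val validSet = validIdx.toSet
        val trainIdx = (0 until numRows).filterNot(validSet)
        fitAndScore(p, trainIdx, validIdx)
      }
      (p, scores.sum / k)
    }
    scored.maxBy(_._2)
  }
}
```

In this example, the grid would range over values such as regParam and elasticNetParam of the Logistic Regression base classifier, with accuracy or F1 as the score.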