Spark Streaming is not the first streaming architecture to come into existence. Several technologies have existenced over time to deal with the real-time processing needs of various business use cases. Twitter Storm was one of the first popular stream processing technologies out there and was in used by many organizations fulfilling the needs of many businesses.

Apache Spark comes with a streaming library, which has rapidly evolved to be the most widely used technology. Spark Streaming has some distinct advantages over the other technologies, the first and foremost being the tight integration between Spark Streaming APIs and the Spark core APIs making building a dual purpose real-time and batch analytical platform feasible and efficient than otherwise. Spark Streaming also integrates with Spark ML and Spark SQL, as well as GraphX, making it the most powerful stream processing technology that can serve many unique and complex use cases. In this section, we will look deeper into what Spark Streaming is all about.

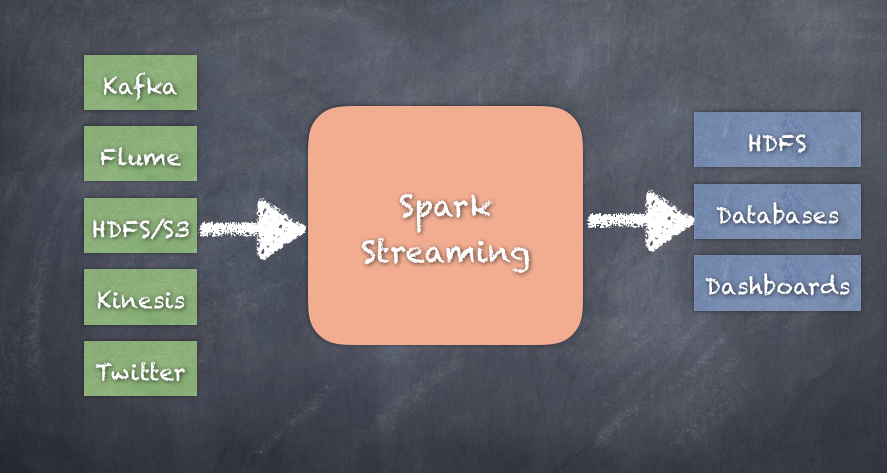

Spark Streaming supports several input sources and can write results to several sinks.

While Flink, Heron (successor to Twitter Storm), Samza, and so on all handle events as they are collected with minimal latency, Spark Streaming consumes continuous streams of data and then processes the collected data in the form of micro-batches. The size of the micro-batch can be as low as 500 milliseconds but usually cannot go lower than that.

The way streaming works are by creating batches of events at regular time intervals as per configuration and delivering the micro-batches of data at every specified interval for further processing.

Just like SparkContext, Spark Streaming has a StreamingContext, which is the main entry point for the streaming job/application. StreamingContext is dependent on SparkContext. In fact, the SparkContext can be directly used in the streaming job. The StreamingContext is similar to the SparkContext, except that StreamingContext also requires the program to specify the time interval or duration of the batching interval, which can be in milliseconds or minutes.