A broadcast variable enables a Spark developer to keep a read-only copy of an instance or class variable cached on each driver program, rather than transferring a copy of its own with the dependent tasks. However, an explicit creation of a broadcast variable is useful only when tasks across multiple stages need the same data in deserialize form.

In Spark application development, using the broadcasting option of SparkContext can reduce the size of each serialized task greatly. This also helps to reduce the cost of initiating a Spark job in a cluster. If you have a certain task in your Spark job that uses large objects from the driver program, you should turn it into a broadcast variable.

To use a broadcast variable in a Spark application, you can instantiate it using SparkContext.broadcast. Later on, use the value method from the class to access the shared value as follows:

val m = 5

val bv = sc.broadcast(m)

Output/log: bv: org.apache.spark.broadcast.Broadcast[Int] = Broadcast(0)

bv.value()

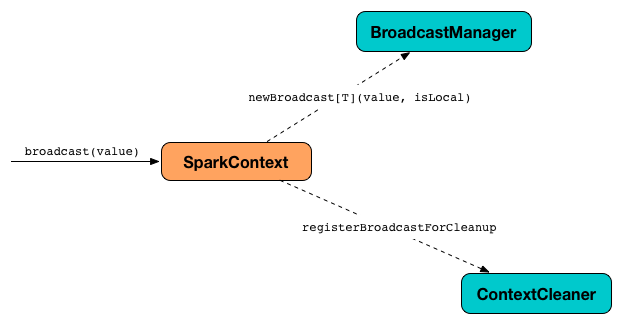

The Broadcast feature of Spark uses the SparkContext to create broadcast values. After that, the BroadcastManager and ContextCleaner are used to control their life cycle, as shown in the following figure:

Spark application in the driver program automatically prints the serialized size of each task on the driver. Therefore, you can decide whether your tasks are too large to make it parallel. If your task is larger than 20 KB, it's probably worth optimizing.