In this recipe, we will use OpenStack-Ansible to deploy the Cinder service. We assume that your environment has been deployed using the recipes described in Chapter 1, Installing OpenStack with Ansible.

To use Cinder volumes with LVM and iSCSI, you will need a host (or hosts) running Ubuntu 16.04. Additionally, you will need to ensure that the volume hosts are manageable by your OpenStack-Ansible deployment host.

The following is required:

- An openrc file with appropriate credentials for the environment
- Access to the openstack-ansible deployment host
- A dedicated server to provide Cinder volumes to instances:
  - An LVM volume group, specifically named cinder-volumes
  - An IP address accessible from the deployment host

If you are using the lab environment that accompanies this book, cinder-volume is deployed to a host with the following details:

- cinder-volume API service host IP: 172.29.236.10
- cinder-volume service host IP: OpenStack management calls over 172.29.236.100 and storage traffic over 172.29.244.100

To deploy the cinder-volume service, first we will create a YAML file to describe how to deploy Cinder. Then we will use openstack-ansible to deploy the appropriate services.

To configure a Cinder LVM host that runs the cinder-volume service, perform the following steps on the deployment host:

- First, edit the /etc/openstack_deploy/openstack_user_config.yml file to add the following lines, noting that we are using both the address of the API and storage networks:

      ---
      storage-infra_hosts:
        controller-01:
          ip: 172.29.236.10
      storage_hosts:
        cinder-volume:
          ip: 172.29.236.100
          container_vars:
            cinder_backends:
              limit_container_types: cinder_volume
              lvm:
                volume_group: cinder-volumes
                volume_driver: cinder.volume.drivers.lvm.LVMVolumeDriver
                volume_backend_name: LVM_iSCSI
                iscsi_ip_address: "172.29.244.100"

- Now edit the /etc/openstack_deploy/user_variables.yml file to contain the following lines. The defaults for an OpenStack-Ansible deployment are shown here; so edit to suit your environment if necessary:

      ## Cinder iscsi
      cinder_iscsi_helper: tgtadm
      cinder_iscsi_iotype: fileio
      cinder_iscsi_num_targets: 100
      cinder_iscsi_port: 3260

- When using LVM, as we are here, we must tell OpenStack-Ansible not to deploy the service into a container (by setting is_metal: true). Ensure that the /etc/openstack_deploy/env.d/cinder.yml file has the following contents:

      ---
      container_skel:
        cinder_volumes_container:
          properties:
            is_metal: true

- As we are specifically choosing to install Cinder with the LVMVolumeDriver as part of this example, we must ensure that the host (or hosts) set to run the cinder-volume service has a volume group, named very specifically cinder-volumes, before we deploy Cinder. Carry out the following steps to create this important volume group. The following example simply assumes that there is an extra disk, at /dev/sdb, that we will use for this purpose:

      pvcreate /dev/sdb
      vgcreate cinder-volumes /dev/sdb

  This will bring back an output like the following:

      Physical volume "/dev/sdb" successfully created
      Volume group "cinder-volumes" successfully created

- We can now deploy our Cinder service with the openstack-ansible command:

      cd /opt/openstack-ansible/playbooks
      openstack-ansible os-cinder-install.yml

Note that the Ansible output has been omitted here. On success, Ansible reports that the installation succeeded; otherwise, it presents information about which step failed.

Tip

Did you use more than one

storage-infra_hosts? These are the Cinder API servers. As these run behind a load balancer, ensure that you have updated your load balancer VIPs with the IP addresses of these new nodes. The Cinder service runs on port8776. If you are running HAProxy that was installed using OpenStack-Ansible, you must also run the following command:openstack-ansible haproxy-install.yml - Verify that the Cinder services are running with the following checks from an OpenStack client or one of the utility containers from one of the controller nodes, as shown here:

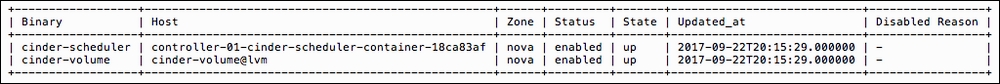

lxc-attach --name $(lxc-ls -1 | grep util) source openrc cinder service-list

This will give back an output like the following:
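The verification step can also be scripted. The following is a minimal sketch, not part of the recipe itself: it assumes the unified openstack client is available in the utility container and that openrc has already been sourced, and it uses openstack volume service list in place of cinder service-list because its value formatter is easier to parse. The function name cinder_services_down is hypothetical.

```shell
# Sketch: report any Cinder service whose State is not "up".
# Assumptions: the `openstack` client is installed and credentials
# from openrc are loaded. The function name is hypothetical.
cinder_services_down() {
    # -f value prints one space-separated row per service;
    # -c limits the output to the named columns.
    openstack volume service list -f value -c Binary -c Host -c State |
        awk '
            $3 != "up" { print $1 " on " $2 " is " $3; bad = 1 }
            END {
                # An empty listing is treated as a failure as well.
                if (NR == 0) { print "no services listed"; bad = 1 }
                exit bad
            }'
}
```

Calling cinder_services_down returns 0 when every listed service is up; otherwise it prints one line per affected service and returns 1, which makes it usable as a gate in a larger deployment script.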

In order for us to use a host as a cinder-volume server, we first needed to ensure that the LVM volume group (VG) named cinder-volumes had been created.

Tip: You can rename the volume group to something other than cinder-volumes; however, there are very few reasons to do so. If you do require this, ensure that the volume_group: parameter in /etc/openstack_deploy/openstack_user_config.yml matches the name of the LVM volume group that you create.
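Because the playbook expects the volume group to exist already, it can be worth checking for it on the volume host before deploying. The following is a minimal sketch under stated assumptions: it is run as root on the cinder-volume host, the function name check_volume_group is hypothetical, and the name passed in must be the same one set in the volume_group: parameter.

```shell
# Sketch: confirm that an LVM volume group exists on this host.
# Assumptions: run as root on the cinder-volume host; the function
# name is hypothetical. Defaults to the name used in this recipe.
check_volume_group() {
    vg_name="${1:-cinder-volumes}"
    # vgs exits non-zero when the named volume group cannot be found.
    if vgs --noheadings -o vg_name "$vg_name" >/dev/null 2>&1; then
        echo "OK: volume group '$vg_name' exists"
    else
        echo "MISSING: volume group '$vg_name' not found" >&2
        return 1
    fi
}
```

For example, check_volume_group cinder-volumes prints an OK line and returns 0 when the group is present, and returns 1 otherwise.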

Once that has been done, we will configure our OpenStack-Ansible deployment to specify our cinder-volume server (as denoted by the storage_hosts section) and the servers that run the API service (as denoted by the storage-infra_hosts section). We will then use openstack-ansible to deploy the packages onto the controller hosts and our nominated Cinder volume server.

Note that if you are using multiple networks or VLAN segments, you must configure your OpenStack-Ansible deployment accordingly. The storage network presented in this book should be used for iSCSI traffic (that is to say, the traffic generated when a volume is attached to an instance), and it is separate from the container network that is reserved for API and interservice traffic.
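One way to catch a mismatch between the configuration and the host's networking is to confirm that the address given as iscsi_ip_address (172.29.244.100 in this recipe) is actually configured on the volume host. The following is a sketch only; the function name has_local_ip is hypothetical, and it assumes the iproute2 ip command is available on the host.

```shell
# Sketch: check that an IPv4 address is configured on a local interface.
# Assumptions: run on the cinder-volume host with iproute2 installed;
# the function name is hypothetical.
has_local_ip() {
    wanted_ip="$1"
    # With -o, each address is printed on one line:
    #   <index>: <ifname> inet <address>/<prefix> ...
    ip -o -4 addr show |
        awk -v ip="$wanted_ip" '
            { split($4, a, "/"); if (a[1] == ip) found = 1 }
            END { exit !found }'
}
```

Running has_local_ip 172.29.244.100 on the volume host returns 0 if the storage address is present and 1 if it is not.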

In our example, cinder-volume uses iSCSI as the mechanism for attaching a volume to an instance; openstack-ansible then installs the packages that are required to run iSCSI targets.
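After the playbook has run, a quick check on the volume host can confirm that something is listening on the iSCSI port. The following is a rough sketch rather than part of the recipe: it assumes the port is 3260 (the cinder_iscsi_port value shown earlier), that the ss utility is installed, and that the iSCSI target daemon is already running. The function name iscsi_port_listening is hypothetical.

```shell
# Sketch: check whether a TCP port (default 3260) has a listener.
# Assumptions: run on the cinder-volume host with `ss` available;
# the function name is hypothetical.
iscsi_port_listening() {
    port="${1:-3260}"
    # Column 4 of `ss -ltn` is "Local Address:Port"; line 1 is a header.
    ss -ltn |
        awk -v port="$port" '
            NR > 1 && $4 ~ (":" port "$") { found = 1 }
            END { exit !found }'
}
```

The function returns 0 when a listener is found. For a closer look at the targets themselves, tgtadm --lld iscsi --mode target --op show lists what the target daemon is exporting.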

OpenStack-Ansible provides the additional benefit of deploying any changes required to support Cinder. This includes creating the service within Keystone and configuring Nova to support volume backends.