4

Media Visualization

Visual Techniques for Exploring Large Media Collections

ABSTRACT

Over the last 20 years, information visualization became a common tool in science and also a growing presence in the arts and culture at large. This chapter outlines a taxonomy of new visualization techniques particularly useful for media research, what Manovich calls “media visualization.” Where information visualization involves translating the world into numbers and then visualizing the relationship between those numbers, media visualization involves translating a set of images into another image that can reveal patterns that might not otherwise be apparent in the collection of images. Using the open source image processing software called ImageJ (normally used in medical research and other scientific fields), Manovich and his collaborators have designed a set of tools for media visualization that can be used by other researchers interested in exploring large sets of images, even without knowing exactly what they are looking for beforehand. The techniques outlined here are based on the work in visualization of patterns in cinema, TV, animation, videogames, and other media carried out in the Software Studies Initiative at the University of California, San Diego.

Some of the illustrations for this chapter are available only online at:

http://lab.softwarestudies.com/p/media-visualizations-illustrations.html.

Introduction: How to Work with Massive Media Data Sets

Early twenty-first-century media researchers have access to unprecedented amounts of media – more than they can possibly study, let alone simply watch or even search. A number of interconnected developments that took place between 1990 and 2010 – the digitization of analog media collections, a decrease in prices and expanding capacities of portable computer-based media devices (laptops, tablets, phones, cameras, etc.), the rise of user-generated content and social media, and globalization, which increased the number of agents and institutions producing media around the world – led to an exponential increase in the quantity of media while simultaneously making it much easier to find, share, teach with, and research. Millions of hours of television programs already digitized by various national libraries and media museums, millions of digitized newspaper pages from the nineteenth and twentieth centuries,1 150 billion snapshots of web pages covering the period from 1996 until today,2 and hundreds of billions of videos on YouTube and photographs on Facebook (every day users upload 100 million images, according to the stats provided by Facebook in the summer of 2011) and numerous other media sources are waiting to be “digged” into. (For more examples of large media collections, see the list of repositories made available to the participants of the Digging Into Data 2011 Competition [Digging into Data Challenge, 2011].)

How do we take advantage of this new scale of media in practice? For instance, let's say that we are interested in studying how presentation and interviews by political leaders are reused and contextualized by TV programs in different countries. (This example comes from our application for Digging Into Data 2011.) The relevant large media collections that were available at the time we were working on our application (June 2011) include 1,800 Barack Obama official White House videos, 500 George W. Bush Presidential Speeches, 21,532 programs from Al Jazeera English (2007–2011), and 5,167 Democracy Now! TV programs (2001–2011). Together, these collections contain tens of thousands of hours of video. We want to describe the rhetorical, editing, and cinematographic strategies specific to each video set, understand how different stations may be using the video of political leaders in different ways, identify outliers, and find clusters of programs that share similar patterns. But how can we simply watch all this material to begin pursuing these and other questions?

Even when we are dealing with large collections of still images – for instance, 167,00 images on “Art Now” Flickr gallery, 236,000 professional design portfolios on coroflot.com (both numbers as of 6/2011), or over 170,000 Farm Security Administration (FSA)/Office of War Information photographs taken between 1935 and 1944 and digitized by Library of Congress3 – such tasks are no easier to accomplish. The basic method that always worked when numbers of media objects were small – see all images or video, notice patterns, and interpret them – no longer works.

Given the size of many digital media collections, simply seeing what's inside them is impossible (even before we begin formulating questions and hypotheses and selecting samples for closer analysis). Although it may appear that the reasons for this are the limitations of human vision and human information processing, I think that it is actually the fault of current interface designs. Popular interfaces for massive digital media collections such as list, gallery, grid, and slide (the example from the Library of Congress Prints and Photographs site) do not allow us to see the contents of a whole collection. These interfaces usually display only a few items at a time. This access method does not allow us to understand the “shape” of the overall collection and notice interesting patterns.

Most media collections contain some kind of metadata such as author names, production dates, program titles, image formats, or, in the case of social media services such as Flickr, upload dates, user-assigned tags, geodata, and other information.4 If we are given access to such metadata for a whole collection in the easy-to-use form such as a set of spreadsheets or a database, this allows us to at least understand distributions of content, dates, access statistics, and other dimensions of the collection. Unfortunately, usually online collections and media sites do not make available the complete collection's metadata to the users. Even if they did, this still would not substitute for directly seeing, watching, or reading the actual media. Even the richest metadata available today for media collections do not capture many patterns that we can easily notice when we directly watch video, look at photographs, or read texts – i.e., when we study the media themselves as opposed to metadata about them.5

The popular media access technologies of the nineteenth and twentieth centuries, such as slide lanterns, film projectors, microforms, Moviola and Steenbeck, record players, audio and videotape recorders, were designed to access single media items at a time at a limited range of speeds. This went hand in hand with the organization of media distribution: record and video stores, libraries, television, and radio would make available only a few items at a time. For instance, you could not watch more than a few TV channels simultaneously, or borrow more than a few videotapes from a library.

At the same time, hierarchical classification systems used in library catalogs made it difficult to browse a collection or navigate it in orders not supported by catalogs. When you walked from shelf to shelf, you were typically following a classification system based on subjects, with books organized by author names inside each category.

Together, these distribution and classification systems encouraged twentieth-century media researchers to decide beforehand what media items to see, hear, or read. A researcher usually started with some subject in mind – films by a particular author, works by a particular photographer, or categories such as “1950s experimental American films” and “early twentieth-century Paris postcards.” It was impossible to imagine navigating through all films ever made or all postcards ever printed. (One of the first media projects that organizes its narrative around navigation of a media archive is Jean-Luc Godard's Histoire(s) du cinéma, which draws samples from hundreds of films.) The popular social science method for working with larger media sets in an objective manner – content analysis, i.e., tagging of semantics in a media collection by several people using a predefined vocabulary of terms (for more details, see Stemler, 2001) – also requires that a researcher decide beforehand what information would be relevant to tag. In other words, as opposed to exploring a media collection without any preconceived expectations or hypotheses – just to “see what is there” – a researcher has to postulate “what is there,” i.e., what are the important types of information worth seeking out.

Unfortunately, the current standard in media access – computer search – does not take us out of this paradigm. The search interface is a blank frame waiting for you to type something. Before you click on the search button, you have to decide what keywords and phrases to search for. So while the search brings a dramatic increase in speed of access, its deep assumption (which we may be able to trace back to its origins in the 1950s, when most scientists did not anticipate how massive digital collections would become) is that you know beforehand something about the collection worth exploring further.

The hypertext paradigm that defined the web of the 1990s likewise only allows a user to navigate though the web according to the links defined by others, as opposed to moving in any direction. This is consistent with the original vision of hypertext as articulated by Vannevar Bush in 1945: a way for a researcher to create “trails” though massive scientific information and for others to be able to follow those traces later.

My informal review of the largest online institutional media collections available today (europeana.org, archive.org, artstor.com, etc.) suggests that the typical interfaces they offer combine nineteenth-century technologies of hierarchical categories and mid-twentieth-century technology of information retrieval (i.e., search using metadata recorded for media items). Sometimes collections also have subject tags. In all cases, the categories, metadata, and tags were entered by the archivists who manage the collections. This process imposes particular orders on the data. As a result, when a user accesses institutional media collections via their websites, she can only move along a fixed number of trajectories defined by the taxonomy of the collection and types of metadata.

In contrast, when you observe a physical scene directly with your eyes, you can look anywhere in any order. This allows you to quickly notice a variety of patterns, structures, and relations. Imagine, for example, turning the corner on a city street and taking in the view of the open square, with passersby, cafés, cars, trees, advertising, store windows, and all other elements. You can quickly detect and follow a multitude of dynamically changing patterns based on visual and semantic information: cars moving in parallel lines, houses painted in similar colors, people who move along their own trajectories and people talking to each other, unusual faces, shop windows that stand out from the rest, and so on.

We need similar techniques that would allow us to observe vast “media universes” and quickly detect all interesting patterns. These techniques have to operate with speeds many times faster than the normally intended playback speed (in the case of time-based media). Or, to use the example of still images, I should be able to see important information in one million images in the same amount of time it takes me to see it in a single image. These techniques have to compress massive media universes into smaller observable media “landscapes” compatible with the human information processing rates. At the same time, they have to keep enough of the details from the original images, video, audio, or interactive experiences to enable the study of the subtle patterns in the data.

Media Visualization

The limitations of the typical interfaces to online media collections also apply to interfaces of software for media viewing, cataloging, and editing. These applications allow users to browse through and search image and video collections, and display image sets in an automatic slide show or a PowerPoint-style presentation format. However, as research tools, their usefulness is quite limited. Desktop applications such as iPhoto, Picasa, and Adobe Bridge, and image sharing sites such as Flickr and Photobucket, can only show images in a few fixed formats – typically a two-dimensional grid, a linear strip, or a slide show, and, in some cases, a map view (photos superimposed on the world map). To display photos in a new order, a user has to invest time in adding new metadata to all of them. She cannot automatically organize images by their visual properties or by semantic relationships. Nor can she create animations, compare collections that each may have hundreds of thousands of images, or use various information visualization techniques to explore patterns across image sets.

Graphing and visualization tools that are available in Google Docs, Excel, Tableau,6 manyeyes,7 and other graphing, spreadsheet, and statistical software do offer a range of visualization techniques designed to reveal patterns in data. However, these tools have their own limitations. A key principle, which underlies the creation of graphs and information visualizations, is the representation of data using points, bars, lines, and similar graphical primitives. This principle has remained unchanged from the earliest statistical graphics of the early nineteenth century to contemporary interactive visualization software that can work with large data sets (Manovich, 2011). Although such representations make clear the relationships in a data set, they also hide the objects behind the data from the user. While this is perfectly acceptable for many types of data, in the case of images and video this becomes a serious problem. For instance, a 2D scatter plot that shows a distribution of grades in a class with each student represented as a point serves its purpose, but the same type of plot representing stylistic patterns over the course of an artist's career by means of points has more limited use if we cannot see the images of the artworks.

Figure 4.1 Exploring a visualization of one million manga pages on the HIPerSpace visual supercomputer. Source: Lev Manovich. http://www.flickr.com/photos/culturevis/5866777772/

Since 2008, our Software Studies Initiative at the University of California, San Diego, has been developing visual techniques that combine the strengths of media viewing applications and graphing and visualization applications.8 Like the latter, they create graphs to show relationships and patterns in a data set. However, if plot-making software can only display data as points, lines, or other graphic primitives, our software can show all the images in a collection superimposed on a graph. We call this approach media visualization.

Typical information visualization involves first translating the world into numbers and then visualizing relations between these numbers. In contrast, media visualization involves translating a set of images into a new image that can reveal patterns in the set. In short, pictures are translated into pictures.

Media visualization can be formally defined as creating new visual representations from the visual objects in a collection (Manovich, 2012a). In the case of a collection containing single images (for instance, the already mentioned 1930s FSA photographs collection from the Library of Congress), media visualization involves displaying all images, or their parts, organized in a variety of configurations according to their metadata (dates, places, authors), content properties (for example, presence of faces), and/or visual properties. If we want to visualize a video collection, it is usually more convenient to select key frames that capture the properties and the patterns of video. This selection can be done automatically using a variety of criteria – for example, significant changes in color, movement, camera position, staging, and other aspects of cinematography, changes in content such as shot and scene boundaries, start of music or dialogue, new topics in characters' conversations, and so on.

Our media visualization techniques can be used independently, or in combination with digital image processing (Digital image processing, n.d.). Digital image processing is conceptually similar to automatic analysis of texts already widely used in digital humanities (Text Analysis, 2011). Text analysis involves automatically extracting various statistics about the content of each text in a collection, such as word usage frequencies, their lengths, and their positions, sentence lengths, and noun and verb usage frequencies. These statistics (referred to in computer science as “features”) are then used to study the patterns in a single text, relationships between texts, literary genres, and so on.

Similarly, we can use digital image processing to calculate statistics about various visual properties of images: average brightness and saturation, the number and the properties of shapes, the number of edges and their orientations, key colors, and so on. These features can then be used for similar investigations – for example, the analysis of visual differences between news photographs in different magazines or between news photographs in different countries, the changes in visual style over the career of a photographer, or the evolution of news photography in general over the twentieth century. We can also use them in a more basic way – for the initial exploration of any large image collection. (This method is described in detail in Manovich, 2012a.)

In the remainder of this chapter, I focus on media visualization techniques that are similarly suitable for initial exploration of any media collection but do not require digital image processing of all the images. I present the key techniques and illustrate them with examples drawn from different types of media. Researchers can use a variety of software tools and technologies to implement these techniques – scripting Photoshop, using open source media utilities such as ImageMagick,9 or writing new code in Processing,10 for example. In our lab we rely on the open source image processing software called ImageJ.11 This software is normally used in biological and medical research, astronomy, and other scientific fields. We wrote many custom macros, which add new capacities to existing ImageJ commands to meet the needs of media researchers. (You can find these macros, detailed tutorials on how to use them, and sample data sets on our site.12) These macros allow you to create all types of visualizations described in this chapter, and also to do basic visual feature extraction on any number of images and videos. In other words, these techniques are available right now to anyone interested in trying them on their own data sets.

We have also developed a version of our tools that runs on the next generation visual supercomputer systems such as HIPerSpace, developed at Calit2 (California Institute for Telecommunication and Information) where our lab is housed.3 In its present configuration, it consists of seventy 30-inch monitors, which offer a combined resolution of 287 megapixels. We can load up to 10,000 images at one time and then interactively create a variety of media visualizations in real time (see Figure 4.1).

The development of our tools was driven by research applications. Since 2009, we have used our software for the analysis and visualization of over 20 different image and video collections covering a number of fields. The examples include 776 paintings by Vincent van Gogh, 4,535 covers of Time magazine published between 1923 and 2009 (see Figure 2 online and Figure 4.3), one million manga (Japanese comics) pages, a hundred hours of gameplay video, and 130 Obama weekly video addresses (2009–2011). The software has also been used by undergraduate and graduate students at the University of California, San Diego (UCSD), in art history and media classes. In addition, we collaborate with cultural institutions that are interested in using our methods and tools with their media collections and data sets: New York Times, the Getty Research Institute, Austrian Film Museum, Netherlands Institute for Sound and Image, and Magnum Photos. Finally, we have worked with a number of individual scholars and research groups from UCSD, University of Chicago, UCLA, University of Porto in Portugal, and Singapore National University, applying these tools to visual data sets in their respective disciplines – art history, film and media studies, dance studies, and area studies.

Our software has been designed to work with image and video collections of any size. Our largest application to date is the analysis of one million pages of fan-translated manga (Douglass, Huber, & Manovich, 2011); however, the tools do not have built-in limits and can be used with even larger image sets.

Dimensions of Media Visualization

In this section I discuss media visualization methods in relation to other methods for analyzing and visualizing data. As I have already noted, media visualization can be understood as the opposite of statistical graphs and information visualization.

Media visualization can also be contrasted with content analysis (manual coding of a media collection typically used to describe semantics) and automatic media analysis methods commonly used by commercial companies (video fingerprinting, content-based image search, cluster analysis, concept detection, image and video mining, etc.). In contrast to content analysis, media visualization techniques do not require the time-consuming creation of new metadata about media collections. And, in contrast to automatic computational methods, media visualization techniques do not require specialized technical knowledge and can be used by anybody with only a basic familiarity with digital media tools (think QuickTime, iPhoto, and Excel).

The media visualization method exploits the fact that image collections typically contain at least minimal metadata. These metadata define how the images should be ordered, and/or group them in various categories. In the case of digital video, the ordering of individual frames is built-in to the format itself. Depending on the genre, other higher-level sequences can be also present: shots and scenes in a narrative theme, the order of subjects presented in a news program, the weekly episodes of a TV drama.

We can exploit this already-existing sequence of information in two complementary ways. On the one hand, we can bring all images in a collection together in the order provided by metadata. For example, in the visualizations of all 4,535 Time magazine covers, the images are organized by publication dates (see Figure 4.3). On the other hand, to reveal patterns that such an order may hide, we can also place the images in new sequences and layouts. In doing this, we deliberately go against the conventional understanding of cultural image sets which metadata often reify. We call such conceptual operation “remapping.” By changing the accepted ways of sequencing media artifacts and organizing them in categories, we create new “maps” of our familiar media universes and landscapes.

Media visualization techniques can be also situated along a second conceptual dimension depending on whether they use all media in a collection, or only a sample. We may sample in time (using only some of the available images) or in space (using only parts of the images). An example of the former technique is the visualization of Dziga Vertov's 1928 feature documentary The Eleventh Year, which uses only the first frame of each shot (see Figure 4.8). An example of the latter is the “slice” of 4,535 covers of Time magazine that uses only a single-pixel vertical line from each cover.

The third conceptual dimension that also helps us to sort out possible media visualization techniques is the sources of information they use. As already explained, media visualization exploits the presence of at least minimal metadata in a media collection, so it does not require the addition of new metadata about the individual media items. However, if we decide to add such metadata – for example, content tags created via manual content analysis, labels that divide the media collection into classes generated via automatic cluster analysis, automatically detected semantic concepts, face detection results, and visual features extracted with digital image processing – all this information can also be used in visualization.

In fact, media visualization offers a new way to work with this information. For example, consider information about content we added manually to our Time covers data set, such as whether each cover features portraits of particular individuals or, conversely, a conceptual composition that illustrates some concept or issue. Normally a researcher would use graphing techniques such as a line graph to display this information. However, we can also create a high-resolution visualization that shows all the covers and uses red borders to highlight the covers that fall in one of these categories (see Figure 2 at http://lab.softwarestudies.com/p/media-visualizations-illustrations.html).

The rest of this chapter discusses some of these distinctions in more detail, illustrating them with visualization examples created in our lab. Section 1 starts with the simplest technique: using all images in a collection and organizing them in a sequence defined by existing metadata. Section 2 focuses on temporal and spatial sampling. Section 3 discusses and illustrates the operation of remapping.

Media Visualization Techniques

1 Zoom Out (Image Montage)

Conceptually and technically, the simplest technique is to bring a number of related visual artifacts together and show them in a single image. Since media collections always come with some kind of metadata such as creation or upload dates, we can organize the display of the artifacts using information in these metadata.

This technique can be seen as an extension of the most basic intellectual operations of media studies and humanities – comparing a set of related items. However, if twentieth-century technologies only allowed for a comparison between a small number of artifacts at the same time – for example, the standard lecturing method in art history was to use two slide projectors to show and discuss two images side by side – today's applications running on standard desktop computers, laptops, netbooks, tablets, and the web allow for simultaneous display of thousands of images in a grid format. These image grids can be organized by any of the available metadata such as year of production, country, creator name, image size, or upload dates. As already discussed above, all commonly used photo organizers and editors including iPhoto, Picasa, Adobe Photoshop, and Apple Aperture include this function. Similarly, image search services such as Google Image Search and content sharing services such as Flickr and Picasa also use image grid as a default interface.

However, it is more convenient to construct image grids using dedicated image processing software such as ImageMagick or ImageJ, since they provide many more options and greater control. ImageJ calls the appropriate command to make image grids “make montage” (“Make Montage,” ImageJ User Guide, n.d.), and since this is the software we commonly use in our lab, we will refer to such image grids as montages.

This quantitative increase in the number of images that can be visually compared leads to a qualitative change in the type of observations that can be made. Being able to display thousands of images simultaneously allows us to see gradual historical changes over time, the images that stand out from the rest (i.e., outliers), and the differences in variability between different image groups. It also allows us to detect possible clusters of images that share some characteristics in common, and to compare the shapes of these clusters. In other words, montage is a perfect technique for “exploratory media analysis.”

To be effective, even this simple technique may still require some transformation of the media being visualized. For example, in order to be able to observe similarities, differences, and trends in large image collections, it is crucial to make all images the same size. We also found that it is best to position images side by side without any gaps (that is why typical software for managing media collections, which leaves such gaps in the display, is not suited for discovering trends in image collections).

The following example illustrates the image montage technique.

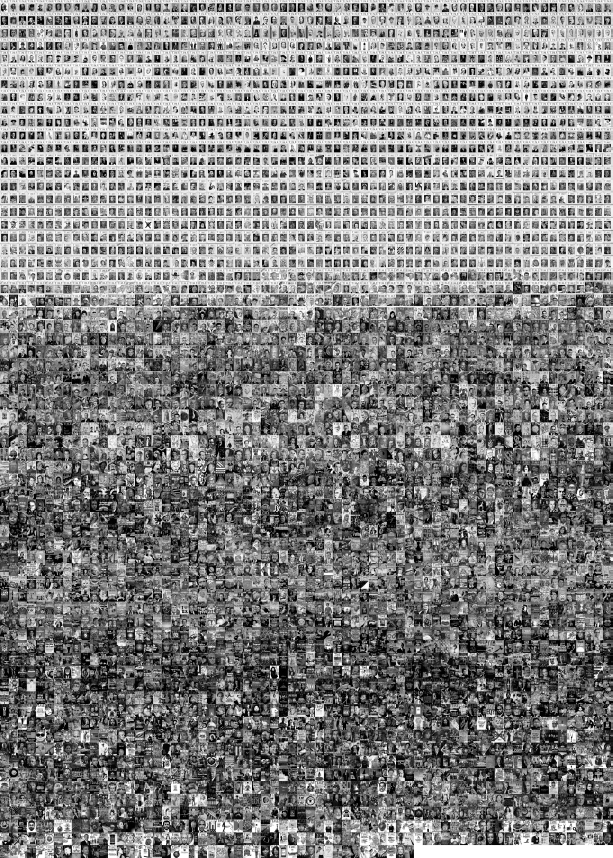

Placing 4,535 Time magazine covers published over 86 years within a single high-resolution image reveals a number of historical patterns, including the following:

- Medium: In the 1920s and 1930s Time covers use mostly photography. After 1941, the magazine switches to paintings. In the later decades, photography gradually comes to dominate again. In the 1990s we see the emergence of the contemporary software-based visual language, which combines manipulated photography and graphic and typographic elements.

Figure 4.3 Montage of all 4,535 covers of Time magazine organized by publication date (1923 to 2009) from left to right and top to bottom. A large percentage of the covers included color borders. We cropped these borders and scaled all images to the same size to allow a user to see more clearly the temporal patterns across all covers. Source: Jeremy Douglass and Lev Manovich, 2009. http://www.flickr.com/photos/culturevis/4038907270/in/set-72157624959121129/

- Color vs. black and white: The shift from early black-and-white to full-color covers happens gradually, with both types coexisting for many years.

- Hue: Distinct “color periods” appear in bands: green, yellow/brown, red/blue, yellow/brown again, yellow, and a lighter yellow/blue in the 2000s.

- Brightness: The changes in brightness (the mean of all pixels' grayscale values for each cover) follow a similar cyclical pattern.

- Contrast and saturation: Both gradually increase throughout the twentieth century. However, since the end of the 1990s, this trend is reversed: recent covers have lower contrast and lower saturation.

- Content: Initially most covers are portraits of individuals set against neutral backgrounds. Over time, portrait backgrounds change to feature compositions representing concepts. Later, these two different strategies come to coexist: portraits return to neutral backgrounds, while concepts are now represented by compositions that may include both objects and people – but not particular individuals.

The visualization also reveals an important “meta-pattern”: almost all changes are gradual. Each of the new communication strategies emerges slowly over a number of months, years, or even decades.

Montage technique has been pioneered in digital art projects such as Cinema Redux (Dawes, 2004) and The Whale Hunt (Harris, 2007). To create their montages, artists wrote their custom code; in each case, the code was used with particular data sets and not released for others to use with other data. ImageJ software, however, allows easy creation of image montages without the need to program. We found that this technique is very useful for exploring any media data set that has a time dimension, such as shots in a movie, covers and pages of magazine issues, or paintings created by an artist in the course of her career.

Although the montage method is the simplest technically, it is quite challenging to characterize it theoretically. Given that information visualization normally takes data that are not visual and represents them in a visual domain, is it appropriate to think of montage as a visualization method? To create a montage, we start in the visual domain and end up in the same domain – that is, we start with individual images, put them together, and then zoom out to see them all at once.

We believe that calling this method “visualization” is justified if, instead of focusing on the transformation of data characteristic of information visualization (from numbers and categories to images), we focus on its other key operation: arranging the elements of visualization in such a way as to allow the user to easily notice the patterns that are hard to observe otherwise. From this perspective, montage is a legitimate visualization technique. For instance, the current interface of Google Books can only show a few covers of any magazine (including Time) at a time, so it is hard to observe the historical patterns. However, if we gather all the covers of a magazine and arrange them in a particular way (making their size the same and displaying them in a rectangular grid in publication date order – i.e., making everything the same so the eye can focus on comparing what is different – their content), these patterns are now easy to see. (For further discussion of the relations between media visualization and information visualization, see Manovich, 2011.)

2 Temporal and Spatial Sampling

Our next technique adds another conceptual step. Instead of displaying all the images in a collection, we can sample them using some procedure to select their subsets and/or their parts. Temporal sampling involves selecting a subset of images from a larger image sequence. We assume that this sequence is defined by existing metadata, such as frame numbers in a video, upload dates of user-generated images on a social media site, or page numbers in a manga chapter. The selected images are then arranged back-to-back, in the order provided by the metadata.

Temporal sampling is particularly useful in representing cultural artifacts, processes, and experiences that unfold over significant periods of time – such as playing a videogame. Completing a single-player game may take dozens and even hundreds of hours. In the case of massively multiplayer online role-playing games (MMORPG), users may be playing for years. (A 2005 study by Nick Yee found the MMORPG players spend an average of 21 hours per week in the game world.)

We created two visualizations that together capture 100 hours of gameplay of Kingdom Hearts (2002, Square Co., Ltd.) and Kingdom Hearts II (2005, Square-Enix, Inc.) (see Figures 4 and 5 at http://lab.softwarestudies.com/p/media-visualizations-illustrations.html). Each game was played from beginning to end over a number of sessions. The video captured from all game sessions of each game was assembled into a single sequence. The sequences were sampled at six frames per second. This resulted in 225,000 frames for Kingdom Hearts and 133,000 frames for Kingdom Hearts II. The visualizations use every tenth frame from the complete frame sets. Frames are organized in a grid in order of gameplay (left to right, top to bottom).

Kingdom Hearts is a franchise of videogames and other media properties created in 2002 via a collaboration between Tokyo-based videogame publisher Square (now Square-Enix) and The Walt Disney Company. The franchise includes original characters created by Square, traveling through worlds representing Disney-owned media properties (e.g., Tarzan, Alice in Wonderland, The Nightmare Before Christmas, etc.). Each world has its distinct characters derived from the respective Disney-produced films. It also features distinct color palettes and rendering styles, which are related to visual styles of the corresponding Disney film. Visualizations reveal the structure of the gameplay, which cycles between progressing through the story and visiting various Disney worlds.

Temporal sampling is used in the interfaces of some media collections – for instance, Internet Archive14 includes regularly sampled frames for some of the videos in its collection (sampled rates vary depending on the type of video). In computer science, many researchers are working on video summarization: algorithms to automatically create compact representations of video content which typically produce a small set of frames (the search for “video summarization” on Google Scholar returned 17,600 articles). Since applying these algorithms does require substantial technical knowledge, we promote much simpler techniques that can be carried out without such knowledge using built-in commands in ImageJ software.

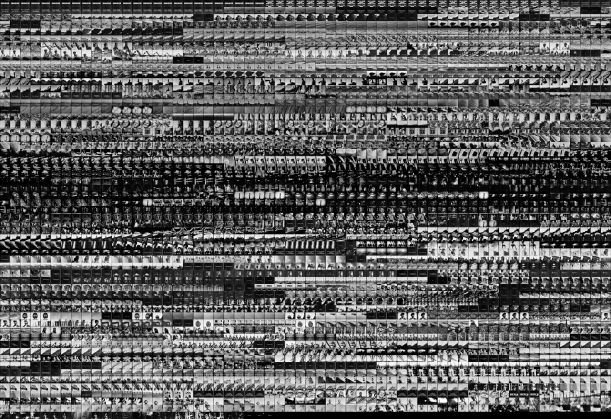

Figure 4.6 Slice of 4,535 covers of Time magazine organized by publication date (from 1923 to 2009, left to right). Every one-pixel-wide vertical column is sampled from a corresponding cover. Source. Jeremy Douglass and Lev Manovich, 2009. http://www.flickr.com/photos/culturevis/4040690842/in/set-72157622525012841

Spatial sampling involves selecting parts of images, according to some procedure. For example, our visualization of Time covers used spatial sampling, since we cropped some of the images to get rid of the red borders. However, as the example below demonstrates, often more dramatic sampling, which leaves only a small part of an image, can be quite effective in revealing additional patterns that a full image montage may not show as well. Following the standard name for such visualizations as used in medical and biological research and in ImageJ software, we refer to them as slices (“Orthogonal Views”, ImageJ User Guide, n.d.).

Naturally, the question arises of the relations between the techniques for visual media sampling as illustrated here, and the standard theory and practice of sampling in statistics and social sciences. (For a very useful discussion of general sampling concepts as they apply to digital humanities, see Kenny, 1982.) While this question will require its own detailed analysis, we can make one general comment. Statistics is concerned with selecting a sample in such a way as to yield some reliable knowledge about the larger population. Because it was often not practical to collect data about the whole population, the idea of sampling was the foundation of the twentieth-century applications of statistics.

In creating media visualization, we often face similar limitations. For instance, in our visualizations of Kingdom Hearts we sampled the complete videos of gameplay using a systematic sampling method. Ideally, if ImageJ software was capable of creating a montage using all of the video frames, we would not have to do this.

However, as the Time covers slice visualization demonstrates, sometimes dramatically limiting part of the data is actually better for revealing certain patterns than using all the data. While we can also notice historically changing patterns in cover layout in full image montage (e.g., placement and size of the word “Time,” the size of images in the center in relation to the whole covers), using a single-pixel column from each cover makes them stand out more clearly.

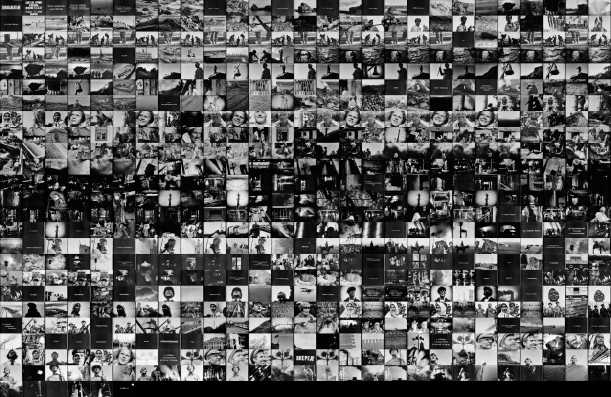

The following two visualizations (Figures 4.7 and 4.8) further illustrate this idea. The first uses regular sampling (1fps) to represent a 60-minute film, The Eleventh Year, directed by Dziga Vertov (1928). The resulting representation of the film is not very useful. The second visualization follows semantically and visually important segmentation of the film – the shots sequence. Each shot is represented by its first frame. (The frames are organized left to right and top to bottom following shot order in the film.) Although this visualization uses a much smaller number of frames to represent the film than the first, it is much more revealing. We can think of it as a reverse-engineered imaginary storyboard of the film – a reconstructed plan of its cinematography, editing, and content.

Figure 4.7 Visualization of The Eleventh Year (Dziga Vertov, 1928). The film is sampled at one frame per second. The resulting 3,507 frames are organized left to right and top to bottom following their order in the film. Source: Dziga Vertov, The Eleventh Year, 1928. http://www.flickr.com/photos/culturevis/4052703447/in/set-72157622608431194

Figure 4.8 Visualization of The Eleventh Year (Dziga Vertov, 1928). Every shot in the film is represented by its first frame. Frames are organized left to right and top to bottom following the order of shots in the film. Source, Dziga Vertov, The Eleventh Year, 1928. http://www.flickr.com/photos/culturevis/3988919869/in/set-72157622608431194

3 Remapping

Any representation can be understood as a result of a mapping operation between two entities: the sign and the thing being signified. Mathematically, mapping is a function that creates a correspondence between the elements in two domains. A familiar example of such mapping is geometric projection techniques used to create two-dimensional images of three-dimensional scenes, such as isometric projection and perspective projection. Another example is 2D maps that represent physical spaces. We can think of the well-known taxonomy of signs defined by philosopher Charles Peirce – icon, index, symbol, and diagram – as different types of mapping between an object and its representation. (Twentieth-century cultural theory often stressed that cultural representations are always partial maps, since they can only show some aspects of the objects. However, this assumption needs to be rethought, given the dozens of recently developed technologies for capturing data about physical objects, and the ability to process massive amounts of data to extract features and other information. Google, for instance, performs this operation a few times a day as it analyzes over a trillion web links. For further discussion of this point, see Manovich, 2012b.)

While any representation is a result of a mapping operation, modern media technologies – photography, film, audio records, fax and copy machines, audio and video magnetic recoding, media editing software, the web – led to a new practice of cultural mapping: using an already-existing media artifact and creating new meaning or a new aesthetic effect by sampling and rearranging parts of this artifact. While this strategy was already used in the second part of the nineteenth century (photomontage), it became progressively more central to modern art since the 1960s. Its different manifestations include pop art, compilation film, appropriation art, remix, and a large part of media art – from Bruce Conner's very first compilation film, A Movie (1958), to Dara Birnbaum's Technology/Transformation: Wonder Woman (1979), Douglas Gordon's 24 Hour Psycho (1993), Joachim Sauter and Dirk Joachim's The Invisible Shapes of Things Past (1995), Mark Napier's Shredder (1998), Jennifer and Kevin McCoy's Every Shot/Every Episode (2001), Cinema Redux by Brendan Dawes (2004), Natalie Bookchin's Mass Ornament (2009), Christian Marclay's The Clock (2011), and many others. While earlier remappings were done manually and had a small number of elements, use of computers allowed artists to automatically create new media representations containing thousands of elements.

If the original media artifact, such as a news photograph, a feature film, or a website, can be understood as a “map” of some “reality,” an art project that rearranges the elements of this artifact can be understood as “remapping.” Such projects derive their meanings and aesthetic effects from systematically rearranging the samples of original media in new configurations.

In retrospect, many of these art projects can also be understood as media visualizations. They examine ideological patterns in mass media, experiment with new ways to navigate and interact with media, and defamiliarize our media perceptions.

The techniques described in this chapter already use some of the techniques explored earlier by artists. However, the practices of photomontage, film editing, sampling, remix, photomontage, and digital art are likely to contain many other strategies that can be appropriated for media visualization. Just as with the sampling issue, a more detailed analysis is required of the relationship between these artistic practices of media remapping and media visualization as a research method for media studies, but one difference is easy to describe.

Artistic projects typically sample media artifacts, selecting parts and then deliberately assembling these parts in new orders. For example, in a classical work of video art, titled Technology/Transformation: Wonder Woman (1979), Dara Birnbaum sampled the television series Wonder Woman to isolate a number of moments, such as the transformation of the woman into a superhero. These short clips were then repeated many times to create a new narrative. The order of the clips did not follow their order in the original television episodes.

In our case, we are interested in revealing the patterns across the complete media artifact or a series of artifacts. Therefore, regardless of whether we are using all media objects (such as in the Time covers montage or Kingdom Hearts montages) or their samples (the Time covers slice), we typically start by arranging them in the same order as in the original work. Additionally, rather than selecting samples to support our new message (communicating a new meaning, expressing our opinion about the work), we start by following a systematic sampling method – for instance, selecting every second frame in a short video, or a single frame from every shot in a feature film.

As already argued earlier, we also purposely experiment with new sampling orders and different spatial arrangements of the media objects in visualization. Such deliberate remappings are closer to the artistic practice of aggressive rearrangements of media materials – although our purpose is revealing the patterns that are already present, as opposed to making new statements about the world using media samples. Whether this purpose is realizable is an open question. It is certainly possible to argue that our remappings are reinterpretations of the media works which force viewers to notice some patterns at the expense of others.

As an example of remapping technique, consider the following visualization that uses the first and last frame of every shot in Vertov's The Eleventh Year.

![]()

Figure 4.9 Visualization of The Eleventh Year (Dziga Vertov, 1928). Every shot in the film is represented by its first frame. Frames are organized left to right and top to bottom following the order of shots in the film. Every shot was analyzed using software to measure the average amount of movement across all frames in the shot. These measurements are plotted below the frames. Longer bars = more motion; shorter bars = less motion. Source: Dziga Vertov, The Eleventh Year, 1928. http://www.flickr.com/photos/culturevis/4117658480/in/set-72157622608431194/

“Vertov” is a neologism invented by the director who adapted it as his last name early in his career. It comes from the Russian verb vertet, which means “to rotate.” “Vertov” may refer to the basic motion involved in filming in the 1920s – rotating the handle of a camera – and also the dynamism of film language developed by Vertov who, along with a number of other Russian and European artists, designers, and photographers working in that decade, wanted to defamiliarize familiar reality by using dynamic diagonal compositions and shooting from unusual points of view. However, our visualization suggests a very different picture of Vertov. Almost every shot of The Eleventh Year starts and ends with practically the same composition and subject. In other words, the shots are largely static. Going back to the actual film and studying these shots further, we find that some of them are indeed completely static – such as the close-ups of people's faces looking in various directions without moving. Other shots employ a static camera that frames some movement – such as working machines, or workers at work – but the movement is localized completely inside the frame (in other words, the objects and human figures do not cross the view framed by the camera). Of course, we may recall that a number of shots in Vertov's most famous film, Man with A Movie Camera (1929), were specifically designed as opposites: shooting from a moving car meant that the subjects were constantly crossing the camera view. But even in this most experimental of Vertov's films, such shots constitute a very small part of the film.

Conclusion

In this chapter we described visual techniques for exploring large media collections. These techniques follow the same general idea: use the content of the collection – all images, their subsets (temporal sampling) or their parts (spatial sampling) – and present it in various spatial configurations in order to make visible the patterns and the overall “shape” of a collection. To contrast this approach to the more familiar practice of information visualization, we call it “media visualization.”

We included examples that show how media visualization can be used with already-existing metadata. However, if researchers add new information to the collection, this new information can also be used to drive media visualization. For example, images can be plotted using manually coded or automatically detected content properties, or visual features extracted via digital image processing. In the case of video, we can also use automatically extracted or coded information about editing techniques, framing, presence of human figures and other objects, amount of movement in each shot, and so on.

Conceptually, media visualization is based on three operations: zooming out to see the whole collection (image montage), temporal and spatial sampling, and remapping (rearranging the samples of media in new configurations). Achieving meaningful results with remapping techniques often involves experimentation and time. The first two methods usually produce informative results more quickly. Therefore, every time we assemble or download a new media data set, we next explore it using image montages and slices of all items.

Consider this definition of “browse” from Wiktionary: “To scan, to casually look through in order to find items of interest, especially without knowledge of what to look for beforehand” (“Browse,” n.d.). Also relevant is one of the meanings of the word “exploration”: “to travel somewhere in search of discovery” (“Explore,” n.d.). How can we discover interesting things in massive media collections? In other words, how can we browse through them efficiently and effectively, without prior knowledge of what we want to find? Media visualization techniques give us some basic ways of doing this.

ACKNOWLEDGMENTS

The research presented in this chapter was supported by an Interdisciplinary Collaboratory Grant, “Visualizing Cultural Patterns” (UCSD Chancellors Office, 2008–2010), Humanities High Performance Computing Award, “Visualizing Patterns in Databases of Cultural Images and Video” (NEH/DOE, 2009), Digital Startup Level II grant (NEH, 2010), and CSRO grant (Calit2, 2010). We also are very grateful to the California Institute for Information and Telecommunication (Calit2) and UCSD Center for Research in Computing and the Arts (CRCA) for their ongoing support. All the visualizations and software development are the result of systematic collaboration among the key lab members: Lev Manovich, Jeremy Douglass, Tara Zappel, and William Huber.

NOTES

1 http://chroniclingamerica.loc.gov/

3 http://www.loc.gov/pictures/

4 http://www.flickr.com/services/api/

5 For a good example of rich metadata in a media collection, see http://www.gettyimages.com/EditorialImages

6 http://www.tableausoftware.com

7 http://www-958.ibm.com/software/data/cognos/manyeyes

8 http://www.softwarestudies.com

12 http://www.softwarestudies.com

13 http://vis.ucsd.edu/mediawiki/index.php/Research_Projects:_HIPerSpace

REFERENCES

Art Now Flickr group. (n.d.). [Flickr group]. Retrieved June 26, 2011, from http://www.flickr.com/groups/37996597808@N01/

Browse. (n.d.). Retrieved June 26, 2011, from the Wiktionary wiki: http://en.wiktionary.org/wiki/browse

Bush, V. (1945). As we may think. Atlantic Monthly. Retrieved from http://www.theatlantic.com/magazine/archive/1945/07/as-we-may-think/3881/

Dawes, B. (2004). Cinema redux [Digital art project]. Retrieved from http://www.brendandawes.com/project/cinema-redux

Digging Into Data Challenge 2011. (2011). List of data repositories. Retrieved from http://www.diggingintodata.org/Repositories/tabid/167/Default.aspx

Digital image processing. (n.d.). Retrieved June 26, 2011, from the Wikipedia wiki: http://en.wikipedia.org/wiki/Digital_image_processing

Douglass, J., Huber, W., & Manovich, L. (2011). Understanding scanlation: How to read one million fan-translated manga pages. Image and Narrative, 12(1), 206–228. Retrieved June 26, 2011, from http://www.imageandnarrative.be/index.php/imagenarrative/article/viewFile/133/104

Explore. (n.d.). Retrieved June 26, 2011, from the Wiktionary wiki: http://en.wiktionary.org/wiki/explore

Flickr. (n.d.). The Flickr API. Retrieved June 26, 2011, from http://www.flickr.com/services/api/

Getty Images. (n.d.). Editorial images. Retrieved June 26, 2011, from http://www.gettyimages.com/EditorialImages

Harris, J. (2007). The whale hunt [Digital art project]. Retrieved from http://thewhalehunt.org/

Kenny, A. (1982). The computation of style: An introduction to statistics for students of literature and humanities. Oxford, UK: Pergamon Press.

Library of Congress. (n.d.). Farm Security Administration/Office of War Information black-and-white negatives [Online photo collection]. Retrieved June 26, 2011, from http://www.loc.gov/pictures/collection/fsa/about.html

Library of Congress. (n.d.). Prints and photographs online catalog. Retrieved June 26, 2011, from http://www.loc.gov/pictures/

Make Montage. (n.d.). ImageJ user guide. Retrieved June 26, 2011, from http://rsbweb.nih.gov/ij/docs/guide/userguide-25.html#toc-Subsection-25.6

Manovich, L. (2011). What is visualization? Visual Studies, 26(1), 36–49. Retrieved from http://lab.softwarestudies.com/2008/09/cultural-analytics.html

Manovich, L. (2012a). How to compare one million images? In D. Berry (Ed.), Computational turn. New York, NY: Macmillan. Retrieved from http://lab.softwarestudies.com/2008/09/cultural-analytics.html

Manovich, L. (2012b). Trending: The promises and the challenges of big social data. In M. Gold (Ed.), Debates in digital humanities. Minneapolis, MN: Minnesota University Press. Retrieved from http://lab.softwarestudies.com/2011/04/new-article-by-lev-manovich-trending.html

Orthogonal Views. (n.d.). ImageJ user guide. Retrieved June 26, 2011, from http://rsbweb.nih.gov/ij/docs/guide/userguide-25.html#toc-Subsection-25.6

Stemler, S. (2001). An overview of content analysis. Practical Assessment, Research and Evaluation, 7(17). Retrieved from http://PAREonline.net/getvn.asp?v=7&n=17

Text Analysis. (2011). Tooling up for digital humanities. Retrieved from http://toolingup.stanford.edu/?page_id=981

Yee, N. (2005). MMORG hours vs. TV hours. The Daedalus project. Retrieved June 26, 2011, from http://www.nickyee.com/daedalus/archives/000891.php