588 22. Visual Perception

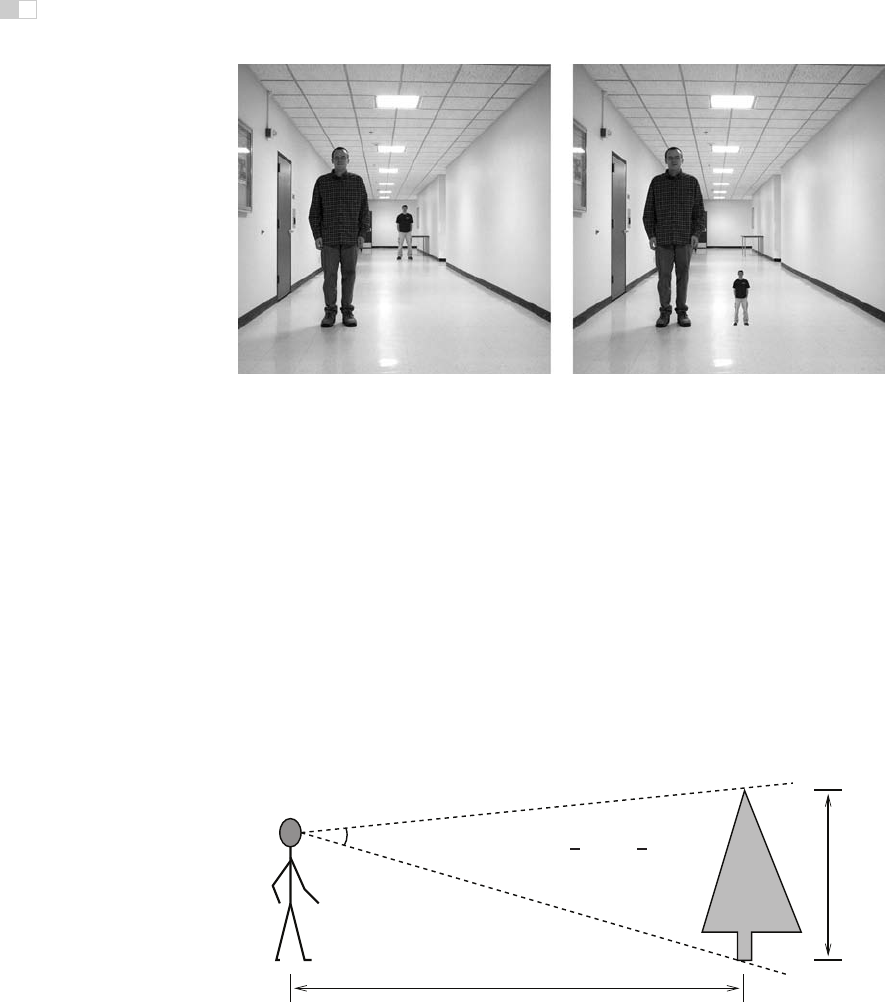

Figure 22.35. Left: perspective and familiar size cues are consistent. Right: perspective and familiar size cues are inconsistent. Images courtesy Peter Shirley, Scott Kuhl, and J. Dylan Lacewell.

project to a smaller retinal area, an effect called relative size. A more powerful

cue involves familiar size, which can provide information for absolute distance

to recognizable objects of known size. The strength of familiar size as a depth

cue can be seen in illusions such as Figure 22.35, in which it is put in conflict

with ground-plane, perspective-based depth cues. Familiar size is one part of the

size-distance relationship, relating the physical size of an object, the optical size

of the same object projected onto the retina, and the distance of the object from

the eye (Figure 22.36).
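As a minimal sketch of the size-distance relationship in Figure 22.36, the following Python functions convert between physical size, visual angle, and distance. The function names are illustrative rather than taken from the text, and the geometry assumes an object centered on, and perpendicular to, the line of sight.

```python
import math


def distance_from_size(size, theta):
    """Distance to an object of known size subtending visual angle theta (radians).

    Implements d = (1/2) * h * cot(theta / 2).
    """
    return 0.5 * size / math.tan(theta / 2.0)


def size_from_distance(distance, theta):
    """Size of an object at a known distance subtending visual angle theta (radians)."""
    return 2.0 * distance * math.tan(theta / 2.0)


def visual_angle(size, distance):
    """Visual angle (radians) subtended by an object of a given size and distance."""
    return 2.0 * math.atan(size / (2.0 * distance))


# Hypothetical example: a 1.8 m tall person subtending 2 degrees of visual angle.
d = distance_from_size(1.8, math.radians(2.0))
print(f"estimated distance: {d:.1f} m")
```

Under these assumptions the person is roughly 52 m away. Familiar size supplies the `size` argument; without it, the visual angle alone constrains only the ratio of size to distance.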

[Diagram: an object of size h at distance d subtends visual angle θ.]

$$d \approx \frac{1}{2}\, h \cot\frac{\theta}{2}$$

Figure 22.36. The size-distance relationship allows the distance to objects of known size to be determined based on the visual angle subtended by the object. Likewise, the size of an object at a known distance can be determined based on the visual angle subtended by the object.

22.4. Objects, Locations, and Events 589

[Diagram labels: points a, b, c; extents s and h; viewpoint height v; object height o.]

$$o \approx v \cdot \frac{s}{h}$$

(a) (b)

Figure 22.37. (a) The horizon ratio can be used to determine depth by comparing the visible portion of an object below the horizon to the total vertical visible extent of the object. (b) A real-world example.

When objects are sitting on top of a flat ground plane, additional sources for depth information become available, particularly when the horizon is either visible or can be derived from other perspective information. The angle of declination to the contact point on the ground is a relative depth cue and provides absolute egocentric distance when scaled by eye height, as previously shown in Figure 22.25. The horizon ratio, in which the total visible height of an object is compared with the visible extent of that portion of the object appearing below the horizon, can be used to determine the actual size of objects, even when the distance to the objects is not known (Figure 22.37). Underlying the horizon ratio is the fact that for a flat ground plane, the line of sight to the horizon intersects objects at a position that is exactly an eye height above the ground.
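The two ground-plane cues can be sketched in a few lines of Python. This is an illustration under stated assumptions, not a model of the visual system: the ground is flat, the object rests on it, and image extents are measured in any consistent unit. The function names are hypothetical, and the horizon-ratio function reads s in Figure 22.37 as the total visible extent of the object and h as the portion below the horizon, which is the reading consistent with the caption.

```python
import math


def distance_from_declination(eye_height, declination):
    """Ground distance to a contact point seen `declination` radians below the horizon."""
    return eye_height / math.tan(declination)


def object_height_from_horizon_ratio(eye_height, total_extent, extent_below_horizon):
    """Physical object height from the horizon ratio, o = v * s / h.

    The horizon cuts the object exactly one eye height above the ground, so the
    image extent below the horizon corresponds to `eye_height` in world units.
    """
    return eye_height * total_extent / extent_below_horizon


# Hypothetical example: eye height 1.6 m; the object spans 300 pixels in the
# image, 100 of which lie below the horizon.
print(object_height_from_horizon_ratio(1.6, 300.0, 100.0))

# A contact point seen about 9.1 degrees below the horizon from 1.6 m eye height.
print(distance_from_declination(1.6, math.atan2(1.6, 10.0)))
```

Note that the horizon ratio needs no distance estimate at all, whereas the declination cue yields distance directly but depends on knowing where the object touches the ground.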

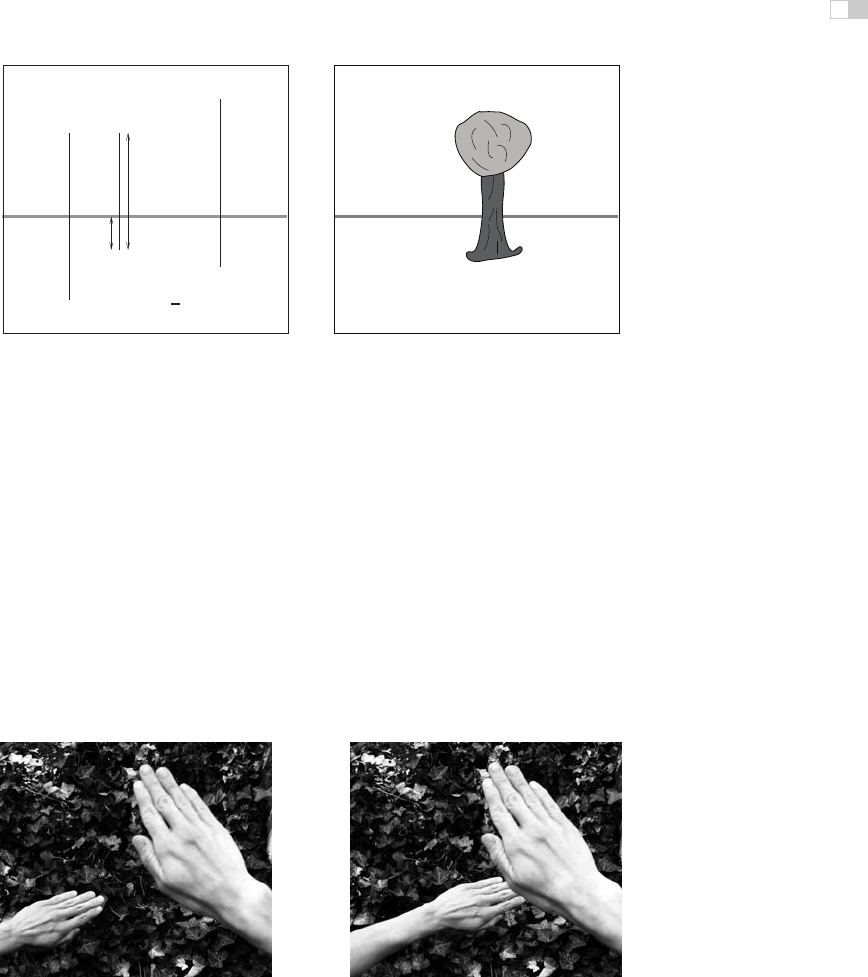

(a) (b)

Figure 22.38. (a) Size constancy makes hands positioned at different distances from the eye appear to be nearly the same size for real-world viewing, even though the retinal sizes are quite different. (b) The effect is less strong when one hand is partially occluded by the other, particularly when one eye is closed. Images courtesy Peter Shirley and Pat Moulis.


The human visual system determines the absolute size of most viewed objects well enough that our perception of size is dominated by actual physical size, and we have almost no conscious awareness of the corresponding retinal size of objects. This is similar to lightness constancy, discussed earlier, in that our perception is dominated by inferred properties of the world, not the low-level features actually sensed by photoreceptors in the retina. Gregory (1997) describes a simple example of size constancy. Hold your two hands out in front of you, one at arm's length and the other at half that distance away from you (Figure 22.38(a)). Your two hands will look almost the same size, even though the retinal sizes differ by a factor of two. The effect is much less strong if the nearer hand partially occludes the more distant hand, particularly if you close one eye (Figure 22.38(b)). The visual system also exhibits shape constancy, where the perception of geometric structure is closer to actual object geometry than might be expected given the distortions of the retinal image due to perspective (Figure 22.39).

Figure 22.39. Shape constancy: the table looks rectangular even though its shape in the image is an irregular four-sided polygon.

22.4.3 Events

Most aspects of event perception are beyond the scope of this chapter, since they

involve complex non-visual cognitive processes. Three types of event perception

are primarily visual, however, and are also of clear relevance to computer graph-

ics. Vision is capable of providing information about how a person is moving in

the world, the existence of independently moving objects in the world, and the

potential for collisions either due to observer motion or due to objects moving

towards the observer.

Vision can be used to determine rotation and the direction of translation rel-

ative to the environment. The simplest case involves movement towards a flat

surface oriented perpendicularly to the line of sight. Presuming that there is suffi-

cient surface texture to enable the recovery of optic flow, the flow field will form

a symmetric pattern as shown in Figure 22.40(a). The location in the field of view

of the focus of expansion of the flow field will have an associated line of sight

corresponding to the direction of translation. While optic flow can be used to vi-

sually determine the direction of motion, it does not contain enough information

to determine speed. To see this, consider the situation in which the world is made

twice as large and the viewer moves twice as fast. The decrease in the magnitude

of flow values due to the doubling of distances is exactly compensated for by the

increase in the magnitude of flow values due to the doubling of velocity, resulting

in an identical flow field.
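The scale ambiguity can be checked numerically. The sketch below assumes a pinhole camera with unit focal length and a point at camera coordinates (X, Y, Z), with Z along the line of sight; the observer translates with velocity (Tx, Ty, Tz) through a static world. The function name and the example numbers are illustrative, not from the text.

```python
def translational_flow(point, velocity):
    """Image-plane flow (u, v) of a static point for a purely translating observer.

    With x = X / Z and y = Y / Z, differentiating gives
    u = (x * Tz - Tx) / Z and v = (y * Tz - Ty) / Z.
    """
    X, Y, Z = point
    Tx, Ty, Tz = velocity
    x, y = X / Z, Y / Z
    return ((x * Tz - Tx) / Z, (y * Tz - Ty) / Z)


point = (0.5, -0.25, 4.0)     # a surface point 4 units ahead
velocity = (0.0, 0.0, 1.0)    # moving straight along the line of sight

flow = translational_flow(point, velocity)

# Make the world twice as large and move twice as fast.
scaled_flow = translational_flow(
    tuple(2.0 * c for c in point), tuple(2.0 * c for c in velocity)
)

print(flow, scaled_flow)  # the two flow vectors coincide
```

Because every flow component has the form velocity divided by depth, only the ratio of speed to distance is recoverable. For forward motion the flow vanishes at x = y = 0, which is the focus of expansion in this setup.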

Figure 22.40(b) shows the optic flow field resulting from the viewer (or more

accurately, the viewer’s eyes) rotating around the vertical axis. Unlike the situa-

i

i

i

i

i

i

i

i

22.4. Objects, Locations, and Events 591

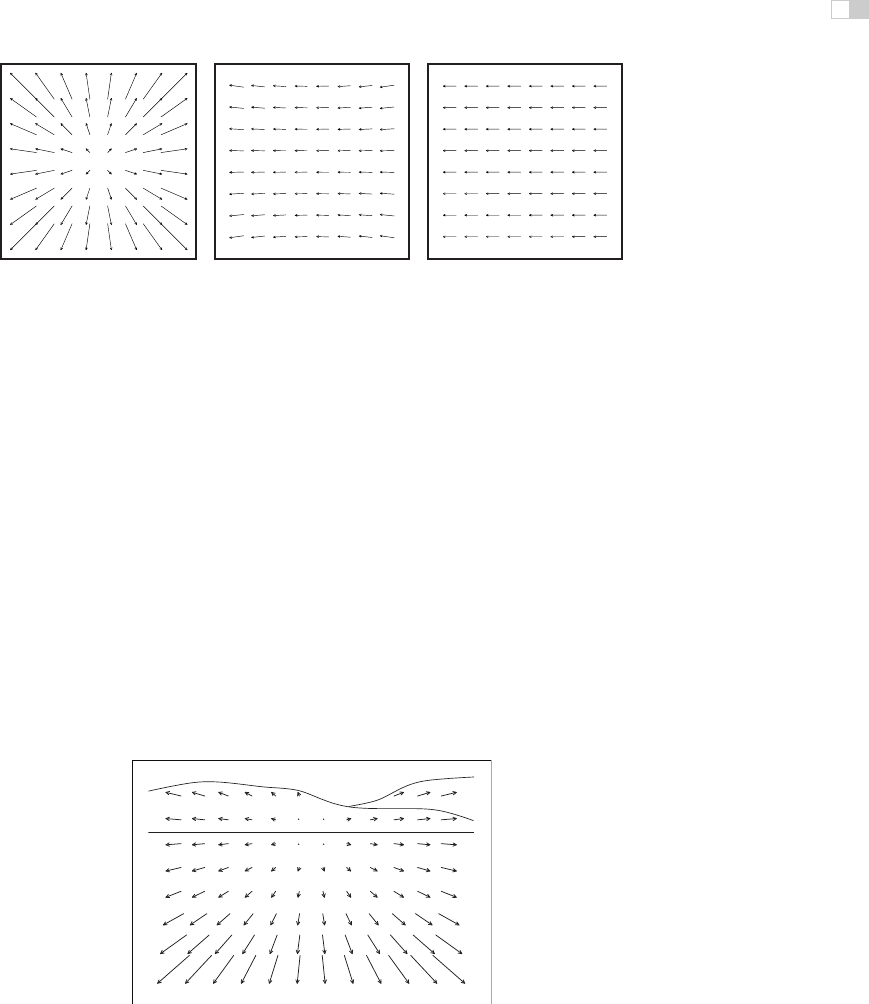

(a) (b) (c)

Figure 22.40. (a) Movement towards a flat, textured surface produces an expanding flow

field, with the

focus of expansion

indicating the line of sight corresponding to the direction

of motion. (b) The flow field resulting from rotation around the vertical axis while viewing

a flat surface oriented perpendicularly to the line of sight. (c) The flow field resulting from

translation parallel to a flat, textured surface.

tion with respect to translational motion, optic flow provides sufficient informa-

tion to determine both the axis of rotation and the (angular) speed of rotation. The

practical problem in exploiting this is that the flow resulting from pure rotational

motion around an axis perpendicular to the line of sight is quite similar to the

flow resulting from pure translation in the direction that is perpendicular to both

the line of sight and this rotational axis, making it difficult to visually discriminate

between the two very different types of motion (Figure 22.40(c)). Figure 22.41

shows the optical flow patterns generated by movement through a more realistic

environment.
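To see why the two motions are hard to tell apart, the following sketch compares the flow from rotation about the vertical axis with the flow from sideways translation past a flat surface facing the viewer. It assumes a pinhole camera with unit focal length and one particular sign convention; other conventions flip signs but not the conclusion. The function names and numbers are illustrative.

```python
def rotational_flow(x, y, omega):
    """Flow at image point (x, y) for rotation about the vertical axis at omega rad/s.

    The result does not depend on scene depth.
    """
    return (-omega * (1.0 + x * x), -omega * x * y)


def lateral_translation_flow(speed, depth):
    """Flow for sideways translation past a frontoparallel plane at a fixed depth.

    Every image point moves by the same amount, which depends on speed / depth.
    """
    return (-speed / depth, 0.0)


omega = 0.1                # rad/s
speed, depth = 0.4, 4.0    # chosen so that speed / depth equals omega

for x, y in [(0.0, 0.0), (0.1, 0.1), (0.5, 0.5)]:
    ru, rv = rotational_flow(x, y, omega)
    tu, tv = lateral_translation_flow(speed, depth)
    print((x, y), "difference:", (ru - tu, rv - tv))
```

In this setup the two fields agree exactly at the image center and differ only by terms that grow with x squared and xy, so the distinction is small over a narrow field of view and becomes clearer toward the periphery.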

Figure 22.41. The optic flow generated by moving through an otherwise static environment provides information about both the motion relative to the environment and the distances to points in the environment. In this case, the direction of view is depressed from the horizon, but as indicated by the focus of expansion, the motion is parallel to the ground plane.

If a viewer is completely stationary, visual detection of moving objects is easy, since such objects will be associated with the only non-zero optic flow in the field of view. The situation is considerably more complicated when the observer is moving, since the visual field will be dominated by non-zero flow, most or all of which is due to relative motion between the observer and the static environment (Thompson & Pong, 1990). In such cases, the visual system must be sensitive to patterns in the optic flow field that are inconsistent with flow fields associated with observer movement relative to a static environment (Figure 22.42).

Figure 22.42. Visual detection of moving objects from a moving observation point requires recognizing patterns in the optic flow that cannot be associated with motion through a static environment.

Section 22.3.4 described how vision can be used to determine time to contact with a point in the environment even when the speed of motion is not known. Assuming a viewer moving with a straight, constant-speed trajectory and no independently moving objects in the world, contact will be made with whatever surface is in the direction of the line of sight corresponding to the focus of expansion at a time indicated by the τ relationship. Independently moving objects complicate the matter of determining if a collision will in fact occur. Sailors use a method for detecting potential collisions that may also be employed in the human visual system: for non-accelerating straight-line motion, collisions will occur with objects that are visually expanding but otherwise remain visually stationary in the egocentric frame of reference.
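Both ideas can be sketched briefly in Python. The sketch assumes the common form of the τ relationship, in which time to contact is the visual angle of an object divided by its rate of expansion, and it treats "visually stationary" as a bearing that changes by less than a small tolerance. The function names and the tolerance value are hypothetical choices for illustration.

```python
def time_to_contact(theta, theta_rate):
    """Estimated time to contact from visual angle (rad) and its rate of change (rad/s).

    Returns infinity when the object is not expanding in the visual field.
    """
    if theta_rate <= 0.0:
        return float("inf")
    return theta / theta_rate


def on_collision_course(bearing_rate, theta_rate, bearing_tolerance=1e-3):
    """Constant-bearing test: expanding in the image while its direction stays fixed."""
    return theta_rate > 0.0 and abs(bearing_rate) < bearing_tolerance


# Hypothetical example using the small-angle approximation: an object 1 m wide,
# 50 m away, closing at 10 m/s.
size, distance, closing_speed = 1.0, 50.0, 10.0
theta = size / distance                            # visual angle in radians
theta_rate = size * closing_speed / distance**2    # its rate of expansion

print(time_to_contact(theta, theta_rate))          # about 5 s, equal to distance / speed
print(on_collision_course(0.0, theta_rate))        # bearing fixed and expanding
print(on_collision_course(0.05, theta_rate))       # bearing drifting, so a miss
```

Neither function needs the object's size, distance, or speed as input; those values appear here only to generate plausible optical measurements.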

One form of more complex event perception merits discussion here, since it is

so important in interactive computer graphics. People are particularly sensitive to

motion corresponding to human movement. Locomotion can be recognized when

the only features visible are lights on the walker’s joints (Johansson, 1973). Such

moving light displays are often even sufficient to recognize properties such as the

sex of the walker and the weight of the load that the walker may be carrying.

In computer graphics renderings, viewers will notice even small inaccuracies in

animated characters, particularly if they are intended to mimic human motion.

The term visual attention covers a range of phenomena from where we point our eyes to cognitive effects involving what we notice in a complex scene and how

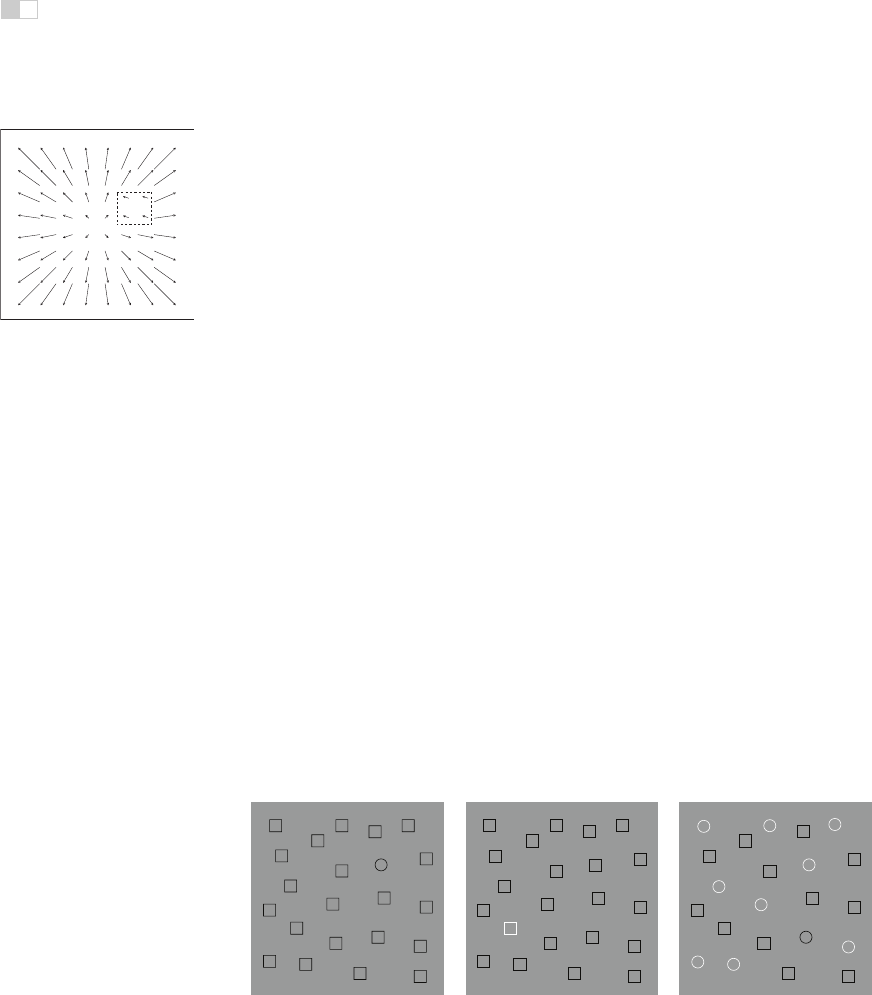

(a) (b) (c)

Figure 22.43. In (a) and (b), visual attention is quickly drawn to the item of different shape

or color. In (c), sequential search appears to be necessary in order to find the one item that

differs in both shape and color.
