Standard Performance Evaluation Corporation OpenMP (SPECOMP) is a well-known HPC benchmark suite for multi-threaded applications running within a single shared-memory system. Each benchmark (for example, Swim) is listed along the x-axis.

For each benchmark, there are three pairs of comparisons: one for a 16-way (16-vCPU) VM, one for a 32-way VM, and one for a 64-way VM. Default-16 is the performance of a 16-way VM with no vNUMA support; vNUMA-16 is the same 16-way VM with vNUMA enabled.

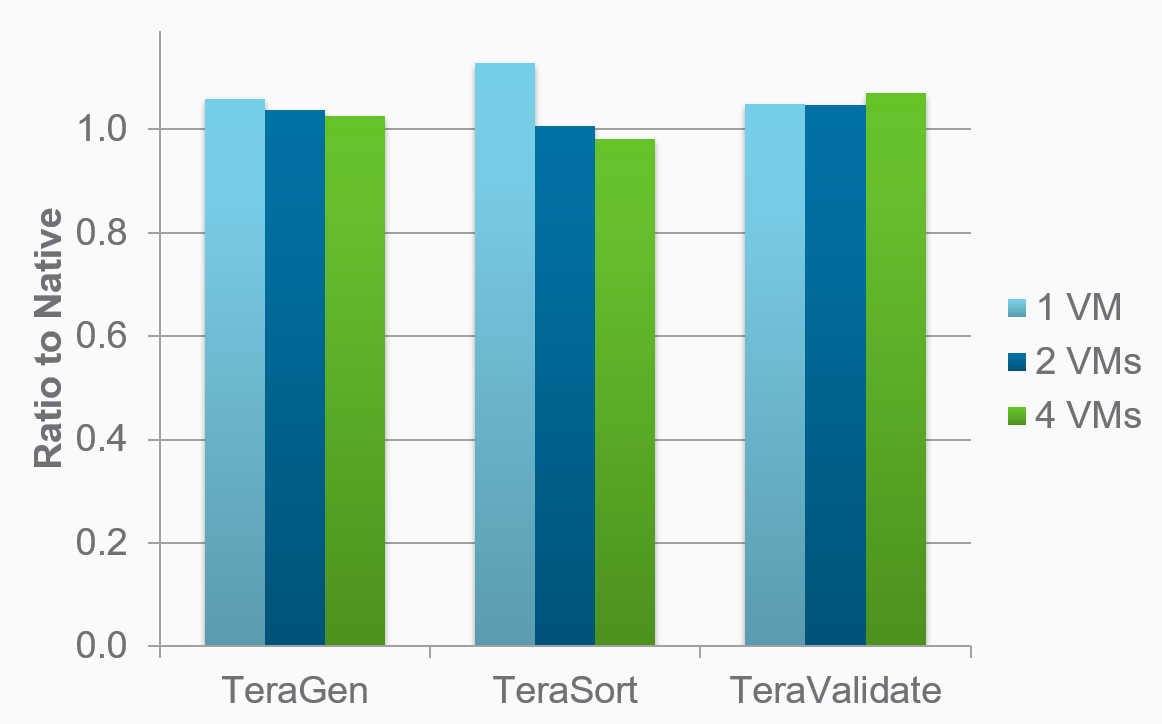

Ratio to Native, lower is better:

The chart shows run times, so lower is better. Run times drop significantly in virtually all cases when moving from the default configuration to vNUMA. This is a hugely important feature for HPC users who need wide VMs.

These charts show published performance results for a variety of life sciences workloads running on ESXi. They show that these throughput-oriented applications generally run with under a 5% penalty when virtualized. More recent reports from customers indicate that this entire class of throughput applications, which includes not just life sciences but also financial services, electronic design automation (EDA), and digital content creation (movie rendering, and so on), runs with well under a 5% performance degradation. Achieving these results requires platform tuning, not application tuning.

One of our EDA (chip design) customers reported results from two tests. They first ran a single instance of one of their EDA jobs on a bare-metal Linux node. They then ran the same Linux and the same job on ESXi and compared the results. They saw a 6% performance degradation. We believe this would be lower with additional platform tuning.

They then ran a second test: four instances of the application running in a single bare-metal Linux instance versus four VMs, each running one instance of the same job, so the workload is the same in both cases. In this configuration, the virtual jobs completed 2% sooner than the bare-metal jobs.
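To make the two results comparable with the "ratio to native" charts, the arithmetic can be sketched as follows. This is only an illustration: the function names and the 100-second baseline are hypothetical, only the 6% and 2% figures come from the text, and "2% sooner" is assumed here to mean a 2% shorter run time.

```python
def ratio_to_native(virtual_seconds: float, native_seconds: float) -> float:
    """Return the virtualized run time divided by the bare-metal run time."""
    if native_seconds <= 0:
        raise ValueError("native_seconds must be positive")
    return virtual_seconds / native_seconds


def overhead_percent(ratio: float) -> float:
    """Convert a run-time ratio to a percentage overhead (negative means faster)."""
    return (ratio - 1.0) * 100.0


# Hypothetical baseline run time; only the percentages are from the text.
native = 100.0
single_job = ratio_to_native(106.0, native)  # one job: 6% slower when virtualized
four_jobs = ratio_to_native(98.0, native)    # four jobs: assumed 2% shorter run time

print(f"single job: ratio {single_job:.2f}, overhead {overhead_percent(single_job):+.1f}%")
print(f"four jobs:  ratio {four_jobs:.2f}, overhead {overhead_percent(four_jobs):+.1f}%")
```

A ratio above 1.0 means the virtualized run was slower than bare metal, and a ratio below 1.0 means it was faster, which is why lower is better on the charts.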

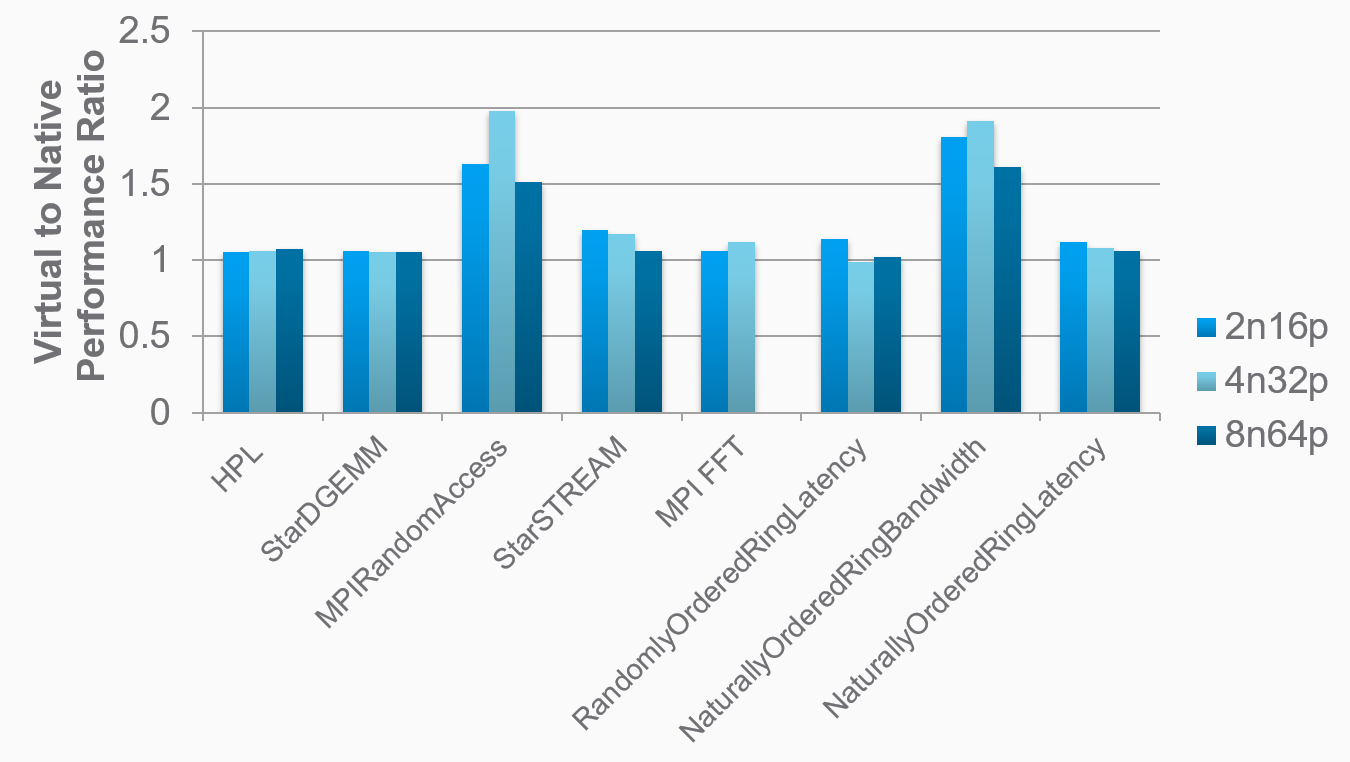

HPCC performance ratios (lower is better):

This speedup usually results from a NUMA effect and an OS scheduling effect. On bare metal, the single Linux instance must balance resources among the four job instances, and it must also handle the NUMA issues that come with a multi-socket system.

Virtualization helps in two ways:

- Each Linux instance must handle only one job.

- Because the ESXi scheduler is NUMA-aware, each VM is scheduled onto a single socket, so none of the Linux instances has to suffer the potential inefficiencies of dealing with NUMA issues.

We don't have to worry about multiple Linux instances and VMs consuming more memory: transparent page sharing (TPS) can mitigate this, because the hypervisor finds identical pages across VMs and shares them where possible.
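The idea behind page sharing can be illustrated with a toy model. This is not ESXi's implementation: the 4 KiB page size, the function names, and the use of SHA-256 as the only identity check are all assumptions made for this sketch, and a real hypervisor also has to handle writes to shared pages.

```python
import hashlib

PAGE_SIZE = 4096  # bytes; assumed page size for this toy model


def split_pages(memory: bytes, page_size: int = PAGE_SIZE) -> list[bytes]:
    """Cut a memory image into fixed-size pages."""
    return [memory[i:i + page_size] for i in range(0, len(memory), page_size)]


def shared_page_count(vm_memories: list[bytes]) -> tuple[int, int]:
    """Return (total_pages, unique_pages) across all VM memory images."""
    store: dict[str, bytes] = {}  # content hash -> the single stored copy
    total = 0
    for memory in vm_memories:
        for page in split_pages(memory):
            total += 1
            digest = hashlib.sha256(page).hexdigest()
            store.setdefault(digest, page)  # identical pages collapse to one entry
    return total, len(store)


# Four hypothetical VMs: three pages common to all (for example, the same
# guest OS code) plus one page that is private to each VM.
common = b"".join(bytes([n]) * PAGE_SIZE for n in (1, 2, 3))
vms = [common + bytes([10 + i]) * PAGE_SIZE for i in range(4)]

total, unique = shared_page_count(vms)
print(f"{total} guest pages backed by {unique} stored pages")
```

In this example the four VMs present 16 pages in total, but only 7 distinct pages need to be stored, because the three common pages are kept once.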