First, let's modify the current simulation so that it is easier to work with perception. We will create a new file named table.urdf in our workspace and add the following code to it:

<robot name="simple_box">
  <link name="my_box">
    <inertial>
      <origin xyz="0 0 0.0145"/>
      <mass value="0.1"/>
      <inertia ixx="0.0001" ixy="0.0" ixz="0.0" iyy="0.0001" iyz="0.0" izz="0.0001"/>
    </inertial>
    <visual>
      <origin xyz="-0.23 0 0.215"/>
      <geometry>
        <box size="0.47 0.46 1.3"/>
      </geometry>
    </visual>
    <collision>
      <origin xyz="-0.23 0 0.215"/>
      <geometry>
        <box size="0.47 0.46 1.3"/>
      </geometry>
    </collision>
  </link>
  <gazebo reference="my_box">
    <material>Gazebo/Wood</material>
  </gazebo>
  <gazebo>
    <static>true</static>
  </gazebo>
</robot>
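Before spawning the model, it can help to sanity-check the geometry. The following is a minimal sketch, not part of the original workflow, that parses a trimmed copy of the URDF with Python's standard library, confirms that the visual and collision boxes agree, and reports the box's vertical extent in the link frame. The helper names `floats`, `box_of`, and `extents` are hypothetical, and the embedded URDF keeps only the elements the check needs.

```python
import xml.etree.ElementTree as ET

# Trimmed copy of table.urdf: only the visual and collision elements are kept.
URDF = """<robot name="simple_box">
  <link name="my_box">
    <visual>
      <origin xyz="-0.23 0 0.215"/>
      <geometry><box size="0.47 0.46 1.3"/></geometry>
    </visual>
    <collision>
      <origin xyz="-0.23 0 0.215"/>
      <geometry><box size="0.47 0.46 1.3"/></geometry>
    </collision>
  </link>
</robot>"""


def floats(text):
    """Convert a space-separated attribute such as "0 0 1" to a list of floats."""
    return [float(v) for v in text.split()]


def box_of(link, tag):
    """Return (origin, size) for the <visual> or <collision> box of a link."""
    elem = link.find(tag)
    origin = floats(elem.find("origin").get("xyz"))
    size = floats(elem.find("geometry/box").get("size"))
    return origin, size


def extents(origin, size):
    """Axis-aligned (min, max) pair per axis, expressed in the link frame."""
    return [(o - s / 2.0, o + s / 2.0) for o, s in zip(origin, size)]


link = ET.fromstring(URDF).find("link")
visual = box_of(link, "visual")
collision = box_of(link, "collision")
matches = visual == collision
z_min, z_max = extents(*visual)[2]

print("visual == collision:", matches)
print("z range in link frame: %.3f to %.3f m" % (z_min, z_max))
```

With the values above, the box spans roughly -0.435 m to 0.865 m along z in the link frame, which is worth keeping in mind when choosing the spawn height.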

Next, we will execute the following command to spawn the object right in front of the Fetch robot:

$ rosrun gazebo_ros spawn_model -file /home/user/catkin_ws/src/table.urdf -urdf -x 1 -model my_object
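If the object has to be spawned repeatedly, for example at different positions, the same command can be assembled from a script. The sketch below only builds the argument list; the function name `build_spawn_command` is hypothetical. It uses the flags from the command above plus `-y` and `-z`, which `spawn_model` also accepts for the remaining position coordinates.

```python
def build_spawn_command(urdf_path, model_name, x=0.0, y=0.0, z=0.0):
    """Return the argv list for spawning a URDF model with gazebo_ros spawn_model."""
    return [
        "rosrun", "gazebo_ros", "spawn_model",
        "-file", urdf_path,
        "-urdf",
        "-x", str(x), "-y", str(y), "-z", str(z),
        "-model", model_name,
    ]


cmd = build_spawn_command(
    "/home/user/catkin_ws/src/table.urdf", "my_object", x=1
)
print(" ".join(cmd))
```

In a terminal where the ROS environment has been sourced and Gazebo is running, the list could be passed to `subprocess.run(cmd, check=True)` to perform the spawn.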

The result is shown in the following screenshot:

Robot with an object in the environment

Then, we will execute the following command to move the Fetch robot's head so that it points at the newly spawned object:

$ roslaunch fetch_gazebo_demo move_head.launch
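The contents of the launch file are not shown here. As a rough illustration of the geometry behind pointing a head at an object, the sketch below computes the pan and tilt angles needed to look at a target point. It assumes the target is expressed relative to the head's pan/tilt pivot with x forward, y left, and z up, and that a positive tilt means looking down; the function name `pan_tilt_to_target` is hypothetical, and the sign conventions should be checked against the robot's actual joint definitions.

```python
import math


def pan_tilt_to_target(x, y, z):
    """Return (pan, tilt) in radians for looking at the point (x, y, z).

    Assumed conventions: x forward, y left, z up, measured from the head's
    pan/tilt pivot; positive tilt looks down.
    """
    pan = math.atan2(y, x)                    # rotation about the vertical axis
    tilt = math.atan2(-z, math.hypot(x, y))   # downward angle from horizontal
    return pan, tilt


# A target 1 m ahead and 1 m below the pivot needs a 45 degree downward tilt.
pan, tilt = pan_tilt_to_target(1.0, 0.0, -1.0)
print("pan = %.3f rad, tilt = %.3f rad" % (pan, tilt))
```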

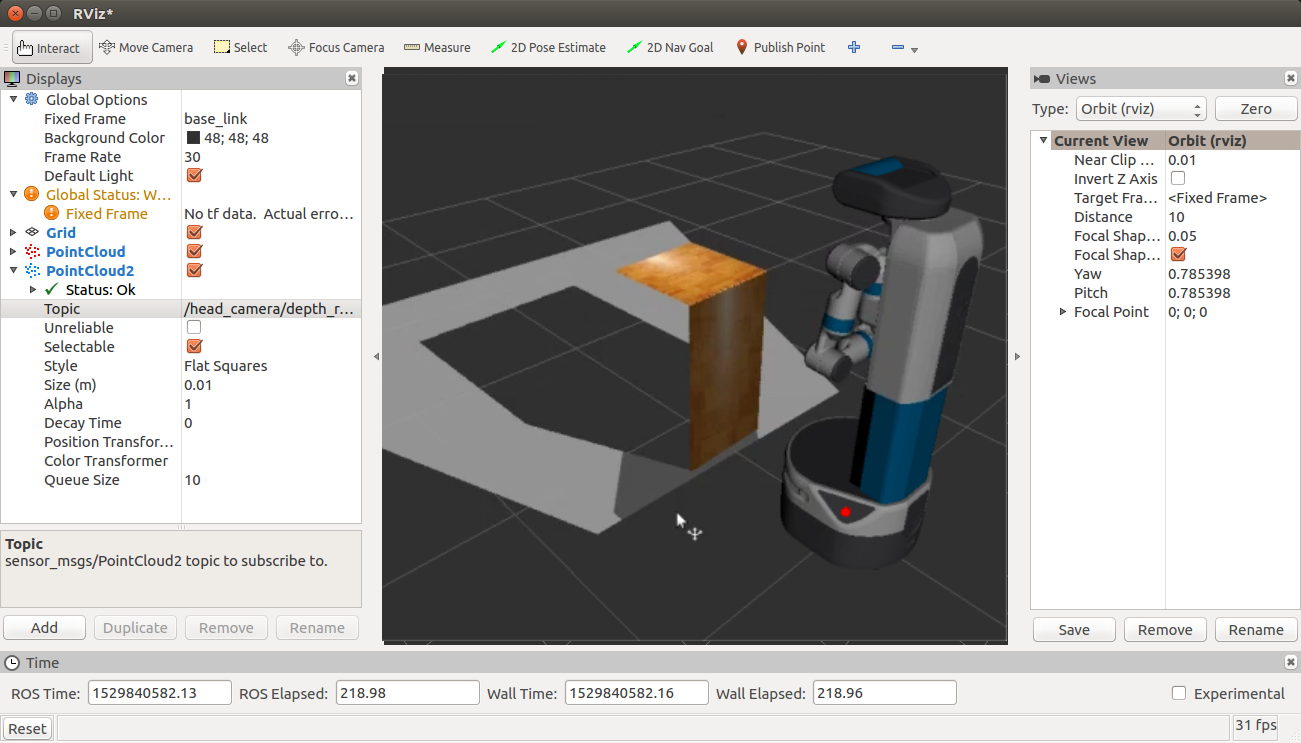

We can also launch RViz and add a PointCloud2 display to visualize the point cloud from the camera, as shown in the following screenshot:

Point cloud in RViz
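To make the data behind that display more concrete, the following sketch decodes XYZ points from a packed byte buffer laid out the way a `sensor_msgs/PointCloud2` message stores its `data` field, with a fixed `point_step` per point and float32 coordinates at known offsets. It runs without ROS on a hand-built buffer. The offsets 0, 4, and 8 and the 16-byte point step are assumptions for illustration, since a real message declares them in its `fields` list, and the function name `iter_xyz` is hypothetical.

```python
import math
import struct


def iter_xyz(data, point_step, x_off=0, y_off=4, z_off=8, big_endian=False):
    """Yield (x, y, z) tuples from a packed point buffer, skipping NaN points."""
    fmt = (">" if big_endian else "<") + "f"  # one float32 in the given byte order
    for base in range(0, len(data) - point_step + 1, point_step):
        x = struct.unpack_from(fmt, data, base + x_off)[0]
        y = struct.unpack_from(fmt, data, base + y_off)[0]
        z = struct.unpack_from(fmt, data, base + z_off)[0]
        if math.isnan(x) or math.isnan(y) or math.isnan(z):
            continue  # depth cameras mark invalid pixels with NaN
        yield (x, y, z)


# Hand-built buffer: three points of 16 bytes each (x, y, z, 4 bytes of padding).
nan = float("nan")
buffer = b"".join(
    struct.pack("<ffff", *point)
    for point in [(1.0, 0.5, 0.25, 0.0), (nan, nan, nan, 0.0), (2.0, -0.5, 0.75, 0.0)]
)

points = list(iter_xyz(buffer, point_step=16))
print(points)
```

In a ROS node, the same values are usually obtained through the helper functions that ship with `sensor_msgs` rather than by unpacking bytes by hand; the manual version is shown only to expose the layout.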