Now that we have the target object defined in the scene, we need to generate grasping poses to pick it up. To achieve this aim, we use the grasp generator server from the moveit_simple_grasps package, which can be found at https://github.com/davetcoleman/moveit_simple_grasps.

Unfortunately, there isn't a Debian package available for ROS Kinetic on Ubuntu. Therefore, we need to run the following commands inside the src folder of the workspace to add the kinetic-devel branch to it:

$ wstool set moveit_simple_grasps --git https://github.com/davetcoleman/moveit_simple_grasps.git -v kinetic-devel

$ wstool up moveit_simple_grasps

We can build this using the following commands:

$ cd ..

$ catkin_make

Now we can run the grasp generator server as follows (remember to source devel/setup.bash):

$ roslaunch rosbook_arm_pick_and_place grasp_generator_server.launch

The grasp generator server needs the following grasp data configuration in our case:

    base_link: base_link

    gripper:
      end_effector_name: gripper

      # Default grasp params
      joints: [finger_1_joint, finger_2_joint]

      pregrasp_posture: [0.0, 0.0]
      pregrasp_time_from_start: &time_from_start 4.0

      grasp_posture: [1.0, 1.0]
      grasp_time_from_start: *time_from_start

      postplace_time_from_start: *time_from_start

      # Desired pose from end effector to grasp [x, y, z] + [R, P, Y]
      grasp_pose_to_eef: [0.0, 0.0, 0.0]
      grasp_pose_to_eef_rotation: [0.0, 0.0, 0.0]

      end_effector_parent_link: tool_link

In short, this defines the gripper we are going to use to grasp objects, its pre-grasp and grasp postures, and the timing of each motion.
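A quick sanity check on this configuration can catch a mismatch before the server is launched. The sketch below mirrors the values above in a plain Python dictionary (in practice they would be loaded from the YAML file) and checks the list lengths. The helper name `check_grasp_data` is hypothetical and not part of moveit_simple_grasps, and the rule that each posture holds one value per joint is an assumption based on the postures being positions for the listed joints.

```python
# Hypothetical helper: not part of moveit_simple_grasps.
TIME_FROM_START = 4.0  # plays the role of the &time_from_start YAML anchor

GRASP_DATA = {
    'base_link': 'base_link',
    'gripper': {
        'end_effector_name': 'gripper',
        'joints': ['finger_1_joint', 'finger_2_joint'],
        'pregrasp_posture': [0.0, 0.0],
        'pregrasp_time_from_start': TIME_FROM_START,
        'grasp_posture': [1.0, 1.0],
        'grasp_time_from_start': TIME_FROM_START,
        'postplace_time_from_start': TIME_FROM_START,
        'grasp_pose_to_eef': [0.0, 0.0, 0.0],
        'grasp_pose_to_eef_rotation': [0.0, 0.0, 0.0],
        'end_effector_parent_link': 'tool_link',
    },
}


def check_grasp_data(data):
    """Return a list of problems found in a grasp data dictionary."""
    problems = []
    gripper = data.get('gripper', {})
    n_joints = len(gripper.get('joints', []))
    # Assumption: each posture holds one position per listed joint.
    for key in ('pregrasp_posture', 'grasp_posture'):
        if len(gripper.get(key, [])) != n_joints:
            problems.append('%s must have %d values' % (key, n_joints))
    # The offset and rotation are 3-vectors: [x, y, z] and [R, P, Y].
    for key in ('grasp_pose_to_eef', 'grasp_pose_to_eef_rotation'):
        if len(gripper.get(key, [])) != 3:
            problems.append('%s must have 3 values' % key)
    return problems


print(check_grasp_data(GRASP_DATA))  # prints []
```

An empty list means the configuration passed these checks; each string in a non-empty list names the offending key.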

Now we need an action client to query for the grasp poses. This is done inside the pick_and_place.py program, right before we try to pick up the target object. So, we create an action client using the following code:

    # Imports needed at the top of the file:
    #   import rospy
    #   from actionlib import SimpleActionClient
    #   from moveit_simple_grasps.msg import GenerateGraspsAction

    # Create grasp generator 'generate' action client:
    self._grasps_ac = SimpleActionClient('/moveit_simple_grasps_server/generate',
                                         GenerateGraspsAction)
    if not self._grasps_ac.wait_for_server(rospy.Duration(5.0)):
        rospy.logerr('Grasp generator action client not available!')
        rospy.signal_shutdown('Grasp generator action client not available!')
        return

Inside the _pickup method, we use the following code to obtain the grasp poses:

grasps = self._generate_grasps(self._pose_coke_can, width)

Here, the width argument specifies the width of the object to grasp. The _generate_grasps method does the following:

    def _generate_grasps(self, pose, width):
        # Create goal:
        goal = GenerateGraspsGoal()
        goal.pose = pose
        goal.width = width

        # Send goal and wait for result:
        state = self._grasps_ac.send_goal_and_wait(goal)
        if state != GoalStatus.SUCCEEDED:
            rospy.logerr('Grasp goal failed!: %s' %
                         self._grasps_ac.get_goal_status_text())
            return None

        grasps = self._grasps_ac.get_result().grasps

        # Publish grasps (for debugging/visualization purposes):
        self._publish_grasps(grasps)

        return grasps

To summarize, it sends an actionlib goal to obtain a set of grasping poses for the target pose given in the goal (usually the object centroid). In the code provided with the section, some options are commented out; they can be enabled to query only for particular types of grasps, such as specific angles, or grasps pointing up or down. The function returns all the grasping poses that the pickup action will try later. Having multiple grasping poses increases the chance of a successful grasp.
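Note that _generate_grasps returns None when the goal does not succeed, so the caller has to check for that before attempting a pickup. The following minimal sketch reproduces the same request and response flow without a running ROS master. `FakeGraspClient` is a hypothetical stand-in that imitates only the three SimpleActionClient calls used above, plain namespaces replace the real goal and result messages, and `print` replaces `rospy.logerr`. Only the numeric status values are taken from actionlib_msgs/GoalStatus.

```python
from types import SimpleNamespace

# Same numeric values as actionlib_msgs/GoalStatus.SUCCEEDED and ABORTED.
SUCCEEDED, ABORTED = 3, 4


class FakeGraspClient:
    """Hypothetical stand-in for the grasp generator action client."""

    def __init__(self, state, grasps=(), text=''):
        self._state = state
        self._grasps = list(grasps)
        self._text = text
        self.last_goal = None

    def send_goal_and_wait(self, goal):
        # Record the goal and report the preconfigured terminal state.
        self.last_goal = goal
        return self._state

    def get_goal_status_text(self):
        return self._text

    def get_result(self):
        return SimpleNamespace(grasps=self._grasps)


def generate_grasps(client, pose, width):
    """Same flow as _generate_grasps, minus ROS logging and publishing."""
    goal = SimpleNamespace(pose=pose, width=width)
    if client.send_goal_and_wait(goal) != SUCCEEDED:
        print('Grasp goal failed!: %s' % client.get_goal_status_text())
        return None
    return client.get_result().grasps


# Usage: a failed goal yields None, which the caller must handle.
client = FakeGraspClient(ABORTED, text='no valid grasps')
grasps = generate_grasps(client, pose=None, width=0.06)
if grasps is None:
    print('Skipping pickup: no grasps available')
```

A stand-in like this is only useful for exercising the control flow; the real poses still come from the grasp generator server.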

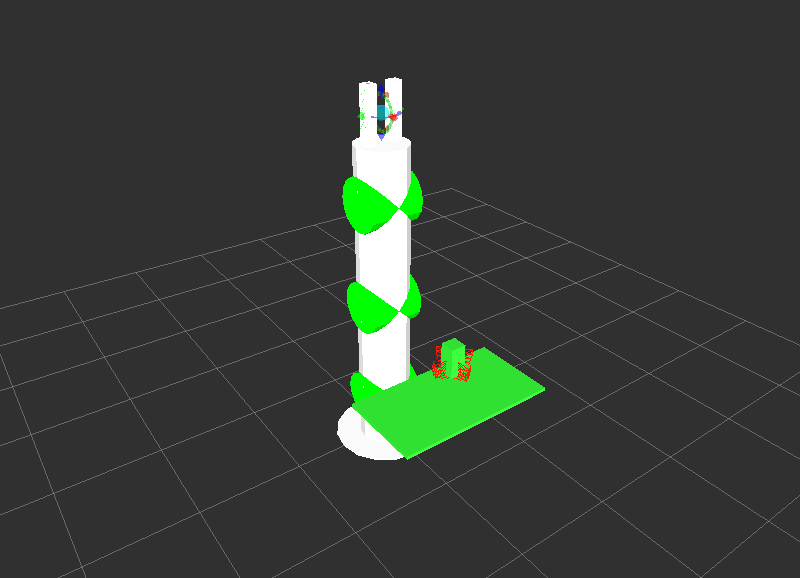

The grasp poses provided by the grasp generation server are also published as a PoseArray message by the _publish_grasps method for visualization and debugging purposes. We can see them in RViz by running the whole pick and place task as before:

$ roslaunch rosbook_arm_gazebo rosbook_arm_grasping_world.launch

$ roslaunch rosbook_arm_moveit_config moveit_rviz.launch config:=true

$ roslaunch rosbook_arm_pick_and_place grasp_generator_server.launch

$ rosrun rosbook_arm_pick_and_place pick_and_place.py

A few seconds after running the pick_and_place.py program, we will see multiple arrows on the target object; these correspond to the grasp poses that will be tried in order to pick it up. This is shown in the following figure: