The next step consists of working with stereo cameras. One option is to run two monocular camera nodes, but in general, it is better to treat the whole stereo pair as a single sensor because the images must be synchronized. ROS does not provide a driver for FireWire stereo cameras, but there is a stereo extension of the FireWire camera driver, which we can obtain with the following command:

$ git clone https://github.com/srv/camera1394stereo.git

However, FireWire stereo pairs are quite expensive. For this reason, we provide a stereo camera driver for USB cameras. We use the Logitech C120 USB webcam, which is very cheap. It is also noisy, but we will see that we can do great things with it after calibration. It is important that in the stereo pair, the cameras are similar, but you can try with different cameras as well. Our setup for the cameras is shown in the images. You only need the two cameras on the same plane, pointing in parallel directions.

We have a baseline of approximately 12 cm, which will also be computed in the stereo calibration process. As you can see in the following screenshot, you only need a rod to attach the cameras to, with zip ties:

Now, connect the cameras to your USB ports. It is good practice to connect the left-hand side camera first and then the right-hand side one. This way, they are assigned to the /dev/video0 and /dev/video1 devices, or to /dev/video1 and /dev/video2 if /dev/video0 was already taken. Alternatively, you can create a udev rule so that each camera always gets the same device name.
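To check which device nodes the cameras were actually assigned, a small script can list them in numeric order. The following is only a sketch: the helper name sort_video_devices is ours, and it assumes the cameras show up as /dev/videoN nodes, as described above.

```python
import glob
import re


def sort_video_devices(paths):
    """Sort device paths such as /dev/video10 by their numeric suffix."""
    def index(path):
        match = re.search(r"(\d+)$", path)
        # Paths without a numeric suffix are placed first.
        return int(match.group(1)) if match else -1
    return sorted(paths, key=index)


if __name__ == "__main__":
    # List whatever video devices are currently present on this machine.
    for device in sort_video_devices(glob.glob("/dev/video*")):
        print(device)
```

Running it before and after plugging in each camera shows which node belongs to the left-hand side camera and which to the right-hand side one.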

Then, you can test each camera individually, as we would for a single camera. Some tools you will find useful are the video4linux control panels for cameras:

$ sudo apt-get install v4l-utils qv4l2

When you run both cameras of the stereo pair at the same time, you might see the following error:

libv4l2: error turning on stream: No space left on device

This happens when both cameras are connected to the same USB controller. Several USB ports are often managed by a single controller, which cannot deal with the bandwidth of more than one camera, so each camera must be connected to a different USB controller. If you only have one USB controller, there are other options you can try. First, try to use a compressed pixel format, such as MJPEG, in both cameras. You can check whether or not it is supported by your camera by using the following command:

$ v4l2-ctl -d /dev/video2 --list-formats

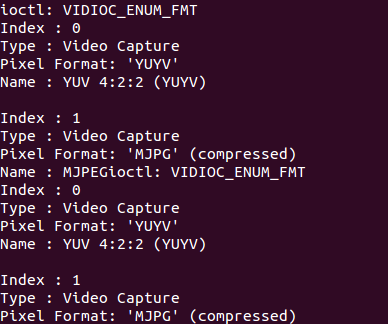

The command will generate something similar to the following output:

If MJPEG is supported, we can use more than one camera on the same USB controller; otherwise, with uncompressed pixel formats, we must use different USB controllers or reduce the resolution to 320 x 240 or lower. The qv4l2 GUI lets you check the same information and test your camera. You can also check whether it is possible to set the desired pixel format. In our case, setting the pixel format with the OpenCV set method does not work for our USB cameras, so we use a USB port managed by a different USB controller.
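A rough calculation shows why the resolution matters for uncompressed formats. The sketch below estimates the raw data rate of a stream; it assumes 2 bytes per pixel (as in YUYV) and 30 frames per second, neither of which is taken from our setup, and the function name is ours. The bandwidth a camera actually reserves on the bus can differ from this figure, so treat it as an order-of-magnitude guide only.

```python
def raw_stream_mbits(width, height, fps, bytes_per_pixel=2):
    """Return the raw data rate of one uncompressed stream in Mbit/s."""
    return width * height * bytes_per_pixel * fps * 8 / 1e6


if __name__ == "__main__":
    # Assumed values: 30 fps and 2 bytes per pixel (YUYV-like format).
    for width, height in [(640, 480), (320, 240)]:
        one = raw_stream_mbits(width, height, fps=30)
        print(f"{width}x{height}: {one:.1f} Mbit/s per camera, "
              f"{2 * one:.1f} Mbit/s for the stereo pair")
```

Under these assumptions, halving the width and height cuts the raw rate by a factor of four, which is why a lower resolution can let two cameras share one controller.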

The USB stereo camera driver that comes with this section is based on the USB camera driver discussed so far. Basically, it extends that driver with two camera publishers, which send the left-hand side and right-hand side images together with the camera information. You can run it and view the images by using the following command:

$ roslaunch chapter5_tutorials camera_stereo.launch view:=true

It also shows the disparity image computed from the left-hand side and right-hand side images. This becomes useful once the cameras are calibrated, because the ROS image pipeline uses it. In order to calibrate the cameras, use the following command:

$ roslaunch chapter5_tutorials camera_stereo.launch calibrate:=true

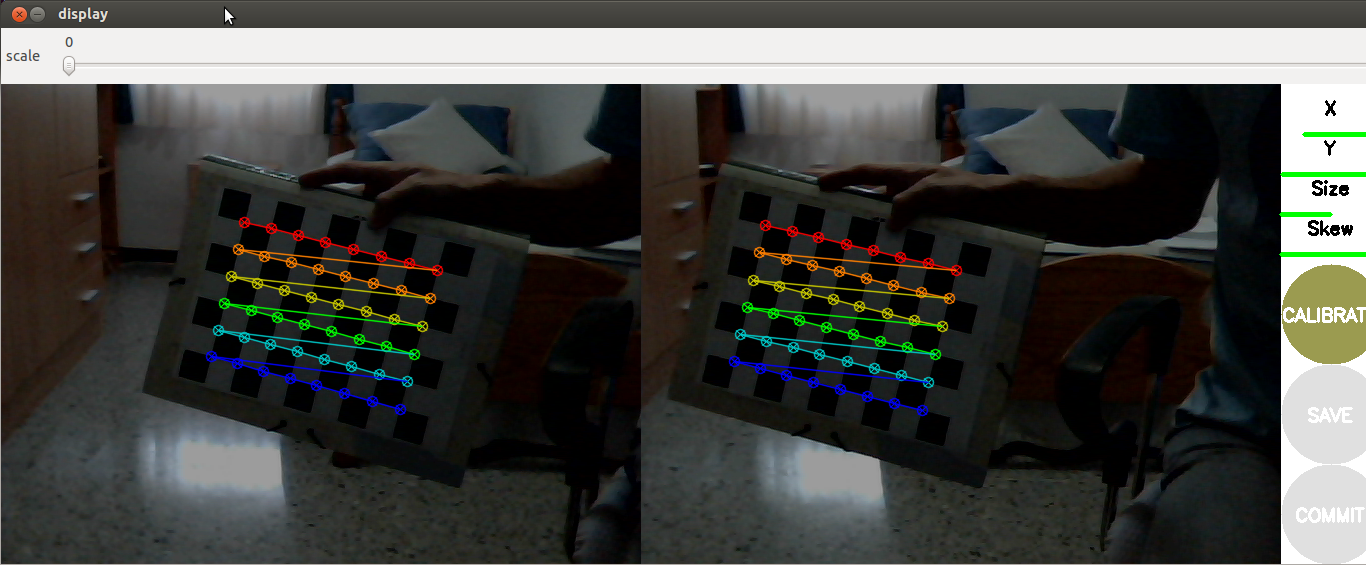

You will see a GUI for monocular cameras similar to the following screenshot:

At the time the preceding image was taken, we had shown enough views to start the calibration. Note that the calibration pattern must be detected by both cameras simultaneously to be included in the calibration optimization step. Depending on the setup, this may be quite tricky, so you should put the pattern at an appropriate distance from the camera. You will see the setup used for the calibration of this section in the following image:

The calibration is done by the same cameracalibrator.py node used for monocular cameras. We pass the left-hand side and right-hand side cameras and images, so the tool knows that we are going to perform stereo calibration. The following is the node in the launch file:

<node ns="$(arg camera)" name="cameracalibrator"
      pkg="camera_calibration" type="cameracalibrator.py"
      args="--size 8x6 --square 0.030" output="screen">
  <remap from="left" to="left/image_raw"/>
  <remap from="right" to="right/image_raw"/>
  <remap from="left_camera" to="left"/>
  <remap from="right_camera" to="right"/>
</node>

The result of the calibration is the same as for monocular cameras, but in this case, we have two calibration files, one for each camera. The parameters file in config/camera_stereo/logitech_c120.yaml points to them as follows:

camera_info_url_left: package://chapter5_tutorials/calibration/camera_stereo/${NAME}.yaml
camera_info_url_right: package://chapter5_tutorials/calibration/camera_stereo/${NAME}.yaml

${NAME} is the name of the camera, which resolves to logitech_c120_left and logitech_c120_right for the left-hand side and right-hand side cameras, respectively. After you commit the calibration, those files are updated with the calibration of each camera. They contain the calibration matrix, the distortion model coefficients, and the rectification and projection matrices; the projection matrix encodes the baseline, that is, the separation between the two cameras along the x axis of the image plane. The parameters file also sets values for the camera properties, tuned for indoor environments with artificial light. The camera model used applies some automatic correction, so the images may occasionally look bad, but these values seem to work well in most cases.
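The baseline can be read back from the projection matrix of the right-hand side camera. In the ROS camera model, the fourth entry of the first row is Tx = -fx * B, where fx is the focal length in pixels and B is the baseline, so B = -Tx / fx. The following sketch applies that relation; the function name and the matrix values are made up for illustration and do not come from our calibration files.

```python
def baseline_from_projection(p_right):
    """Return the baseline in meters.

    p_right holds the 12 row-major entries of the right camera's
    3x4 projection matrix, as stored in the calibration file.
    """
    fx = p_right[0]  # focal length in pixels
    tx = p_right[3]  # Tx = -fx * baseline for the right camera
    return -tx / fx


if __name__ == "__main__":
    # Hypothetical projection matrix, NOT taken from a real calibration.
    p_right = [700.0, 0.0, 320.0, -84.0,
               0.0, 700.0, 240.0, 0.0,
               0.0, 0.0, 1.0, 0.0]
    print(f"baseline: {baseline_from_projection(p_right):.3f} m")
```

With these example numbers the result is 0.12 m, in line with a baseline of roughly 12 cm. For the left-hand side camera, Tx is zero, so the same computation gives no information about the baseline.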