We can do pick and place in various ways. One is to use predefined sequences of joint values; in this case, we put the object in a predefined position and move the robot to that position by providing joint values directly (forward kinematics). Another method is to use inverse kinematics (IK) without any visual feedback; in this case, we command the robot to go to an X, Y, and Z position with respect to the robot, and by solving IK, the robot can reach that position and pick up the object. A third method is vision-assisted pick and place; in this case, a vision sensor identifies the object's position, and the arm goes to that location by solving IK and picks the object.
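To make the difference between forward and inverse kinematics concrete, here is a minimal sketch for a planar two-link arm. It is not the seven-DOF arm used in this chapter, and the function names and link lengths are assumptions chosen for illustration; MoveIt! uses its own IK solvers for the real robot.

```python
import math


def forward_kinematics(theta1, theta2, l1=1.0, l2=1.0):
    """Return the (x, y) tip position of a planar two-link arm.

    theta1 and theta2 are joint angles in radians; l1 and l2 are
    hypothetical link lengths.
    """
    x = l1 * math.cos(theta1) + l2 * math.cos(theta1 + theta2)
    y = l1 * math.sin(theta1) + l2 * math.sin(theta1 + theta2)
    return x, y


def inverse_kinematics(x, y, l1=1.0, l2=1.0):
    """Return one (theta1, theta2) solution reaching (x, y), or None.

    None means the target lies outside the reachable workspace,
    which corresponds to "no feasible IK" for that position.
    """
    cos_theta2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if abs(cos_theta2) > 1.0:
        return None  # target too far away or too close to the base
    theta2 = math.acos(cos_theta2)
    theta1 = math.atan2(y, x) - math.atan2(
        l2 * math.sin(theta2), l1 + l2 * math.cos(theta2)
    )
    return theta1, theta2


if __name__ == "__main__":
    joints = inverse_kinematics(1.2, 0.5)
    print("joint angles:", joints)
    print("tip position:", forward_kinematics(*joints))
```

Feeding the IK result back into the forward kinematics should reproduce the commanded position, which is a quick way to check a solver of this kind.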

In this section, we will demonstrate a pick and place in which we supply the position of the object to be grasped, and the robot moves to that coordinate and picks the object. This can be combined with vision, provided that the object position seen by the sensor is expressed in the robot coordinate system. Here, we do not perform object recognition or estimate the object's position; instead, we give the object position directly.
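As a rough illustration of what "expressed in the robot coordinate system" means, the sketch below converts a point measured in a camera frame into the robot base frame. It assumes the camera differs from the robot frame only by a rotation about the Z axis and a translation; the function name and the mounting values are hypothetical. In a ROS system, this conversion is normally handled by the tf library rather than by hand.

```python
import math


def camera_to_robot(point_cam, cam_position, cam_yaw):
    """Express a camera-frame point in the robot base frame.

    point_cam    -- (x, y, z) of the object in the camera frame
    cam_position -- (x, y, z) of the camera origin in the robot frame
    cam_yaw      -- rotation of the camera about the robot Z axis, in radians
    All three are illustrative assumptions, not values from the robot model.
    """
    xc, yc, zc = point_cam
    tx, ty, tz = cam_position
    c, s = math.cos(cam_yaw), math.sin(cam_yaw)
    # Rotate about Z, then translate by the camera position.
    return (c * xc - s * yc + tx, s * xc + c * yc + ty, zc + tz)


if __name__ == "__main__":
    object_in_robot = camera_to_robot(
        point_cam=(1.0, 0.0, 0.0),
        cam_position=(0.5, 0.0, 0.2),
        cam_yaw=math.pi / 2,
    )
    print(object_in_robot)
```

The resulting coordinates are what would be handed to the pick and place routine as the grasp object position.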

We can run this example with Gazebo or simply use the MoveIt! demo interface. First, we will look at a direct pick and place mechanism in which we give the grasp object position to MoveIt! using the Python grasp client.

Launch MoveIt! demo:

$ roslaunch seven_dof_config demo.launch

Launch MoveIt! Grasp server:

$ roslaunch seven_dof_arm_gazebo grasp_generator_server.launch

Run the Grasp client:

$ rosrun seven_dof_arm_gazebo pick_and_place.py

This will do a basic pick and place routine with a grasp object inserted in the planning scene.

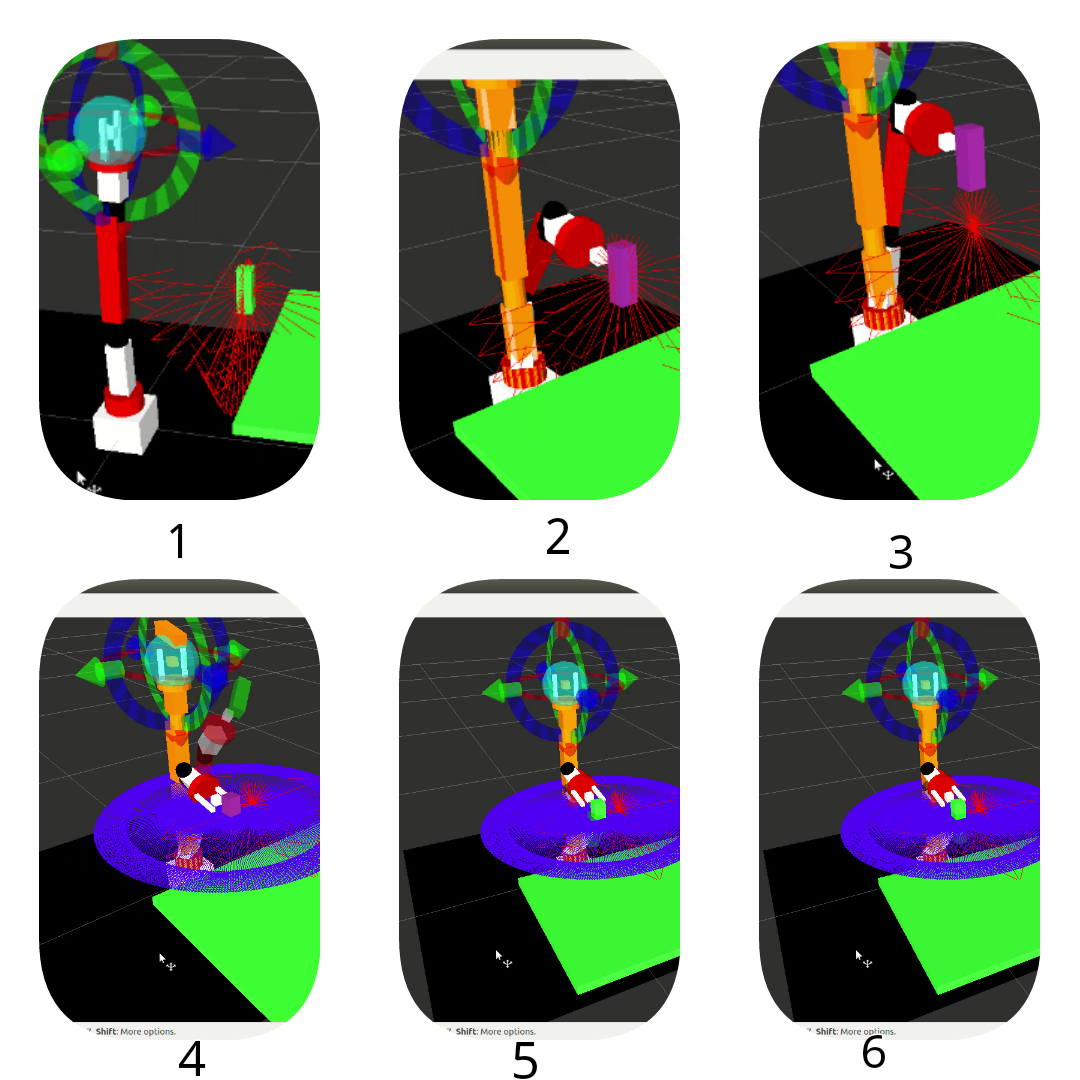

Following is the screenshot of the grasping process:

The various steps in the grasping process are explained next:

- Step 1 - Grasp Pose: In the first step, we can see a green block, which is the object that is going to be grasped by the robot gripper. We created this object inside the planning scene using the pick_and_place.py node, which passes the block position to the grasp server. When the pick and place starts, we can see a Pose Array published on the /grasp topic, indicating the grasp object position.

- Step 2 - Pick Action: After getting the grasp object position, the grasp client sends this position to the grasp server, which generates IK solutions and checks whether a feasible one exists for this object position. If a valid IK solution is found, the arm moves the gripper in to pick the object.

- Steps 3, 4, 5, and 6 - Place Action: After picking the block, the grasp server checks for a valid IK solution at the place pose. If one exists, the gripper carries the object along a trajectory and places it at the target position. The place Pose Array, published on the /place topic, is shown in blue.
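The decision logic in the steps above can be sketched in plain Python as follows. This is only a simplified model of the flow, not the actual grasp client or server: the function names, the stage labels, and the reach-based check that stands in for a real IK query are all assumptions made for illustration.

```python
import math


def has_ik_solution(position, max_reach=0.8):
    """Stand-in for an IK query (hypothetical).

    Accepts any point within max_reach metres of the robot base;
    a real system would ask the IK solver instead.
    """
    return math.sqrt(sum(c * c for c in position)) <= max_reach


def pick_and_place(pick_position, place_position, ik_check=has_ik_solution):
    """Return the ordered list of stages that would run."""
    stages = ["grasp_pose"]  # Step 1: the grasp pose is known
    if not ik_check(pick_position):
        return stages + ["pick_aborted"]  # no feasible IK at the object
    stages.append("pick")  # Step 2: the gripper picks the object
    if not ik_check(place_position):
        return stages + ["place_aborted"]  # no feasible IK at the place pose
    stages.append("place")  # Steps 3 to 6: carry and place the object
    return stages


if __name__ == "__main__":
    print(pick_and_place((0.4, 0.0, 0.3), (0.0, 0.4, 0.3)))
    print(pick_and_place((2.0, 0.0, 0.0), (0.0, 0.4, 0.3)))
```

Passing the IK check in as a parameter keeps the flow independent of any particular solver, so the same structure applies whether the check is a simple reach test or a full IK request.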

We can now look at the pick_and_place.py code, which is a modified version of the sample code in the following Git repository: https://github.com/AaronMR/Learning_ROS_for_Robotics_Programming_2nd_edition.git